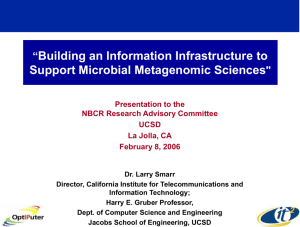

advertisement

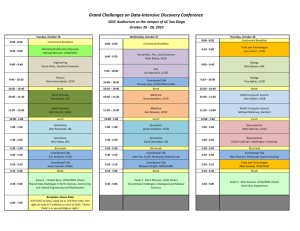

The OptIPuter – From SuperComputers to SuperNetworks
GEON Meeting, San Diego Supercomputer Center, UCSD, La Jolla, CA, November 19, 2002
Dr. Larry Smarr
Director, California Institute for Telecommunications and Information Technology
Professor, Dept. of Computer Science and Engineering, Jacobs School of Engineering, UCSD

California Has Initiated Four New Institutes for Science and Innovation
• California Institute for Bioengineering, Biotechnology, and Quantitative Biomedical Research
• Center for Information Technology Research in the Interest of Society
• California NanoSystems Institute
• California Institute for Telecommunications and Information Technology
Campuses shown on the map: UCD, UCSF, UCM, UCB, UCSC, UCSB, UCLA, UCI, UCSD
www.ucop.edu/california-institutes

Cal-(IT)2: An Integrated Approach to the Future Internet
• 220 UC San Diego & UC Irvine Faculty Working in Multidisciplinary Teams with Students, Industry, and the Community
• The State's $100M Creates Unique Buildings, Equipment, and Laboratories
www.calit2.net

SDSC and Cal-(IT)2 Have the Same Knowledge and Data Team by Construction!
[Diagram: shared stack of the SDSC Data and Knowledge Systems Program and the Cal-(IT)2 Knowledge and Data Engineering Laboratory. Layers: Applications (Medical Informatics, Biosciences, Ecoinformatics, …); Visualization; Data Mining, Simulation Modeling, Analysis, Data Fusion; Knowledge-Based Integration; Advanced Query Processing; Grid Storage; Filesystems, Database Systems; High-Speed Networking; Networked Storage (SAN); Sensornets; Storage Hardware; Instruments.]

The Move to Data-Intensive Science & Engineering: e-Science Community Resources
ALMA, LHC, Sloan Digital Sky Survey, ATLAS

Why Optical Networks Are Emerging as the 21st Century Driver for the Grid
Scientific American, January 2001

The Rapid Increase in Bandwidth Is Driven by Parallel Lambdas on Single Optical Fibers (WDM)
c = λf
Parallel Lambdas Will Drive This Decade the Way Parallel Processors Drove the 1990s

A LambdaGrid Will Be the Backbone for an e-Science Network
[Diagram: Apps, Middleware, Clusters, Dynamically Allocated Lightpaths, Switch Fabrics, and Physical Monitoring, spanned by a Control Plane]
Source: Joe Mambretti, NU

The Next S-Curves of Networking: Exponential Technology Growth
[Figure: networking technology S-curves, technology penetration (0% to 100%) versus time (~1990s, 2000, 2010). Adoption stages: Research, Experimental/Early Adopters, Production/Mass Market. Labeled technologies: Gigabit Testbeds, Connections Program, Internet2 Abilene, DWDM, Experimental Networks, Lambda Grids.]

Data-Intensive Scientific Applications Require Experimental Optical Networks
• Large Data Challenges in Neuro and Earth Sciences
  – Each Data Object Is 3D and Gigabytes in Size
  – Data Are Generated and Stored in Distributed Archives
  – Research Is Carried Out on Federated Repositories
• Requirements
  – Computing: PC Clusters
  – Communications: Dedicated Lambdas over Fiber
  – Data: Large Peer-to-Peer Lambda-Attached Storage
  – Visualization: Collaborative Volume Algorithms
• Response: OptIPuter Research Project

Illinois' I-WIRE: The First State Dark Fiber Experimental Network
[Map: I-WIRE dark fiber routes (2 to 18 fiber pairs per segment) linking Starlight (NU-Chicago), Argonne, Qwest (455 N. Cityfront), UC Gleacher (450 N. Cityfront), UIC, McLeodUSA, 151/155 N. Michigan, Doral Plaza, Level(3) (111 N. Canal), Illinois Century Network, James R. Thompson Ctr, City Hall, State of IL Bldg, UChicago, IIT, and UIUC/NCSA.]
Source: Charlie Catlett, 12/2001

CENIC Plans to Create a Dark Fiber Experimental and Research Network: The SoCal Component

From Telephone Conference Calls to Access Grid International Video Meetings
Can We Modify This Technology to Create a GEON Research Virtual Laboratory?
Access Grid Lead: Argonne; NSF STARTAP Lead: UIC's Elec. Vis. Lab

iGrid 2002, September 24-26, 2002, Amsterdam, The Netherlands
• Fifteen Countries/Locations Proposing 28 Demonstrations: Canada, CERN, France, Germany, Greece, Italy, Japan, The Netherlands, Singapore, Spain, Sweden, Taiwan, United Kingdom, United States
• Applications Demonstrated: Art, Bioinformatics, Chemistry, Cosmology, Cultural Heritage, Education, High-Definition Media Streaming, Manufacturing, Medicine, Neuroscience, Physics, Tele-science
• Grid Technologies: Grid Middleware, Data Management/Replication Grids, Visualization Grids, Computational Grids, Access Grids, Grid Portal
Sponsors: HP, IBM, Cisco, Philips, Level(3), Glimmerglass, etc.
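The WDM slide relates each lambda's wavelength to its carrier frequency through c = λf and attributes bandwidth growth to running many lambdas in parallel on one fiber. The Python sketch below illustrates both ideas under stated assumptions: the 1550 nm wavelength, the 10 Gb/s per-lambda rate, and the lambda counts are illustrative values, not figures from the talk, and the sketch ignores channel spacing, modulation, and amplifier limits that bound real DWDM systems.

```python
"""Illustrative WDM arithmetic; wavelength, rates, and lambda counts are assumptions."""

SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact by SI definition


def carrier_frequency_thz(wavelength_nm: float) -> float:
    """Solve c = lambda * f for f and return it in terahertz."""
    wavelength_m = wavelength_nm * 1e-9
    return SPEED_OF_LIGHT_M_PER_S / wavelength_m / 1e12


def fiber_capacity_gbps(num_lambdas: int, gbps_per_lambda: float) -> float:
    """Aggregate capacity when each lambda carries an independent channel."""
    return num_lambdas * gbps_per_lambda


if __name__ == "__main__":
    # 1550 nm is a common telecom wavelength, assumed here for illustration.
    print(f"1550 nm -> {carrier_frequency_thz(1550):.1f} THz")
    # Adding lambdas on the same fiber scales capacity linearly.
    for n in (1, 4, 16):
        print(f"{n:>2} x 10 Gb/s lambdas = {fiber_capacity_gbps(n, 10):.0f} Gb/s")
```

Running it prints a carrier frequency of about 193.4 THz, and the capacity grows linearly with the number of lambdas, which mirrors the slide's analogy between parallel lambdas and the parallel processors of the 1990s.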
iGrid 2002 (UIC): www.startap.net/igrid2002

iGrid 2002 Was Sustaining 1-3 Gigabits/s
Total Available Bandwidth Between Chicago and Amsterdam Was 30 Gigabits/s

The NSF TeraGrid: A LambdaGrid of Linux SuperClusters
This Will Become the National Backbone to Support Multiple Large-Scale Science and Engineering Projects
• Caltech (Applications): 0.5 TF, 0.4 TB Memory, 86 TB Disk
• SDSC (Data): 4.1 TF, 2 TB Memory, 250 TB Disk
• Argonne (Visualization): 1 TF, 0.25 TB Memory, 25 TB Disk
• NCSA (Compute): 8 TF, 4 TB Memory, 240 TB Disk
• TeraGrid Backbone: 40 Gbps
• $53 Million from NSF; Industry: Intel, IBM, Qwest, Myricom, Sun, Oracle

From SuperComputers to SuperNetworks: Changing the Grid Design Point
• The TeraGrid Is Optimized for Computing
  – 1024-Node IA-64 Linux Cluster
  – Assume 1 GigE per Node = 1 Terabit/s I/O
  – Grid Optical Connection: 4 x 10 Gig Lambdas = 40 Gigabit/s
  – Optical Connections Are Only 4% of Bisection Bandwidth
• The OptIPuter Is Optimized for Bandwidth
  – 32-Node IA-64 Linux Cluster
  – Assume 1 GigE per Processor = 32 Gigabit/s I/O
  – Grid Optical Connection: 4 x 10 GigE = 40 Gigabit/s
  – Optical Connections Are Over 100% of Bisection Bandwidth

The OptIPuter is an Experimental Network Research Project
• Driven by Large Neuroscience and Earth Science Data
  – EarthScope and BIRN
• Multiple Lambdas Linking Clusters and Storage
  – LambdaGrid Software Stack
  – Integration with PC Clusters
  – Interactive Collaborative Volume Visualization
  – Lambda Peer-to-Peer Storage with Optimized Storewidth
  – Enhance Security Mechanisms
  – Rethink TCP/IP Protocols
• NSF Large Information Technology Research Proposal
  – UCSD and UIC Lead Campuses (Larry Smarr, PI)
  – USC, UCI, SDSU, NW Partnering Campuses
  – Industrial Partners: IBM, Telcordia/SAIC, Chiaro Networks, CENIC
• $13.5 Million Over Five Years

Metro Optically Linked Visualization Walls with Industrial Partners Set Stage for Federal Grant
• Driven by SensorNets Data
  – Real-Time Seismic
  – Environmental Monitoring
  – Distributed Collaboration
  – Emergency Response
• Linked UCSD and SDSU
  – Dedication March 4, 2002
Linking Control Rooms at UCSD and SDSU over 44 Miles of Cox Fiber
Partners: Cox, Panoram, SAIC, SGI, IBM, TeraBurst Networks, SD Telecom Council

OptIPuter NSF Proposal Partnered with National Experts and Infrastructure
[Map: Asia Pacific, Vancouver, Seattle, Portland, CA*net4, Pacific Light Rail, Chicago (UIC, NU), San Francisco, SURFnet, CERN, PSC, NYC, NCSA, Los Angeles (USC), UCI, San Diego (UCSD, SDSU, SDSC), Atlanta, AMPATH]
Source: Tom DeFanti and Maxine Brown, UIC

OptIPuter LambdaGrid Enabled by Chiaro Networking Router
[Diagram: a Chiaro Enstara router links switches at Medical Imaging and Microscopy; Chemistry, Engineering, Arts; San Diego Supercomputer Center; and Scripps Institution of Oceanography. Traffic types: Cluster – Disk, Disk – Disk, Viz – Disk, DB – Cluster, Cluster – Cluster.]
www.calit2.net/news/2002/11-18-chiaro.html

The UCSD OptIPuter Deployment
[Map: UCSD LambdaGrid testbed, Phase I (Fall 02) and Phase II (2003), with a collocation point and a link to other OptIPuter sites. Sites: SDSC, SDSC Annex, JSOE (Engineering), CRCA (Arts), SOM (Medicine), Chemistry, Phys. Sci, Keck, Preuss High School, 6th College, Undergrad College, Node M, SIO (Earth Sciences). Scale: ½ mile.]

OptIPuter Transforms Individual Laboratory Visualization, Computation, & Analysis Facilities
Fast Polygon and Volume Rendering with Stereographics + GeoWall = 3D
Applications:
• Earth Science: GeoFusion GeoMatrix Toolkit (Rob Mellors and Eric Frost, SDSU)
• Underground Earth Science: SDSC Volume Explorer
• Anatomy: Visible Human Project (NLM, Brooks AFB), SDSC Volume Explorer
• Neuroscience: Dave Nadeau, SDSC, BIRN; SDSC Volume Explorer

The Preuss School UCSD OptIPuter Facility
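The "Changing the Grid Design Point" comparison in the talk reduces to one ratio: external optical capacity divided by aggregate node I/O, which the slide calls the share of bisection bandwidth. The Python sketch below reproduces that arithmetic from the slide's own figures (1 GigE per node, 4 x 10 Gb/s lambdas). The function name `optical_share_of_io` is hypothetical, and treating this ratio as "bisection bandwidth" follows the slide's usage rather than a formal network-topology definition.

```python
"""Reproduce the TeraGrid vs. OptIPuter design-point arithmetic from the talk."""


def optical_share_of_io(nodes: int, gbps_per_node: float,
                        num_lambdas: int, gbps_per_lambda: float) -> float:
    """Return external optical capacity as a fraction of aggregate node I/O.

    The slide labels this fraction "bisection bandwidth"; here it is simply
    (lambdas * rate per lambda) / (nodes * rate per node).
    """
    aggregate_node_io = nodes * gbps_per_node          # Gb/s leaving all nodes
    optical_capacity = num_lambdas * gbps_per_lambda   # Gb/s on the lightpaths
    return optical_capacity / aggregate_node_io


teragrid = optical_share_of_io(nodes=1024, gbps_per_node=1.0,
                               num_lambdas=4, gbps_per_lambda=10.0)
optiputer = optical_share_of_io(nodes=32, gbps_per_node=1.0,
                                num_lambdas=4, gbps_per_lambda=10.0)

print(f"TeraGrid:  {teragrid:.1%} of node I/O")   # 40 / 1024
print(f"OptIPuter: {optiputer:.0%} of node I/O")  # 40 / 32
```

This prints 3.9% for the TeraGrid (the slide rounds to 4%) and 125% for the OptIPuter (the slide's "over 100%"). Keeping the same four lambdas while shrinking the cluster from 1024 to 32 nodes is what moves the design point from computing toward bandwidth.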