Document

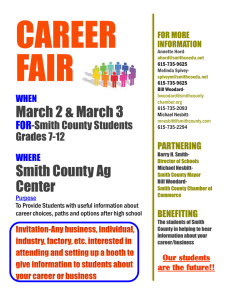

advertisement

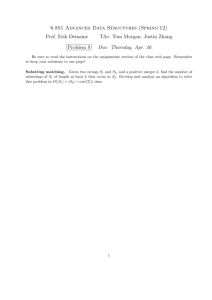

Algorithm Design and Analysis

LECTURES 10-11

Divide and Conquer

• Recurrences

• Nearest pair

Adam Smith

9/10/10

A. Smith; based on slides by E. Demaine, C. Leiserson, S. Raskhodnikova, K. Wayne

Divide and Conquer

– Break up problem into several parts.

– Solve each part recursively.

– Combine solutions to sub-problems into overall solution.

•Most common usage.

– Break up problem of size n into two equal parts of size n/2.

– Solve two parts recursively.

– Combine two solutions into overall solution in linear time.

•Consequence.

– Brute force: $\Theta(n^2)$.

– Divide-and-conquer: $\Theta(n \log n)$.

9/19/2008

Divide et impera.

Veni, vidi, vici.

- Julius Caesar

S. Raskhodnikova; based on slides by E. Demaine, C. Leiserson, A. Smith, K. Wayne

Mergesort

– Divide array into two halves.

– Recursively sort each half.

– Merge two halves to make sorted whole.

John von Neumann (1945)

Example on the input A L G O R I T H M S:

– Divide, O(1): A L G O R | I T H M S

– Sort, 2T(n/2): A G L O R | H I M S T

– Merge, O(n): A G H I L M O R S T

Merging

•Combine two pre-sorted lists into a sorted whole.

•How to merge efficiently?

– Linear number of comparisons.

– Use temporary array.

Example: merging the sorted halves A G L O R and H I M S T through a temporary array; the output so far is A G H I.

•Challenge for the bored: in-place merge [Kronrod, 1969], using only a constant amount of extra storage.
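The divide, sort, and merge steps can be sketched in Python as follows. This is a minimal illustration, not the slides' own code: the names `merge` and `mergesort` are chosen here, and list slicing makes the divide step cost O(n) rather than O(1), which does not change the $\Theta(n \log n)$ total.

```python
def merge(a, b):
    """Merge two sorted lists into one sorted list using a temporary list."""
    out = []
    i = j = 0
    while i < len(a) and j < len(b):
        # One comparison per output element, so the merge is linear.
        # Using <= keeps equal elements in their original order (stable).
        if a[i] <= b[j]:
            out.append(a[i])
            i += 1
        else:
            out.append(b[j])
            j += 1
    # At most one of the two inputs still has elements left.
    out.extend(a[i:])
    out.extend(b[j:])
    return out


def mergesort(xs):
    """Return a sorted copy of xs."""
    if len(xs) <= 1:
        return list(xs)
    mid = len(xs) // 2
    # Recursively sort each half, then merge the two sorted halves.
    return merge(mergesort(xs[:mid]), mergesort(xs[mid:]))
```

For example, `mergesort(list("ALGORITHMS"))` reproduces the letter sequence of the slide example.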

Recurrence for Mergesort

$$T(n) = \begin{cases} \Theta(1) & \text{if } n = 1; \\ 2T(n/2) + \Theta(n) & \text{if } n > 1. \end{cases}$$

•$T(n)$ = worst-case running time of Mergesort on an input of size $n$.

•Should be $T(\lceil n/2 \rceil) + T(\lfloor n/2 \rfloor)$, but it turns out not to matter asymptotically.

•Usually omit the base case because our algorithms always run in time $\Theta(1)$ when $n$ is a small constant.

• Several methods to find an upper bound on $T(n)$.

Recursion Tree Method

• Technique for guessing solutions to recurrences

– Write out tree of recursive calls

– Each node gets assigned the work done during that

call to the procedure (dividing and combining)

– Total work is sum of work at all nodes

• After guessing the answer, can prove by

induction that it works.


Recursion Tree for Mergesort

Solve $T(n) = 2T(n/2) + cn$, where $c > 0$ is constant.

[Figure: recursion tree of height $h = \lg n$. The root costs $cn$; its two children cost $cn/2$ each; the four nodes below them cost $cn/4$ each; in general, a node at depth $k$ stands for $T(n/2^k)$. The leaves are $T(1) = \Theta(1)$.]

– Each level of internal nodes sums to $cn$.

– #leaves = $n$, so the leaf level sums to $\Theta(n)$.

– Total = $\Theta(n \lg n)$.
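The level-by-level sum behind the total can be written out as a short derivation. It is a sketch that assumes $n$ is a power of 2, so that the tree has exactly $\lg n$ levels of internal nodes above the $n$ leaves.

```latex
\begin{align*}
T(n) &= \sum_{k=0}^{\lg n - 1} \underbrace{2^k}_{\text{nodes at depth } k}
        \cdot \underbrace{\frac{cn}{2^k}}_{\text{work per node}}
        \;+\; \underbrace{n \cdot \Theta(1)}_{\text{leaves}} \\
     &= \sum_{k=0}^{\lg n - 1} cn \;+\; \Theta(n) \\
     &= cn \lg n + \Theta(n) \\
     &= \Theta(n \lg n).
\end{align*}
```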

Counting Inversions

•Music site tries to match your song preferences with others.

– You rank n songs.

– Music site consults database to find people with similar tastes.

•Similarity metric: number of inversions between two rankings.

– My rank: 1, 2, …, n.

– Your rank: $a_1, a_2, \ldots, a_n$.

– Songs $i$ and $j$ inverted if $i < j$, but $a_i > a_j$.

| Songs | A | B | C | D | E |
|-------|---|---|---|---|---|
| Me    | 1 | 2 | 3 | 4 | 5 |
| You   | 1 | 3 | 4 | 2 | 5 |

Inversions: 3-2, 4-2

•Brute force: check all $\Theta(n^2)$ pairs $i$ and $j$.

Counting Inversions: Algorithm

•Divide-and-conquer

– Divide: separate list into two pieces. Cost: $\Theta(1)$.

– Conquer: recursively count inversions in each half. Cost: $2T(n/2)$.

– Combine: count inversions where $a_i$ and $a_j$ are in different halves, and return sum of three quantities. Cost: ???

Example: 1 5 4 8 10 2 | 6 9 12 11 3 7 (left half blue, right half green)

– 5 blue-blue inversions: 5-4, 5-2, 4-2, 8-2, 10-2

– 8 green-green inversions: 6-3, 9-3, 9-7, 12-3, 12-7, 12-11, 11-3, 11-7

– 9 blue-green inversions: 5-3, 4-3, 8-6, 8-3, 8-7, 10-6, 10-9, 10-3, 10-7

Total = 5 + 8 + 9 = 22.

Counting Inversions: Combine

Combine: count blue-green inversions

– Assume each half is sorted.

– Count inversions where $a_i$ and $a_j$ are in different halves.

– Merge two sorted halves into sorted whole (to maintain sorted invariant).

Example: 3 7 10 14 18 19 | 2 11 16 17 23 25

– Number of left-half elements larger than each right-half element 2, 11, 16, 17, 23, 25: 6, 3, 2, 2, 0, 0

– 13 blue-green inversions: 6 + 3 + 2 + 2 + 0 + 0

– Merged: 2 3 7 10 11 14 16 17 18 19 23 25

Count: $\Theta(n)$. Merge: $\Theta(n)$.

$T(n) = 2T(n/2) + \Theta(n)$. Solution: $T(n) = \Theta(n \log n)$.

Implementation

•Pre-condition. [Merge-and-Count] A and B are sorted.

•Post-condition. [Sort-and-Count] L is sorted.

Sort-and-Count(L) {

if list L has one element

return 0 and the list L

Divide the list into two halves A and B

(rA, A) ← Sort-and-Count(A)

(rB, B) ← Sort-and-Count(B)

(r, L) ← Merge-and-Count(A, B)

return rA + rB + r and the sorted list L

}
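A runnable Python sketch of Sort-and-Count and Merge-and-Count follows. The function names mirror the pseudocode, but the details are assumptions made for illustration: lists are copied by slicing, ties are not counted as inversions, and an empty list is treated like a one-element list.

```python
def merge_and_count(a, b):
    """Merge sorted lists a and b; also count pairs (x in a, y in b) with x > y."""
    merged = []
    inversions = 0
    i = j = 0
    while i < len(a) and j < len(b):
        if a[i] <= b[j]:
            merged.append(a[i])
            i += 1
        else:
            # b[j] is smaller than every remaining element of a,
            # so it forms an inversion with each of them.
            inversions += len(a) - i
            merged.append(b[j])
            j += 1
    merged.extend(a[i:])
    merged.extend(b[j:])
    return inversions, merged


def sort_and_count(xs):
    """Return (number of inversions in xs, sorted copy of xs)."""
    if len(xs) <= 1:
        return 0, list(xs)
    mid = len(xs) // 2
    ra, a = sort_and_count(xs[:mid])
    rb, b = sort_and_count(xs[mid:])
    r, merged = merge_and_count(a, b)
    return ra + rb + r, merged
```

On the 12-element example from the earlier slides, `sort_and_count([1, 5, 4, 8, 10, 2, 6, 9, 12, 11, 3, 7])` returns an inversion count of 22, matching 5 + 8 + 9.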

Binary search

Find an element in a sorted array:

1. Divide: Check middle element.

2. Conquer: Recursively search 1 subarray.

3. Combine: Trivial.

Example: Find 9 in the sorted array 3 5 7 8 9 12 15.

Binary Search

BINARYSEARCH(b, A[1 . . n]) ▷ find b in sorted array A

1. If $n = 0$ then return “not found”

2. If $A[\lceil n/2 \rceil] = b$ then return $\lceil n/2 \rceil$

3. If $A[\lceil n/2 \rceil] > b$ then

4. return BINARYSEARCH(b, A[1 . . $\lceil n/2 \rceil - 1$])

5. Else

6. return $\lceil n/2 \rceil$ + BINARYSEARCH(b, A[$\lceil n/2 \rceil + 1$ . . n])
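The same search can be sketched in Python. This version departs from the pseudocode in a few labeled ways: it uses 0-based indices, passes the bounds `lo` and `hi` (hypothetical parameter names) instead of slicing the array, and returns `None` rather than “not found”.

```python
def binary_search(a, b, lo=0, hi=None):
    """Return an index i with a[i] == b in the sorted list a, or None.

    Searches the half-open range a[lo:hi]; indices are 0-based.
    """
    if hi is None:
        hi = len(a)
    if lo >= hi:
        return None  # empty range: b is not present
    mid = (lo + hi) // 2
    if a[mid] == b:
        return mid
    if a[mid] > b:
        # b can only be in the left part, strictly before mid.
        return binary_search(a, b, lo, mid)
    # Otherwise b can only be in the right part, strictly after mid.
    return binary_search(a, b, mid + 1, hi)
```

Passing bounds instead of copying subarrays keeps the work per call constant, which is what the recurrence $T(n) = T(n/2) + \Theta(1)$ assumes.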

Recurrence for binary search

$$T(n) = 1 \cdot T(n/2) + \Theta(1)$$

– 1: # subproblems

– $n/2$: subproblem size

– $\Theta(1)$: work dividing and combining

$$T(n) = T(n/2) + c = T(n/4) + 2c = \cdots = c \lceil \lg n \rceil = \Theta(\lg n).$$

Exponentiation

Problem: Compute $a^b$, where $b \in \mathbb{N}$ is $n$ bits long.

Question: How many multiplications?

Naive algorithm: $\Theta(b) = \Theta(2^n)$ (exponential in the input length!)

Divide-and-conquer algorithm:

$$a^b = \begin{cases} a^{b/2} \times a^{b/2} & \text{if } b \text{ is even;} \\ a^{(b-1)/2} \times a^{(b-1)/2} \times a & \text{if } b \text{ is odd.} \end{cases}$$

$T(b) = T(b/2) + \Theta(1) \;\Rightarrow\; T(b) = \Theta(\log b) = \Theta(n)$.
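A minimal Python sketch of the divide-and-conquer rule is given below; the function name `power` is chosen here for illustration. The point to notice is that the half power is computed once and reused, which is what gives a single recursive call per level.

```python
def power(a, b):
    """Compute a**b for an integer b >= 0 using Theta(log b) multiplications."""
    if b == 0:
        return 1
    # One recursive call on floor(b/2); the result is reused, not recomputed.
    half = power(a, b // 2)
    if b % 2 == 0:
        return half * half
    return half * half * a
```

Calling `power(a, b // 2)` twice instead of reusing `half` would give the recurrence $T(b) = 2T(b/2) + \Theta(1)$, which is back to $\Theta(b)$ multiplications.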

So far: 2 recurrences

• Mergesort; Counting Inversions

$T(n) = 2T(n/2) + \Theta(n) = \Theta(n \log n)$

• Binary Search; Exponentiation

$T(n) = 1 \cdot T(n/2) + \Theta(1) = \Theta(\log n)$

Master Theorem: method for solving recurrences.

9/22/2008

A. Smith; based on slides by E. Demaine, C. Leiserson, S. Raskhodnikova.

The master method

The master method applies to recurrences of the form

$$T(n) = a\,T(n/b) + f(n),$$

where $a \ge 1$, $b > 1$, and $f$ is asymptotically positive, that is, $f(n) > 0$ for all $n > n_0$.

First step: compare $f(n)$ to $n^{\log_b a}$.

Three common cases

Compare $f(n)$ with $n^{\log_b a}$:

1. $f(n) = O(n^{\log_b a - \varepsilon})$ for some constant $\varepsilon > 0$.

• $f(n)$ grows polynomially slower than $n^{\log_b a}$ (by an $n^{\varepsilon}$ factor).

Solution: $T(n) = \Theta(n^{\log_b a})$.

2. $f(n) = \Theta(n^{\log_b a} \lg^k n)$ for some constant $k \ge 0$.

• $f(n)$ and $n^{\log_b a}$ grow at similar rates.

Solution: $T(n) = \Theta(n^{\log_b a} \lg^{k+1} n)$.

Three common cases (cont.)

Compare $f(n)$ with $n^{\log_b a}$:

3. $f(n) = \Omega(n^{\log_b a + \varepsilon})$ for some constant $\varepsilon > 0$.

• $f(n)$ grows polynomially faster than $n^{\log_b a}$ (by an $n^{\varepsilon}$ factor), and $f(n)$ satisfies the regularity condition that $a f(n/b) \le c f(n)$ for some constant $c < 1$.

Solution: $T(n) = \Theta(f(n))$.
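For driving functions of the restricted form $f(n) = \Theta(n^d \lg^k n)$ with $k \ge 0$, the three cases reduce to comparing $d$ with $\log_b a$. The Python sketch below encodes that comparison. The function `master_case` and its return format are hypothetical, introduced only for illustration. It does not handle general $f$, and it relies on the fact that functions of this form satisfy the regularity condition whenever $d > \log_b a$.

```python
import math


def master_case(a, b, d, k=0):
    """Classify T(n) = a*T(n/b) + Theta(n^d * lg^k n), with a >= 1, b > 1, k >= 0.

    Returns (case, p, q), meaning T(n) = Theta(n^p * lg^q n).
    """
    if a < 1 or b <= 1 or k < 0:
        raise ValueError("requires a >= 1, b > 1, k >= 0")
    crit = math.log(a, b)  # critical exponent log_b(a)
    if math.isclose(d, crit, abs_tol=1e-12):
        # Case 2: f(n) and n^(log_b a) grow at similar rates.
        return 2, crit, k + 1
    if d < crit:
        # Case 1: f(n) is polynomially smaller; the leaves dominate.
        return 1, crit, 0
    # Case 3: f(n) is polynomially larger; the root dominates.
    return 3, d, k
```

For instance, `master_case(2, 2, 1)` reports Case 2 with exponent 1 and one logarithmic factor, which is the Mergesort bound $\Theta(n \lg n)$. A driving function such as $n^2/\lg n$ corresponds to $k = -1$ and is rejected, because none of the three cases covers it.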

Idea of master theorem

Recursion tree:

[Figure: recursion tree of height $h = \log_b n$. The root costs $f(n)$ and has $a$ children, each costing $f(n/b)$; each of those has $a$ children costing $f(n/b^2)$; and so on down to the leaves, which cost $T(1)$ each.]

– Level sums: $f(n)$, $a f(n/b)$, $a^2 f(n/b^2)$, …, $n^{\log_b a}\, T(1)$.

– #leaves = $a^h = a^{\log_b n} = n^{\log_b a}$.

CASE 1: The weight increases geometrically from the root to the leaves. The leaves hold a constant fraction of the total weight. Total: $\Theta(n^{\log_b a})$.
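The recursion-tree picture corresponds to the following sum. As a worked instance of Case 1, the derivation assumes the specific driving function $f(n) = n^{\log_b a - \varepsilon}$ and $n$ a power of $b$; the general statement needs only the $O(\cdot)$ bound.

```latex
\begin{align*}
T(n) &= \sum_{i=0}^{\log_b n - 1} a^i f\!\left(\frac{n}{b^i}\right)
        + \Theta\!\left(n^{\log_b a}\right),
\qquad a^{\log_b n} = b^{(\log_b a)(\log_b n)} = n^{\log_b a}. \\[4pt]
\text{With } f(n) &= n^{\log_b a - \varepsilon}: \quad
a^i f\!\left(\frac{n}{b^i}\right)
  = a^i \cdot \frac{n^{\log_b a - \varepsilon}}{a^i \, b^{-i\varepsilon}}
  = n^{\log_b a - \varepsilon}\,(b^{\varepsilon})^i, \\[4pt]
\sum_{i=0}^{\log_b n - 1} n^{\log_b a - \varepsilon}\,(b^{\varepsilon})^i
  &= n^{\log_b a - \varepsilon} \cdot \frac{n^{\varepsilon} - 1}{b^{\varepsilon} - 1}
  = \Theta\!\left(n^{\log_b a}\right).
\end{align*}
```

The level sums grow by the constant factor $b^{\varepsilon} > 1$ from one level to the next, so the bottom of the tree accounts for a constant fraction of the total.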

Examples

EX. $T(n) = 4T(n/2) + n^3$

$a = 4$, $b = 2$ $\Rightarrow$ $n^{\log_b a} = n^2$; $f(n) = n^3$.

CASE 3: $f(n) = \Omega(n^{2 + \varepsilon})$ for $\varepsilon = 1$ and $4(n/2)^3 \le c n^3$ (reg. cond.) for $c = 1/2$.

$\therefore T(n) = \Theta(n^3)$.

EX. $T(n) = 4T(n/2) + n^2/\lg n$

$a = 4$, $b = 2$ $\Rightarrow$ $n^{\log_b a} = n^2$; $f(n) = n^2/\lg n$.

Master method does not apply. In particular, for every constant $\varepsilon > 0$, we have $n^{\varepsilon} = \omega(\lg n)$, so $f(n)$ is not $O(n^{2 - \varepsilon})$ and Case 1 fails; since $f(n) = o(n^2)$, Cases 2 and 3 fail as well.

Notes

• Master Theorem generalized by Akra and Bazzi to cover many more recurrences.

• See http://www.dna.lth.se/home/Rolf_Karlsson/akrabazzi.ps

Multiplying large integers

• Given $n$-bit integers $a$, $b$ (in binary), compute $c = ab$

• Naïve (grade-school) algorithm:

– Write $a$, $b$ in binary

– Compute $n$ intermediate products

– Do $n$ additions

– Total work: $\Theta(n^2)$

[Figure: grade-school multiplication of $a_{n-1} a_{n-2} \ldots a_0$ by $b_{n-1} b_{n-2} \ldots b_0$: $n$ shifted intermediate products of $n$ bits each, padded with zeros, summed into a $2n$-bit output.]

Multiplying large integers

• Divide and Conquer (Attempt #1):

– Write $a = A_1 2^{n/2} + A_0$ and $b = B_1 2^{n/2} + B_0$

– We want $ab = A_1 B_1 2^n + (A_1 B_0 + B_1 A_0) 2^{n/2} + A_0 B_0$

– Multiply $n/2$-bit integers recursively

– $T(n) = 4T(n/2) + \Theta(n)$

– Alas! this is still $\Theta(n^2)$ (Master Theorem, Case 1). (Exercise: write out the recursion tree.)

• Karatsuba’s idea: $(A_0 + A_1)(B_0 + B_1) = A_0 B_0 + A_1 B_1 + (A_0 B_1 + A_1 B_0)$

– We can get away with 3 multiplications!

$x = A_1 B_1$

$y = A_0 B_0$

$z = (A_0 + A_1)(B_0 + B_1)$

– Now we use $ab = A_1 B_1 2^n + (A_1 B_0 + B_1 A_0) 2^{n/2} + A_0 B_0 = x\, 2^n + (z - x - y)\, 2^{n/2} + y$

Multiplying large integers

MULTIPLY(n, a, b)

▷ a and b are n-bit integers

▷ Assume n is a power of 2 for simplicity

1. If $n \le 2$ then use grade-school algorithm else

2. $A_1 \leftarrow a \text{ div } 2^{n/2}$; $B_1 \leftarrow b \text{ div } 2^{n/2}$;

3. $A_0 \leftarrow a \bmod 2^{n/2}$; $B_0 \leftarrow b \bmod 2^{n/2}$.

4. $x \leftarrow$ MULTIPLY($n/2$, $A_1$, $B_1$)

5. $y \leftarrow$ MULTIPLY($n/2$, $A_0$, $B_0$)

6. $z \leftarrow$ MULTIPLY($n/2$, $A_1 + A_0$, $B_1 + B_0$)

7. Output $x\, 2^n + (z - x - y)\, 2^{n/2} + y$
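A Python sketch of the three-multiplication scheme on non-negative integers is shown below. It is an illustration under stated assumptions rather than the slides' exact procedure: the split point `half` is derived from the operands' bit lengths instead of an explicit power-of-2 parameter $n$, and the base-case threshold of 16 is an arbitrary choice.

```python
def karatsuba(a, b):
    """Multiply non-negative integers a and b using 3 recursive multiplications."""
    if a < 16 or b < 16:
        # Base case: one operand is tiny, so multiply directly.
        return a * b
    # Split both operands at the same bit position (about half the longer length).
    half = max(a.bit_length(), b.bit_length()) // 2
    mask = (1 << half) - 1
    a1, a0 = a >> half, a & mask  # a = a1 * 2^half + a0
    b1, b0 = b >> half, b & mask  # b = b1 * 2^half + b0
    x = karatsuba(a1, b1)
    y = karatsuba(a0, b0)
    z = karatsuba(a0 + a1, b0 + b1)
    # z - x - y equals the cross term a1*b0 + a0*b1.
    return (x << (2 * half)) + ((z - x - y) << half) + y
```

The shifts play the role of multiplying by $2^n$ and $2^{n/2}$, which costs only linear time, so the work outside the three recursive calls stays $\Theta(n)$.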

Multiplying large integers

• The resulting recurrence

$T(n) = 3T(n/2) + \Theta(n)$

• Master Theorem, Case 1:

$T(n) = \Theta(n^{\log_2 3}) = \Theta(n^{1.59\ldots})$

• Note: There is a $\Theta(n \log n)$ algorithm for multiplication (more on it later in the course).

Matrix multiplication

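Input: $A = [a_{ij}]$, $B = [b_{ij}]$, $i, j = 1, 2, \ldots, n$.

Output: $C = [c_{ij}] = A \times B$.

$$\begin{pmatrix} c_{11} & c_{12} & \cdots & c_{1n} \\ c_{21} & c_{22} & \cdots & c_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ c_{n1} & c_{n2} & \cdots & c_{nn} \end{pmatrix} = \begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ a_{n1} & a_{n2} & \cdots & a_{nn} \end{pmatrix} \begin{pmatrix} b_{11} & b_{12} & \cdots & b_{1n} \\ b_{21} & b_{22} & \cdots & b_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ b_{n1} & b_{n2} & \cdots & b_{nn} \end{pmatrix}$$

$$c_{ij} = \sum_{k=1}^{n} a_{ik} b_{kj}$$

9/24/2008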

Standard algorithm

for $i \leftarrow 1$ to $n$

do for $j \leftarrow 1$ to $n$

do $c_{ij} \leftarrow 0$

for $k \leftarrow 1$ to $n$

do $c_{ij} \leftarrow c_{ij} + a_{ik} \times b_{kj}$

Running time = $\Theta(n^3)$
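The triple loop translates directly into Python. This sketch assumes square matrices stored as lists of rows with 0-based indices; the name `matmul` is chosen here for illustration.

```python
def matmul(A, B):
    """Multiply two n x n matrices given as lists of rows, in Theta(n^3) time."""
    n = len(A)
    C = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            # c_ij is the dot product of row i of A and column j of B.
            for k in range(n):
                C[i][j] += A[i][k] * B[k][j]
    return C
```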

Divide-and-conquer algorithm

IDEA:

$n \times n$ matrix = $2 \times 2$ matrix of $(n/2) \times (n/2)$ submatrices:

$$\begin{pmatrix} r & s \\ t & u \end{pmatrix} = \begin{pmatrix} a & b \\ c & d \end{pmatrix} \begin{pmatrix} e & f \\ g & h \end{pmatrix}, \qquad C = A \times B$$

$r = ae + bg$

$s = af + bh$

$t = ce + dg$

$u = cf + dh$

– 8 recursive mults of $(n/2) \times (n/2)$ submatrices

– 4 adds of $(n/2) \times (n/2)$ submatrices

Analysis of D&C algorithm

$$T(n) = 8\,T(n/2) + \Theta(n^2)$$

– 8: # submatrices

– $n/2$: submatrix size

– $\Theta(n^2)$: work adding submatrices

$n^{\log_b a} = n^{\log_2 8} = n^3$ $\Rightarrow$ CASE 1 $\Rightarrow$ $T(n) = \Theta(n^3)$.

No better than the ordinary algorithm.

Strassen’s idea

• Multiply $2 \times 2$ matrices with only 7 recursive mults.

$P_1 = a \times (f - h)$

$P_2 = (a + b) \times h$

$P_3 = (c + d) \times e$

$P_4 = d \times (g - e)$

$P_5 = (a + d) \times (e + h)$

$P_6 = (b - d) \times (g + h)$

$P_7 = (a - c) \times (e + f)$

$r = P_5 + P_4 - P_2 + P_6$

$s = P_1 + P_2$

$t = P_3 + P_4$

$u = P_5 + P_1 - P_3 - P_7$

7 mults, 18 adds/subs.

Note: No reliance on commutativity of multiplication!

Check for $r$:

$$\begin{aligned} r &= P_5 + P_4 - P_2 + P_6 \\ &= (a + d)(e + h) + d(g - e) - (a + b)h + (b - d)(g + h) \\ &= ae + ah + de + dh + dg - de - ah - bh + bg + bh - dg - dh \\ &= ae + bg \end{aligned}$$

Strassen’s algorithm

1. Divide: Partition A and B into $(n/2) \times (n/2)$ submatrices. Form terms to be multiplied using + and –.

2. Conquer: Perform 7 multiplications of $(n/2) \times (n/2)$ submatrices recursively.

3. Combine: Form product matrix C using + and – on $(n/2) \times (n/2)$ submatrices.

$T(n) = 7\,T(n/2) + \Theta(n^2)$
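The three steps can be sketched in pure Python using the seven products $P_1, \ldots, P_7$ from the preceding slides. This sketch assumes $n$ is a power of 2 and recurses all the way down to $1 \times 1$ blocks for clarity; a practical implementation would switch to the ordinary algorithm below some cutoff. The helper names `add`, `sub`, and `split` are introduced here for illustration.

```python
def add(X, Y):
    """Entrywise sum of two equally sized matrices."""
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def sub(X, Y):
    """Entrywise difference of two equally sized matrices."""
    return [[x - y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def split(M):
    """Split M into four quadrants: top-left, top-right, bottom-left, bottom-right."""
    m = len(M) // 2
    return ([row[:m] for row in M[:m]], [row[m:] for row in M[:m]],
            [row[:m] for row in M[m:]], [row[m:] for row in M[m:]])


def strassen(A, B):
    """Multiply n x n matrices (n a power of 2) with 7 recursive multiplications."""
    if len(A) == 1:
        return [[A[0][0] * B[0][0]]]
    # Divide: partition into (n/2) x (n/2) submatrices.
    a, b, c, d = split(A)
    e, f, g, h = split(B)
    # Conquer: the seven recursive products.
    p1 = strassen(a, sub(f, h))
    p2 = strassen(add(a, b), h)
    p3 = strassen(add(c, d), e)
    p4 = strassen(d, sub(g, e))
    p5 = strassen(add(a, d), add(e, h))
    p6 = strassen(sub(b, d), add(g, h))
    p7 = strassen(sub(a, c), add(e, f))
    # Combine: assemble the four quadrants of the product.
    r = add(sub(add(p5, p4), p2), p6)
    s = add(p1, p2)
    t = add(p3, p4)
    u = sub(sub(add(p5, p1), p3), p7)
    top = [rr + rs for rr, rs in zip(r, s)]
    bottom = [rt + ru for rt, ru in zip(t, u)]
    return top + bottom
```

Each level performs a constant number of $(n/2) \times (n/2)$ additions and subtractions, which is the $\Theta(n^2)$ term in the recurrence.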

Analysis of Strassen

$T(n) = 7\,T(n/2) + \Theta(n^2)$

$n^{\log_b a} = n^{\log_2 7} \approx n^{2.81}$ $\Rightarrow$ CASE 1 $\Rightarrow$ $T(n) = \Theta(n^{\lg 7})$.

•Number 2.81 may not seem much smaller than 3.

•But the difference is in the exponent.

•The impact on running time is significant.

•Strassen’s algorithm beats the ordinary algorithm on today’s machines for $n \ge 32$ or so.

Best known when these slides were written (of theoretical interest only): $O(n^{2.376})$.