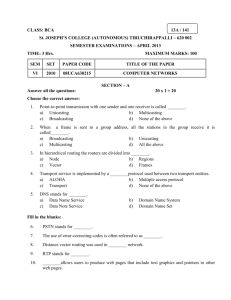

CS252 Graduate Computer Architecture
Lecture 16: Multiprocessor Networks (cont’d)
March 16th, 2011
John Kubiatowicz
Electrical Engineering and Computer Sciences
University of California, Berkeley
http://www.eecs.berkeley.edu/~kubitron/cs252

Review: The Routing problem: Local decisions
[Figure: router datapath with input ports, receivers, input buffers, cross-bar, output buffers, transmitters, and output ports; a control block handles routing and scheduling]
• Routing at each hop: Pick next output port!

3/16/2011 cs252-S11, Lecture 16 2

Reducing routing delay: Express Cubes
• Problem: Low-dimensional networks have high k
  – Consequence: may have to travel many hops in single dimension
  – Routing latency can dominate long-distance traffic patterns
• Solution: Provide one or more “express” links
  – Like express trains, express elevators, etc
    » Delay linear with distance, lower constant
    » Closer to “speed of light” in medium
    » Lower power, since no router cost
  – “Express Cubes: Improving performance of k-ary n-cube interconnection networks,” Bill Dally 1991
• Another Idea: route with pass transistors through links

Review: Virtual Channel Flow Control
• Basic Idea: Use of virtual channels to reduce contention
  – Provided a model of k-ary, n-flies
  – Also provided simulation
• Tradeoff: Better to split buffers into virtual channels
  – Example (constant total storage for 2-ary 8-fly):
[Figure not preserved]

When are virtual channels allocated?
[Figure: input ports, cross-bar, output ports; caption: hardware-efficient design for crossbar]
• Two separate processes:
  – Virtual channel allocation
  – Switch/connection allocation
• Virtual Channel Allocation
  – Choose route and free output virtual channel
  – Really means: Source of link tracks channels at destination
• Switch Allocation
  – For incoming virtual channel, negotiate switch on outgoing pin

Deadlock Freedom
• How can deadlock arise?
  – necessary conditions:
    » shared resource
    » incrementally allocated
    » non-preemptible
  – channel is a shared resource that is acquired incrementally
    » source buffer then dest. buffer
    » channels along a route
• How do you avoid it?
  – constrain how channel resources are allocated
  – ex: dimension order
• Important assumption:
  – Destination of messages must always remove messages
• How do you prove that a routing algorithm is deadlock free?
  – Show that channel dependency graph has no cycles!

Consider Trees
• Why is the obvious routing on X deadlock free?
  – butterfly?
  – tree?
  – fat tree?
• Any assumptions about routing mechanism? amount of buffering?

Up*-Down* routing for general topology
• Given any bidirectional network
• Construct a spanning tree
• Number the nodes increasing from leaves to roots
• UP edges increase node numbers
• Any Source -> Dest by UP*-DOWN* route
  – up edges, single turn, down edges
  – Proof of deadlock freedom?
• Performance?
  – Some numberings and routes much better than others
  – interacts with topology in strange ways

Turn Restrictions in X,Y
[Figure: turns among the +X, -X, +Y, -Y directions]
• XY routing forbids 4 of 8 turns and leaves no room for adaptive routing
• Can you allow more turns and still be deadlock free?
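The "4 of 8 turns" claim can be checked by brute force. The following is a minimal sketch, assuming a small k x k mesh without wrap-around links; the names `xy_route`, `turns_used`, and `ALL_TURNS` are hypothetical and not from the lecture. It enumerates every dimension-order route and records which 90-degree turns ever occur.

```python
from itertools import product

# Unit steps for the four directions of a 2D mesh (illustrative naming).
DIRS = {"+X": (1, 0), "-X": (-1, 0), "+Y": (0, 1), "-Y": (0, -1)}

# A turn is a change from one axis to the other: 4 directions x 2 choices = 8.
ALL_TURNS = {(a, b) for a in DIRS for b in DIRS if a[1] != b[1]}


def xy_route(src, dst):
    """Dimension-order route: take every X hop first, then every Y hop."""
    x, y = src
    hops = []
    while x != dst[0]:
        step = "+X" if dst[0] > x else "-X"
        x += DIRS[step][0]
        hops.append(step)
    while y != dst[1]:
        step = "+Y" if dst[1] > y else "-Y"
        y += DIRS[step][1]
        hops.append(step)
    return hops


def turns_used(k):
    """Collect every turn taken by any XY route on a k x k mesh."""
    nodes = list(product(range(k), repeat=2))
    used = set()
    for src, dst in product(nodes, repeat=2):
        hops = xy_route(src, dst)
        for a, b in zip(hops, hops[1:]):
            if a != b:  # direction changed, so this hop pair is a turn
                used.add((a, b))
    return used


used = turns_used(4)
forbidden = ALL_TURNS - used
print(sorted(used))       # only X-to-Y turns appear
print(sorted(forbidden))  # the four Y-to-X turns are never taken
```

Because every route has exactly one legal path, there is no choice left for a router to make, which is the "no room for adaptive routing" point on the slide.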
Minimal turn restrictions in 2D
[Figure: allowed turns among +x, -x, +y, -y for three turn models: west-first, north-last, negative-first]

Example legal west-first routes
• Can route around failures or congestion
• Can combine turn restrictions with virtual channels

General Proof Technique
• resources are logically associated with channels
• messages introduce dependences between resources as they move forward
• need to articulate the possible dependences that can arise between channels
• show that there are no cycles in Channel Dependence Graph
  – find a numbering of channel resources such that every legal route follows a monotonic sequence
  – => no traffic pattern can lead to deadlock
• network need not be acyclic, just channel dependence graph

Example: k-ary 2D array
• Thm: Dimension-ordered (x,y) routing is deadlock free
• Numbering
  – +x channel (i,y) -> (i+1,y) gets i
  – similarly for -x with 0 as most positive edge
  – +y channel (x,j) -> (x,j+1) gets N+j
  – similarly for -y channels
• any routing sequence: x direction, turn, y direction is increasing
• Generalization:
  – “e-cube routing” on 3-D: X then Y then Z
[Figure: 4x4 array with nodes 00 through 33 and numbered channels]

Channel Dependence Graph
[Figure: channel dependence graph for the 4x4 array under the numbering above]

More examples:
• What about wormhole routing on a ring?
[Figure: ring of eight nodes, 0 through 7]
• Or: Unidirectional Torus of higher dimension?
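One way to explore the ring question is to build the channel dependence graph explicitly and search it for a cycle. This is a rough sketch under stated assumptions: the ring is unidirectional, channel i is the link from node i to node (i+1) mod n, and a packet holding one channel may wait on the next channel of its route. The helpers `ring_cdg` and `has_cycle` are hypothetical names for illustration.

```python
def ring_cdg(n, wrap=True):
    """Channel dependence graph for n nodes connected in one direction.

    Channel i is the link from node i to node (i + 1) % n. With wrap=False
    the wrap-around link is never used, so only routes with src < dst exist.
    """
    deps = {c: set() for c in range(n)}
    for src in range(n):
        for dst in range(n):
            if src == dst:
                continue
            if not wrap and dst < src:
                continue  # unreachable without the wrap-around link
            hops = (dst - src) % n
            chans = [(src + h) % n for h in range(hops)]
            # A packet holding channel a may be waiting for channel b.
            for a, b in zip(chans, chans[1:]):
                deps[a].add(b)
    return deps


def has_cycle(deps):
    """Depth-first search with three colors; a grey-to-grey edge is a cycle."""
    WHITE, GREY, BLACK = 0, 1, 2
    color = {c: WHITE for c in deps}

    def visit(c):
        color[c] = GREY
        for nxt in deps[c]:
            if color[nxt] == GREY:
                return True
            if color[nxt] == WHITE and visit(nxt):
                return True
        color[c] = BLACK
        return False

    return any(color[c] == WHITE and visit(c) for c in deps)


print(has_cycle(ring_cdg(8)))              # ring with wrap-around
print(has_cycle(ring_cdg(8, wrap=False)))  # same links, wrap-around unused
```

Under these assumptions the wrap-around link is what closes the dependence cycle; the linear array built from the same links has an acyclic graph.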
Breaking deadlock with virtual channels
[Figure: packet switches from lo to hi channel]
• Basic idea: Use virtual channels to break cycles
  – Whenever wrap around, switch to different set of channels
  – Can produce numbering that avoids deadlock

Administrative
• Should be working full blast on project by now!
  – I’m going to want you to submit an update after spring break
  – We will meet shortly after that
• Midterm I: 3/30
  – Two-hour exam over three hours.
    » Tentatively 2:30-5:30
  – Material: Everything up to today is fair game (including papers)
  – One page of notes – both sides
  – Plenty of sample exams up on the handouts page

General Adaptive Routing
• R: C x N x S -> C
• Essential for fault tolerance
  – at least multipath
• Can improve utilization of the network
• Simple deterministic algorithms easily run into bad permutations
• fully/partially adaptive, minimal/non-minimal
• can introduce complexity or anomalies
• little adaptation goes a long way!
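To make the routing function R: C x N x S -> C concrete, here is a minimal sketch of a partially adaptive, minimal router based on the west-first turn model from the earlier slides. Everything specific is an assumption for illustration: the port names, the `occupancy` dictionary standing in for the state S, and the function name `west_first_route`. The input channel is ignored because this particular policy depends only on the current position and the destination.

```python
def west_first_route(cur, dst, occupancy):
    """Pick an output port at node `cur` for a packet headed to `dst`.

    cur, dst:   (x, y) coordinates in a 2D mesh, x growing east, y growing north
    occupancy:  dict mapping port name to queued flits (the state S)
    Returns one of "W", "E", "N", "S", or "EJECT".
    """
    dx = dst[0] - cur[0]
    dy = dst[1] - cur[1]
    if dx == 0 and dy == 0:
        return "EJECT"
    if dx < 0:
        # West-first: all westward hops happen before anything else,
        # so no later turn into the west direction is ever needed.
        return "W"
    # Remaining productive ports may be chosen adaptively.
    candidates = []
    if dx > 0:
        candidates.append("E")
    if dy > 0:
        candidates.append("N")
    if dy < 0:
        candidates.append("S")
    # Prefer the least congested productive port; ties go to the first listed.
    return min(candidates, key=lambda port: occupancy.get(port, 0))


print(west_first_route((1, 1), (3, 3), {"E": 5, "N": 1}))  # congestion steers north
print(west_first_route((2, 2), (0, 3), {"N": 0, "W": 9}))  # must still go west first
```

The second call shows the cost of the restriction: when the destination lies to the west, the router has no adaptive choice until the westward hops are done.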
Paper Discussion: Linder and Harden, “An Adaptive and Fault Tolerant Wormhole”
• General virtual-channel scheme for k-ary n-cubes
  – With wrap-around paths
• Properties of result for uni-directional k-ary n-cube:
  – 1 virtual interconnection network
  – n+1 levels
• Properties of result for bi-directional k-ary n-cube:
  – 2^(n-1) virtual interconnection networks
  – n+1 levels per network

Example: Unidirectional 4-ary 2-cube
Physical Network
• Wrap-around channels necessary but can cause deadlock
Virtual Network
• Use VCs to avoid deadlock
• 1 level for each wrap-around

Bi-directional 4-ary 2-cube: 2 virtual networks
[Figure: Virtual Network 1 and Virtual Network 2]

Use of virtual channels for adaptation
• Want to route around hotspots/faults while avoiding deadlock
• Linder and Harden, 1991
  – General technique for k-ary n-cubes
    » Requires: 2^(n-1) virtual channels/lane!!!
• Alternative: Planar adaptive routing
  – Chien and Kim, 1995
  – Divide dimensions into “planes”,
    » i.e. in 3-cube, use X-Y and Y-Z
  – Route planes adaptively in order: first X-Y, then Y-Z
    » Never go back to plane once have left it
    » Can’t leave plane until have routed lowest coordinate
  – Use Linder-Harden technique for series of 2-dim planes
    » Now, need only 3 × (number of planes) virtual channels
• Alternative: two phase routing
  – Provide set of virtual channels that can be used arbitrarily for routing
  – When blocked, use unrelated virtual channels for dimension-order (deterministic) routing
  – Never progress from deterministic routing back to adaptive routing

Message passing
• Sending of messages under control of programmer
  – User-level/system level?
  – Bulk transfers?
• How efficient is it to send and receive messages?
  – Speed of memory bus? First-level cache?
• Communication Model:
  – Synchronous
    » Send completes after matching recv and source data sent
    » Receive completes after data transfer complete from matching send
  – Asynchronous
    » Send completes after send buffer may be reused

Synchronous Message Passing
[Figure: timeline between Source (Send Pdest, local VA, len) and Destination (Recv Psrc, local VA, len); the source waits while the destination does the tag check; annotation: Processor Action?]
(1) Initiate send
(2) Address translation on Psrc
(3) Local/remote check
(4) Send-ready request (Send-rdy req)
(5) Remote check for posted receive (assume success)
(6) Reply transaction (Recv-rdy reply)
(7) Bulk data transfer, Source VA -> Dest VA or ID (Data-xfer req)
• Constrained programming model.
• Deterministic! What happens when threads added?
• Destination contention very limited.
• User/System boundary?

Asynch. Message Passing: Optimistic
[Figure: timeline between Source (Send (Pdest, local VA, len)) and Destination (Recv Psrc, local VA, len); the destination does a tag match and allocates a buffer]
(1) Initiate send
(2) Address translation
(3) Local/remote check
(4) Send data (Data-xfer req)
(5) Remote check for posted receive; on fail, allocate data buffer
• More powerful programming model
• Wildcard receive => non-deterministic
• Storage required within msg layer?

Asynch. Msg Passing: Conservative
[Figure: timeline between Source (Send Pdest, local VA, len) and Destination (Recv Psrc, local VA, len); the source returns and computes while the destination does the tag check]
(1) Initiate send
(2) Address translation on Pdest
(3) Local/remote check
(4) Send-ready request (Send-rdy req)
(5) Remote check for posted receive (assume fail); record send-ready
(6) Receive-ready request (Recv-rdy req)
(7) Bulk data reply, Source VA -> Dest VA or ID (Data-xfer reply)
• Where is the buffering?
• Contention control? Receiver initiated protocol?
• Short message optimizations

Features of Msg Passing Abstraction
• Source knows send data address, dest.
  knows receive data address
  – after handshake they both know both
• Arbitrary storage “outside the local address spaces”
  – may post many sends before any receives
  – non-blocking asynchronous sends reduce the requirement to an arbitrary number of descriptors
    » fine print says these are limited too
• Optimistically, can be 1-phase transaction
  – Compare to 2-phase for shared address space
  – Need some sort of flow control
    » Credit scheme?
• More conservative: 3-phase transaction
  – includes a request / response
• Essential point: combined synchronization and communication in a single package!

Discussion of Active Messages paper
• Thorsten von Eicken, David E. Culler, Seth Copen Goldstein, Klaus Erik Schauser:
  – “Active messages: a mechanism for integrated communication and computation”
• Essential idea?
  – Fast message primitive
  – Handlers must be non-blocking!
  – Head of message contains pointer to code to run on destination node
  – Handler’s sole job is to integrate values into running computation
• Could be compiled from Split-C into TAM runtime
• Much better balance of network and computation on existing hardware!

Active Messages
[Figure: request handler and reply handler]
• User-level analog of network transaction
  – transfer data packet and invoke handler to extract it from the network and integrate with on-going computation
• Request/Reply
• Event notification: interrupts, polling, events?
• May also perform memory-to-memory transfer

Summary
• Routing Algorithms restrict the set of routes within the topology
  – simple mechanism selects turn at each hop
  – arithmetic, selection, lookup
• Virtual Channels
  – Adds complexity to router
  – Can be used for performance
  – Can be used for deadlock avoidance
• Deadlock-free if channel dependence graph is acyclic
  – limit turns to eliminate dependences
  – add separate channel resources to break dependences
  – combination of topology, algorithm, and switch design
• Deterministic vs adaptive routing
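The "add separate channel resources to break dependences" point can be illustrated with the numbering argument from the proof technique. This is a sketch under assumptions, not the lecture's exact scheme: a unidirectional n-node ring, two virtual channel classes per link (lo = 0, hi = 1), and a packet that moves from lo to hi after crossing the wrap-around link. The names `dateline_route`, `channel_number`, and `all_routes_monotonic` are hypothetical.

```python
def dateline_route(src, dst, n, use_dateline=True):
    """List the (link, vc) channels a packet uses from src to dst.

    Link i runs from node i to node (i + 1) % n. Packets start on the lo
    class (vc 0) and, if use_dateline is set, switch to the hi class (vc 1)
    once they have crossed the wrap-around link n - 1.
    """
    chans, node, vc = [], src, 0
    while node != dst:
        chans.append((node, vc))
        if use_dateline and node == n - 1:
            vc = 1  # just crossed the wrap-around link
        node = (node + 1) % n
    return chans


def channel_number(chan, n):
    """Number lo channels 0..n-1 and hi channels n..2n-1."""
    link, vc = chan
    return vc * n + link


def all_routes_monotonic(n, use_dateline=True):
    """True if every route visits channels in strictly increasing order."""
    for src in range(n):
        for dst in range(n):
            nums = [channel_number(c, n)
                    for c in dateline_route(src, dst, n, use_dateline)]
            if any(a >= b for a, b in zip(nums, nums[1:])):
                return False
    return True


print(dateline_route(6, 2, 8))                       # lo on links 6, 7; hi on links 0, 1
print(all_routes_monotonic(8))                       # numbering increases on every route
print(all_routes_monotonic(8, use_dateline=False))   # single class: wrap breaks the order
```

If every route follows strictly increasing channel numbers, every dependence points from a lower number to a higher one, so the channel dependence graph cannot contain a cycle.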