Assessment Challenges in Education

Jamie Cromack (Chair)

Program Manager, ER&P

Microsoft Corporation

Wilhelmina Savenye

Professor – Educational Technology

Arizona State University

Jane Prey

Program Manager, ER&P

Microsoft Corporation

Agenda

Introducing the MSR Assessment Toolkit

Background and goals

Brief overview of the Toolkit

Discussion of assessment challenges

Background

What is the MSR Assessment Toolkit?

The Assessment Toolkit is a unique resource for CS, Engineering, and other STEM faculty

Though many web-based resources exist, none will do what this MSR tool will

A partnership among MSR, leading faculty in assessment, and faculty in Computing Science and Science, Technology, Engineering and Mathematics (STEM) disciplines

MSR Faculty Summit Packet

White Paper

Learning about Learning in Computational Science and Science, Technology, Engineering and Mathematics (STEM) Education

J. Cromack and W. Savenye, 2007

Background

Why was the MSR Assessment Toolkit developed?

Faculty must do assessment; few have the time, training, and support to do it as well as they would like

STEM faculty are very interested in using the MSR Assessment Toolkit

Background

Why has assessment become increasingly important?

External factors – trends:

Accountability/accreditation

Funding agency requirements

Funding and enrollment issues

Changes in pedagogy, instructional design

Internal factors – collect data to:

Improve educational experience for students

Motivate students

Microsoft Research Assessment Toolkit

Developed by:

Willi Savenye and Gamze Ozogul, Arizona State University

Jamie Cromack, ER&P, MSR

The MSR Assessment Toolkit

The Assessment Toolkit consists of a set of assessment resources designed to aid faculty in building stronger measures of the success of their projects, during all phases of their projects’ life cycles

Version 1 now deployed

We are looking forward to your feedback

MSR Assessment Toolkit

Components

1. Assessment Planning Overview
2. Assessment FAQs
3. User Grid to help faculty determine which resources might be most useful for their level of assessment experience
4. Assessment Methods Selection Guide
5. Focused Assessment Resources (an annotated bibliography)
6. Case Studies in Effective Assessment

3. The User Grid

What level of assessment user are you?

BASIC (B)

If you:
• Want to know more about how students learn in or experience your class
• Have generally administered only the university-constructed end-of-term student surveys
• Have not used a rubric to grade an assignment

TYPICAL (T)

If you have done some of the following:
• Thought about specific learning goals or outcomes for your class
• Thought about the overall goals of your curriculum and how it prepares students
• Supplemented the university-constructed end-of-term surveys with simple mid-term surveys
• Done minute papers or fast-feedback sheets
• Used pre-designed rubrics with minimal adaptation

POWER (P)

If you:
• Seek to define and describe in detail student experience with learning goals in your classroom
• Seek to define and describe in detail student preparation as experienced through your curriculum
• Have regularly administered pre/post surveys, mid-term surveys and end-of-term surveys
• Have created from scratch or significantly adapted a rubric for grading
• Have reported on qualitative or quantitative results of studies of student understanding in peer-reviewed literature venues

4. Assessment Methods Selection Guide

As faculty begin to select their assessment methods, they should make sure that they measure:

Student Performance,

Satisfaction, and

Retention (if desired)

They should also include a balance of both direct and indirect measures, as well as both formative measures (to improve instruction in process) and summative measures (to make evaluative decisions at or near the end of the project)

4. Assessment Methods Selection Guide

Direct (observable phenomena)

Indirect (self-report data)

Summative (accountability, retroactive, evaluative)
• Exams & quizzes (may be used pre- and post-course)
• Projects & assignments (often evaluated using rubrics/checklists)
• Student performance on case studies
• Final grades
• Concept maps/flowcharts
• Observations (often using rubrics/checklists)
• Portfolios
• Capstone projects
• Course/test embedded assessment
• Outcomes assessment (often using rubrics/checklists)
• Demonstration of a skill (often using rubrics/checklists)
• Exit surveys
• Exit interviews
• End of term survey
• Student ratings
• Interviews

Formative (decision making, proactive, diagnostic)
• Exams & quizzes (may be used pre- and post-course)
• Projects & assignments (often evaluated using rubrics/checklists)
• Student performance on case studies
• Mid-course and assignment grades
• Concept maps/flowcharts
• Observations (often using rubrics/checklists)
• Background knowledge probes
• Minute or fast-feedback papers
• Student-generated test questions
• Diagnostic learning logs or journals
• Muddiest point surveys
• Satisfaction surveys
• Attitude surveys
• Mid-semester feedback surveys
• Student ratings
• Interviews

Methods for Measuring Student Performance and Retention (e.g., a short selection)

Method: Constructed response-multiple choice items (performance, retention)
User level: B, T, P
Class size: La
Type: F/S
Description: Used to gather data about student performance and level of mastery of the course content. Can be used in pretest and posttest forms administered at the beginning of the course and again at the end.
Strengths:
• Can cover broad scope of content
• Objective assessment
• Easy to score
• Reusable
Limitations:
• Reliability and validity concerns
• Time consuming to build
• Answers limited to constructed responses
Example question(s):
• Which one below is a type of processing? A) Batch B) Series C) Network D) Distributed

Method: Short answer items (performance/retention)
User level: B, T
Class size: La
Type: F/S
Description: Used to gather data about student knowledge about a topic. Can be used in pretest and posttest forms administered at the beginning of the course and again at the end.
Strengths:
• Easy to prepare
• Reusable
• Easy to score
Limitations:
• Limited number of questions
• Limited scope of content
Example question(s):
• What is an algorithm?

Method: Grades (mid-course or final grades) (performance or retention)
User level: B, T, P
Class size: La/Sm
Type: F/S
Description: Collected on projects, assignments, quizzes, etc. mid-course, as well as collected at the end of the course.
Strengths:
• Easy to collect, as necessary
• Can be tracked over multiple courses over time
Limitations:
• May not be fine-grained enough to yield differences
• Influenced by many factors in addition to course innovations
Example question(s):
• Grade book entries
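The pretest/posttest use described for these methods can be sketched in a few lines of Python. This is only an illustration, not part of the Toolkit: the function name `summarize_pre_post`, the score lists, and the choice of summary statistics are assumptions, and it presumes each student took both tests and that scores are listed in the same student order.

```python
from statistics import mean


def summarize_pre_post(pre_scores, post_scores):
    """Summarize paired pretest/posttest scores (hypothetical helper).

    Assumes pre_scores[i] and post_scores[i] belong to the same student.
    """
    if len(pre_scores) != len(post_scores):
        raise ValueError("each student needs both a pretest and a posttest score")
    if not pre_scores:
        raise ValueError("no scores to summarize")

    # Raw gain per student: posttest score minus pretest score.
    gains = [post - pre for pre, post in zip(pre_scores, post_scores)]

    return {
        "mean_pre": mean(pre_scores),
        "mean_post": mean(post_scores),
        "mean_gain": mean(gains),
        "students_improved": sum(1 for g in gains if g > 0),
    }


if __name__ == "__main__":
    # Made-up scores for three students, for illustration only.
    print(summarize_pre_post([40, 50, 60], [60, 70, 80]))
```

A raw gain like this says nothing about why scores changed; as the limitations listed for grades note, results are influenced by many factors in addition to course innovations.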

Methods for Measuring Student Satisfaction (e.g., a short selection)

Instrument: Attitude surveys (satisfaction)
User level: B, T, P
Class size: La/Sm
Type: F/S
Description: Provide data about students’ perceptions about a topic/class. Can be used to make formative or summative decisions depending on the time of administration. Generally Likert type questions; may be followed by a few open-ended questions. May be used as a pre/post measure.
Strengths:
• Easy to administer
• Cover many areas of attitude
• Can be used in large groups
Limitations:
• Do not provide in-depth information about attitudes
Example question(s):
• The course projects were difficult for me.
• Strongly agree, agree, disagree, strongly disagree

Instrument: Happy sheets (satisfaction)
User level: B
Class size: La
Type: F
Description: Provide data about students’ perceptions. Generally used to make formative decisions; can be given at the end of any class or seminar. Likert-type or open-ended questions.
Strengths:
• Easy to prepare and administer
• Provide specific and fast feedback on a class session
Limitations:
• Limited number of questions
Example question(s):
• This session was useful to me:
• Agree
• Neutral
• Disagree

Instrument: Open-ended questionnaires (satisfaction)
User level: B, T
Class size: Sml/Smpl
Type: F/S
Description: Provide data about students’ perceptions in detail on a specific topic. Open-ended questions.
Strengths:
• Easy to prepare
• Collect detailed information
Limitations:
• Suitable for smaller groups
• It takes time to analyze responses to each open-ended question
• Limited number of questions
Example question(s):
• What did you like the most (and least) about the materials used in this session?
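As a rough illustration of how responses to a Likert-type item such as "The course projects were difficult for me" might be tallied, here is a minimal Python sketch. It is not part of the Toolkit: the function name `summarize_likert`, the sample responses, and the 4-to-1 numeric coding of the four response options are all assumptions made for the example.

```python
from collections import Counter

# The four response options from the example item, in order.
SCALE = ["strongly agree", "agree", "disagree", "strongly disagree"]

# Assumed numeric coding (4 = strongly agree ... 1 = strongly disagree).
SCORES = dict(zip(SCALE, (4, 3, 2, 1)))


def summarize_likert(responses):
    """Tally responses to one Likert-type item (hypothetical helper)."""
    cleaned = [r.strip().lower() for r in responses]
    if not cleaned:
        raise ValueError("no responses to summarize")
    unknown = sorted({r for r in cleaned if r not in SCORES})
    if unknown:
        raise ValueError(f"unrecognized responses: {unknown}")

    counts = Counter(cleaned)
    n = len(cleaned)
    agree = counts["strongly agree"] + counts["agree"]
    return {
        "counts": {label: counts[label] for label in SCALE},
        "percent_agree": 100 * agree / n,
        "mean_score": sum(SCORES[r] for r in cleaned) / n,
    }


if __name__ == "__main__":
    # Made-up responses, for illustration only.
    print(summarize_likert(["Agree", "Strongly agree", "Disagree", "Agree"]))
```

Reporting the counts alongside any mean is a deliberate choice here: a mean of coded responses treats the options as evenly spaced, which is itself an assumption.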

5. Focused Assessment Resources

Annotated, responsive to user needs

Selected books and articles on assessment focusing on Engineering and Computer Science Education

Online “HANDBOOKS” on Assessment and Evaluation

Web Sites of Professional Organizations Offering Resources on Assessment and Evaluation

Other Useful Evaluation and Assessment Web Sites

Selected Books on Learning and Instructional Planning

Online Journal on Assessment and Evaluation

6. Case Studies in Effective Assessment

Mark Guzdial

Professor of Computer Science

Georgia Tech

Example of Comprehensive Assessment Projects, adapted at many universities

Human Subjects – consent letter

Pre-course surveys

Post-course surveys

Interview protocols

Grades

Discussion of Assessment Challenges

Introduction

Dr. Jane Prey and Dr. Jamie Cromack

MSR, ER&P

Open Discussion – Participants

What are the most important assessment challenges for you and your colleagues?

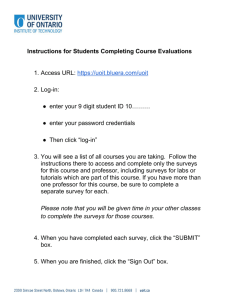

MSR Assessment Toolkit

http://research.microsoft.com/erp/AssessmentToolkit

We welcome your input!

© 2007 Microsoft Corporation. All rights reserved.

Microsoft Research

Faculty Summit 2007