Multicollinearity

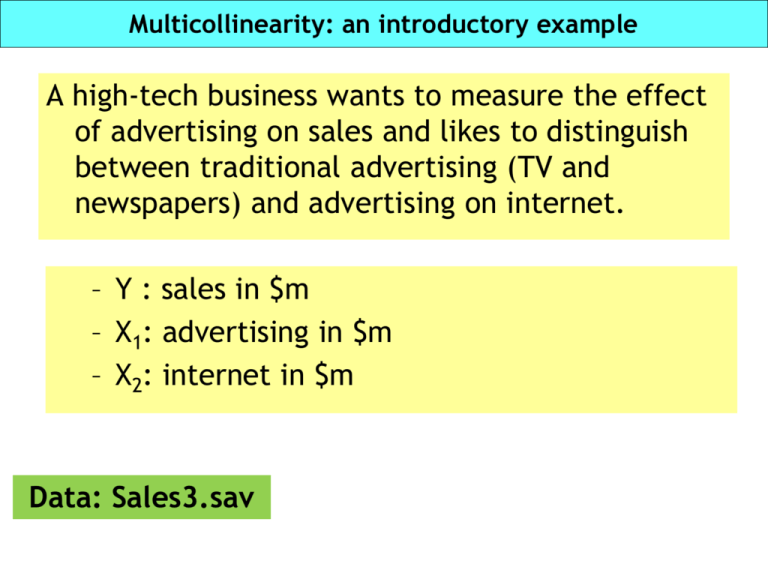

Multicollinearity: an introductory example

A high-tech business wants to measure the effect of advertising on sales and to distinguish between traditional advertising (TV and newspapers) and internet advertising.

- Y: sales, in millions of dollars
- X1: traditional advertising, in millions of dollars
- X2: internet advertising, in millions of dollars

Data: Sales3.sav

[Figure: matrix scatter plot of the data]

- Cor(y, x1) = 0.983
- Cor(y, x2) = 0.986
- Cor(x1, x2) = 0.990

x1 and x2 are strongly correlated, i.e. they share a substantial amount of common information:

$x_1 = \alpha_0 + \alpha_1 x_2 + \varepsilon$

Regression output: using x1 only

Equivalent results are obtained when using x2 only.

Model summary

| Model | R | R² | Adjusted R² | Std. error of the estimate |
|---|---|---|---|---|
| 1 | 0.983 | 0.965 | 0.962 | 0.9764 |

ANOVA

| Source | SS | df | MS | F | Sig. |
|---|---|---|---|---|---|
| Regression | 265.466 | 1 | 265.466 | 278.438 | 0.000 |
| Residual | 9.534 | 10 | 0.953 | | |
| Total | 275.000 | 11 | | | |

Coefficients

| Term | B | Std. error | t | Sig. |
|---|---|---|---|---|
| (Constant) | 0.885 | 0.696 | 1.272 | 0.232 |
| X1 (traditional advertising) | 2.254 | 0.135 | 16.686 | 0.000 |

Regression output: using x1 and x2

x1 and x2 are no longer individually significant, although the overall F test is still highly significant.

Model summary

| Model | R | R² | Adjusted R² | Std. error of the estimate |
|---|---|---|---|---|
| 1 | 0.987 | 0.974 | 0.968 | 0.8916 |

ANOVA

| Source | SS | df | MS | F | Sig. |
|---|---|---|---|---|---|
| Regression | 267.846 | 2 | 133.923 | 168.483 | 0.000 |
| Residual | 7.154 | 9 | 0.795 | | |
| Total | 275.000 | 11 | | | |

Coefficients

| Term | B | Std. error | t | Sig. |
|---|---|---|---|---|
| (Constant) | 1.992 | 0.902 | 2.210 | 0.054 |
| X1 (traditional advertising) | 0.767 | 0.868 | 0.884 | 0.400 |
| X2 (internet advertising) | 1.275 | 0.737 | 1.730 | 0.118 |

Multicollinearity

Multicollinearity exists when two or more of the independent variables are moderately or highly correlated with each other:

$x_i = \alpha_0 + \alpha_1 x_j + \dots + \alpha_p x_{j+p} + \varepsilon, \quad j + p < k, \quad i \neq j, j+1, \dots, j+p$

In the extreme case of perfect correlation among some of the independent variables, the OLS estimates cannot be computed.

In practice, (highly) correlated independent variables contribute too much redundant information, which prevents isolating the effect of each single independent variable on y. Confusion is often the result.

High levels of multicollinearity:

a) inflate the variance of the β estimates;
b) can make regression results misleading and confusing.

Detecting Multicollinearity

The following are indicators of multicollinearity:

1. Significant correlations between pairs of independent variables in the model (sufficient but not necessary).
2. Nonsignificant t-tests for all (or nearly all) of the individual β parameters when the F test for model adequacy, $H_0: \beta_1 = \beta_2 = \dots = \beta_k = 0$, is significant.
3. Estimated parameters with signs opposite to what is expected.
4. A variance inflation factor (VIF) greater than 10 for a β parameter.

The VIFs can be calculated in SPSS by selecting “Collinearity diagnostics” in the “Statistics” options in the “Regression” dialog box.

A typical situation

Multicollinearity can arise when transforming variables, e.g. when using $x_1$ and $x_1^2$ in the regression equation and the range of values of $x_1$ is limited.

| X | X square |
|---|---|
| 1.0 | 1.00 |
| 1.2 | 1.44 |
| 1.4 | 1.96 |
| 1.6 | 2.56 |
| 1.8 | 3.24 |
| 2.0 | 4.00 |
| 2.2 | 4.84 |
| 2.4 | 5.76 |
| 2.6 | 6.76 |
| 2.8 | 7.84 |
| 3.0 | 9.00 |
| 3.2 | 10.24 |
| 3.4 | 11.56 |
| 3.6 | 12.96 |
| 3.8 | 14.44 |
| 4.0 | 16.00 |

Cor(x, x²) = 0.987

[Figure: scatter plot of X square against X]

Remember: if multicollinearity is present but not excessive (no high correlations, no VIFs above 10), you can ignore it. Each variable provides enough independent information for its value to be assessed.

If your main goal is prediction (using the available explanatory variables to predict the response), you can safely ignore the multicollinearity.

If your main goal is explaining relationships, the multicollinearity may be a problem because the measured effects can be misleading.

Some solutions to Multicollinearity

- Get more data if you can.
- Drop one or more of the correlated independent variables from the final model. A screening procedure such as stepwise regression may help in deciding which variable to drop.
- If you keep all the independent variables, be cautious in interpreting parameter values and keep predictions within the range of your data.
- Use ridge regression (not covered in this course).
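As a rough illustration of the VIF indicator, the sketch below recomputes the correlation between x and x² for the 16 tabulated values (x = 1.0, 1.2, ..., 4.0) and turns it into a VIF. It relies on the standard definition $\mathrm{VIF}_j = 1 / (1 - R_j^2)$, where $R_j^2$ comes from regressing predictor j on the other predictors; with only two predictors $R_j^2$ is simply the squared pairwise correlation. The helper names `pearson` and `vif_two_predictors` are made up for this example, and SPSS is not involved.

```python
from math import sqrt

def pearson(u, v):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(u)
    mean_u, mean_v = sum(u) / n, sum(v) / n
    s_uv = sum((a - mean_u) * (b - mean_v) for a, b in zip(u, v))
    s_uu = sum((a - mean_u) ** 2 for a in u)
    s_vv = sum((b - mean_v) ** 2 for b in v)
    return s_uv / sqrt(s_uu * s_vv)

def vif_two_predictors(u, v):
    """VIF shared by both predictors in a model with exactly two predictors."""
    r = pearson(u, v)
    return 1.0 / (1.0 - r * r)

# The 16 tabulated values: x = 1.0, 1.2, ..., 4.0 and their squares.
x = [1.0 + 0.2 * i for i in range(16)]
x_sq = [xi ** 2 for xi in x]

r = pearson(x, x_sq)
vif = vif_two_predictors(x, x_sq)

print(f"Cor(x, x^2) = {r:.3f}")  # should agree with the 0.987 quoted in the text
print(f"VIF         = {vif:.1f}")
print("Above the rule-of-thumb threshold of 10?", vif > 10)
```

Even though x and x² are linked by an exact quadratic rule, over the short range 1 to 4 the relation is close enough to linear that the VIF ends up well above 10.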
Some solutions to multicollinearity (continued)

If the multicollinearity is introduced by the use of higher-order models (e.g. using $x$ and $x^2$, or $x_1$, $x_2$ and $x_1 x_2$), express the independent variables as deviations from their mean.

Example: suppose multicollinearity is present in

$E(Y) = \beta_0 + \beta_1 x + \beta_2 x^2$

1) Compute $x^* = x - \bar{x}$, where $\bar{x}$ is the mean of x.
2) Run the regression $E(Y) = \beta_0 + \beta_1 x^* + \beta_2 (x^*)^2$.

In most cases multicollinearity is greatly reduced. Clearly, the β parameters of the new regression have different values and a different meaning.

Example: Shipping costs (continued)

A company conducted a study to investigate the relationship between the cost of shipment and the variables that control the shipping charge: weight and distance.

- Y: cost of shipment, in dollars
- X1: package weight, in pounds
- X2: distance shipped, in miles

Nonlinear effects are suspected, so let us analyze the model

Model 1: $E(Y) = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_3 x_1 x_2 + \beta_4 x_1^2 + \beta_5 x_2^2$

Data: Express.sav

Matrix scatter-plot

A matrix scatterplot shows all the bivariate scatter plots for the selected variables at once. Use it as a preliminary screening tool. The matrix is symmetric, so it is enough to look at the lower triangle.

In SPSS, choose the “Matrix” option from the “Scatter/Dot” graph and enter the variables of interest.

[Figure: matrix scatter plot of the Express.sav variables]

Note the obvious quadratic relation between some of the variables, which over the observed range is very close to linear.

Correlation matrix

Each cell shows the Pearson correlation with its two-tailed significance in parentheses; N = 20 for every pair.

| | Weight of parcel (lbs) | Distance shipped | Cost of shipment | Weight squared | Distance squared | Weight × Distance |
|---|---|---|---|---|---|---|
| Weight of parcel (lbs) | 1 | 0.182 (0.444) | 0.774** (0.000) | 0.967** (0.000) | 0.151 (0.524) | 0.820** (0.000) |
| Distance shipped | 0.182 (0.444) | 1 | 0.695** (0.001) | 0.202 (0.393) | 0.980** (0.000) | 0.633** (0.003) |
| Cost of shipment | 0.774** (0.000) | 0.695** (0.001) | 1 | 0.799** (0.000) | 0.652** (0.002) | 0.989** (0.000) |
| Weight squared | 0.967** (0.000) | 0.202 (0.393) | 0.799** (0.000) | 1 | 0.160 (0.500) | 0.821** (0.000) |
| Distance squared | 0.151 (0.524) | 0.980** (0.000) | 0.652** (0.002) | 0.160 (0.500) | 1 | 0.590** (0.006) |
| Weight × Distance | 0.820** (0.000) | 0.633** (0.003) | 0.989** (0.000) | 0.821** (0.000) | 0.590** (0.006) | 1 |

** Correlation is significant at the 0.01 level (2-tailed).

Each term is individually strongly related to Y (see the Cost of shipment row).

Model 1: VIF statistics

A VIF statistic larger than 10 is usually considered an indicator of substantial collinearity.

Coefficients

| Term | B | Std. error | t | Sig. | VIF |
|---|---|---|---|---|---|
| (Constant) | 0.827 | 0.702 | 1.178 | 0.259 | |
| Weight of parcel in lbs. | -0.609 | 0.180 | -3.386 | 0.004 | 20.031 |
| Distance shipped | 0.004 | 0.008 | 0.503 | 0.623 | 35.526 |
| Weight squared | 0.090 | 0.020 | 4.442 | 0.001 | 17.027 |
| Distance squared | 1.507E-5 | 0.000 | 0.672 | 0.513 | 28.921 |
| Weight × Distance | 0.007 | 0.001 | 11.495 | 0.000 | 12.618 |

Model 2: Using the independent variables as deviations from their mean

Coefficients

| Term | B | Std. error | t | Sig. | VIF |
|---|---|---|---|---|---|
| (Constant) | 5.467 | 0.216 | 25.252 | 0.000 | |
| X1star (centered weight) | 1.263 | 0.042 | 30.128 | 0.000 | 1.087 |
| X2star (centered distance) | 0.038 | 0.001 | 27.563 | 0.000 | 1.081 |
| X1x2star (product of centered variables) | 0.007 | 0.001 | 11.495 | 0.000 | 1.095 |
| X1star2 (centered weight, squared) | 0.090 | 0.020 | 4.442 | 0.001 | 1.113 |
| x2star2 (centered distance, squared) | 1.507E-5 | 0.000 | 0.672 | 0.513 | 1.120 |

The squared distance term (x2star2, Sig. = 0.513) seems actually irrelevant: drop it.

Note: the multicollinearity problems have disappeared.

Note: adjusted R-square, the ANOVA table and the predictions are the same for the two models (check).
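The check suggested in the last note can be sketched on a small quadratic model. Express.sav is not reproduced here, so the example below uses made-up data: an x grid from 1.0 to 4.0 and a hypothetical response `y`. It fits $E(Y) = \beta_0 + \beta_1 x + \beta_2 x^2$ once with raw x and once with x expressed as deviations from its mean, then compares fitted values and VIFs. The helpers `solve`, `fit_quadratic` and `vif_x_vs_square` are illustrative names, and the normal-equation solver is only a minimal stand-in for a statistics package.

```python
from math import sqrt

def solve(a, b):
    """Solve the square linear system a * coef = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda k: abs(m[k][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for row in range(col + 1, n):
            f = m[row][col] / m[col][col]
            for c in range(col, n + 1):
                m[row][c] -= f * m[col][c]
    coef = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * coef[j] for j in range(i + 1, n))
        coef[i] = (m[i][n] - tail) / m[i][i]
    return coef

def fit_quadratic(x, y):
    """OLS fit of y = b0 + b1*x + b2*x^2 via the normal equations; returns (beta, fitted)."""
    rows = [[1.0, xi, xi * xi] for xi in x]
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(3)]
    beta = solve(xtx, xty)
    fitted = [sum(b * v for b, v in zip(beta, r)) for r in rows]
    return beta, fitted

def vif_x_vs_square(x):
    """VIF of x (and of x^2) in a model whose only predictors are x and x^2."""
    sq = [xi * xi for xi in x]
    n = len(x)
    mx, ms = sum(x) / n, sum(sq) / n
    s_xs = sum((a - mx) * (b - ms) for a, b in zip(x, sq))
    s_xx = sum((a - mx) ** 2 for a in x)
    s_ss = sum((b - ms) ** 2 for b in sq)
    r = s_xs / sqrt(s_xx * s_ss)
    return 1.0 / (1.0 - r * r)

# Hypothetical data (not from Express.sav): a quadratic trend plus a small
# deterministic perturbation so that the fit is not exact.
x = [1.0 + 0.2 * i for i in range(16)]
y = [2.0 + 0.5 * xi + 0.3 * xi ** 2 + 0.1 * (-1) ** i for i, xi in enumerate(x)]

mean_x = sum(x) / len(x)
x_star = [xi - mean_x for xi in x]  # deviations from the mean

beta_raw, fitted_raw = fit_quadratic(x, y)
beta_centered, fitted_centered = fit_quadratic(x_star, y)

max_diff = max(abs(a - b) for a, b in zip(fitted_raw, fitted_centered))
vif_raw = vif_x_vs_square(x)
vif_centered = vif_x_vs_square(x_star)

print("raw coefficients     :", [round(b, 4) for b in beta_raw])
print("centered coefficients:", [round(b, 4) for b in beta_centered])
print("largest difference between fitted values:", max_diff)
print("VIF raw:", round(vif_raw, 2), "| VIF centered:", round(vif_centered, 2))
```

The two fits give the same predictions and the same coefficient on the squared term, which mirrors the SPSS output above, where the squared and interaction terms keep their estimates while the intercept and linear terms change. On this symmetric grid the centered VIF is essentially 1; with real, asymmetric data centering typically reduces the VIF substantially without bringing it exactly to 1.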