Lecture 4

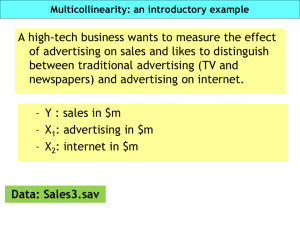

Quantitative Methods

Multicollinearity

What is multicollinearity? Multicollinearity is shared variance across independent variables. An example: predicting an individual’s view on tax cuts with both years of education and annual income.

What is the consequence of multicollinearity? Multicollinearity makes it more difficult to come up with robust (or efficient, or stable) parameter estimates. In other words, it’s more difficult to determine the effect of income (on attitudes toward tax cuts) separate from the effect of education (on attitudes toward tax cuts).

This makes sense. Imagine you go out into the quad and ask undergraduate students their views on Governor Jindal. Will you be able to easily separate out the effect of “age” from the effect of “year at LSU”? There will be some variance, because there are older students and because only some students graduate in 4 years. The two variables are not “perfectly collinear”. But it will be difficult to figure out whether age has an effect independent of “year at LSU”.

Multicollinearity across variables means that you are less sure of your estimates of the effects (of age and year in school, for instance), so you expect those estimates could be much different from sample to sample. The standard errors are therefore potentially larger than they would be otherwise.

What does that mean in terms of OLS? Recall that to calculate the estimate for a particular X in multivariate OLS, you are using the information left over in Y after you have predicted Y with all the other independent variables, as well as the information left over in that particular X after you have predicted that X with all the other independent variables.

And recall that the “standard error” of b taps into the stability, robustness, and efficiency of the parameter estimate “b”.
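The idea that collinear predictors make a slope estimate less stable from sample to sample can be checked with a small simulation. The sketch below is only illustrative: the true slopes (0.5 each), the sample size, the number of repeated samples, and the two correlation levels are all assumptions chosen for the demonstration, and the function names are hypothetical.

```python
import math
import random
import statistics


def ols_slope_x1(x1, x2, y):
    """OLS slope on x1 in y = a + b1*x1 + b2*x2 + e (closed form for two predictors)."""
    m1, m2, my = statistics.fmean(x1), statistics.fmean(x2), statistics.fmean(y)
    s11 = sum((u - m1) ** 2 for u in x1)
    s22 = sum((v - m2) ** 2 for v in x2)
    s12 = sum((u - m1) * (v - m2) for u, v in zip(x1, x2))
    s1y = sum((u - m1) * (w - my) for u, w in zip(x1, y))
    s2y = sum((v - m2) * (w - my) for v, w in zip(x2, y))
    return (s22 * s1y - s12 * s2y) / (s11 * s22 - s12 ** 2)


def slope_spread(rho, n=200, reps=300, seed=0):
    """Mean and standard deviation of the estimated slope on x1 over repeated samples.

    rho is the (assumed) population correlation between x1 and x2.
    """
    rng = random.Random(seed)
    slopes = []
    for _ in range(reps):
        x1 = [rng.gauss(0, 1) for _ in range(n)]
        # Build x2 so that it correlates with x1 at roughly rho.
        x2 = [rho * u + math.sqrt(1 - rho ** 2) * rng.gauss(0, 1) for u in x1]
        # Hypothetical "true" model: intercept 1, both slopes 0.5.
        y = [1 + 0.5 * u + 0.5 * v + rng.gauss(0, 1) for u, v in zip(x1, x2)]
        slopes.append(ols_slope_x1(x1, x2, y))
    return statistics.fmean(slopes), statistics.stdev(slopes)


low_mean, low_sd = slope_spread(rho=0.1)
high_mean, high_sd = slope_spread(rho=0.95)
print(f"rho=0.10: mean slope {low_mean:.3f}, spread across samples {low_sd:.3f}")
print(f"rho=0.95: mean slope {high_mean:.3f}, spread across samples {high_sd:.3f}")
```

Under these assumptions the spread of the slope across samples should be noticeably larger in the high-correlation case, which is what a larger standard error is describing.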
In other words, the standard error represents the degree to which you expect the estimate to remain stable over repeated sampling.

What do large standard errors mean?

Smaller t scores (because the t is calculated by dividing the parameter estimate by the standard error).

Larger confidence intervals (recall that if you took an infinite number of samples and calculated a 95% confidence interval for each one, you would expect the “true slope” to fall within 95% of those confidence intervals).

Less statistically significant results.

But note that the “b”s, the actual parameter estimates, are not biased. In other words, over repeated sampling, you’d expect that your parameter estimates would average out to the “true slope”.

How do we test for multicollinearity? Calculate auxiliary R-squareds. Take each independent variable, regress it on all the other independent variables, and save the R-squareds. These are the “auxiliary R-squareds”, which represent the variance in each independent variable that is explained (or used up) by all the others.

If the auxiliary R-squared for a variable is high, there isn’t much information (variance) left over to estimate the effect of that variable.

So, for example, back to the “opinion of Governor Jindal”. Imagine your independent variables were gender, race, political party, ideology, age, science major dummy variable, humanities major dummy variable, social science major dummy variable, business major dummy variable, and year in school.

Which subsets of these variables would seem to “covary” together? If you regress year in school (as the dependent variable) on all the other independent variables, would you expect a high or low auxiliary R-squared?

What SAS, Stata, or SPSS will give you are actually “VIF” (variance inflation factor) scores. A VIF score is calculated by first computing a “tolerance” figure (1 minus the auxiliary R-squared), then dividing 1 by that tolerance. Anything above a .75 auxiliary R-squared is relatively high, so anything below a .25 tolerance is considered a sign of potentially high multicollinearity. And therefore, a VIF score greater than 4 is considered a sign of high multicollinearity.

What can one do about multicollinearity?

First, if you have significant effects, multicollinearity isn’t a problem (as a practical matter). Once you have concluded that you can reject the null hypothesis of “no effect”, it might be nice to be even more confident in that conclusion, but rejection is rejection, and asterisks are asterisks.

Second, if you don’t have significant effects, unfortunately, there is no way to know for sure whether it is because of multicollinearity. It could be that even if you had much more information to work with, you would still have insignificant effects. However, it’s reasonable to note to your reader that collinearity does exist, and it’s reasonable to point out the magnitude of your parameter estimates (perhaps by presenting predicted outcomes). We talked about the importance of predicted outcomes when we discussed non-linear relationships between x and y, but in general, talking about the actual predictions based on hypothetical cases is a pretty useful way to show your reader the substantive magnitude of the effects.
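The auxiliary R-squared, tolerance, and VIF steps described above can be sketched in a few lines. This is a minimal illustration, not what SAS, Stata, or SPSS do internally: the data are simulated, the variable names (`age`, `year_in_school`, `ideology`) and the helper functions are hypothetical, and the way age is tied to year in school is an assumption made only to produce collinearity.

```python
import random


def solve(A, b):
    """Solve the linear system A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def aux_r_squared(target, others):
    """R-squared from regressing `target` on the columns in `others`, with an intercept."""
    n = len(target)
    cols = [[1.0] * n] + others  # intercept column first
    xtx = [[sum(p * q for p, q in zip(ci, cj)) for cj in cols] for ci in cols]
    xty = [sum(p * t for p, t in zip(ci, target)) for ci in cols]
    beta = solve(xtx, xty)
    fitted = [sum(bk * col[i] for bk, col in zip(beta, cols)) for i in range(n)]
    mean_t = sum(target) / n
    ss_res = sum((t - f) ** 2 for t, f in zip(target, fitted))
    ss_tot = sum((t - mean_t) ** 2 for t in target)
    return 1.0 - ss_res / ss_tot


def vif_table(data):
    """Map each variable name to (auxiliary R-squared, tolerance, VIF)."""
    table = {}
    for name, col in data.items():
        others = [c for k, c in data.items() if k != name]
        r2 = aux_r_squared(col, others)
        tolerance = 1.0 - r2
        table[name] = (r2, tolerance, 1.0 / tolerance)
    return table


# Simulated (made-up) student data: age tracks year in school closely.
rng = random.Random(1)
year = [float(rng.randint(1, 4)) for _ in range(300)]
data = {
    "year_in_school": year,
    "age": [17 + yr + rng.gauss(0, 0.5) for yr in year],
    "ideology": [rng.gauss(0, 1) for _ in range(300)],
}

vif = vif_table(data)
for name, (r2, tol, v) in vif.items():
    print(f"{name:15s} aux R2 = {r2:.2f}  tolerance = {tol:.2f}  VIF = {v:.2f}")
```

With data built this way, age and year in school would be expected to show VIF scores above the cutoff of 4 mentioned above, while ideology should sit near 1.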
There aren’t that many “solutions” to multicollinearity. Sometimes, it’s appropriate to combine independent variables into an index (e.g., “socioeconomic status”). And there’s the option of gathering more data: multicollinearity is essentially an information problem, and so more information is always useful. However, that obviously isn’t always feasible.

Dummy Variables

Why would we use dummy variables? Take religion or major as an example: why can’t we use an interval-level variable?

Rule #1 with dummy variables: we need to leave a comparison category out. (Why? Why can’t we include “male” and “female” in the same equation?)

Rule #2 with dummy variables: all the parameter estimates are interpreted relative to that omitted comparison category.

Rule #3 with dummy variables: the parameter estimates essentially work to change the intercept.

Interactions

Interactions are useful when you believe that the effect of one variable depends on the value of another. For example, you might believe that “gender” influences the types of bills that legislators introduce, but that the effect of gender becomes less pronounced as the number of women in the legislature increases.

In that case, you would include in a model gender (probably coded as 1 = female), the % (or number) of women in the legislature, and an interaction calculated as % women in legislature multiplied by gender.

Let’s think about this in terms of the actual equation.

X1 = gender

X2 = % women in legislature

X3 = interaction (= gender * % women in legislature)

Y = a + b1 * X1 + b2 * X2 + b3 * X3 + e

Predicted Y = a + b1 * X1 + b2 * X2 + b3 * X3

What is predicted Y if the legislator is male? (What is the only variable that really factors into the prediction for male legislators?) What is predicted Y if the legislator is female? What is the slope for male legislators? What is the slope for female legislators? What is the intercept for male legislators? What is the intercept for female legislators?

So, the “b3” (the estimated effect) for the interaction variable represents the additional effect of “percentage women in the legislature” for women. And the “b1” (the estimated effect) for the dummy variable for “female” represents the change in intercept for women (compared to men).

A couple of practical points, and then an example.

First, there are no legislatures with 0 women. Therefore, the dummy variable for women can never be interpreted in isolation from the interaction. And the interaction can’t really be interpreted in isolation from the dummy variable. Given that, there are joint tests of significance, which we’ll talk about next week, based on our example.

Second, could you have a model with only the interaction term, or perhaps only the interaction term plus one of the component variables, if your theory indicated that the other variable(s) X1 and/or X2 were not relevant? I would not advise it. It’s possible that X1 and X2 are just randomly “significant”, in which case the interaction will look significant, but it might not be if you include them in the model. You really don’t know if the interaction is significant unless you control for X1 and X2.

Third, you do have a lot of multicollinearity with interactions. So, if you suspect that multicollinearity is a problem, it’s sometimes useful to tell your reader (in a footnote, or in additional columns in a table) what the results looked like if you dropped one or both of the variables, or dropped the interaction.

And now the example. There’s been a lot of debate over the selection system for judges: partisan election versus non-partisan election versus appointment.
In the paper I’m handing out, the authors examine whether individuals in a state with a partisan election system are less confident in courts, and whether the effect of the election system depends on the person’s education level. (Essentially, they argue that the partisan election system only has an effect among those who are well educated and actually know what selection system is used.)

Additional work has examined whether the effect of “partisan election system” on confidence in the courts depends on the degree to which the selection system produces a court that is unbalanced (skewed Democratic or Republican) or not representative of the state’s partisan breakdown.

So let’s look at the tables.
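When reading interaction coefficients in tables like these, it can help to have the algebra from the legislator model written out. Using the same symbols as in that equation (X1 a 0/1 dummy, X2 a continuous variable, X3 = X1 * X2), substituting the two values of the dummy into the prediction equation gives:

$$
\begin{aligned}
X_1 = 0:\quad & \hat{Y} = a + b_2 X_2 \\
X_1 = 1:\quad & \hat{Y} = (a + b_1) + (b_2 + b_3)\, X_2
\end{aligned}
$$

So b1 shifts the intercept and b3 shifts the slope on X2 for the group coded 1. The same reading would apply to the paper if one variable is a dummy for a partisan election system and the other is education, though that coding is an assumption here and should be checked against the tables themselves.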