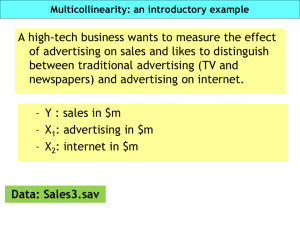

Lecture 16: Multicollinearity BUEC 333 Professor David Jacks


The classical assumptions

The six assumptions of the CLRM:
1.) The regression model is correctly specified: a.) it has the correct functional form and b.) it has an additive error term.
2.) The error term has zero population mean, or E(εi) = 0.
3.) All independent variables are uncorrelated with the error term, or Cov(Xi, εi) = 0, for each independent variable Xi.
4.) No independent variable is a perfect linear function of any other independent variable.
5.) Errors are uncorrelated across observations, or Cov(εi, εj) = 0 for any two distinct observations i and j.
6.) The error term has a constant variance, or Var(εi) = σ² for every i.

Violating the classical assumptions

As always, we want to know:
1.) What is the nature of the problem?
2.) What are the consequences of the problem?
3.) How is the problem diagnosed?
4.) What remedies for the problem are available?

We now consider these in turn…

Collinearity

If a variable X1 can be written as a perfect linear function of X2, X3, ..., then we say these variables are perfectly collinear. When this is true of more than one independent variable, they are said to be perfectly multicollinear. Perfect collinearity presents technical problems

Example: suppose we want to estimate

Yi = β0 + β1X1i + β2X2i + εi

where X1 = 2X2 + 5. That is, X1 and X2 are perfectly collinear: whenever X2 increases by one unit, we see X1 increase by two units and Y increase by 2β1 + β2.

In this case, if X1 is in the model, then X2 is completely redundant: it contains exactly the same information as X1. If we know the value of X1, we know the value of X2 exactly, and vice versa. Because of this, there is no unique solution to the least squares minimization problem.

It is quite rare that two independent variables have an exact linear relationship. And it is usually obvious when it does happen; e.g., “the dummy variable trap”.
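To make the non-uniqueness concrete, here is a minimal Python sketch of the example with X1 = 2X2 + 5. The data values, the starting coefficients, and the shift c are made up for illustration and do not come from the lecture. It shows two different coefficient vectors producing exactly the same fitted values, which is why least squares cannot choose between them.

```python
def fitted(b0, b1, b2, x1, x2):
    """Fitted values b0 + b1*X1 + b2*X2 for each observation."""
    return [b0 + b1 * a + b2 * b for a, b in zip(x1, x2)]

# Hypothetical data obeying the exact relationship X1 = 2*X2 + 5.
x2 = [1.0, 2.0, 3.0, 4.0, 5.0]
x1 = [2.0 * v + 5.0 for v in x2]

# One candidate set of coefficients (b0, b1, b2), chosen arbitrarily.
base_coefs = (1.0, 0.5, 2.0)

# Shift the coefficients along the collinear direction by any amount c:
# c * (X1 - 2*X2 - 5) is zero for every observation, so adding it
# changes the coefficients but not the fitted values.
c = 3.0
alt_coefs = (base_coefs[0] - 5.0 * c, base_coefs[1] + c, base_coefs[2] - 2.0 * c)

fit_base = fitted(*base_coefs, x1, x2)
fit_alt = fitted(*alt_coefs, x1, x2)

# Largest difference between the two sets of fitted values.
max_gap = max(abs(a - b) for a, b in zip(fit_base, fit_alt))
print("coefficients:", base_coefs, "vs", alt_coefs)
print("largest gap in fitted values:", max_gap)
```

Because the fitted values coincide for every choice of c, the sum of squared residuals is identical along a whole line of coefficient vectors, and no single minimizer exists.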
However, it is very common in economic data that two (or more) independent variables are strongly, but not exactly, related.

Types of collinearity

Example of perfect collinearity: X1i = α0 + α1X2i

Example of imperfect collinearity: X1i = α0 + α1X2i + ζi, where ζi is a stochastic error term.

[Figures: perfect and imperfect collinearity, illustrating strongly but not exactly related variables]

Another way of “seeing” collinearity

Basic model from Lecture 8: Yi = β0 + β1Xi + εi

[Figure: two overlapping circles labeled Y and X]

Y represents variation in Yi; X represents variation in Xi. The overlap between the two (in green) represents variation that Y and X have in common.

Now think about this a little more:
1.) If the information (the green area) corresponds to variation in Y uniquely explained by variation in X, the resulting estimate of β1 is unbiased.
2.) A larger green area means more information, implying a smaller variance for the estimate of β1.

What does this have to do with collinearity? Imagine we introduce another regressor:

Yi = β0 + β1Xi + β2Zi + εi

[Figure: three overlapping circles labeled Y, X, and Z, with overlap regions labeled a, b, and c]

Interpretation of circles remains the same as does running separate regressions.

In particular, what does OLS do with “b”? Continue to use it to estimate both the betas? Throw it away and just use “a” and “c”? Or somehow divide “b” into parts?

Finally, what does greater collinearity imply?

[Figures: two versions of the Y, X, Z circle diagram]

Multicollinearity

In many cases, theory and intuition tell us our variables will be strongly related, so we should already be aware of potential problems. When in doubt, we can look at the sample correlation between independent variables to detect perfect/imperfect multicollinearity.

So what does multicollinearity (not) do for/to us?

1.) Least squares estimates are still unbiased.
Recall that only Assumptions 1 through 3 of the CLRM (correct specification, zero expected error, and exogenous independent variables) are required for the OLS estimator to be unbiased.

Consequences of multicollinearity

So if that is the case, why is it problematic?

2.) Least squares estimates will have larger standard errors.

We are trying to estimate the marginal effects of independent variables, holding the other independent variables constant. But when two independent variables move together, we rarely observe one changing while the other stays fixed. Consequently, there is very little information in the data about the parameters we are trying to estimate…and precision suffers.

Consider the formula for the standard error in a model with two independent variables:

$$\text{s.e.}(\hat{\beta}_1) = \sqrt{\frac{\sum_i e_i^2 / (n - k - 1)}{\sum_i (X_{1i} - \bar{X}_1)^2 \, (1 - r_{12}^2)}}$$

where the e_i are the residuals and r_12 is the sample correlation between X1 and X2. The closer r_12² is to 1, the larger the standard error.

This has obvious implications for significance…

3.) The computed t-scores will be smaller.

Think about what 1.) and 2.) imply for the sampling distribution of the least squares estimates.

Thus, we are more likely to obtain estimates that are “far” from the true parameter values. Because the estimates are imprecise, we have a difficult time rejecting any null hypothesis. Why? Even when the null is true, we could easily find an estimate far from the hypothesized value.

This has less obvious implications for inference…

4.) Small changes in input lead to large changes in output.

In the presence of multicollinearity, adding/deleting an independent variable or adding/deleting observations can have big effects on the parameter estimates.

Think about the sampling distribution of the least squares estimates: they are very spread out around the true coefficient values. Thus, in different samples/specifications, we are likely to get very different estimates.
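As a rough numerical illustration of the standard-error formula for the two-regressor model, the sketch below assumes its usual textbook form, s.e.(β1-hat) = sqrt[(Σe² / (n − k − 1)) / (Σ(X1i − X̄1)² (1 − r12²))], and evaluates it at several values of r12. The function name and all input numbers are hypothetical.

```python
import math

def se_beta1(ssr, n, k, sst_x1, r12):
    """Standard error of beta1-hat with two regressors (assumed textbook form).

    ssr:    sum of squared residuals
    n, k:   number of observations and of independent variables
    sst_x1: sum of squared deviations of X1 from its sample mean
    r12:    sample correlation between X1 and X2
    """
    return math.sqrt((ssr / (n - k - 1)) / (sst_x1 * (1.0 - r12 ** 2)))

# Hypothetical inputs, held fixed while only r12 changes.
ssr, n, k, sst_x1 = 200.0, 103, 2, 50.0

baseline = se_beta1(ssr, n, k, sst_x1, 0.0)
for r12 in (0.0, 0.6, 0.9, 0.99):
    se = se_beta1(ssr, n, k, sst_x1, r12)
    print(f"r12 = {r12:4.2f}  s.e. = {se:.3f}  ratio to r12 = 0: {se / baseline:.2f}")
```

Holding the residual variance and the variation in X1 fixed, the standard error grows without bound as r12 approaches 1 in absolute value, which is the sense in which collinearity costs precision without introducing bias.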
Because there is so little independent variation in the independent variables

In this case, small changes in sample/specification that affect these small differences (even a little bit) get a lot of weight in the estimates. For example, if two variables are almost identical, the OLS estimator relies on the one or two observations where they move differently to distinguish between them; e.g., shoe sizes.

But what about everything else in the regression?

5.) Nothing else is affected dramatically.

The overall fit of the equation (R², adjusted R², the F-test) is not vitally affected by multicollinearity; nor are any related predictions.

Additionally, coefficient estimates for any independent variables that are uncorrelated with the collinear variables will be unaffected. Consider the following model:

Yi = β0 + β1X1i + β2X2i + β3X3i + εi

Supposing that X1 and X2 are highly collinear but X3 is uncorrelated with them, the least squares

Example: salaries in the NHL

Previously, we considered the effects of POINTS (GOALS + ASSISTS) on NHL salaries.

What would happen if we included ASSISTS (= POINTS – GOALS)?

Finally, what would happen if we included ASSISTS and GOALS (= POINTS – ASSISTS)?

This would have been an obvious problem if we had first checked the correlations among the independent variables.

Detecting multicollinearity

It is important to keep in mind that most economic variables are correlated to some degree. That is, we invariably face some multicollinearity in every regression that we run. The key questions become how much there is and whether it is enough to cause problems.

The rule of thumb? If the sample correlation between two independent variables is greater than 0.80 in absolute value, then severe collinearity is present.
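The correlation rule of thumb can be sketched in a few lines of Python. The variable names, the data, and the helper functions below are hypothetical; only the 0.80 cutoff comes from the lecture.

```python
import math
from itertools import combinations

def pearson(x, y):
    """Sample (Pearson) correlation between two equal-length lists."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def flag_collinear(columns, threshold=0.80):
    """Return (name_a, name_b, r) for every pair with |r| above the threshold."""
    flagged = []
    for (name_a, a), (name_b, b) in combinations(columns.items(), 2):
        r = pearson(a, b)
        if abs(r) > threshold:
            flagged.append((name_a, name_b, r))
    return flagged

# Hypothetical regressors: x1 is an exact linear function of x2,
# while x3 is constructed to be uncorrelated with both.
regressors = {
    "x1": [7.0, 9.0, 11.0, 13.0, 15.0],
    "x2": [1.0, 2.0, 3.0, 4.0, 5.0],
    "x3": [1.0, -2.0, 2.0, -2.0, 1.0],
}

flagged = flag_collinear(regressors)
for name_a, name_b, r in flagged:
    print(f"{name_a} and {name_b}: r = {r:.2f} exceeds the 0.80 rule of thumb")
```

This check only looks at pairs of variables, so it inherits the limitation of any pairwise screen.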
Potential problem: if the collinear relationship involves more than two independent variables, you may not detect it this way. Thus, we need a sense of the degree to which a particular independent variable moves

Variance inflation factors (VIFs) gauge the extent to which one independent variable can be explained by the others. They measure how much the variance of a coefficient has been increased because of multicollinearity.

Begin with your standard regression model:

Yi = β0 + β1X1i + … + βkXki + εi

That is, regress each independent variable Xji on all the other independent variables (here, k = 3):

X1i = α0 + α1X2i + α2X3i + εi

Collect the R² of this regression and call it R1². Now, compute the VIF:

VIF(X1) = 1 / (1 – R1²)

How to make sense of the VIFs? In the auxiliary regression, if:
a.) Rj² = 0, then there is no multicollinearity problem as VIF(Xj) = 1 / (1 – 0) = 1; and βj-hat’s variance has been “inflated” by a factor of 1.
b.) Rj² = 1, then there is perfect multicollinearity as VIF(Xj) = 1 / (1 – 1) = ∞; and βj-hat’s variance has been inflated by an infinite factor.

And there are other problems with using VIFs:
1.) There is no hard and fast decision rule; that is, there is no table of formal critical values for the VIF to look up.
2.) There can still be severe multicollinearity in the presence of small VIFs.

Final word on multicollinearity

Bottom line: multicollinearity is bad but tolerable; but what are the potential remedies?
1.) Get more data: there is not enough independent variation to separately identify marginal effects… more data = more variation = less variance.
2.) Do nothing: we know our estimates are still
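Returning to the VIF procedure described under "Detecting multicollinearity", the following is a minimal pure-Python sketch of it: regress one independent variable on the others, collect the R², and compute 1 / (1 – R²). The helper names and the data are hypothetical, and the small linear solver is just one simple way to fit the auxiliary regression; it assumes the other regressors are not themselves perfectly collinear.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        # Partial pivoting: move the largest entry in this column to the diagonal.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def r_squared(y, regressors):
    """R-squared from an OLS regression of y on an intercept and the regressors."""
    rows = [[1.0] + [col[i] for col in regressors] for i in range(len(y))]
    p = len(rows[0])
    # Normal equations: (X'X) beta = X'y.
    xtx = [[sum(r[a] * r[b] for r in rows) for b in range(p)] for a in range(p)]
    xty = [sum(r[a] * yi for r, yi in zip(rows, y)) for a in range(p)]
    beta = solve(xtx, xty)
    fitted = [sum(bj * xj for bj, xj in zip(beta, r)) for r in rows]
    y_bar = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot

def vif(columns, j):
    """VIF of column j: auxiliary regression of column j on all other columns."""
    others = [c for i, c in enumerate(columns) if i != j]
    gap = 1.0 - r_squared(columns[j], others)
    return float("inf") if gap < 1e-12 else 1.0 / gap

# Hypothetical data with k = 3 independent variables.
x1 = [-2.0, -1.0, 0.0, 1.0, 2.0]
x2 = [-1.0, -3.0, 2.0, -1.0, 3.0]   # partly related to x1
x3 = [2.0, -1.0, -2.0, -1.0, 2.0]   # uncorrelated with x1
columns = [x1, x2, x3]

for j in range(len(columns)):
    print(f"VIF(X{j + 1}) = {vif(columns, j):.3f}")
```

The guard in `vif` returns infinity when the auxiliary R² is numerically 1, matching case b.) in the interpretation of the VIFs.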