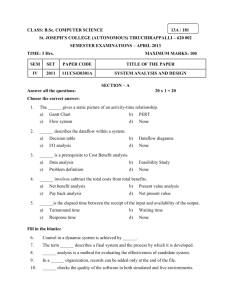

Car-radio architecture template

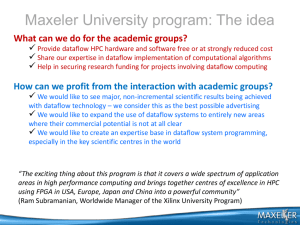

Programming many core systems

Marco Bekooij

Outline

Definition many core systems

Application domain of many core systems

Microsoft Parallel Computing Initiative

– simplify programming

– improve quality of service

Mapping stream processing on real-time multiprocessor systems

– Automatic parallelization

– Budget computation

– Multiprocessor system hardware design with budget enforcement

Conclusion

Definition many core system according to Intel’s

white paper

Many core systems are multiprocessor systems with a large number of

cores (>8)

– Many core systems have a shared address space and their resources are

under control of the operating system

Computing industry shifts and their effects

on user experience

Parallel computing and the next generation of

user experiences

Microsoft: many core applications

Next-Generation Personal Computing Experiences

– Personal modeling: e.g. “walk-through” 3-dimensional, photo-realistic

renderings of a home renovation

– Personalized adaptive learning: create a personalized, context-aware

curriculum in real-time.

– Public safety: detailed 2- or 3-dimensional renderings, object recognition,

help responders to make well-informed decisions critical to rescue tactics,

evacuations, and emergency response

Business Opportunities

– Financial modeling

– Product design simulation

Many core application example of NXP

Multi-stream multi-standard car-infotainment systems

– Advanced radios already contain 13 processors (10 DSPs + 3 µP) plus a

number of hardware accelerators

Beamforming

Improved radio reception

Microsoft Parallel Computing Initiative

Objectives:

• simplify parallel software development

• take quality-of-service requirements into account

Microsoft Parallel Computing Initiative

Applications: next experiences, improve productivity

Domain libraries: system building blocks, for example image-processing libraries

Programming models and languages: easy application development

without the need for expert knowledge

Developer tooling: simplify software integration

Runtime, platform, and operating systems: more effectively budget

and arbitrate among competing requests for available resources in the

face of parallelism and quality-of-service demands. Additionally,

Microsoft will continue to improve the reliability and security of the

platform.

NXP’s application domain: real-time stream

processing

[Figure: use-case with two jobs, digital radio job A and source decoding job B. Block labels: ADC, PDC, CFE, VIT, CBE, SRC, APP, BR, MP3, DAC; rate labels: f1, f2, f3.]

Software mapping flow for real-time stream

processing applications

[Figure: mapping flow. Off-line (at design-time): NLP, temporal constraints and architecture instance are the inputs; Omphale (parallelization) produces a task graph + dataflow graph; execution time analysis annotates them; Hebe (budget computation) produces a task graph + budgets; Helios (resource allocation) combines these with use-cases + transitions into a table with resource allocations. On-line (at run-time): start/stop job requests are handled by Minos (resource assignment), next to a preemptive kernel and a FIFO communication library.]

Run-time mapping of tasks to processors

with admission control per job

[Figure: the mapping flow without the Helios step. Off-line (at design-time): NLP, temporal constraints and architecture instance; Omphale (parallelization); task graph + dataflow graph; execution time analysis; Hebe (budget computation); task graph + budgets. On-line (at run-time): start/stop job requests are handled by Minos (resource assignment), next to a preemptive kernel and a FIFO communication library.]

Setting computation requires property

preserving abstraction

[Figure: the hardware (labels: DSP, P, $, External SDRAM, I/O, ctrl, mem, NI, Network) is abstracted into a dataflow model with actors v0, v1 and v2, which is the input of the budget computation.]

Experimental predictable many core system

[Figure: experimental system. Labels: Timer, Blaze, ROM, MEM, $, RS232, Aethereal NoC, SDRAM ctrl, SDRAM.]

Distributed shared memory system

– Pthread support

Budget scheduler for every shared resource

– processors, memory ports, interconnect

Flow control

– back-pressure

Mapped on a Virtex-4 FPGA

Heterogeneous many core system

[Figure: heterogeneous system. Labels: Timer, DSP, ROM, IMEM, DMEM, Blaze, MEM, $, RS232, Aethereal NoC, SDRAM ctrl, SDRAM.]

Heterogeneous for area-efficiency and

power-efficiency reasons

Streaming without addresses over the

network in addition to address-based streaming

Essential elements in the approach

Key assumption: characteristics of other jobs are not completely known

at design time:

– Other jobs are downloaded

– Worst-case execution times of the tasks are not known at design time

Essential elements

– Budget schedulers

– Flow control

Budget schedulers

[Figure: budget schedulers are a subset of all schedulers.]

Budget scheduler: subclass of the aperiodic server

– minimum budget in a replenishment interval is independent of the execution-time and

event arrival-rate

Budget reservation:

– incomplete knowledge: worst-case execution times of the tasks of other jobs are not

known

– overload protection: estimated execution times are optimistic

Budget scheduler example:

time division multiplex

x(j): execution time of the j-th execution, P: period, B: slice length
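
The effect of a time division multiplex budget on a single task can be sketched as follows. This is a minimal sketch, assuming the commonly used worst-case bound in which the task loses at most P − B time units for every slice it needs; the function name `tdm_worst_case_response` and the example numbers are illustrative and do not come from the slides.

```python
import math


def tdm_worst_case_response(x: float, period: float, slice_len: float) -> float:
    """Upper bound on the time between a task becoming ready and finishing.

    x         -- execution time of this execution, x(j) on the slide
    period    -- TDM period P
    slice_len -- slice length B reserved for the task in every period
    """
    if not 0 < slice_len <= period:
        raise ValueError("slice length must satisfy 0 < B <= P")
    if x < 0:
        raise ValueError("execution time must be non-negative")
    # Worst case: the task becomes ready just after its slice ended, so every
    # slice it needs is preceded by P - B time units owned by other tasks.
    slices_needed = math.ceil(x / slice_len)
    return x + slices_needed * (period - slice_len)


# A task of 3 time units with a slice of 2 in a period of 10 needs two slices.
print(tdm_worst_case_response(3, 10, 2))
```

The bound depends only on x, P and B, not on the behavior of the other tasks, which is what makes a budget scheduler usable when the other jobs are unknown at design time.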

Budget scheduler with priorities: PBS

• number of preemptions in a replenishment interval (RI) is fixed ⇒ preemption overhead is known

• maximum time between event and start of high priority task with budget

Flow control

Without flow control data can be lost ⇒ non-deterministic functional behavior

[Figure: producer P writes into a buffer that is read by consumer C; the buffer overflows.]

Buffer overflow can occur if:

– best-case execution time of producer P is overestimated

– worst-case execution time of consumer C is underestimated
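
The two overflow conditions above can be reproduced with a small discrete-time simulation. This is a minimal sketch under simplifying assumptions (integer time steps, fixed producer and consumer periods, a hypothetical function `run_pipeline`); it is not the flow-control mechanism of the platform itself.

```python
def run_pipeline(capacity, produce_period, consume_period, steps, back_pressure):
    """Simulate a producer and a consumer around a bounded buffer.

    Returns (tokens lost, tokens consumed) after the given number of steps.
    """
    fill = lost = consumed = 0
    next_produce = 0
    for t in range(steps):
        # The consumer removes one token every consume_period steps.
        if t % consume_period == 0 and fill > 0:
            fill -= 1
            consumed += 1
        # The producer wants to write as soon as its period has elapsed.
        if t >= next_produce:
            if fill < capacity:
                fill += 1
                next_produce = t + produce_period
            elif back_pressure:
                continue  # producer stalls and retries at the next step
            else:
                lost += 1  # no flow control: the token is dropped
                next_produce = t + produce_period
    return lost, consumed


# The consumer is twice as slow as the producer (its execution time was underestimated).
print(run_pipeline(2, 1, 2, 10, back_pressure=False))
print(run_pipeline(2, 1, 2, 10, back_pressure=True))
```

With back-pressure the producer is slowed down to the rate of the consumer and no token is lost, so the functional behavior no longer depends on the timing.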

Task graph and dataflow graph extraction

Extraction of a task graph is difficult

– Data dependency analysis

Derivation of a (dataflow) analysis model of an application is difficult and

error-prone

– No one-to-one correspondence between task graph and dataflow graph

Our approach: describe top-level of the application as a nested loop

program

– Allow while loops, if conditions, and non-affine index expressions

Nested loop programs (NLPs)

A nested loop program is specified in a coordination language

– Specifies dependencies (communication) between functions

– functions are defined in a programming language, e.g. C

– to simplify/enable parallelization

• many programming language constructs are not supported to simplify/enable

analysis

• new programming language constructs have been added to improve the

analyzability (and therefore NLPs are not a C subset)

Nested loop programs should be seen as a sequential specification of a

task graph

– single assignment

• each array location is written at most once during one execution of the outer

while loop

– functions must be side-effect free

Nested loop program example

mode=0;

while(1){

in=input();

switch(mode){

case 0: {mode=detect(in); }

case 1: {mode,o1=decode1(in); o2=decode2(o1); output(o2);}

}

}

Resulting task graph

[Figure: task graph with the tasks in, det, dec1, dec2 and out.]

Every function becomes a task

Buffers can have multiple readers

Buffers can have multiple mutually exclusive writers

– That writes are mutually exclusive is explicit in the NLP but not in the task graph
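
The relation between the example program and its task graph can be sketched as follows. This is a minimal sketch, assuming the function calls of the example have already been listed by hand as (task, variables read, variables written); the names `CALLS` and `build_task_graph` are hypothetical, and the control dependency of the `switch` on `mode` is deliberately left out.

```python
from collections import defaultdict

# One entry per function call in the nested loop program example.
CALLS = [
    ("input",   [],     ["in"]),
    ("detect",  ["in"], ["mode"]),
    ("decode1", ["in"], ["mode", "o1"]),
    ("decode2", ["o1"], ["o2"]),
    ("output",  ["o2"], []),
]


def build_task_graph(calls):
    """Map every variable to a buffer with its writer tasks and reader tasks."""
    writers = defaultdict(list)
    readers = defaultdict(list)
    for task, reads, writes in calls:
        for var in reads:
            readers[var].append(task)
        for var in writes:
            writers[var].append(task)
    names = sorted(set(writers) | set(readers))
    return {var: (writers[var], readers[var]) for var in names}


for var, (w, r) in build_task_graph(CALLS).items():
    print(f"buffer {var}: writers={w} readers={r}")
```

Buffer `in` ends up with two readers, and buffer `mode` with two writers that sit in different `case` branches; that these writers never run in the same iteration is visible in the program text but not in the resulting graph.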

Budget computation

Budgets are computed given real-time constraints

– only end-2-end constraints are imposed by the environment

• throughput + latency and not the deadlines of the tasks

Requires a suitable analysis model for real-time applications

– should take pipelining into account

• the i-th input sample is consumed before the (i-1)th output sample is produced

We apply dataflow analysis with measured (not worst-case) execution

times

Definition real-time system

Real-time systems are those systems in which the correctness of the

system depends not only on the logical results of computation, but also

on the time at which the results are produced.

Real-time analysis

Use of measured execution times instead of worst-case execution times

– Guarantees?

– Load hypothesis

Basics of dataflow analysis

Distinguishing features of real-time systems

High level of determinism:

– It should be possible to derive useful properties of the system, given the

stated assumptions and the information available with an acceptable effort

and a useful accuracy

Concurrency:

– Deal with the inherent physical concurrency

– Deal with a concurrent description of the system

– Deal with a concurrent implementation of the system

Emphasis and significance of reliability and fault tolerance:

– Reliability is the probability that a system will perform correctly over a given

period of time

– Fault tolerance is concerned with the recognition and handling of failures

Computer Assisted Control

Definition predictability

It should be possible to show, demonstrate, or prove that requirements

are met subject to assumptions made, for example, concerning failures

and workloads.

Note that:

– predictability is always subject to the underlying assumptions made

Real-time system classification

Note that:

• no deadlines are defined for best-effort tasks

• assumes that all tasks in the system have the same criticality

Criticality spectrum for systems

Hard RT

Very critical

Firm RT

Soft RT

Best effort

Not critical at all

Load hypothesis

Statement about the assumption of the peak load of the system

Often translates into an assumption about the worst-case execution times

of the tasks

Difference between guarantee and a

statistical assertion

A guarantee is an assurance of the fulfillment of a condition

– a guarantee is a binary statement

– guarantees about the reality are given under certain assumptions

Statistical assertion is a statement about a probability of an occurrence

Focus is on analysis techniques that result in guarantees

– guarantees are given under explicit and testable assumptions (in our case

the load hypothesis)

Research Schools

1. Real-time system theory should help to give guarantees about the temporal

behavior of the system

– Testing can provide only a partial verification of the behavior. This justifies the use of

analytical techniques that can provide complete coverage.

– Classical view of the real-time community

2. Real-time system theory should provide means to manage the system

resources such that the temporal behavior improves

3. Real-time system theory should provide means to compute system settings

4. Real-time system theory should provide means to reduce the verification effort

5. Real-time system theory should provide means to improve the robustness of a

system

Load hypothesis for firm real-time systems

Often execution times are measured instead of computed with WCET

tools

– reason: WCET tools are not available or computed WCETs are overly

pessimistic

Typically a load hypothesis is defined which states that the execution

times of the tasks are not larger than the WCETs used during analysis

Given that the load hypothesis holds we can guarantee with analysis

techniques that no deadlines are missed

If the load hypothesis does not hold then no statements can be made

about the worst-case temporal behavior of the system

Assumption coverage

Strength of materials theory

– Model is for example an approximation of a bridge

• E.g. the stiffness of the metal beam is intrinsically not exactly known, i.e. can be

worse or better

– However model can be a useful approximation of the reality

• Added safety margin (head room) is based on experience

Does the same reasoning apply to real-time system design?

False useful results?

Usefulness of real-time analysis results even

given unsafe execution time estimates

Deadlock freedom and functional determinism of the application

Estimates of the real-time behavior of the tasks

Estimates of appropriate system configuration and system settings

Trends and anomalies

Responsiveness improvement

Sensitivity reduction

Robustness improvement

Synchronization and scheduling overhead reduction

Focus of real-time analysis techniques

Single processor

– Focus is on task scheduling of independent tasks + OS-kernel design

Multiprocessor

– Focus is on throughput and latency analysis of applications described as

task graphs

• also synthesis of settings & budget such that throughput and latency constraints

are met

Formal models for real-time analysis

Process algebra

– Algebra for communicating processes

– Allows transformation of one system into another

Temporal logics

– Propositional logic augmented by tense operators

– System representation with global states becomes prohibitively large

Automata

– Mathematical model for finite state machines

– Synchrony timing hypothesis OR clocks

• instantaneous broad-cast

• system evolves faster than events

Petri nets

– Dataflow graphs have similarities with Petri nets

Classical timing verification techniques

Logic based approach

– Deductive proof: $I \Rightarrow S$, or decision procedure: $I \wedge \neg S$ is unsatisfiable

– Very high computational complexity and hard to automate

Automata based approach

– Language containment: $L_I \subseteq L_S$

– State explosion

Model checking

– State explosion

– High computational complexity

Outside scope of these formal verification

techniques

Techniques to include resource sharing

– effects of scheduling on the temporal behavior

Techniques to make an abstraction of the system while preserving properties

Techniques to synthesize properties instead of checking properties

Techniques to trade accuracy for lower computational complexity

Techniques to trade expressivity of the model for analyzability

Techniques to trade generality of the model for analyzability

However these techniques are essential for real-time multiprocessor system

design

– borrow ideas from performance analysis of communication networks

– Latency-rate analysis, dataflow analysis

Rate based analysis

Rate based analysis determines, loosely speaking, the throughput of the

system

Three approaches:

1. Graph-based techniques: maximum cycle mean analysis

2. Algebraic techniques: determine eigenvectors with max-plus algebra

3. Stochastic approaches: Markov process

Limitations:

– 1 and 2 assume fixed timing delays instead of intervals, while 3 computes the long-term

average throughput, which is not very useful for real-time systems

– supported models do not support any choice

– supported models do not support inputs and outputs

Still useful for real-time analysis purposes? (yes)

Are there solutions available to analyze data dependent applications? (yes)

Related work: worst-case performance

analysis of communication networks

Objective: compute maximum latency and minimum throughput for a flow of packets

Links between routers are shared by flows

No flow control: input buffers must be large enough such that overflow does not occur

Related work: worst-case performance

analysis

[R. Cruz, 1991]: A Calculus for Network Delay

– No flow control, does not require starvation-free schedulers

– Bound traffic for $t \ge 0$ with a non-decreasing function

[K. Tindell and J. Clark, 1994]: Holistic Schedulability Analysis

– No flow control, static priority preemptive

– fixed point iteration in case of cyclic resource dependencies

[D. Stiliadis et al., 1998]: Latency-Rate Servers: A General Model for Analysis of

Traffic Scheduling Algorithms

– No flow control

– Requires starvation-free schedulers ⇒ there are no cyclic resource dependencies

– System can be characterized without knowledge about the input traffic

• use of the concept of busy periods

– More accurate estimate of the end-to-end delay than [Cruz91] and [TC94]

[J.Y. Le Boudec, 1998]: Application of Network Calculus to Guaranteed Service

Networks

– No flow control

– Does not require schedulers to be starvation-free

– More accurate estimate of the end-to-end delay than [Cruz91] and [TC94]

Related work: worst-case performance

analysis of task graphs

[RZJE02, JRE02] Event model composition

– Definition of period+jitter traffic models, tasks with AND-condition, generalization of

[TC94]

[S. Chakraborty et al., 2003] A General framework for ....

– Generalization of network calculus, also known as real-time calculus

– Bound traffic and service for any interval t

– Acyclic task graphs

[M. Wiggers et al., 2007] Modeling Run-Time Arbitration by Latency-Rate

Servers in Data Flow Graphs

– Requires starvation-free schedulers

– Applicable in case of arbitrary deterministic task graphs: AND, cyclic task graphs,

buffer capacity can be given or computed

[M. Wiggers et al., 2009] Monotonicity and run-time scheduling

– Generalization of [M. Wiggers and M. Bekooij, 2007]: allows sequences of execution

times and any deterministic dataflow graph

– not based on busy periods

[L. Thiele and M. Stoimenov, 2009] Modular performance analysis of cyclic

dataflow graphs

– Generalization of real-time calculus [S. Chakraborty et al., 2003]: analysis of cyclic

HSDF graphs

Related work: worst-case performance

analysis of task graphs

[J. Staschulat et al., 2009] Dataflow models for shared memory access

latency analysis

– piece-wise linear service approximation of priority based budget schedulers

[M. Wiggers et al., 2010] Simultaneous Budget and Buffer Size

Computation for Throughput-Constrained Task Graphs

– only HSDF graphs

– algorithm has a polynomial computational complexity

Dataflow analysis primer

Elements in the single-rate dataflow model

Actors

An actor is depicted as a node

An actor is stateless

An actor can represent a function

An actor can be used to represent a task (but also other things)

Actors fire (tasks execute)

Actors have firing conditions

A firing duration can be associated with an actor

Actors only interact with their environment through token consumption

from their input queues and token production through their output

queues

Queues

A queue is represented by an edge

Queues have by definition an unbounded capacity

Tokens are stored in a queue

Tokens can be consumed from a queue in the order that they are

produced

Tokens

A token is an indivisible element

Tokens can be used to represent: data, space, or synchronization

moments

Firing rule

A firing rule is a condition that prescribes the number of tokens that

must be present in the input queues of an actor before the actor can

fire.

The firing rule of a single rate dataflow actor (also called HSDF actor) is:

– one token in each input queue

Notice: a firing rule cannot specify anything about the number of tokens

in an output queue
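
The single-rate firing rule can be sketched in a few lines. This is a minimal sketch, assuming queues are represented by a dictionary from edge name to token count; the names `can_fire` and `fire` are illustrative, and consumption and production are modeled as one atomic step (no firing duration).

```python
def can_fire(tokens, inputs):
    """HSDF firing rule: at least one token in every input queue."""
    return all(tokens[edge] >= 1 for edge in inputs)


def fire(tokens, inputs, outputs):
    """Consume one token per input queue and produce one per output queue."""
    if not can_fire(tokens, inputs):
        raise RuntimeError("firing rule not satisfied")
    for edge in inputs:
        tokens[edge] -= 1
    for edge in outputs:
        # Queues are unbounded, so production never has to be checked.
        tokens[edge] += 1


tokens = {"a": 1, "b": 0, "c": 0}
print(can_fire(tokens, ["a", "b"]))  # queue "b" is empty
fire(tokens, ["a"], ["c"])
print(tokens)
```

Because the rule only inspects input queues, a bounded buffer has to be modeled explicitly, for instance with a back edge whose tokens represent free space.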

Throughput calculation example

What is the throughput during self-timed execution?

Token arrival times during self-timed

execution of an HSDF graph

Given an HSDF graph G(V,E)

The self-timed execution of this graph has some important properties if:

– The graph is strongly connected, i.e. there is a directed path from every

node to every other node in the graph

– Actors have a constant firing duration

It can be shown that the graph enters a periodic regime after an initial

transition phase

[BCOQ92]
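
The periodic regime can be observed with a small simulation of self-timed execution. This is a minimal sketch, assuming constant firing durations and the usual recurrence in which a firing starts as soon as all of its input tokens have been produced; the function `self_timed_starts`, the edge format `(producer, consumer, initial tokens)` and the two-actor example are assumptions for illustration only.

```python
from functools import lru_cache


def self_timed_starts(durations, edges, firings):
    """Start times of the first `firings` firings of every actor.

    A cycle without initial tokens deadlocks and shows up as a RecursionError.
    """
    preds = {actor: [] for actor in durations}
    for src, dst, tokens in edges:
        preds[dst].append((src, tokens))

    @lru_cache(maxsize=None)
    def start(actor, k):
        # Firing k needs the token produced by firing k - d of each predecessor;
        # for k - d < 0 the token is an initial token, available at time 0.
        ready = [start(src, k - d) + durations[src]
                 for src, d in preds[actor] if k - d >= 0]
        return max(ready, default=0)

    return {a: [start(a, k) for k in range(firings)] for a in durations}


durations = {"A": 2, "B": 3}
edges = [("A", "B", 0), ("B", "A", 1),   # cycle A -> B -> A with one token
         ("A", "A", 1), ("B", "B", 1)]   # self-edges forbid overlapping firings
print(self_timed_starts(durations, edges, 3))
```

In this example both actors settle into a period of 5 time units, which is the summed firing duration of the cycle through A and B divided by its single token.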

Multi-dimensional periodic schedule

On average one firing of every actor per MCM time units

Maximum cycle mean

[Rei68]

Maximum cycle mean example

MCM number example (1)

The critical cycle determines the mcm

The nodes and edges colored red belong to the critical cycle
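
The maximum cycle mean is commonly defined as the maximum, over all cycles of the graph, of the summed firing durations on the cycle divided by the number of initial tokens on it. The sketch below enumerates all simple cycles by brute force, which is exponential in general and only meant for small examples; polynomial algorithms exist but are not shown. The function name, the edge format `(producer, consumer, initial tokens)` and the integer durations are assumptions.

```python
from fractions import Fraction


def maximum_cycle_mean(durations, edges):
    """Return the MCM of an HSDF graph with integer firing durations."""
    nodes = sorted(durations)
    succ = {v: [] for v in nodes}
    for src, dst, tokens in edges:
        succ[src].append((dst, tokens))
    best = None

    def dfs(start, node, time, tokens, visited):
        nonlocal best
        for nxt, d in succ[node]:
            if nxt == start:
                # A cycle is closed: compare its mean with the best so far.
                if tokens + d == 0:
                    raise ValueError("cycle without tokens: the graph deadlocks")
                mean = Fraction(time, tokens + d)
                if best is None or mean > best:
                    best = mean
            elif nxt > start and nxt not in visited:
                # Only visit larger nodes so every cycle is found exactly once.
                dfs(start, nxt, time + durations[nxt], tokens + d, visited | {nxt})

    for s in nodes:
        dfs(s, s, durations[s], 0, {s})
    return best


durations = {"A": 2, "B": 3}
edges = [("A", "B", 0), ("B", "A", 1), ("A", "A", 1), ("B", "B", 1)]
print(maximum_cycle_mean(durations, edges))
```

Here the cycle through A and B has mean 5 and the two self-loops have means 2 and 3, so the cycle through A and B is the critical one.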

MCM number example (2)

Overlapping firings

MCM calculation example

What is the mcm of this graph?

Flow control

Classical dataflow models

Fundamental differences dataflow models

HSDF: Homogeneous synchronous dataflow model

– single-rate, polynomial MCM-algorithm

SDF: Synchronous dataflow model

– multi-rate, graph consistency, no known polynomial MCM-algorithm

CSDF: cyclo-static dataflow model

– fixed number of phases

FDDF: functional deterministic dataflow model

– data-dependent sequential firing rules, Turing complete (halting/deadlock)

DDF: dynamic dataflow model

– non-deterministic firing rules
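
Graph consistency of a multi-rate (SDF) graph is usually checked by solving the balance equations: for every edge, the number of tokens produced per iteration must equal the number consumed. This is a minimal sketch for a connected graph; the function `repetition_vector` and the edge format `(producer, consumer, production rate, consumption rate)` are assumptions, not taken from the slides.

```python
from fractions import Fraction
from functools import reduce
from math import gcd


def repetition_vector(actors, edges):
    """Smallest positive integer firing counts that balance every edge,
    or None if the graph is inconsistent."""
    rate = {actors[0]: Fraction(1)}
    changed = True
    while changed:
        changed = False
        for src, dst, produced, consumed in edges:
            if src in rate and dst not in rate:
                rate[dst] = rate[src] * produced / consumed
                changed = True
            elif dst in rate and src not in rate:
                rate[src] = rate[dst] * consumed / produced
                changed = True
            elif src in rate and dst in rate:
                if rate[src] * produced != rate[dst] * consumed:
                    return None  # balance equation cannot be satisfied
    if len(rate) != len(actors):
        raise ValueError("graph is not connected")
    # Scale the rational solution to integers with the lcm of the denominators.
    scale = reduce(lambda a, b: a * b // gcd(a, b),
                   (r.denominator for r in rate.values()), 1)
    return {a: int(rate[a] * scale) for a in actors}


print(repetition_vector(["A", "B"], [("A", "B", 2, 3)]))
print(repetition_vector(["A", "B"], [("A", "B", 2, 3), ("B", "A", 1, 1)]))
```

In the first graph A has to fire three times for every two firings of B; the second graph adds an edge whose rates contradict this, so no bounded-memory periodic schedule exists for it.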

Dataflow model subclasses

DFM=data flow model subclass

If $\mathrm{DFM}_A \subseteq \mathrm{DFM}_B$ then $\mathrm{DFG}_A \in \mathrm{DFM}_A \Rightarrow \mathrm{DFG}_A \in \mathrm{DFM}_B$

Recently introduced dataflow models with

data dependent quanta

Variable rate dataflow (VRDF) and variable rate phased dataflow

(VPDF) are Turing complete ⇒ how can we deal with undecidability?

Hasse diagram representation including

some recently introduced dataflow models

$D_a \subseteq D_b$ if for every dataflow graph $d_a \in D_a$ it holds that $d_a \in D_b$

Conclusion

Many core systems are

– shared address space multiprocessor systems

– number of cores > 8

A large number of cores puts more stress on:

– system programming effort

– quality of service aspect

Ongoing research effort on:

– parallelization of a sequential description of stream processing algorithms

– resource management:

• computation of budgets

• enforcement of budgets

Computation of budgets

– focus is on dataflow analysis techniques, challenges include

• data dependent behavior (+ adaptation of resource budgets)

• tradeoff between run-time and computational complexity