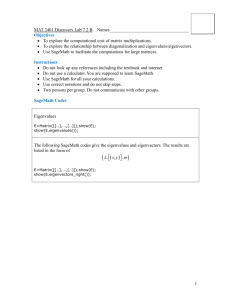

Orthogonal matrices

based on excellent video lectures by Gilbert Strang, MIT
http://ocw.mit.edu/OcwWeb/Mathematics/18-06Spring-2005/VideoLectures/detail/lecture17.htm
Lecture 17

Orthogonality

- we've met so far
  - orthogonal vectors
    - When are two vectors orthogonal?
  - orthogonal subspaces
    - When are two subspaces orthogonal? Do you remember some examples?
- today we'll meet
  - orthogonal basis $q_1, \dots, q_n$
  - orthogonal matrix $Q$

Orthonormal vectors

1. they are orthogonal, i.e. $q_i^T q_j = 0$ if $i \ne j$
2. the length of each of them is one

- I can write this as a single condition:
  - $q_i^T q_j = 0$ if $i \ne j$
  - $q_i^T q_j = 1$ if $i = j$
- for a general vector $a$, how do I get a vector of unit length in the same direction?
  - I divide each of its components by its norm $|a| = (a^T a)^{1/2}$
- orthonormal vectors are independent
  - i.e. there is no linear combination (except the one with all coefficients zero) giving 0
- having an orthonormal basis is nice, it makes all the calculations better
- and I can put orthonormal vectors $q_1, \dots, q_n$ into a matrix $Q$ (when I use $Q$, I always mean orthonormal columns!)
- Generally, $Q$ is a rectangular matrix; we can have e.g. just two columns with many rows.
- Such a matrix $Q$ is called a matrix with orthonormal columns.
- The name orthogonal matrix (orthonormal matrix would be better, but it is rarely used) is used when $Q$ is square.
- However, suppose the columns of $A$ are not orthogonal. How do I make them orthonormal?
- This can be done by the Gram-Schmidt procedure: $A \to Q$, where $Q = [\, q_1 \mid \dots \mid q_n \,]$
- In the last lecture we talked about $A^T A$, so it's natural to look at $Q^T Q$.
- What is $Q^T Q$?
- $Q^T Q = I$
- If $Q$ is square and $Q^T Q = I$ holds, what can you tell me about its inverse?
- Its inverse is its transpose: $Q^{-1} = Q^T$
- Example:

$$X = \begin{bmatrix} 1 & 1 \\ 1 & -1 \end{bmatrix}, \qquad Q = \frac{1}{\sqrt{2}} \begin{bmatrix} 1 & 1 \\ 1 & -1 \end{bmatrix}$$

- What's the good of having $Q$? What formulas become easier?
- I want to project onto $Q$'s column space.
  - What's the projection matrix $P = A (A^T A)^{-1} A^T$?
- $P = Q Q^T$
- So all the messy equations from the last lecture become nice and cute when I have the matrix $Q$.
- What do I mean by that?
$$A^T A \hat{x} = A^T b$$

To compute $A^T A$ I must compute a lot of inner products. But if I have $Q$ instead of $A$, what happens?

$$Q^T Q \hat{x} = Q^T b \quad\Rightarrow\quad I \hat{x} = Q^T b \quad\Rightarrow\quad \hat{x} = Q^T b$$

so the i-th component of $\hat{x}$ is $q_i^T$ times $b$:

$$\hat{x}_i = q_i^T b$$

- If I have just a matrix $A$ with independent columns, I use the Gram-Schmidt orthonormalization procedure to calculate $Q$.
- The column spaces of $A$ and $Q$ are the same.
- And the connection between $A$ and $Q$ is a matrix $R$. It can be shown that $R$ is upper triangular.

$$A = QR, \qquad R = \begin{bmatrix} * & * & * \\ 0 & * & * \\ 0 & 0 & * \end{bmatrix}$$

Determinants

based on excellent video lectures by Gilbert Strang, MIT
http://ocw.mit.edu/OcwWeb/Mathematics/18-06Spring-2005/VideoLectures/detail/lecture18.htm
Lecture 18

- $\det A = |A|$: the determinant is a number associated with every square matrix $A$
- the determinant is a test for invertibility
  - the matrix is invertible if $|A| \ne 0$
  - the matrix is singular if $|A| = 0$

$$\begin{vmatrix} a & b \\ c & d \end{vmatrix} = ad - bc$$

- In our quest for determinants, we start with three key properties.
- Property 1: $\det I = 1$
- Property 2: Exchanging two rows of a matrix reverses the sign of the determinant.
- Property 3: The determinant is a linear function of each row separately.
  - multiply row #1 by a number $t$:

$$\begin{vmatrix} ta & tb \\ c & d \end{vmatrix} = t \begin{vmatrix} a & b \\ c & d \end{vmatrix}$$

  - add row #1 of $A$ to row #1 of $A'$:

$$\begin{vmatrix} a + a' & b + b' \\ c & d \end{vmatrix} = \begin{vmatrix} a & b \\ c & d \end{vmatrix} + \begin{vmatrix} a' & b' \\ c & d \end{vmatrix}$$

- determinant of a triangular matrix: the product of the diagonal entries

$$U = \begin{bmatrix} u_{11} & * & * \\ 0 & u_{22} & * \\ 0 & 0 & u_{33} \end{bmatrix}, \qquad \det U = u_{11} u_{22} u_{33}$$

- The upper triangular matrix $U$ is the result of Gauss elimination. So now we know how any reasonable software (Matlab, e.g.) calculates the determinant: do the elimination, get $U$ and multiply the diagonal elements. This gives $\det A$ except for the sign, which flips once for every row exchange made during elimination.
- $\det(A) = \det(A^T)$
  - because of this property, all properties about rows (exchanging, all rows zero) are also valid for columns

$$A = \begin{bmatrix} a & b \\ c & d \end{bmatrix}, \qquad \det A = ad - bc$$

- There exists an ugly formula (the Leibniz formula) that sums products of certain elements of $A$; determining the sign of each product involves permutations of numbers (i.e. combinatorics).
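The elimination recipe for determinants described above can be sketched in plain Python. This is only an illustrative sketch, not how Matlab or any particular library implements it; the function name `det_by_elimination` and the choice of the largest available pivot in each column are assumptions made for the example.

```python
def det_by_elimination(matrix):
    """Determinant via Gauss elimination: reduce to U, multiply the diagonal."""
    a = [[float(v) for v in row] for row in matrix]  # work on a copy
    n = len(a)
    sign = 1.0
    for col in range(n):
        # Choose the row (at or below the diagonal) with the largest entry
        # in this column as the pivot row (an assumption of this sketch).
        pivot_row = max(range(col, n), key=lambda r: abs(a[r][col]))
        if a[pivot_row][col] == 0.0:
            return 0.0  # no pivot available: the matrix is singular
        if pivot_row != col:
            a[col], a[pivot_row] = a[pivot_row], a[col]
            sign = -sign  # Property 2: a row exchange reverses the sign
        # Subtract multiples of the pivot row to create zeros below the pivot.
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    product = sign
    for i in range(n):
        product *= a[i][i]  # det U = u11 * u22 * ... * unn
    return product


print(det_by_elimination([[1, 2], [3, 4]]))
print(det_by_elimination([[1, 2], [2, 4]]))
```

For the 2 x 2 case the result can be compared by hand with $ad - bc$; the second matrix has dependent rows, so elimination runs out of pivots.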
- For those (not many, I guess) interested in the Leibniz formula, I refer to http://en.wikipedia.org/wiki/Determinant#nby-n_matrices

Eigenvalues and eigenvectors

based on excellent video lectures by Gilbert Strang, MIT
http://ocw.mit.edu/OcwWeb/Mathematics/18-06Spring-2005/VideoLectures/detail/lecture21.htm
Lecture 21, 22

Introduction

- The matrices $A$ are square and we're looking for some special numbers, eigenvalues, and some special vectors, eigenvectors.
- Matrix $A$ acts on a vector $x$; the outcome is the vector $Ax$.
- And the vectors I'm especially interested in are the ones that come out in the same direction that they went in.
- That won't be typical. Most vectors $Ax$ point in a different direction than $x$.
- But some vectors $Ax$ will be parallel to $x$. These $x$ are called eigenvectors.
- By parallel I mean $Ax = \lambda x$
- $\lambda$ is called an eigenvalue, and there is not just one eigenvalue for a given $A$.
- Example: eigenvalues and eigenvectors of the projection matrix $P$ (projection of a vector $b$ onto a plane in 3D)
  - Is the vector $b$ an eigenvector? No, it isn't; its projection $Pb$ points in another direction.
  - Propose some eigenvector $x$: any vector $x$ in the plane is unchanged by $P$ ($Px = x$), and this is telling me that $\lambda = 1$.
  - Are there any other eigenvectors? I expect the answer to be yes: I am in 3D and I hope to get three independent eigenvectors. Two of them are in the plane, but where is the third?
  - It is perpendicular to the plane: $Px = 0$, so $\lambda = 0$.
  - So the eigenvalues of $P$ are $\lambda = 0, 1$.
- I will jump ahead a bit and tell you some nice things about eigenvalues.
- An $n \times n$ matrix has $n$ eigenvalues.
- The sum of the eigenvalues is equal to the sum of the diagonal elements (the trace).
- The product of the eigenvalues is the determinant.
- Eigenvalues can be complex numbers, but the eigenvalues of symmetric matrices are always real numbers.
- And let me introduce antisymmetric matrices. These are matrices for which $A^T = -A$.
- All their eigenvalues are purely imaginary (or zero).
- What else can go wrong?
- An $n \times n$ matrix has $n$ eigenvalues.
But they don't have to be different (algebraic multiplicity of the eigenvalue; e.g. two eigenvalues of the same value have multiplicity 2).
  - algebraic multiplicity: the number of eigenvalues with the same value (for a given value), i.e. the multiplicity of the corresponding root
- If some of them are equal, then the eigenvectors may (but don't have to!) also be equal (geometric multiplicity of the eigenvalue; e.g. two identical eigenvectors give multiplicity 1). Such a matrix is called degenerate (defective), and again it's a bad thing.
  - geometric multiplicity: the number of linearly independent eigenvectors with that eigenvalue
- Now the question: how do I find eigenvalues and eigenvectors?
- How to solve $Ax = \lambda x$, where I have two unknowns ($\lambda$ and $x$) in one equation?
  - rewrite it as $(A - \lambda I)x = 0$
  - I don't know $\lambda$ or $x$, but I do know something here. I know that the matrix $(A - \lambda I)$ (i.e. the matrix $A$ shifted on the diagonal by some $\lambda$) must be singular. Otherwise the only solution would be $x = 0$, not a particularly interesting eigenvector.
  - And what do I know about singular matrices?
  - Their determinant is zero!
- $\det(A - \lambda I) = 0$
- This is called the characteristic (or eigenvalue) equation. And I got rid of $x$ in the equation!
- Now I am in a position to find $\lambda$ first (I find $n$ eigenvalues, which don't necessarily differ), and then to find the eigenvectors.
- Eigenvectors are found by Gauss elimination, as the solution of $(A - \lambda I)x = 0$.

Example

- Find the eigenvalues and eigenvectors of the matrix

$$A = \begin{bmatrix} 4 & -5 \\ 2 & -3 \end{bmatrix}$$

1. Form $A - \lambda I$. What will it look like?

$$A - \lambda I = \begin{bmatrix} 4 - \lambda & -5 \\ 2 & -3 - \lambda \end{bmatrix}$$

2. What is $\det(A - \lambda I)$?

$$(4 - \lambda)(-3 - \lambda) + 10 = \lambda^2 - \lambda - 2 \quad \text{(characteristic polynomial)}$$

3. Find the roots of the polynomial; it must be equal to zero (the determinant must be equal to zero).
   - What are the roots of $\lambda^2 - \lambda - 2$?
   - Isn't it $(\lambda + 1)(\lambda - 2)$? So $\lambda_1 = -1$, $\lambda_2 = 2$
4. For each $\lambda$ find the eigenvector, generally by Gauss elimination, as the solution of $(A - \lambda I)x = 0$.
   - However, we can guess in our case. What is $A - \lambda I$ for $\lambda_1 = -1$?

$$A + 1 \cdot I = \begin{bmatrix} 5 & -5 \\ 2 & -2 \end{bmatrix}$$

   - and solve $(A - \lambda I)x = 0$, writing the unknown components of $x_1$ as $u$ and $v$:

$$(A + 1 \cdot I)\, x_1 = \begin{bmatrix} 5 & -5 \\ 2 & -2 \end{bmatrix} \begin{bmatrix} u \\ v \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \end{bmatrix}$$

   - so what is the vector $x_1 = [u, v]^T$?
- $x_1 = [1, 1]^T$ (the eigenvector corresponding to $\lambda_1 = -1$), and similarly we proceed for $\lambda_2 = 2$
- If I have $Ax = \lambda x$ and $Bx = \alpha x$, what about the eigenvalues of $A + B$?
  - $(A + B)x = (\lambda + \alpha)x$. Right?
  - WRONG!
  - What's wrong?
  - $B$ will, generally, have different eigenvectors than $A$. $A$ and $B$ are different matrices.
- The same goes for $A \cdot B$: its eigenvalues are, in general, not $\lambda \cdot \alpha$.
- So that was a caution. In these cases, the eigenvalue problem must be solved for $A + B$ (or $A \cdot B$) itself.

What to do with them?

- We have eigenvalues and eigenvectors, but what do we do with them?
- A small terminological diversion
  - A square matrix $A$ is called diagonalizable if there exists an invertible matrix $P$ such that $P^{-1}AP$ is a diagonal matrix.
  - Diagonalization is the process of finding a corresponding diagonal matrix for a diagonalizable matrix.
- Eigendecomposition is used for diagonalization.
- Construct a matrix $S$ that has the eigenvectors as its columns.
- Start with the multiplication $AS$.

$$AS = A \begin{bmatrix} x_1 & x_2 & \cdots & x_n \end{bmatrix} = \begin{bmatrix} Ax_1 & Ax_2 & \cdots & Ax_n \end{bmatrix} = \begin{bmatrix} \lambda_1 x_1 & \lambda_2 x_2 & \cdots & \lambda_n x_n \end{bmatrix}$$

- But I can factor out the eigenvalues from this matrix. How?

$$\begin{bmatrix} \lambda_1 x_1 & \lambda_2 x_2 & \cdots & \lambda_n x_n \end{bmatrix} = \begin{bmatrix} x_1 & x_2 & \cdots & x_n \end{bmatrix} \begin{bmatrix} \lambda_1 & 0 & \cdots & 0 \\ 0 & \lambda_2 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & \lambda_n \end{bmatrix} = S\Lambda$$

$$AS = S\Lambda$$

- And now I can multiply by $S^{-1}$, but only if $S$ is invertible, i.e. if the $n$ eigenvectors are independent.

$$S^{-1}AS = \Lambda, \qquad A = S \Lambda S^{-1}$$

- There is a small number of matrices that don't have $n$ independent eigenvectors. Such matrices ARE NOT diagonalizable (defective matrices).
- But if the eigenvalues are distinct, their eigenvectors are independent and EVERY SUCH matrix is diagonalizable.
- Which matrices are diagonalizable (i.e. can be eigendecomposed)?
  - all $\lambda$'s are different ($\lambda$ can be zero and the matrix is still diagonalizable, but it is singular)
  - if some $\lambda$'s are the same, I must be careful: I must check the eigenvectors.
  - If I have repeated eigenvalues, I may or may not have $n$ independent eigenvectors.
  - If the eigenvectors are independent, then the matrix is diagonalizable (even if I have some repeated eigenvalues): geometric multiplicity = algebraic multiplicity
- Diagonalizability is concerned with eigenvectors.
(independent)
- Invertibility is concerned with eigenvalues. ($\lambda = 0$)
- Example of a matrix with equal (repeated) eigenvalues, but with independent eigenvectors
- Identity matrix $I$ ($n \times n$):
  - eigenvalues?
    - all ones ($n$ ones)
  - but there is no shortage of eigenvectors
    - it's got plenty of eigenvectors, I choose $n$ independent ones
- diagonal matrix
  - the eigenvalues are on the diagonal
  - the eigenvectors are columns with 0 and 1

$$A = \begin{bmatrix} 3 & 0 \\ 0 & 2 \end{bmatrix}, \qquad \lambda_1 = 3,\ \lambda_2 = 2, \qquad x_1 = \begin{bmatrix} 1 \\ 0 \end{bmatrix},\ x_2 = \begin{bmatrix} 0 \\ 1 \end{bmatrix}$$

- triangular matrix
  - the eigenvalues are sitting along the diagonal
- symmetric matrix ($n \times n$)
  - real eigenvalues, real eigenvectors
  - $n$ independent eigenvectors
  - they can be chosen such that they are orthonormal ($A = Q \Lambda Q^T$)
- orthogonal matrix
  - all eigenvalues have absolute value 1 (the real ones are $+1$ or $-1$)

Some facts about eigenvalues and eigenvectors

- What does it mean if we have $\lambda = 0$?
  - $Ax = \lambda x = 0$
  - So what follows from this?
  - $A$ has linearly dependent rows/columns.
  - if $A$ has $\lambda = 0$, then $A$ is singular
- If an invertible $A$ has eigenvalues $\lambda_i$, then $A^{-1}$ has eigenvalues $\lambda_i^{-1}$; the eigenvectors of $A$ and $A^{-1}$ are the same.
- The sum of the eigenvalues is equal to the sum of the diagonal elements (the trace).
- The product of the eigenvalues is the determinant.

By some unfortunate accident a new species of frog has been introduced into an area where it has too few natural predators. In an attempt to restore the ecological balance, a team of scientists is considering introducing a species of bird which feeds on this frog. Experimental data suggest that the populations of frogs and birds from one year to the next can be modeled by linear relationships. Specifically, it has been found that if the quantities $F_k$ and $B_k$ represent the populations of the frogs and birds in the $k$th year, then

$$B_{k+1} = 0.6\, B_k + 0.4\, F_k$$

$$F_{k+1} = -0.35\, B_k + 1.4\, F_k$$

The question is this: in the long run, will the introduction of the birds reduce or eliminate the frog population growth?

$$\begin{bmatrix} B_{k+1} \\ F_{k+1} \end{bmatrix} = \begin{bmatrix} 0.6 & 0.4 \\ -0.35 & 1.4 \end{bmatrix} \begin{bmatrix} B_k \\ F_k \end{bmatrix}$$

- So this system evolves in time according to $x(k+1) = A\,x(k)$ ($k = 0, 1, \dots$). Such a system is called a discrete linear dynamical system, and the matrix $A$ is called the transition matrix.
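The frog and bird system above can be explored with a minimal Python sketch that applies $x(k+1) = A\,x(k)$ year by year and finds the eigenvalues of the transition matrix from the characteristic polynomial. Several things here are assumptions for illustration only: the initial populations are made up, the state is ordered as (birds, frogs), the coefficient of $B_k$ in the frog equation is taken as negative (birds eat frogs), and the helper names `step` and `eigenvalues_2x2` are hypothetical.

```python
import math

# Transition matrix for the state x = (B, F). The minus sign on 0.35 is an
# assumption of this sketch: birds feeding on frogs should reduce the frogs.
A = [[0.6, 0.4],
     [-0.35, 1.4]]


def step(matrix, x):
    """One year of evolution: x(k+1) = A x(k) for a 2 x 2 matrix."""
    return [matrix[0][0] * x[0] + matrix[0][1] * x[1],
            matrix[1][0] * x[0] + matrix[1][1] * x[1]]


def eigenvalues_2x2(matrix):
    """Roots of det(A - lambda I) = lambda^2 - trace * lambda + det."""
    trace = matrix[0][0] + matrix[1][1]
    det = matrix[0][0] * matrix[1][1] - matrix[0][1] * matrix[1][0]
    disc = trace * trace - 4.0 * det
    if disc < 0:
        raise ValueError("complex eigenvalues are not handled in this sketch")
    root = math.sqrt(disc)
    return (trace - root) / 2.0, (trace + root) / 2.0


x = [100.0, 1000.0]  # hypothetical initial populations (birds, frogs)
for k in range(50):
    x = step(A, x)

small, large = eigenvalues_2x2(A)
print("eigenvalues:", small, large)
print("state after 50 years:", x)
```

Under these assumed coefficients the eigenvalues come out near 0.86 and 1.14. Because the larger one exceeds 1, the sketch suggests that both populations keep growing in the long run (roughly 14 % per year) for almost any starting state, rather than the frogs dying out.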
- So if we need to know the state of the system at time $k = 50$, we have to compute $x(50) = A^{50} x(0)$. The 50th power of the matrix $A$: that's a lot of matrix multiplication.
- However, it can be easily proved that if the matrix $A$ is diagonalizable (i.e. $A = PDP^{-1}$, where $D$ is a diagonal matrix), then $A^k = PD^kP^{-1}$, and $D^k$ is easy peasy: just raise each diagonal entry to the $k$th power.
- That's one practical application of matrix diagonalization: matrix powers.
- And in the last lecture we have shown that solving the eigenproblem and diagonalization are equivalent! $A = S \Lambda S^{-1}$
- Whenever you read "the matrix was diagonalized", it means its eigenvalues and eigenvectors were found.

Eigendecomposition demonstration

- http://ocw.mit.edu/courses/mathematics/18-06-linearalgebra-spring-2010/tools/
- http://ocw.mit.edu/ans7870/18/18.06/javademo/Eigen/

Symmetric matrices

based on excellent video lectures by Gilbert Strang, MIT
http://ocw.mit.edu/OcwWeb/Mathematics/18-06Spring-2005/VideoLectures/detail/lecture25.htm
Lecture 25
http://ocw.mit.edu/OcwWeb/Mathematics/18-06Spring-2005/VideoLectures/detail/lecture27.htm
Lecture 27

Eigenvalues/eigenvectors

- the eigenvalues are real
- the eigenvectors are perpendicular (orthogonal)
  - what do I mean by this?
  - have a look at the identity matrix $I$; it certainly is symmetric. What are its eigenvalues?
  - ones
  - and the eigenvectors?
  - every vector is an eigenvector
  - so I may choose ones that are orthogonal
  - If the eigenvalues of a symmetric matrix are different, its eigenvectors are orthogonal. If some eigenvalues are equal, orthogonal eigenvectors may be chosen.
- usual case: $A = S \Lambda S^{-1}$
- symmetric case: $A = Q \Lambda Q^T$
- so a symmetric matrix can be factored into this form: orthogonal times diagonal times transpose of orthogonal
- this factorization completely displays the eigenvalues, the eigenvectors, and the symmetry ($Q \Lambda Q^T$ is clearly symmetric)
- This is called the spectral theorem.
  - The spectrum is the set of eigenvalues of a matrix.
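The symmetric case $A = Q \Lambda Q^T$ can be checked numerically on a small example. The sketch below is an illustration, not a general eigenvalue solver: it only handles 2 x 2 symmetric matrices, it uses a closed-form rotation angle instead of Gauss elimination, and the example matrix as well as the names `symmetric_eig_2x2` and `rebuild` are assumptions.

```python
import math


def symmetric_eig_2x2(a, b, d):
    """Eigenvalues and orthonormal eigenvectors of [[a, b], [b, d]]."""
    mean = (a + d) / 2.0
    radius = math.hypot((a - d) / 2.0, b)
    # Rotation angle of the eigenvector that belongs to mean + radius.
    theta = 0.5 * math.atan2(2.0 * b, a - d)
    q1 = (math.cos(theta), math.sin(theta))    # eigenvalue mean + radius
    q2 = (-math.sin(theta), math.cos(theta))   # eigenvalue mean - radius
    return (mean + radius, q1), (mean - radius, q2)


def rebuild(pairs):
    """Multiply Q Lambda Q^T out as the sum of lambda_i * q_i q_i^T."""
    total = [[0.0, 0.0], [0.0, 0.0]]
    for lam, q in pairs:
        for i in range(2):
            for j in range(2):
                total[i][j] += lam * q[i] * q[j]
    return total


# Hypothetical example matrix [[2, 1], [1, 2]].
pairs = symmetric_eig_2x2(2.0, 1.0, 2.0)
(lam1, q1), (lam2, q2) = pairs
dot = q1[0] * q2[0] + q1[1] * q2[1]

print("eigenvalues:", lam1, lam2)
print("q1 . q2 =", dot)
print("rebuilt A:", rebuild(pairs))
```

The printout should show real eigenvalues, a dot product of the two eigenvectors that is zero up to rounding, and a rebuilt matrix that agrees with the original one up to rounding.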
$$A = Q \Lambda Q^T = \begin{bmatrix} q_1 & q_2 & q_3 \end{bmatrix} \begin{bmatrix} \lambda_1 & 0 & 0 \\ 0 & \lambda_2 & 0 \\ 0 & 0 & \lambda_3 \end{bmatrix} \begin{bmatrix} q_1^T \\ q_2^T \\ q_3^T \end{bmatrix} = \lambda_1 q_1 q_1^T + \lambda_2 q_2 q_2^T + \lambda_3 q_3 q_3^T$$

- Every symmetric matrix breaks up into these pieces with real $\lambda$ and orthonormal eigenvectors.
- $q_1 q_1^T$: what kind of matrix is this?
  - OK, I don't expect you to remember what we were talking about two weeks ago.
  - It was about matrices of this form: $\dfrac{a a^T}{a^T a}$
  - In our case $q^T q$ is what?
    - one
  - And $q_1 q_1^T$ is a projection matrix!
- Every symmetric matrix is a combination of perpendicular projection matrices.
- That's another way that people like to think of the spectral theorem.
- For symmetric matrices the eigenvalues are real. Question: are they positive or negative?
- Symmetric matrices with all the eigenvalues positive are called positive definite.
- Can I answer this question without actually computing the whole spectrum?
- What's the sign of the determinant of such matrices?
  - The determinant is positive.
  - But that's not enough! This matrix has a positive determinant, yet both of its eigenvalues are negative:

$$\begin{bmatrix} -1 & 0 \\ 0 & -3 \end{bmatrix}$$

- So I have to check all subdeterminants (the determinants of the upper-left $1 \times 1$, $2 \times 2$, $\dots$, $n \times n$ blocks); they must all be positive.

$$\begin{bmatrix} a_{11} & a_{12} & a_{13} & a_{14} \\ a_{21} & a_{22} & a_{23} & a_{24} \\ a_{31} & a_{32} & a_{33} & a_{34} \\ a_{41} & a_{42} & a_{43} & a_{44} \end{bmatrix}$$

- Symmetric matrices with all eigenvalues greater than or equal to zero are called positive semidefinite.
- Definition: positive definite matrices have $x^T A x > 0$ for every $x$ (where $x$ is any nonzero real vector).
- So we have three tests of positive definiteness:
  1. all eigenvalues positive
  2. determinant and all subdeterminants positive
  3. $x^T A x > 0$ for every nonzero $x$
- Symmetric matrices are good, but positive definite matrices are the best!
- Positive definite matrices are very important in applications.
- Positive definite matrices can be factorized using the Cholesky decomposition $M = LL^T = R^T R$ ($L$ is lower triangular, $R$ is upper triangular).
  - So we need to store just one factor ($L$ or $R$).
  - This factorization takes the symmetry of $M$ into account.
- In least squares, $A^T A x = A^T b$, the matrix $A^T A$ is always symmetric positive definite provided that all columns of $A$ are independent.
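The Cholesky factorization $M = LL^T$ mentioned above can be sketched in a few lines of plain Python. This is a textbook-style sketch under stated assumptions: the input is assumed to be symmetric, the example matrix is made up, and using a failed factorization as a practical check of positive definiteness is an addition of this example, not one of the three tests listed above. The names `cholesky` and `is_positive_definite` are hypothetical.

```python
import math


def cholesky(m):
    """Return lower-triangular L with M = L L^T for a symmetric matrix M.

    Raises ValueError when a non-positive pivot appears, which means M is
    not positive definite.
    """
    n = len(m)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            # Part of entry (i, j) already explained by earlier columns of L.
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                pivot = m[i][i] - s
                if pivot <= 0.0:
                    raise ValueError("matrix is not positive definite")
                low[i][j] = math.sqrt(pivot)
            else:
                low[i][j] = (m[i][j] - s) / low[j][j]
    return low


def is_positive_definite(m):
    """Practical check (assumes symmetric m): does the factorization succeed?"""
    try:
        cholesky(m)
        return True
    except ValueError:
        return False


M = [[4.0, 2.0], [2.0, 3.0]]  # hypothetical symmetric example
L = cholesky(M)
print("L =", L)
print(is_positive_definite(M))
print(is_positive_definite([[-1.0, 0.0], [0.0, -3.0]]))
```

Only the lower triangle of `M` is read, which reflects the point that one stored factor is enough. The last call uses the diagonal matrix with entries -1 and -3, whose determinant is positive even though it is not positive definite.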