DDoS Experiments: Issues, Solutions and Results


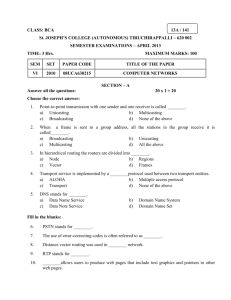

The DETER Testbed for Security Experiments
Sonia Fahmy
Center for Education and Research in Information Assurance and Security (CERIAS), Purdue University
http://www.cs.purdue.edu/homes/fahmy/
Lehigh University, August 4th, 2005

What this Tutorial is and is not!
• In this tutorial, you will learn:
  • Pros and cons of different environments
  • An overview of tools available for experimenters
  • Sample DDoS, worm, and routing experiments and tools
• You will not learn:
  • All possible Emulab features (the Emulab tutorial does a good job of that!)
  • Detailed research results from all ongoing DETER experiments
• The tutorial will be tailored to the audience!
• Please feel free to interrupt at any time!

Outline
• Why and when to use emulation?
• Available testbeds
• DETER/EMIST introduction
• Emulab tutorial
• EMIST tools from Purdue, Penn State, UC Davis, …
• Purdue demos
• DDoS experiments
• Worm experiments
• Routing experiments
• Future work plans

A Security Experiment
• Understanding an attack or evaluating a defense
• Simplest case:
  • A single TCP flow
  • A single UDP square pulse going from Node1 -> Node3
• What approach should be taken to conduct experiments?

Options
• Simulation
  • ns-2
  • SSF-NET
  • Others
• Emulation
  • Emulab (www.emulab.net)
  • DETER (www.deterlab.net) and EMIST
  • WAIL (www.schooner.wail.wisc.edu)
  • Other testbeds, e.g., PlanetLab (www.planet-lab.org)
• Lab network

Simulation
Pros
• Easy to use, and requires little system knowledge.
• Almost anything can be measured without additional overhead.
Cons
• Modeling abstractions take away from realism.

Sample ns-2 TCPSACK Agent

Emulation
Pros
• No modeling abstraction limitation, as most aspects of the network are “real”.
• More flexible than a lab network: no wires need to be pulled, since the network is built via VLANs.
Cons
• Requires extensive system knowledge: Linux internals, tools such as ifconfig, debugging skills.
• Can be prone to emulation artifacts.
• Can be very tricky to measure certain things.
Physical Lab Network
Pros
• No emulation artifacts.
Cons
• Impossible to get desired delays without a traffic shaper.
• Requires not only system knowledge but also hardware knowledge, as machines have to be built and cables need to be connected.

Testbeds
• Emulab (www.emulab.net)
• DETER (www.deterlab.net) and EMIST
• WAIL (www.schooner.wail.wisc.edu)
• Other testbeds, e.g., PlanetLab (www.planet-lab.org)

Emulation Details
• The Emulab tutorial gives a good overview
• Caveats:
  • How are the delays implemented?
  • How do routers function?
• Useful tools:
  • Experiment control tools
  • Link monitoring
  • Topology and visualization tools from Penn State and UC Davis
  • Other tools being developed and released

Link Speed/Delays
Hardware
• Switch between 1000/100/10 Mbps modes on the NIC.
Software
• Insert a node on the link that performs link shaping in software using the dummynet module of ipfw.

Routers
• A PC can act as a static router by simply consulting forwarding tables.
• The forwarding tables can be calculated when the experiment is created and then uploaded to the test machines.
• To achieve dynamic routing, a software routing daemon such as Zebra can be used.
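Static forwarding tables can be precomputed when the experiment is created. As a minimal sketch under simple assumptions (undirected point-to-point links, fewest-hops routing, ties broken alphabetically), the Python below derives each node's next hop toward every destination with a breadth-first search. The function name and the dumbbell topology are hypothetical illustrations; this is not necessarily how Emulab or DETER compute their routes.

```python
from collections import deque


def forwarding_tables(links):
    """Compute {node: {destination: next_hop}} from undirected links.

    Uses breadth-first search, so "shortest" means fewest hops; a real
    route calculator might weight links by delay or bandwidth instead.
    """
    adj = {}
    for a, b in links:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)

    tables = {}
    for src in adj:
        first_hop = {}          # destination -> neighbor of src on a shortest path
        seen = {src}
        queue = deque()
        for nbr in sorted(adj[src]):        # sorted() makes tie-breaking deterministic
            seen.add(nbr)
            first_hop[nbr] = nbr
            queue.append(nbr)
        while queue:
            node = queue.popleft()
            for nbr in sorted(adj[node]):
                if nbr not in seen:
                    seen.add(nbr)
                    first_hop[nbr] = first_hop[node]  # inherit the first hop
                    queue.append(nbr)
        tables[src] = first_hop
    return tables


# Hypothetical dumbbell: node0/node1 behind r1, node2/node3 behind r2.
LINKS = [("node0", "r1"), ("node1", "r1"), ("r1", "r2"),
         ("r2", "node2"), ("r2", "node3")]
TABLES = forwarding_tables(LINKS)
for dst, hop in sorted(TABLES["r1"].items()):
    print(f"r1: {dst} via {hop}")
```

Each per-node table could then be turned into static route entries and uploaded to the corresponding test machine; dynamic routing with a daemon such as Zebra would replace this step entirely.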
Routers (cont'd)
• Typical routers:
  • Switch fabric
  • Fast packet classification using Content-Addressable Memory (CAM)
  • Queuing and buffering
  • Dedicated CPUs; no interrupt livelock
• Routers can be configured to prevent or reduce the effect of DDoS attacks:
  • Traffic can be divided into classes of service
  • Committed Access Rate on the input port limits the rate of a specific traffic type, e.g., TCP, UDP, ICMP
• Software routers:
  • Used for firewalls, NATs, etc.
  • Prototype software routers implement similar elements in the kernel, e.g., Click, Cross. They are also polling-based.

Experiment Control
• Controlling more than two machines is very hard, especially if timed events are involved.
• An automated script, similar to ns-2 events, is needed for experiment automation.
• As tasks can take arbitrary time to complete, an event completion callback is required.

Our Tools
• Can be found at http://www.cs.purdue.edu/homes/fahmy/software/emist/
• Scriptable Event System (SES):
  • Allows using a script to repeat experiments while changing parameters
  • As tasks can take arbitrary time to complete, an event completion callback is required
  • See documentation
• Software link monitor
  • See EMIST technical note
• Measurement and data integration tools, and other useful scripts (see documentation)

Scriptable Event System
• A master application runs either on the main user's account or on one of the test nodes.
• The communication between the master and the zombies is done via a control network that is free of experimental traffic.
• The server runs a script that determines the experiment:
  1. Start measurements and configure things
  2. Launch the attack/benchmark
  3. Once the benchmark is complete, stop the attack
  4. Copy local measurement logs to a central location

Sample Event Script
0 node0 node2 node3 r1 r2 "./tmeas -f /usr/local/tmeas.out"
1 "pause"
0 node2 "/usr/bin/ttcp -r > /dev/null"
1 "pause"
0 node0 node2 "rm /usr/local/dump.dmp"
1 "pause"
0 node0 node2 r1 r2 "sh /proj/DDoSImpact/exp/bell/scripts/dump.sh"
1 "pause"
5 node3 "./flood node1 -U -s10 -W160-1000 -D80000"
9 node0 "/usr/bin/ttcp -v -t node2 < /usr/local/f30m >/usr/local/ttcp.out!"
1 "pause"
0 node0 node1 node2 node3 r1 r2 "stop"
1 "pause"
0 node0 node2 r1 r2 "killall tcpdump"
1 "pause"
0 node0 "cp /usr/local/dump.dmp /proj/DDoSImpact/exp/bell/data/dump.node0"
0 node2 "cp /usr/local/dump.dmp /proj/DDoSImpact/exp/bell/data/dump.node2"
1 "pause"
0 node0 "cp /usr/local/ttcp.out /proj/DDoSImpact/exp/bell/data"
1 "pause"
0 node0 "cp /usr/local/tmeas.out /proj/DDoSImpact/exp/bell/data/tmeas.out.node0"
0 node3 "cp /usr/local/tmeas.out /proj/DDoSImpact/exp/bell/data/tmeas.out.node3"
0 node1 "cp /usr/local/tmeas.out /proj/DDoSImpact/exp/bell/data/tmeas.out.node1"

Measurement and Integration
Measurement of system statistics at different points in the network can yield an understanding of what events are occurring in the entire network. A tool based on a 1-second timer records CPU, PPSin, PPSout, BPSin, BPSout, RTO, memory, and TCP congestion window size.
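The per-second packet and byte rates in that list can be derived from interface counter deltas. The sketch below assumes a Linux test node and reads `/proc/net/dev`; the measurement tool described here may gather its statistics differently, and CPU, RTO, memory, and Cwnd sampling are not shown. The function names are hypothetical, and "BPS" is taken to mean bytes per second in this sketch.

```python
import time


def parse_net_dev(text):
    """Parse Linux /proc/net/dev into {iface: (rx_bytes, rx_pkts, tx_bytes, tx_pkts)}."""
    counters = {}
    for line in text.splitlines()[2:]:          # skip the two header lines
        if ":" not in line:
            continue
        iface, data = line.split(":", 1)
        fields = [int(x) for x in data.split()]
        # Receive columns are 0-7 (bytes, packets, ...); transmit columns start at 8.
        counters[iface.strip()] = (fields[0], fields[1], fields[8], fields[9])
    return counters


def rates(before, after, interval=1.0):
    """Per-interface BPSin/PPSin/BPSout/PPSout between two snapshots."""
    out = {}
    for iface, (rb1, rp1, tb1, tp1) in after.items():
        if iface not in before:
            continue
        rb0, rp0, tb0, tp0 = before[iface]
        out[iface] = {
            "BPSin": (rb1 - rb0) / interval,
            "PPSin": (rp1 - rp0) / interval,
            "BPSout": (tb1 - tb0) / interval,
            "PPSout": (tp1 - tp0) / interval,
        }
    return out


def monitor(samples, path="/proc/net/dev"):
    """Print one line per interface every second (Linux only)."""
    with open(path) as f:
        prev = parse_net_dev(f.read())
    for _ in range(samples):
        time.sleep(1.0)
        with open(path) as f:
            cur = parse_net_dev(f.read())
        for iface, r in rates(prev, cur).items():
            print(f"{time.time():.0f} {iface} {r}")
        prev = cur


# Canned snapshots taken one second apart (hypothetical numbers).
HEADER = ("Inter-|   Receive                            |  Transmit\n"
          " face |bytes packets errs drop fifo frame compressed multicast"
          "|bytes packets errs drop fifo colls carrier compressed\n")
SNAP0 = HEADER + "  eth1: 1000 10 0 0 0 0 0 0 2000 20 0 0 0 0 0 0\n"
SNAP1 = HEADER + "  eth1: 1500 15 0 0 0 0 0 0 2600 26 0 0 0 0 0 0\n"
print(rates(parse_net_dev(SNAP0), parse_net_dev(SNAP1)))
```

On a test node, `monitor(10)` would print ten one-second samples; the canned snapshots let the parsing logic be checked on any machine. A plain `sleep(1.0)` loop drifts slightly over long runs, which matters if logs from several nodes must be aligned later.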
The collected logs (plus routing logs) are aggregated and used to plot graphs via a collection of scripts. The data can also be displayed by the EVST upon experiment completion.

Link Monitor
• An easy way to monitor links is to run tcpdump and read drop counters on individual PCs, but:
  • tcpdump requires additional CPU processing
  • Drop counters are not always accurate, as they depend on driver accuracy
• A software solution similar to a delay node can be placed on a link between two nodes:
  • Two monitors can be used to find out what was dropped by comparing traffic in and traffic out
  • High traffic volumes require the Tap/Logger to be much faster than the test nodes

Our Topology Tools
• Working on a tool to take Rocketfuel and RouteViews data, use algorithms to infer AS relationships, and produce an ns-2 file and router configuration files

Purdue DDoS Experiments

TCP-Targeted Attacks
Varied: attacker, burst length l, sleep period T-l, packet size, RTT, buffer size
A. Kuzmanovic and E. W. Knightly. Low-rate TCP-targeted denial of service attacks. SIGCOMM 2003.
M. Guirguis et al. Exploiting the transients of adaptation for RoQ attacks on Internet resources. ICNP 2004.
H. Sun et al. Defending against low-rate TCP attacks: Dynamic detection and protection. ICNP 2004.
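One way to quantify attacker effort for the pulse parameters named on this slide is to model an idealized square pulse: rate R for a burst of length l, then silence for the sleep period T-l, repeating every T seconds. Its average rate is R·l/T, a fraction l/T of a flood at rate R. The class below is a hypothetical sketch under that idealization; it ignores packet sizes and the sending host's limits.

```python
from dataclasses import dataclass


@dataclass
class PulseAttack:
    """Idealized square-pulse attack: rate R for l seconds out of every T seconds."""
    burst_len: float   # l, seconds
    period: float      # T, seconds (burst plus sleep)
    rate_bps: float    # R, bits per second while a burst is on

    def __post_init__(self):
        if not 0 < self.burst_len <= self.period:
            raise ValueError("need 0 < l <= T")

    @property
    def sleep_len(self):
        return self.period - self.burst_len          # T - l

    def average_rate_bps(self):
        # Attacker effort: traffic is sent only during l of every T seconds.
        return self.rate_bps * self.burst_len / self.period

    def is_on(self, t):
        return (t % self.period) < self.burst_len

    def bursts(self, duration):
        """(start, end) of each burst that begins before `duration`."""
        result, start = [], 0.0
        while start < duration:
            result.append((start, min(start + self.burst_len, duration)))
            start += self.period
        return result


attack = PulseAttack(burst_len=0.25, period=1.0, rate_bps=80e6)
print(attack.sleep_len, attack.average_rate_bps())
print(attack.bursts(3.0))
```

Here an 80 Mbps burst lasting a quarter of each period costs the attacker 20 Mbps on average; shrinking l or growing T lowers effort further, at the price of fewer loss events per unit time.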
Objective: Understand attack effectiveness (damage versus effort) in terms of application-level, transport-level, and network-level metrics at multiple nodes. Reinforce the importance of DETER by comparing to simulation and modeling results.
[Figure: square-pulse attack of rate R with burst length l and sleep period T-l, plotted against time.]

Experimental Scenario
• Original TCP-targeted attacks are tuned to the RTO frequency for near-zero throughput.
• An attack can exploit TCP's Additive Increase Multiplicative Decrease congestion avoidance without tuning its period to the RTO, and hence throttle TCP's throughput at any predetermined level.
• Results for a simple dumbbell topology with a single file-transfer flow are the easiest to interpret.

Experimental Setup
• All nodes run a zombie process that connects to the master, thus forming our Scriptable Event System.
• The SES script informs the nodes to start measurements.
• A file transfer and a TCP-targeted attack are initiated.
• When the file transfer is complete, the SES is informed; it stops the attack and instructs the nodes to copy the logs to a central location.
• The same topology with similar events is simulated in ns-2.
• Data from DETER, Emulab, and ns-2 is compared to a simple throughput degradation model.

Throughput Degradation
• One packet gets lost during each pulse.
• The connection does not enter an RTO.
• There is no packet loss during attack sleep periods.
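Under the three assumptions listed above, a textbook AIMD sawtooth gives a rough throughput estimate. This is a sketch of our own, not necessarily the exact model used in these experiments: it assumes Cwnd is halved once per period T and grows by one segment per RTT only during the sleep period T-l, so in steady state W_max/2 + (T-l)/RTT = W_max, i.e. W_max = 2(T-l)/RTT, and it approximates the average Cwnd by the midpoint 0.75·W_max. Throughput is then about 1.5·(T-l)·MSS/RTT². The function names and the 1460-byte MSS are assumptions, and no cap for link capacity or receiver window is applied.

```python
def attacked_throughput_bps(T, l, rtt, mss_bytes=1460):
    """Steady-state TCP throughput (bits/s) under a periodic pulse attack (sketch).

    Assumptions (a simple AIMD sawtooth, not necessarily the slide's exact model):
      * exactly one loss per pulse, so Cwnd is halved once every T seconds;
      * no retransmission timeout;
      * Cwnd grows by one segment per RTT during the sleep period T - l.
    """
    if not 0 <= l < T:
        raise ValueError("need 0 <= l < T")
    growth = (T - l) / rtt          # segments gained between two halvings
    w_max = 2.0 * growth            # steady state: w_max / 2 + growth == w_max
    w_avg = 0.75 * w_max            # approximate average Cwnd by the sawtooth midpoint
    return w_avg * mss_bytes * 8 / rtt


def period_for_target(target_bps, l, rtt, mss_bytes=1460):
    """Invert the sketch: the period T that throttles TCP to target_bps."""
    return l + target_bps * rtt ** 2 / (1.5 * mss_bytes * 8)


bps = attacked_throughput_bps(T=1.0, l=0.25, rtt=0.125)
print(bps, period_for_target(bps, l=0.25, rtt=0.125))
```

The inverse function illustrates the scenario's point that an attacker need not tune T to the RTO: any target throughput maps to a period, though the estimate only holds while the assumptions (one loss per pulse, no timeouts) do.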
[Equation: throughput degradation model, expressed in terms of the Cwnd growth during a sleep period and the time between two loss events.]

Forward Direction
• The model corresponds to ns-2 results when the attack pulse length is greater than or equal to the TCP flow RTT and when buffer sizes are not too large.
• Emulab results are close to the model and ns-2.
• DETER is not as significantly affected by the attack.

Reverse Direction
• Since ns-2 does not model CPU load, and opposing flows do not interfere at a router, ns-2 data is not shown for the reverse direction (Cwnd has no cuts).
• The model is also not considered in the reverse direction, for the same reason as ns-2.

Congestion Window
• The irregular peaks in this ns-2 Cwnd plot indicate that not every pulse of the attack causes Cwnd to be halved.
• This causes ns-2's average Cwnd to be higher than the one predicted by the model when buffer sizes are large or the attack pulse length is shorter than the RTT.

Emulation vs. Emulation
• The attack on Emulab has weaker parameters (83-byte versus 2-byte payload).
• On Emulab, routers are faster than on DETER (850 MHz versus 733 MHz).
• The attacking machine on Emulab is slower (600 MHz versus 733 MHz).
• One would expect the routers on DETER to be affected more.

Emulab vs. DETER
• The Emulab router experiences a much higher load than a DETER router.
• There is a large difference between packets received and sent on Emulab.
• Even with largely different attack payloads, the packet-per-second rates are similar (~40,000 pps).

More Complex Benchmark

Throughput

Web Clients/Server
[Figure: four panels plotted against burst length (ms): server throughput (Mbit/sec), total number of pages read, average client throughput (Mbit/sec), and average response time (sec).]

Attack Parameters vs. RTT
Client with 63 ms RTT to the server
[Figure panels annotated "0.38 Mbps without an attack" and "0.75 Mbps without an attack".]

Short RTT
Client with 12.6 ms RTT to the server
[Figure panels annotated "1.00 Mbps without an attack" and "1.40 Mbps without an attack".]

Timeouts
Attack 100-1000
Unacked data during a 5 MB file transfer (31.97 sec = 160.16 KB/sec)

TCP-targeted Attack Summary
• TCP congestion control can be successfully exploited by a pulsating attack using a fraction of the attack traffic a flooding attack needs; the attack frequency need not be tuned to the RTO.
• With a single flow under attack, the attack pulse must be longer than or equal to the RTT, and buffer sizes must not exceed 100 packets; attack packet size is also an important parameter.
• Simulation and emulation can produce very different results for very similar experiments.
• The same experiment on different emulation testbeds can yield different results.
• Such differences are important, as they allow us to identify vulnerabilities and fundamental limits. The Internet is an evolving, heterogeneous entity with protocol implementation errors and resource constraints, not a modeling approximation in a simulator.
• Need to study other scenarios with multiple flows and attackers, and different hardware/software routers with different buffer sizes.

DDoS/Routing Experiment
• At 222 sec, nodes 8, 11, and 14 attack node 9 (a Zebra router running BGP) for 400 seconds.
• No activity for 200 seconds, allowing all nodes to stabilize.
• Nodes 8, 11, and 14 attack node 9 for 400 seconds again. Node 36 attacks node 10 (a neighbor of node 9) for 400 seconds.

Topology

Routing
• Need to understand the magnitude of potential problems, causes, and defenses.

Routing Tools
• eBGP and iBGP routing can be triggered by Quagga routing daemons.
• Initialization scripts coupled with the central control make it easy to restart all of the routers in an experiment.
• Realistic AS relationships or policies?
  • See the RocketFuel/RouteViews/policy inference slides

Internet Topology
• Goal: What is the relationship between DDoS, topology, and routing?
• Three characteristics of topology:
  • Structural: the hierarchical structure of the Internet (transit and stub domains; core and edge routers)
  • Degree level: variability of the degree of Internet routers and Autonomous Systems (e.g., power law)
  • Clustering: connectivity between neighboring nodes (i.e., small world)

Lessons Learned
• Insights into sensitivity to the emulation environment:
  • Key differences from simulations, especially when simulators do not accurately model the resource under attack
  • Emulab and DETER results differ for the same test scenario
  • Software routers: no priority for routing packets and the routing process; buffer sizes; NICs and device drivers; e.g., interference among forward and reverse directions
• DDoS scale-down and benchmarks: problems with the limit on the degree of router nodes, delays/bandwidths, and number of nodes
  • Connectivity is a key parameter; flooding DDoS can be scaled down
• Required flexibility in node assignment for software routers and delay nodes
• Worm modeling and containment
• Testing third-party products requires flexibility
• Both high-level and low-level metrics are needed

Future Plans
• Continue development of general experiment automation and instrumentation/plotting tools and documentation.
• Continue investigating DDoS attacks (e.g., TCP-targeted) in more depth, and compare analytical, simulation, and emulation results to quantify simulation and emulation artifacts; study scenarios with multiple flows (RTTs/bottlenecks) and attackers.

Future Plans (cont'd)
• Continue to collaborate with both the routing team and the DDoS team to identify benchmark experimental scenarios, especially topology, and build tools:
  • BGP and OSPF configuration tool
  • Experiment scale-down
  • Interactions between DDoS, routing, and topology
• Compare DETER testbed results with router results (DETER and WAIL).
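To check whether a scaled-down experiment topology keeps the degree-level and clustering characteristics listed under Internet Topology, one could compute two standard graph metrics: the degree histogram and the average local clustering coefficient (Watts-Strogatz definition: the fraction of a node's neighbor pairs that are themselves linked). The sketch below assumes an undirected edge list; the function names and the four-router example are hypothetical, and the hierarchical (structural) characteristic is not measured.

```python
from collections import Counter
from itertools import combinations


def adjacency(edges):
    """Build an undirected adjacency map, ignoring self-loops."""
    adj = {}
    for a, b in edges:
        if a != b:
            adj.setdefault(a, set()).add(b)
            adj.setdefault(b, set()).add(a)
    return adj


def degree_histogram(adj):
    """Map degree -> number of nodes with that degree."""
    return Counter(len(nbrs) for nbrs in adj.values())


def local_clustering(adj, node):
    """Fraction of pairs of the node's neighbors that are themselves linked."""
    nbrs = adj[node]
    k = len(nbrs)
    if k < 2:
        return 0.0
    linked = sum(1 for u, v in combinations(nbrs, 2) if v in adj[u])
    return 2.0 * linked / (k * (k - 1))


def average_clustering(adj):
    return sum(local_clustering(adj, n) for n in adj) / len(adj)


# Hypothetical 4-router topology: a triangle a-b-c plus a stub d attached to a.
ADJ = adjacency([("a", "b"), ("b", "c"), ("c", "a"), ("a", "d")])
print(degree_histogram(ADJ))
print(round(average_clustering(ADJ), 4))
```

Comparing these numbers for a full inferred topology and its scaled-down version gives a quick, if coarse, signal of whether the scale-down changed the connectivity that the lessons above identify as a key parameter.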