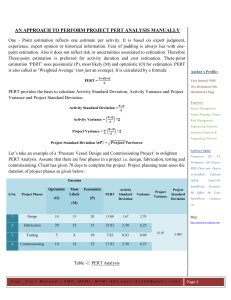

S-PERT estimates and probabilities

advertisement

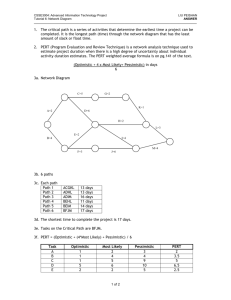

Statistical PERT: Easily create risk-adjusted, statistically-based project task estimates William W. Davis, MSPM, PMP October 21, 2014 Introduction ................................................................................................................................................... 1 Existing methods of task estimation ............................................................................................................. 2 Examining PERT estimation......................................................................................................................... 2 The foundational basis for S-PERT .............................................................................................................. 6 Summary on risk-adjusted, probabilistic, task estimates ............................................................................ 11 The basis for S-PERT ................................................................................................................................. 12 S-PERT estimates and probabilities ............................................................................................................ 15 Avoiding 3-point estimations ...................................................................................................................... 17 “But I’ve never had a problem with PERT estimating!” ............................................................................ 17 “I’m not good with statistics. S-PERT sounds too confusing to use.” ....................................................... 18 Using S-PERT during project negotiations ................................................................................................. 20 When to use S-PERT (and when not to) ..................................................................................................... 20 S-PERT and the Uniform Scheduling Method ........................................................................................... 21 Conclusion .................................................................................................................................................. 23 Bibliography ............................................................................................................................................... 24 i © Copyright 2014 – William W. Davis – DRAFT COPY Introduction One challenge every project manager faces is constructing an accurate project budget and schedule. Overestimating work efforts leads to under-utilized resources, poor organizational productivity, and activates Parkinson’s Law which reads, “Work expands to fill the time available for its completion.” Underestimating work efforts leads to cost overruns, schedule slips, project failure, stress and high anxiety among the project team and organizational managers. If an organization can effectively estimate project work efforts, it achieves optimal productivity while reducing unexpected stress coming from missed deadlines and exploded budgets. Traditionally-managed project budgets are often derived from the project schedule that identifies, orders, and sizes all activities needed to complete the project. Once a project manager has identified and sequenced all the project’s activities, the next step is to size those activities. To do so, a project manager may use various methods to estimate the work effort: parametric estimating, analogous estimation, and expert opinion are several methods, for example. When expert opinion is extensively used, the question the project manager must ask the task estimator is, “How confident are you in your estimate?” It is erroneous to think expert opinion equates to a high confidence estimate. A subject matter expert may be estimating the use of a new, unproven technology solution or integration with a new, untested, 3rd party vendor with whom no one in the organization has experience. In those cases, providing a high confidence estimate would usually be warrantless and foolhardy. Conversely, when a subject matter expert provides an estimate with a work task of great familiarity, the project manager should expect little variation in actual work effort from the expert’s estimate of that effort. Between these two extremes are a continuous number of risk-influenced possibilities. How should a project manager respond to those risk variations? If the project manager responds at all, it is often with an arbitrary adjustment of the expert’s work effort estimate based on little more than a subjective feeling the project manager has concerning the task description, the expert’s estimate to complete that task, and the reliability of the expert who provided the estimate. When project managers resort to these kinds of subjective adjustments of work effort estimates, an entire organization faces disparity and irregularity in the practice of project estimation. This whitepaper investigates a new method of project task estimation called Statistical PERT, or SPERT. S-PERT shares only a slight resemblance to the very familiar Program Evaluation and Review Technique (PERT). In fact, the only thing that S-PERT shares with PERT (besides the name) is a reliance on a 3-point task estimate: optimistic, most likely, and pessimistic point-estimates. The PERT formula is 1 © Copyright 2014 – William W. Davis – DRAFT COPY not used at all in S-PERT. Instead, S-PERT is grounded in the science of statistical mathematics in general, and use of the normal distribution curve in particular, to generate work effort estimates which are risk-adjusted based upon the estimator’s relative confidence level of the most likely outcome. S-PERT allows project managers to choose a risk-adjusted task estimate corresponding to their willingness to accept risk that the estimate will be exceeded by the actual work effort. By using S-PERT as a method of estimating, project and functional managers will have an agreed-upon understanding of how task estimates were developed, what is the relative confidence level associated with the most likely outcome, and what is the probability that a task won’t be exceeded by the project team during the task’s execution. Existing methods of task estimation Project managers have a variety of ways to solicit and adjust task estimates. If a project manager asks for a deterministic estimate from a task estimator, he has no way of implicitly knowing what degree of uncertainty exists with that estimate. The project manager can find out the relative confidence level of the estimate either by using his own expert judgment or by talking with the task estimator. Either way, any adjustment that the project manager makes to the estimate is likely arbitrary. Some project managers use PERT’s 3-point estimate to collect an optimistic, most likely, and pessimistic values for the work effort to be completed. By asking for a 3-point estimate, the project manager can easily see the range between the pessimistic and optimistic values, but he has no way of discerning the likelihood of either of those values occurring, or the likelihood that the task will finish according to the most likely value. Intuitively, a 3-point estimate of 10-40-200 is more risky than a 3-point estimate of 3040-50 even though both estimates have a most likely outcome of 40. But how does the project manager quantify that riskiness? And how likely is the occurrence of any of those 3-point values? Except through personal judgment or by talking with the task estimator, the project manager has no way of knowing. Examining PERT estimation When a project manager uses PERT, she may choose to use the PERT formula [(O + 4ML + P) / 6] which is a very simple – but, as we will see, not very useful – way to estimate tasks. Using the 3-point estimate of (20, 40, 80) hours, for example, the resulting PERT estimate would be 43.333, as shown below: 20 + 4(40) + 80 = 43.333 ℎ𝑜𝑢𝑟𝑠 6 2 © Copyright 2014 – William W. Davis – DRAFT COPY Using PERT, the project manager may enter 43 (or 43.3) hours into the project plan for that task. But what probability should she expect that this estimated effort will not be exceeded by the actual work effort for that task? The range is very wide – 60 hours – which suggests a lot of risk and volatility with completing the task. If we wish to utilize the normal distribution curve 1 to evaluate the probability that the task will be completed within 43.333 hours, we must first find the mean and standard deviation of the curve. The mean is easily obtained: 20 + 40 + 80 = 46.667 ℎ𝑜𝑢𝑟𝑠 3 The standard deviation is not as neatly discovered, however. Rather than delving into the mathematics required to derive the standard deviation from the three known estimation values, we elect, instead, to rely on the statistical functions in Microsoft Excel to do the heavy lifting for us. In Excel 2010, we have two functions from which to choose: STDEV.P and STDEV.S. STDEV.P returns the standard deviation for an entire population of results, whereas STDEV.S returns the standard deviation from just a sample of the entire population. Since our PERT estimate has only three values – 20, 40 and 80 – but a task can be completed in a mathematically infinite number of hours between 20 and 80, we first elect to use the STDEV.S function: 𝑆𝑇𝐷𝐸𝑉. 𝑆(20,40,80) = 30.551 ℎ𝑜𝑢𝑟𝑠 Now we can use Microsoft Excel’s normal distribution functions to calculate the probability that the estimated task using PERT will finish on or before PERT’s estimate of 43.333 hours. To do so, we employ the NORM.DIST function, which requires as input: the PERT estimate, mean2, standard deviation, and indication whether the returned probability is cumulative or not (in the function below, “TRUE” in the function’s parameter list indicates that we wish to obtain the cumulative probability): 𝑁𝑂𝑅𝑀. 𝐷𝐼𝑆𝑇(43.333, 46.667, 30.551, 𝑇𝑅𝑈𝐸) = 0.4566 1 We make the assumption here that the normal distribution curve suitably fits the probability of task completion, which is admittedly an arguable point. Most tasks do not finish sooner than the most likely estimate, and not infrequently do tasks take longer than the most likely estimate. In this whitepaper, we use the normal distribution curve for convenience and because the normal curve can be skewed to the right and still yield statistically sound probabilities which, although they may not be absolutely precise, are still accurate and useful for the purposes of task estimation. That said, S-PERT could be used with other distribution curves, such as Weibull, which better fit the way most tasks are completed, but which are unfamiliar to nearly all managers and those without a strong mathematical background. Our purpose is to be accurate and useful more than it is to be precise and exacting. 2 We could replace the PERT mean with its mode, that is, the most likely outcome. Doing so would favorably alter the probability, but not dramatically: 54.34%. 3 © Copyright 2014 – William W. Davis – DRAFT COPY The PERT estimate, then, has only a 45.66% probability of not being exceeded! There exists a greater than 50% probability that the task will take longer than 43.333 hours to complete. Not a very good estimation technique! If we wish to take a more aggressive approach to gauging PERT’s reliability, we could opt to use Excel’s standard deviation for a population (STDEV.P), and accept that our entire population of possibilities rests on the knowledge of only three possible outcomes: an optimistic outcome of 20 hours, a pessimistic outcome of 80 hours, and a most likely outcome of 40 hours. When we do that, we obtain a smaller standard deviation result: 𝑆𝑇𝐷𝐸𝑉. 𝑃(20,40,80) = 24.944 ℎ𝑜𝑢𝑟𝑠 Using this smaller standard deviation, we obtain this probability that PERT’s estimate of 43.333 will not be exceeded by the project team3: 𝑁𝑂𝑅𝑀. 𝐷𝐼𝑆𝑇(43.333,46.667,24.944, 𝑇𝑅𝑈𝐸) = 0.4468 The result of 44.68% is slightly worse than 45.66%. The skewing of the curve to the right (because only 20 hours separate the optimistic value from the most likely value, but 40 hours separate the most likely value from the pessimistic value) and a heightening of the distribution curve (because we only have three data points with which to construct the curve) increased the area under the curve and to the right of the PERT 3-point estimate, causing the probability of PERT’s success to actually decline slightly. In both cases, the PERT formula leads to a conclusion that the PERT task estimate does not enjoy a high probability of success, that is, that the project team will finish the task on or before the task estimate. But does this make sense? What if the task estimator insisted that, although there was a chance that the task might extend to 80 hours, the most likely estimate of 40 hours was very, very, VERY likely to occur? This highlights the problem of using PERT estimates: PERT estimates are not risk-adjusted estimates based on expert knowledge of how long the task will take to complete. Rather than calculating standard deviation by using Excel’s statistical functions, another approach to PERT estimation is to use a different, simplified formula for calculating an approximate standard deviation using the 3-point estimate. We will call this the “PERT standard deviation” in this whitepaper. The formula for the approximated PERT standard deviation is: (𝑃𝑒𝑠𝑠𝑖𝑚𝑖𝑠𝑡𝑖𝑐 − 𝑂𝑝𝑡𝑖𝑚𝑖𝑠𝑡𝑖𝑐) 6 3 Using the mode (40) instead of the mean (43.333) in the NORM.DIST function, the result would be 55.31% 4 © Copyright 2014 – William W. Davis – DRAFT COPY If we apply this formula to our PERT 3-point estimate example of (20, 40, 80), we obtain the following: (80 − 20) = 10 ℎ𝑜𝑢𝑟𝑠 6 This much lower standard deviation appears to be a much more viable value for our use. When we use this value for the PERT standard deviation, we can find a different probability that the PERT task estimate of 43.333 will not be exceeded during task execution4. 𝑁𝑂𝑅𝑀. 𝐷𝐼𝑆𝑇(43.333, 46.667, 10, 𝑇𝑅𝑈𝐸) = 0.3694 The lower PERT standard deviation of 10 leads to an even lower probability that the task will be completed by the PERT estimate of 43.333 hours! This approach suffers from the same problem that, when the standard deviation decreases, there is more area under the curve and to the right of the PERT 3point estimate, decreasing the probability that the task will be completed on or before the PERT estimate. Clearly, using the PERT estimate does not equate to a high-confidence task estimation technique! Estimators using PERT can very easily overcome this problem, though. Instead of accepting the PERT formula’s estimate as-is, task estimators can use the simplified PERT standard deviation formula to create a high-probability estimate just by adding two PERT standard deviations to the mean of the 3-point estimate. Two standard deviations to the right of the mean encompasses approximately 95% of the area under the curve, meaning that the new PERT task estimate has a 95% probability that the actual work effort necessary to complete the task will not be greater than the task’s PERT estimate. (20 + 40 + 80) + (2 ∗ 10) = 66.6667 ℎ𝑜𝑢𝑟𝑠 3 Our PERT estimate took a big jump up, though! What started out as an estimate of 43.33 hours is now much higher at 66.67 hours. In exchange for that much higher estimate, however, our probability that the task will be completed on or before our estimate raised mightily, to roughly 95%. The trade-off, then, is either we accept a high task estimate to gain a high degree of confidence our estimate will be correct (or, at least, that it won’t be exceeded), or we accept a much lower task estimate with a correspondingly low degree of confidence that the actual hours it takes to complete the task will not exceed our estimate. But a problem remains that, irrespective of which PERT estimate we decide to use, the PERT estimate does not take into account the task estimator’s true sense of confidence in the 3-point estimate, particularly with regards to the most likely outcome. By using the simplified PERT standard deviation 4 Using the mode (40) instead of the mean (43.333) in the NORM.DIST function, the result would be 63.05% 5 © Copyright 2014 – William W. Davis – DRAFT COPY formula, all we’ve done is reduce the variability of the normal curve out of convenience – not because we are responding to a judgment made on how likely the most likely outcome is likely to occur. The foundational basis for S-PERT The Statistical PERT (S-PERT) formula overcomes this deficiency in the PERT estimation method. When we realize that the probability of occurrence of any point on the normal distribution curve is affected by the shape of that curve, and that the normal curve is defined, in part, by the curve’s standard deviation, then we realize that if we had a suitable, defensible, rational, and useful way of adjusting or modifying the standard deviation, we could create task estimates which respond to our sense of confidence in the 3-point estimate and to our willingness to accept risk that our estimate may be exceeded during task execution. In this quest to develop a new, statistically-sound method of task estimation, modifying a standard deviation need not be scientifically precise to yield suitable, defensible, rational and useful results to a project manager. Suppose we asked a task estimator this question about the (20, 40, 80) 3-point estimate she provided: If you executed this task 100 times5 and the only three outcomes for how long the task takes to complete are 20, 40 or 80 hours how many times out of 100 would you expect the task to take 40 hours to complete? (We would have to add this qualifier: the task executor receives no learning benefit from repeating the task 100 times; we exclude, then, the possibility that later task executions are finished more quickly due to experience completing the same task over and over again). If the task estimator has a very high confidence that the most likely outcome will occur, then perhaps she might answer, “I think 98 out of 100 times the task will complete in 40 hours. Only one time out of 100 will the task take only 20 hours to complete, and only one time out of 100 will the task take 80 hours to complete.” This answer accounts for the 3-point estimate values and also demonstrates the extreme confidence in the 40 hour, most likely outcome. 5 Why 100 times? Why not 1000 or 10,000 times? When we spread the three outcomes of a 3-point estimate over just 100 task iterations, we obtain at least some level of uncertainty even if 98 of 100 iterations result in the most likely outcome value. Had we used 1000+ iterations, we would essentially remove all uncertainty if 998 iterations finished according to the most likely outcome, and only 1 iteration finished with the optimistic outcome and 1 iteration finished with the pessimistic outcome. Using 100 iterations is admittedly arbitrary, but it is also useful. 6 © Copyright 2014 – William W. Davis – DRAFT COPY If we want to calculate the standard deviation of this response, we can place 100 values into 100 cells in a spreadsheet, where only one value is equal to 20, one value is equal to 80, and the other 98 values are all equal to 40. The distribution curve of the data set would look like this: Chart 1 – Normal distribution curve for high confidence, (20, 40, 80) estimate Then, using Excel’s STDEV.P function6, we would calculate the standard deviation as 4.4677. Notably, this standard deviation is even lower than the PERT standard deviation of 10, but that makes sense because the task estimator’s answer expresses extreme confidence that there will be very little variability apart from the most likely outcome. Now, using Excel’s NORM.INV function, we can obtain a risk-adjusted, statistically sound, task estimate. The NORM.INV function requires three inputs: the desired confidence level, the mean, and the standard deviation of the normal curve. For a 95% confident estimate, the function would look like this: 𝑁𝑂𝑅𝑀. 𝐼𝑁𝑉(0.95, 46.667, 4.4677) = 54.015 ℎ𝑜𝑢𝑟𝑠 6 We use STDEV.P instead of STDEV.S to obtain a smaller, more conservative standard deviation value, fully recognizing that the 3-point estimate is really a sample set, not the full population of possible task outcomes which is continuous, not deterministic. Again, our goal is not to be absolute precise, but rational and useful. 7 © Copyright 2014 – William W. Davis – DRAFT COPY This estimate is considerably lower than the 66.667 hours that we obtained using the PERT estimate and the PERT standard deviation with a 95% confidence level! In the above NORM.INV calculation, we used the mean (µ) of the 3-point estimate, 46.667. But the task estimator is nearly certain that the most likely outcome will occur during task execution. Instead of using the mean of the 3-point estimate, what would happen if we used the most likely outcome as a surrogate for the mean? Below, we replace the mean (46.667) with the most likely outcome (40). 𝑁𝑂𝑅𝑀. 𝐼𝑁𝑉(0.95, 40, 4.4677) = 47.349 ℎ𝑜𝑢𝑟𝑠 At 95% confidence, the estimate declines even further, to a little more than 47 hours. So, at this point, we have three task estimates. All three estimates use the same 3-points (20, 40, 80) to create an adjusted estimate standard deviation. Using Excel’s NORM.INV function with 95% confidence, we obtained two different estimates depending on whether we use the mean of the 3-point estimate, or whether we use the most likely outcome as a surrogate for the mean. The range between the three estimates is about 20 hours – a lot of time for a project most likely to finish in 40 hours and no more than 80 hours! Which method is correct? Or rather, which answer is more suitable, defensible, rational, and useful? We advocate that the smaller estimate of 47 hours is “most correct.” Why? Since the task estimator is nearly certain that the outcome will be 40 hours, it seems reasonable that at 95% confidence along a continuous distribution (rather than across just three, discreet points like what we asked our task estimator for), the result would not be that far away from the most likely outcome. There is very little risk that the task will extend to 80 hours, and the 47 hour estimate models this reality best. If the project manager selected a non-risk-adjusted, 67-hour PERT estimate, that project manager is likely going to waste or lock-up organizational resources that, instead, could be allocated to a different task on the project plan; the 67-hour estimate exposes the task to Parkinson’s Law, too. When the project manager uses the mean in the NORM.INV function, the resulting normal curve does a poor job of modeling the near-certainty of the most likely result. Even using the most likely outcome as a surrogate for the mean in the NORM.INV function holds that the most likely outcome is only 50% likely, but because of the way we adjusted the standard deviation, the normal curve has a sharp, vertical rise and fall, so probabilities to the right of the surrogate are, intuitively, very reasonable. What would happen if the task estimator had virtually no confidence that the most likely outcome would occur? Instead of near-certainty that 40 hours was going to be the most likely outcome, what if the probability distribution curve was essentially uniform, not normal? What if the task estimator said, “I 8 © Copyright 2014 – William W. Davis – DRAFT COPY think 33 of 100 task executions would complete in 20 hours, 33 executions would complete in 80 hours, and 34 executions would complete in 40 hours, thereby making 40 hours the ‘most likely’ outcome.” (To which we would add, “Barely!”). Using a spreadsheet, we could plug-in 100 values into 100 cells to find the standard deviation of a nearly uniform distribution result set using STDEV.P; the resulting standard deviation from those 100 data points is 24.828. The distribution curve would look like this: Chart 2 – Normal distribution curve for low confidence, (20, 40, 80) estimate Knowing the standard deviation, it is a simple matter to create a revised, risk-adjusted task estimate using the NORM.INV function (using the mean rather than the most likely surrogate): 𝑁𝑂𝑅𝑀. 𝐼𝑁𝑉(0.95, 46.667, 24.828) = 87.5054 ℎ𝑜𝑢𝑟𝑠 This result is even greater than our pessimistic estimate of 80 hours! The reason for this is the area under a normally-distributed curve will not fully disappear at either the optimistic or pessimistic values. Because the curve is so flat, a small portion of the curve is less than the optimistic value of 20 (it even enters negative territory), and another small portion of the curve is in excess of 80. Is this wrong, or bad? No. If a task estimator was so unconfident in the most likely value, how much confidence could a project 9 © Copyright 2014 – William W. Davis – DRAFT COPY manager have that the pessimistic estimate could not be exceeded? The notion that the pessimistic estimate could be exceeded in a situation where we have a uniform distribution of results is still suitable, defensible, rational and useful. We could also use the most likely outcome as a surrogate for the mean if we wanted to: 𝑁𝑂𝑅𝑀. 𝐼𝑁𝑉(0.95,40,24.828) = 80.8384 ℎ𝑜𝑢𝑟𝑠 This result may be preferable because it is closer to the pessimistic point-estimate. What would happen if the task estimator had a moderate amount of confidence that the most likely outcome would be correct? What if the task estimator said, “I think that out of 100 task executions, 10 of them would finish in 20 hours, 10 of them would finish in 80 hours, and 80 of them would finish in 40 hours”? Essentially, the task estimator is saying that 40 hours has a four-fifths chance of being exactly correct, and a nine-tenths chance of not being exceeded, which we translate into meaning, “moderately confident” that the most likely outcome will be “correct.” Once again, we could use a spreadsheet to enter 100 values into 100 cells on a worksheet, where 10 cells have a value of 20, 10 cells have a value of 80, and 80 cells have a value of 40: Chart 3 – Normal distribution curve for medium confidence, (20, 40, 80) estimate 10 © Copyright 2014 – William W. Davis – DRAFT COPY Then, we could use STDEV.P to find that the standard deviation for the data set is a nice, even integer: 14. Plugging this information into a NORM.INV function (using the mean), we could find the 95% probability of a risk-adjusted task that enjoys a moderate level of confidence that the most likely outcome will not be exceeded: 𝑁𝑂𝑅𝑀. 𝐼𝑁𝑉(0.95, 46.667, 14) = 69.695 ℎ𝑜𝑢𝑟𝑠 The risk-adjusted task with moderate confidence in the most likely outcome is now estimated at almost 70 hours with 95% confidence. If we wanted to use the most likely outcome surrogate instead of the mean, this would be our result: 𝑁𝑂𝑅𝑀. 𝐼𝑁𝑉(0.95, 40, 14) = 63.028 ℎ𝑜𝑢𝑟𝑠 Intuition suggests that 63 hours is a good estimate. If we want very high confidence that the task estimate will not be exceeded, and only 10% outcomes out of 100 will equal 80 hours (still assuming only three outcomes of 20, 40 and 80 are allowable), then we would want to estimate the task much higher than 40 hours, but not too near 80 hours, either, since we accept a 5% risk that our task estimate will be exceeded. Summary on risk-adjusted, probabilistic, task estimates What we have demonstrated so far is that, when using the normal distribution, PERT estimates by themselves do not have a high probability of creating estimates that will not likely be exceeded during actual task execution. Or, putting it another way, PERT estimates have a high probability of being exceeded during task execution. Using a PERT standard deviation can overcome the problem of PERT estimates with a high probabilities of being exceeded during the project execution phase, but even when we estimate adding two PERT standard deviations to the PERT estimate (to get the 95% confidence level), we fail to introduce the concept of risk-adjusted task estimates, and therefore run a risk of overestimating our tasks and wasting organizational resources. If we correspond a task’s most likely outcome with such subjective statements such as, “I am highly confident that this task will be completed within the most likely point-estimate,” or, “I have little confidence that this task will be completed within the most likely point-estimate”, then we can also correlate those statements to typified standard deviations for those tasks. By carefully shrinking or expanding the standard deviation (because we have / lack confidence, respectively), we can create riskadjusted, statistically-sound, probabilistic task estimates that are suitable, defensible, rational, and useful to the project manager and the whole organization. 11 © Copyright 2014 – William W. Davis – DRAFT COPY The basis for S-PERT Of course, actual work efforts are continuous variables, not discreet variables. Tasks on rare occasion finish according to the optimistic estimate, and they not infrequently finish greater than the most likely estimate. They even sometimes finish in a worst-case scenario that exceeds even the pessimistic estimate. PERT is generally accepted and widely used because it is relatively simple to obtain a 3-point estimate, plus the formula is easy to construct and use. PERT usually provides a rational (though flawed) basis for adding a small buffer to a task’s most likely estimate7. But as demonstrated in this whitepaper, that does not make it particularly useful to a project manager seeking to create a project schedule and budget. What we desire, then, is to construct a method of creating risk-adjusted task estimates by allowing the estimator to render a simple, subjective judgment on the relative confidence level in her estimates. That judgment determines the standard deviation for the normal curve. Then, we want to offer the task estimator different task estimates with various probabilities from which to choose, letting the task estimator decide the trade-off between high certainty / high estimate and low certainty / low estimate. Estimates may, for example, have a 99%, 95%, 90% or 80% probability of not being exceeded, where each choice results in a declining estimated work effort but an increasing probability that the estimated work effort will be exceeded during task execution. To begin constructing this new method, we first notice that Excel’s STDEV.P function obtains a standard deviation value for our 3-point estimate of (20, 40, 80) that is eerily similar to the standard deviation obtained from 100 values where the most likely value of 40 occurred 34 times, and both the optimistic and pessimistic values occurred 33 times, respectively. To refresh our memories, 𝑆𝑇𝐷𝐸𝑉. 𝑃(20, 40, 80) = 24.9444 We do not show the formula for running this same function over 100 cells as just described, but the resulting standard deviation from that exercise is 24.828. The 3-point estimate results in a standard deviation of a uniformly distributed data series. If we subtract the optimistic point-estimate from the pessimistic point-estimate, then divide the result into the standard deviation, we obtain a ratio that can be useful to us. This ratio can be used to adjust any 37 This isn’t always true, though. When the range between the optimistic and most likely point-estimates is the same as the range between the most likely and pessimistic point-estimates (such is the case when, for example, the 3-point estimate is 20, 40, 60), then the PERT formula results in a value no different from the most likely outcome. Interestingly, PERT estimates can create values LESS THAN the most likely outcome if the difference between the most likely and optimistic point-estimates is greater than the difference between the pessimistic and most likely point-estimates, which seems counter-intuitive. 12 © Copyright 2014 – William W. Davis – DRAFT COPY point estimate just as if the 3-points were uniformly distributed (that is, the task estimator had virtually no greater expectation that the most likely outcome would arise over either the optimistic or the pessimistic outcomes). Using the 24.9444 standard deviation from above, we obtain this ratio for the uniformly distributed 3-point estimate: 24.9444 = 0.41573 (80 − 20) To see how this ratio differs slightly when we use a different, less-volatile, 3-point estimate, let us perform the same calculations using this 3-point estimate8: (30, 40, 50). First, we start with finding the standard deviation of the population which results in a value representing a uniformly distributed data series: 𝑆𝑇𝐷𝐸𝑉. 𝑃(30, 40, 50) = 8.16497 Then, we find the ratio between the 3-point estimate range and the standard deviation for the 3-point estimate: 8.16497 = 0.40825 (50 − 30) Somewhat cavalierly, we make this conclusion: the ratio between the standard deviation of a uniform data series and the range of that series is approximately 0.41. We could have said 0.415 or something else, but we remind ourselves that we are striving to be accurate without necessarily being overly precise. For our purposes, we can use 0.41 as a constant ratio factor to estimate the standard deviation of any 3point estimate range that has low confidence in the most likely outcome. We simply find the range of the 3-point estimate, multiply it by 0.41, and the result is an approximation of the standard deviation of a uniformly distributed data series. This approximated standard deviation becomes input into Excel’s NORM.DIST and NORM.INV functions to obtain probabilistic outputs according to the normal distribution curve. 8 We could have used any, less-volatile, 3-point estimate here. We selected the (20, 40, 80) and (30, 40, 50) 3point estimates because many IT project managers like to estimate tasks between 4-40 hours or 8-80 hours in order to obtain more easily monitored and controlled project tasks. If a project manager is estimating tasks with estimating values that have much higher values or ranges than used in this example, either those estimates should be converted to a different unit-of-measure that more closely aligns with this whitepaper’s example, or the constant ratios used in S-PERT should be adjusted to account for the project manager’s different task estimation scenario. 13 © Copyright 2014 – William W. Davis – DRAFT COPY Now we need to find a similar, constant, ratio value for instances when we have very high confidence in the most likely outcome – where 98 times out of 100 we expect the task to be completed by the most likely point-estimate (when our only other choices are an optimistic outcome or a pessimistic one) . To find that high-confidence-in-the-most-likely-outcome ratio, we use Excel’s STDEV.P function and specify an input range of 100 cells, where 98 of those cells have a value of 40, and only one cell has a value of either 20 or 30 (because our two, 3-point, sample estimates are [20, 40, 80] and [30, 40, 50]), and only one cell has a value of either 80 or 50. When we do this, the resulting standard deviations are: 4.46767 for the (20, 40, 80) 3-point estimate 1.41421 for the (30, 40, 50) 3-point estimate Then we divide those two standard deviations by the respective range of our two, 3-point estimates. The result we get is: 4.46767 = 0.07446 (80 − 20) 1.41421 = 0.07071 (50 − 30) We see that both values are approximately equal to 0.0725. So, with the same cavalier attitude as before, we conclude that the ratio between the standard deviation of a data series that has a high degree of confidence in a single value (that is, the most likely outcome) and the range of that data series is approximated by the constant 0.0725. We can obtain a standard deviation for any 3-point estimate where we have a high confidence in the most likely point-estimate by dividing the range of the 3-point estimate by our constant value of 0.0725. With the low-confidence and high-confidence adjustment constants anchored to our assumptions about the likelihood the actual work effort will be equal to our most likely point-estimate, we can easily create gradients between these two extremes. To create a ratio constant for a “medium confidence” judgment, we can just take the average of 0.41 and 0.0725, which is 0.241259. We can create “medium-high” and “medium-low” confidence constants by splitting the difference between our new “medium confidence” 9 Or, we could follow the same process of entering 100 values into a spreadsheet, where 10 values are equal to the optimistic value, 10 values are equal to the pessimistic value, and the remaining 80 values are equal to the most likely outcome. When we followed that process with our (20, 40, 80) 3-point estimate, the ratio result for our “medium confidence” level was 0.2333. This ratio value is only slightly different than simply taking the average between 0.41 (low confidence ratio) and 0.0725 (high confidence ratio). This small difference is immaterial to us, though, as we are seeking relative accuracy and usefulness and not precision. 14 © Copyright 2014 – William W. Davis – DRAFT COPY constant and the high confidence and low confidence constants, respectively. The end result of this effort is this list of subjective confidence levels and their corresponding standard deviation ratio constants: S-PERT Confidence Ratios Constant High confidence 0.0725 Medium-high confidence 0.156875 Medium confidence 0.24125 Medium-low confidence 0.325625 Low confidence 0.41 Table A – S-PERT Confidence Ratio Set Using this chart of constant ratio values, we can create a risk-adjusted standard deviation for any 3-point estimate. To help task estimators get an objective feel for what these subjective confidence terms mean, we suggest the following objective interpretations, which are not really very precise translations, but they are accurate enough to be useful to both the task estimator and the S-PERT estimation formulas. High means 99 times out of 100, the most likely outcome should not be exceeded Medium-high means 9 times out 100, the most likely outcome should not be exceeded Medium means 4 out of 5 times, the most likely outcome should not be exceeded Medium-low means 3 out of 4 times, the most likely outcome should not be exceeded Low means that the most likely outcome is scarcely more likely to occur than either the optimistic or the pessimistic point-estimates S-PERT estimates and probabilities Now that we have a method of creating risk-adjusted standard deviations, it is a simple matter to use riskadjusted standard deviations in Excel’s NORM.INV function to obtain a risk-adjusted, probabilistic task estimates. Using our (20, 40, 80) 3-point estimate, we can construct a table of task estimation choices that vary only in our willingness to accept the risk that our estimate may be wrong, that is, that the actual hours spent on the task exceeds our estimate of that task. For example, if we say that our 3-point estimate of (20, 40, 80) has a medium level of confidence in the most likely outcome, we can then multiply the 3-point estimate range (80 – 20 = 60) by the constant value 0.24125 (from the chart we just generated) to obtain the risk-adjusted standard deviation of 14.475. Then, 15 © Copyright 2014 – William W. Davis – DRAFT COPY we can use the NORM.INV function to obtain different task estimates with corresponding probabilities. Below, we calculate four, risk-adjusted, task estimates with probabilities of 99%, 95%, 90% and 80%10. 𝑁𝑂𝑅𝑀. 𝐼𝑁𝑉(0.99, 40, 14.475) = 73.67 ℎ𝑜𝑢𝑟𝑠 𝑁𝑂𝑅𝑀. 𝐼𝑁𝑉(0.95, 40, 14.475) = 63.81 ℎ𝑜𝑢𝑟𝑠 𝑁𝑂𝑅𝑀. 𝐼𝑁𝑉(0.90, 40, 14.475) = 58.55 ℎ𝑜𝑢𝑟𝑠 𝑁𝑂𝑅𝑀. 𝐼𝑁𝑉(0.80, 40, 14.475) = 52.18 ℎ𝑜𝑢𝑟𝑠 Here is how we interpret the results. For the given task with a 3-point estimate of (20, 40, 80), where we have medium confidence in the most likely outcome, if we: Estimate 73.7 hours, there is a 1% chance that the actual effort will exceed our estimate Estimate 63.8 hours, there is a 5% chance that the actual effort will exceed our estimate Estimate 58.6 hours, there is a 10% chance that the actual effort will exceed our estimate Estimate 52.2 hours, there is a 20% chance that the actual effort will exceed our estimate This is how S-PERT works. S-PERT starts with approximating the risk-adjusted standard deviation according to the task estimator’s confidence in the most likely outcome. Then, using Excel’s NORM.INV function, S-PERT offers the task estimator a set of estimation choices, where each estimate is tied to a specific probability that the estimate may not cover all the work effort necessary to complete the task. Whereas PERT only requires the task estimator to create a 3-point estimate, S-PERT requires the same 3point estimate, but it also requires the estimator to do two more things: 1) assess the relative confidence level in the most likely outcome, and 2) choose how much certainty that the estimate will not be exceeded during the actual task execution. In exchange for this extra effort, S-PERT offers project managers much greater understanding and insight over the task estimation process, and a specific level of confidence in every task estimate (and, by extension, to the entire project schedule). As long as the 3-point estimates are reasonable and the confidence level in the most likely outcome is accurately assessed, the task estimator can have a reasonably accurate, if imperfectly precise, measure of uncertainty for tasks estimated using S-PERT. In a nutshell, then, this is the S-PERT methodology: 1. Obtain a 3-point task estimate 10 We use the most likely outcome as a surrogate for the mean of the 3-point estimate. 16 © Copyright 2014 – William W. Davis – DRAFT COPY 2. Assess the relative likelihood that the most likely outcome will occur during task execution 3. Choose the desired probability level that the resulting task estimate will not be exceeded during task execution Avoiding 3-point estimations Not every project manager uses 3-point estimates when doing task estimation. Obtaining a 3-point estimate for tasks requires greater effort on the part of the task estimator and project manager, and project managers may face resistance when they press task estimators for three estimates for every task on the project plan. In these cases, can project managers still use S-PERT? Yes. Instead of obtaining 3-point estimates from task estimators, project managers can apply a heuristic to each single-point estimate they receive so the S-PERT methodology can still be used. For instance, a project manager may choose to discount the single-point estimate by x percent to obtain the optimistic value. Similarly, a project manager can surcharge the single-point estimate by multiplying it by y to create a pessimistic value. As an example, if a task estimator’s single-point estimate is 20 hours, the project manager could discount that estimate by 20% to obtain the optimistic value (20 hours – 4 hour discount = 16 hours), and add a 50% surcharge to find the pessimistic value (20 hours + 10 hour surcharge = 30 hours), creating a normal distribution curve that is skewed to the right. Using expert judgment, the project manager could further tweak the heuristic results for specific tasks he knows have expected estimation ranges which are greater than or less than the estimation ranges obtained by using a heuristic. “But I’ve never had a problem with PERT estimating!” Some project managers reading this whitepaper who use PERT estimation (without using the PERT standard deviation) may say, “Your examples show the probability of PERT estimation success to be less than 50%, but I’ve never had a problem with getting my project tasks completed on-time and on-budget just by using the PERT formula alone, without calculating standard deviations or using statistical functions in Excel. Why would I switch to S-PERT? I don’t have a problem with PERT!” There are several possible explanations for why project managers experience little problem with actual task efforts exceeding the PERT estimates of those tasks. The first explanation is that project managers using PERT are receiving knowingly inflated, 3-point estimates from task estimators. Instead of providing project managers with a true, most likely point17 © Copyright 2014 – William W. Davis – DRAFT COPY estimate, task estimators may be providing project managers with inflated, unlikely point-estimates knowing that, if the inflated task estimates are accepted, those tasks have a large, built-in buffer of time for task executors to consume. Parkinson’s Law then consumes the built-in buffer, so project tasks seem to finish exactly according to the PERT estimate (or very close to it), giving the appearance that PERT estimation was highly accurate. The second explanation is similar to the first one: project managers are receiving flawed 3-point estimates which do not align to the actual work effort needed to complete those tasks. Here, the task estimator is not intending to inflate the 3-point estimate – but the flawed expert judgment of the task estimator leads to the same result: a warrantless, more generous request for time that leads to a PERT estimate with a high probability of equaling or exceeding the actual work effort necessary to complete those PERT-estimated tasks. And Parkinson’s Law also consumes the extra time allocated for the task. A third explanation is that the normal distribution curve is extremely skewed to the left, rather than to the right as it would normally be expected for a project task. If this is the case, then the PERT estimate will enjoy uncharacteristically high probability of not being exceeded by the actual task execution. This would be an unlikely explanation, however, because rarely will the likelihood of a task be to finish sooner than the most likely outcome rather for the task to finish later than the most likely outcome. If a project manager rarely has a problem with PERT estimates being exceeded by the project team during the project execution phase, the best explanation is that the most likely outcome contained in PERT’s 3point estimate is not really the most likely outcome for completing the task. “I’m not good with statistics. S-PERT sounds too confusing to use.” Once a project manager receives a S-PERT template with the formulas already built-in to the template, using S-PERT is as easy as adding PERT’s 3-point estimates into the spreadsheet for each task, then choosing a level of confidence in the most likely outcome by clicking on the “Most Likely Confidence” column and selecting from among five different, pre-defined levels of confidence (high, medium-high, medium, medium-low, and low). The S-PERT template automatically creates a list of task estimate choices, each with a different probability level that the actual task execution will finish on or before the given estimate. Below is an example of the S-PERT template. To use the template, the project manager first adds PERT’s 3-point estimates into the first three yellow columns. Then in the 4th yellow column (“Most Likely Confidence”), the project manager chooses a confidence value corresponding to the most likely 18 © Copyright 2014 – William W. Davis – DRAFT COPY outcome. The S-PERT formulas create five different estimates for each task. The project manager can choose any of the five S-PERT estimates to place on the project plan. The largest estimate is associated with a 99% probability that the task execution will finish on or before the given estimate, and the smallest estimate has only a 75% probability that the task execution will finish on or before the given estimate. Between these two values are three other estimates for a 95%, 90%, 85% and 80% probability of not having the task estimate being exceeded during actual task execution. ID 1 2 3 4 5 6 7 8 9 10 11 12 Optimistic Most Likely Pessimistic Most Likely Confidence 20 40 80 High confidence 20 40 80 Medium confidence 20 40 80 Low confidence 24 40 56 High confidence 24 40 56 Medium confidence 24 40 56 Low confidence 24 40 120 High confidence 24 40 120 Medium confidence 24 40 120 Low confidence 24 32 56 High confidence 24 32 56 Medium confidence 24 32 56 Low confidence Adj StDev 4.35 14.475 24.6 2.32 7.72 13.12 6.96 23.16 39.36 2.32 7.72 13.12 99% 50.12 73.67 97.23 45.40 57.96 70.52 56.19 93.88 131.57 37.40 49.96 62.52 S-PERT Probabilistic Estimates 95% 90% 85% 80% 47.16 45.57 44.51 43.66 63.81 58.55 55.00 52.18 80.46 71.53 65.50 60.70 43.82 42.97 42.40 41.95 52.70 49.89 48.00 46.50 61.58 56.81 53.60 51.04 51.45 48.92 47.21 45.86 78.09 69.68 64.00 59.49 104.74 90.44 80.79 73.13 35.82 34.97 34.40 33.95 44.70 41.89 40.00 38.50 53.58 48.81 45.60 43.04 75% 42.93 49.76 56.59 41.56 45.21 48.85 44.69 55.62 66.55 33.56 37.21 40.85 Table B – S-PERT Template Example Since these calculations are all done in a spreadsheet, it is an easy matter to add other probability-based estimates to the S-PERT template – like, say, adding task estimates with 98% or 70% probability of not being exceeded. Even the five pre-defined values for the “Most Likely Confidence” column can be modified to either reduce the number of choices or to expand them. And the S-PERT constant ratio set from Table A can be modified to obtain better-fitting, normal distribution curves resulting in moreaccurate, probability-based task estimates. Another way to use the S-PERT template is to plug-in a discreet value – any estimate of how long the task will take to complete – into the “Planning Estimate” column, and let the S-PERT template calculate the likelihood that the task will be completed on or before the discreet estimate. The task estimator can easily see how much risk she is accepting for each task estimate she chooses to model. 19 © Copyright 2014 – William W. Davis – DRAFT COPY ID 1 2 3 4 5 6 7 8 9 10 11 12 Optimistic Most Likely Pessimistic Most Likely Confidence 20 40 80 High confidence 20 40 80 Medium confidence 20 40 80 Low confidence 24 40 56 High confidence 24 40 56 Medium confidence 24 40 56 Low confidence 24 40 120 High confidence 24 40 120 Medium confidence 24 40 120 Low confidence 24 32 56 High confidence 24 32 56 Medium confidence 24 32 56 Low confidence Adj StDev 4.35 14.475 24.6 2.32 7.72 13.12 6.96 23.16 39.36 2.32 7.72 13.12 Planning S-PERT Estimate Likelihood 48 96.70% 48 70.98% 48 62.75% 48 99.97% 48 85.00% 48 72.90% 48 87.48% 48 63.51% 48 58.05% 36 95.77% 36 69.78% 36 61.98% Table C – S-PERT Template Example Using S-PERT during project negotiations Every project manager is well-acquainted with how task estimates and project finish dates are negotiated with project sponsors, business leads, and IT functional management. S-PERT can provide a powerful new tool to project managers to very clearly and concretely explain the trade-off between planning for an earlier finish date for a task (or a project) and the likelihood that the planning estimate will be exceeded by the project team during the project execution phase. When to use S-PERT (and when not to) S-PERT is a probabilistic method of task estimation based on the normal distribution curve. Therefore, it is suitable for use on tasks that have some level of uncertainty for how long they will take to complete. When a task has a known, definitive amount of time that it will take to complete, use of S-PERT is unnecessary and, in fact, may create trouble if the task estimator tries to use it. For example, if an external vendor plans to gather business requirements during an onsite, 3-day visit, usually (but not always) there is no uncertainty about how long the task will take to complete. The vendor has purchased tickets and it is generally infeasible that they will change their travel plans and stay longer than the 3-day engagement. We would not need to use S-PERT to estimate this task since there is no reasonable uncertainty surrounding it. S-PERT is only suitable for task estimation where there is some measure of reasonable uncertainty about when a task will finish, and that uncertainty is material to the 20 © Copyright 2014 – William W. Davis – DRAFT COPY project planning effort. If a task’s uncertainty cannot be rationally modeled using the normal distribution curve, then S-PERT should not be used to create risk-adjusted, probabilistic, task estimates11. Given the problem of distribution curve fitting using just three data points (optimistic, most likely, pessimistic), and that the most likely outcome is often not the mean, we conclude that S-PERT is most useful for estimating probabilistic values that are at least one standard deviation to the right of the most likely point-estimate. When S-PERT is used to obtain probabilities one standard deviation to the right of the most likely point-estimate, the problem of fitting a normal distribution curve based on only three data points becomes less of a concern. S-PERT and the Uniform Scheduling Method S-PERT (and PERT, and many other estimation methods) all suffer from Parkinson’s Law, that is, the propensity that work expands to fill the time allocated for it. If a project manager decides that he wants to be virtually 100% certain in his project schedule, he may be tempted to use the S-PERT estimation technique to estimate all tasks on the project schedule (or, at the least, all tasks on the critical path) with 99% probability of not being exceeded. Doing this, the project manager will be correct that there is a very slight statistical likelihood that the project will not finish on-time (and on-budget, too, if all project costs are directly correlated to the work efforts on the schedule). But this would be a mistake! Planning all tasks with 99% confidence would invariably lead to wasting project resources, and Parkinson’s Law would make this waste hard for the project manager and organizational leaders to detect. In most cases, we expect that project tasks will finish on or before their most likely point-estimate. If that were not the case, then the most likely point-estimate is meaningless. But the most likely outcome is a deterministic value, yet project performance leads to continuous outcomes. The issue is, irrespective of what estimate we choose, there will always be some area under the probability curve and to the right of the estimated value. This is the area of task scheduling error.12 11 Or, S-PERT’s formulas and ratio lookup table should be modified to operate with another, suitable distribution curve. S-PERT was not developed with any other distribution curve other than the normal distribution curve. Extensive testing would need to be done, firstly, before S-PERT could be used with another probability distribution curve. 12 Actually, estimation error can occur to the left of the most likely value, too. When a project task finishes sooner than its most likely outcome, the area to the left of the most likely outcome is considered part of the estimation error, too. However, because of Parkinson’s Law and the need for simplicity and usefulness, we disregard the estimation error to the left of the most likely outcome, believing that that portion of estimation error is much less significant than the estimation error found to the right of the most likely or expected outcome. 21 © Copyright 2014 – William W. Davis – DRAFT COPY Every schedule has some area of task scheduling error. Another goal we should have is to use a method for anticipating how much estimation error we need to include in the project contingency plan. We want to plan for neither too much nor too little contingency so that the project finishes successfully, onschedule and on-budget. One strategy for creating a project schedule contingency is to use the Unified Scheduling Method 13 (USM). USM recognizes that every task has the potential of estimation error, but during project execution, not all tasks will realize that estimation error. Put another way, even though every task has some probability that the task estimate will be exceeded, not every task will have its estimate exceeded when the project is executed. A few tasks may finish earlier than expected (yes, it does happen!), many or most tasks will finish according to the estimate in the project plan, and some subset of all project tasks will take longer than expected. This concept is easily demonstrated. Suppose we have ten tasks to complete. We estimate that each task will take one day to complete. Then we say that there is only a 50% probability that our estimate will be correct; when we are incorrect, the task will take two days to complete. Then we toss a coin ten times, one toss to represent one task execution during the project execution phase. When the flipped coin is heads, the actual time it takes to complete the task is one day. If the flipped coin is tails, the actual time it takes to complete the task is two days. Although all ten tasks have some estimation error potential, not all ten tasks will realize that error (or at least that won’t likely happen, since the probability having our flipped coin land tails ten consecutive times is less than 0.01%). USM relies on the binomial distribution to estimate how many times our task will be exceeded. With 95% confidence and using Excel’s BINOM.INV function, we find that there exists the probability that 8 or fewer tasks will exceed their estimates. We already fully expected that half of our tasks would exceed their estimates since our 1-day estimates had only a 50% confidence level. With 100% confidence, we could guard against schedule failure by estimating 2-days for every task (20 days total for all ten tasks), but doing so wastes unneeded resources any time the flipped coin is heads. With 95% confidence, the BINOM.INV function estimates that no more than eight of our tasks will exceed our 1-day estimate; it is quite possible that fewer than eight tasks will exceed the 1-day estimate. Therefore, we don’t need to create a project schedule with 10 anticipated days plus another 10 days of schedule contingency to cover all the estimation errors of all ten tasks. With 95% confidence in our project contingency budget, we can 13 The Unified Scheduling Method was developed by Dr. Homayoun Khamooshi and Dr. Denis F. Cioffi from The George Washington University. 22 © Copyright 2014 – William W. Davis – DRAFT COPY add 8 days to the project schedule contingency, saving our project from budgeting those 2 extra buffer days. The fuller explanation of USM is outside the scope of this whitepaper, but the reader should understand that it is not wise to create project schedules with 99% or 100% confidence in the estimation of every task on the schedule (unless the project team is not given any project contingency budget to manage, in which case estimating with 100% confidence may not be such a bad idea!). Rather, the project manager should create a project schedule that: Guards against making repeated scheduling changes and running over-budget and/or overschedule due to underestimation Reduces needlessly locking-up project resources and potentially activating Parkinson’s Law due to overestimation Provides a suitable contingency to cover all the expected estimation errors that the project will likely encounter Conclusion Statistical PERT, or S-PERT, is a risk-based, statistical approach to estimating project tasks. S-PERT allows task estimators to provide a subjective opinion on how likely is the most likely outcome. S-PERT converts a task estimator’s subjective opinion into an adjustment of the standard deviation for a normally distributed probability curve associated with the task’s 3-point PERT estimate. Then, S-PERT offers project managers and decision-makers the opportunity to select a task estimate that corresponds to a specific level of certainty that the task estimate will not be exceeded during the project execution phase. S-PERT gives project managers a method of making rational and accurate predictions about their estimates. S-PERT can be used with the Unified Scheduling Method to calculate a project contingency budget that guards against estimation errors, so it is unnecessary to schedule all project tasks with very high certainty probabilities. 23 © Copyright 2014 – William W. Davis – DRAFT COPY Bibliography There are no sources in the current document. 24 © Copyright 2014 – William W. Davis – DRAFT COPY