SuperTask – building to a generic execution framework

advertisement

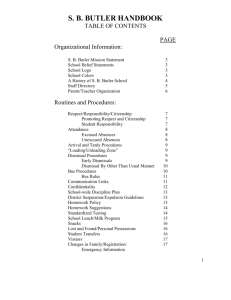

Matias Carrasco Kind – NCSA
Gregory Dubois-Felsmann – IPAC

Some desiderata we had in mind
• Allow units of work to be invoked through a generic programmatic interface
• Allow sequences of work to be specified either programmatically or in a table-driven way
• Allow units of work to be surrounded / decorated with a variety of monitoring functions
• Allow units of work to be invoked from multiple sources:
  – Command line
  – Callbacks in the SUI
  – QA harness
  – Production system

Existing Task framework
• Strong abstraction for establishing a hierarchy of units of work and the configurations associated with them
  – Tasks can have subtasks
  – The configuration mechanism is tightly tied to the Task structure
• Weak abstraction for defining the flow of data from Task to Task
  – The operate-on-data API is unique to each Task
    • Common name (“run()”), but not a true abstraction
  – Name-value-pair interface (“Struct”) for returning data from a Task
• Tasks operate on Python-domain objects
  – Tasks generally do not do I/O, even through the Butler, except…
• CmdLineTask (a subclass of Task) provides a base class for Tasks that do I/O, plus the code needed to invoke them as Unix commands

Why is it like this?
• Tasks can be seen almost as free functions with configuration
• There were reasons why a previous framework was replaced:
  – Make the inputs to a Task explicit at the code level
    • Outputs are more opaque
  – Make it possible to invoke a Task from a Python prompt on objects already in the interpreter
    • Very debugging-friendly
  – Make it easy to invoke a set of Tasks interactively without a complex control system

Concerns
• Some (known) consequences of this design
  – No way for a superior Task to invoke a subtask without developing a dependency on the specific API of that subtask
  – Not obvious how to substitute a subtask in an existing workflow with one that does the same job but requires additional / different ancillary inputs; this seems to require modifications in every superior Task that uses it
    • Use case: “transform objects of types A and B into an object of type X”
      – The existing Task design mandates the interface “run( A a, B b )”, returning a Struct containing X.
      – What if that process requires calibration constants, retrieved from a database, as inputs? The existing Task mechanism suggests “run( A a, B b, Calib1 c1 )”.
      – What if I want to replace that with a different calibration that takes two sets of constants, “run( A a, B b, Calib2 c2, Calib3 c3 )”? Now I have to modify the calling Task’s actual code to introduce this, and the calling Task may have to carry all three calibration inputs in its own run() in order to provide a run-time switch.
  – No simple way to insert an extra (passive) step into a pipeline without actually editing the run() code in the superior Task
  – No generic way to instrument the calls between Tasks to extract provenance information, or to apply wrappers that spy on the pipeline's performance or on the data flowing between Tasks

Concerns…
  – Superior Tasks often seem to contain “glue code” that unpacks or modifies data produced by one Task before passing it to the next; each case seems unique, with no general pattern
    • There is no real architectural barrier to this; superficially, the design seems to fail to encourage modularity, though I admit this is debatable
  – No obvious generic way to force a pipeline built in this manner to persist its intermediates, as they exist only as transient variables in the run() method of superior Tasks
    • It is therefore difficult to support checkpointing (“freeze drying”) of pipeline states, in terms of inputs and outputs, to allow focused re-runs of only the failing steps.
    • Checkpointing the entire interpreter state may be an alternative.
  – No place to put generic code providing responses to exceptions
    • This is both a plus and a minus: in many cases, Task-specific responses are appropriate
  – The vision for composing a pipeline out of Tasks requires the hierarchical superiors to contain procedural code that is specific to their subtasks, and nothing architectural prevents this pattern from being replicated all the way up to the highest levels of a workflow.
    • The only alternative provided is to stop at the CmdLineTask level and compose the higher levels with a workflow system that runs Unix commands.
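To make the substitution and glue-code concerns concrete, here is a minimal sketch in plain Python. Everything in it is hypothetical: `OneCalibTask`, `TwoCalibTask`, `ParentTask`, the `useTwoCalibs` config key, and the arithmetic all stand in for real Tasks and calibrations, and `types.SimpleNamespace` plays the role of Struct. The sketch shows three things. The parent's run() must accept the union of every calibration input any alternative might need. It must branch on each subtask's specific signature. Its intermediate result exists only as a local variable.

```python
from types import SimpleNamespace as Struct  # stand-in for the Task "Struct"


class OneCalibTask:
    """Hypothetical subtask: transforms A and B into X using one calibration."""

    def run(self, a, b, c1):
        return Struct(x=(a + b) * c1)


class TwoCalibTask:
    """Hypothetical replacement that does the same job with two calibrations."""

    def run(self, a, b, c2, c3):
        return Struct(x=(a + b) * c2 + c3)


class ParentTask:
    def __init__(self, config):
        # The choice of subtask is driven by configuration...
        self.use_two_calibs = config["useTwoCalibs"]
        self.transform = TwoCalibTask() if self.use_two_calibs else OneCalibTask()

    def run(self, a, b, c1=None, c2=None, c3=None):
        # ...but the parent's own run() must carry every calibration that any
        # alternative might need, and branch on the subtask's specific signature.
        if self.use_two_calibs:
            x = self.transform.run(a, b, c2, c3).x
        else:
            x = self.transform.run(a, b, c1).x
        # Glue code: x lives only in this local variable, so nothing outside
        # run() can persist, checkpoint, or instrument it.
        return Struct(y=x * 2)


print(ParentTask({"useTwoCalibs": False}).run(1, 2, c1=10).y)  # 60
print(ParentTask({"useTwoCalibs": True}).run(1, 2, c2=2, c3=5).y)  # 22
```

Adding a third calibration scheme would mean editing `ParentTask.run()` again, which is the coupling the concerns above describe.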
Concerns…
• The Task-invocation API that supports I/O (“run( dataRef )”) is tangled with the code for running from the command line; there is no easy way to invoke an I/O-performing Task in any other context

Also… but not addressed today…
• No structured way to define configurations that simultaneously affect a set of Tasks

Step 1
• Refactor CmdLineTask
  – Separate the I/O-performing Task-invocation API (“run( dataRef )”) from the specifics of running from the command line (parsing filenames and POSIX arguments)
• How?
  – Introduce an abstraction (provisionally called “SuperTask”) that provides the invocation interface:
        class SuperTask(Task):
            def execute(self, dataRef): ...
  – Introduce the concept of an “Activator”, which invokes a SuperTask
    • The Activator supplies the Butler, sets up logging, loads configurations, etc.
  – Concrete Activators
    • Command line
    • Firefly plugin
    • QA harness
    • Designed to be open for extension in this direction
    • Not intended to be customized for specific applications

Writing execute() in a concrete leaf-node SuperTask
Recipe:
1. Retrieve inputs from the provided Butler interface
   – Provisionally a dataRef (see discussion later)
2. Invoke an explicit-arguments implementation of run()
   – run() may itself be a “leaf node” or may invoke one or more sub-Tasks (that are not SuperTasks)
3. Extract the outputs from the Struct returned by run() and write them to the Butler
This preserves the ability to invoke a Task on explicit objects, which supports debugging. Leaf-node SuperTasks are still usable as Tasks.
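The three-step recipe and the Activator role can be sketched as follows. This is a minimal illustration under stated assumptions, not the framework's API. `DictButler`, `DataRef`, `CalibrateTask`, the `TASKS` registry, the `cmd_line_activator` function, its `--id KEY=VALUE` / `--config NAME=VALUE` options, and the dataset names "raw", "flat", and "calexp" are all hypothetical stand-ins. The point is the layering: the Activator builds the Butler binding and the configuration, execute() performs the I/O, and run() stays callable on explicit objects.

```python
import argparse
from types import SimpleNamespace as Struct  # stand-in for the Task "Struct"


class DictButler:
    """Hypothetical in-memory Butler keyed by (datasetType, dataId)."""

    def __init__(self):
        self._store = {}

    @staticmethod
    def _key(datasetType, dataId):
        return (datasetType, tuple(sorted(dataId.items())))

    def get(self, datasetType, dataId):
        return self._store[self._key(datasetType, dataId)]

    def put(self, obj, datasetType, dataId):
        self._store[self._key(datasetType, dataId)] = obj


class DataRef:
    """Hypothetical dataRef: a Butler bound to a single dataId."""

    def __init__(self, butler, dataId):
        self.butler, self.dataId = butler, dataId

    def get(self, datasetType):
        return self.butler.get(datasetType, self.dataId)

    def put(self, obj, datasetType):
        self.butler.put(obj, datasetType, self.dataId)


class Task:
    def __init__(self, config=None):
        self.config = config or {}


class SuperTask(Task):
    def execute(self, dataRef):
        raise NotImplementedError


class CalibrateTask(SuperTask):
    def execute(self, dataRef):
        raw = dataRef.get("raw")              # 1. retrieve inputs
        flat = dataRef.get("flat")
        result = self.run(raw, flat)          # 2. explicit-arguments run()
        dataRef.put(result.calexp, "calexp")  # 3. write outputs
        return result

    def run(self, raw, flat):
        scale = float(self.config.get("scale", 1.0))
        return Struct(calexp=[scale * r / f for r, f in zip(raw, flat)])


TASKS = {"calibrate": CalibrateTask}  # hypothetical task-name registry


def cmd_line_activator(argv, butler):
    """Hypothetical Activator: parse arguments, configure, then call execute()."""
    parser = argparse.ArgumentParser(prog="cmdLineActivator")
    parser.add_argument("task_name", choices=sorted(TASKS))
    parser.add_argument("--id", nargs="+", default=[], metavar="KEY=VALUE")
    parser.add_argument("--config", nargs="+", default=[], metavar="NAME=VALUE")
    args = parser.parse_args(argv)
    # Assumes integer-valued dataId keys such as visit=1.
    dataId = {k: int(v) for k, v in (item.split("=", 1) for item in args.id)}
    config = dict(item.split("=", 1) for item in args.config)
    task = TASKS[args.task_name](config)
    return task.execute(DataRef(butler, dataId))


butler = DictButler()
butler.put([2.0, 4.0], "raw", {"visit": 1})
butler.put([2.0, 2.0], "flat", {"visit": 1})
cmd_line_activator(["calibrate", "--id", "visit=1", "--config", "scale=10"], butler)
print(butler.get("calexp", {"visit": 1}))  # [10.0, 20.0]
```

Because run() takes explicit arguments, `CalibrateTask().run([3.0], [3.0])` still works from an interpreter prompt without any Butler. That is the debugging property the recipe is meant to preserve.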
Running a SuperTask
• CmdLineActivator provides, for now, exactly the same argument parsing currently supplied by CmdLineTask, including configuration overrides
• Can be invoked directly as
      cmdLineActivator task-name --extra usual-arguments-and-options
• Can be wrapped, as at present, in a bin/task-name.py
• Other Activators will work in different ways
  – The Firefly Activator will get configuration overrides from JSON passed in from the JavaScript side

Butler setup
• It is the Activator’s responsibility to convert an I/O specification from the command line (or another source) into a configured Butler:
  – Single-file Butler (when it becomes available)
  – Directory-oriented Butler
  – Registry-based Butler
  – Butler that provides visibility into the Firefly server data model

Configuring SuperTasks
• SuperTasks are Tasks, and so are configurable in the same way

Running more than one SuperTask
• Apply the Composite Pattern!
• Create implementations of SuperTask that maintain a list of SuperTasks and run them
  – The argument to the parent execute() is passed in turn to each child SuperTask
  – Composite SuperTasks do not implement run()
• Basic run-tasks-in-sequence case: WorkFlowSeqTask
  – Provides an API
    • WorkFlowSeqTask.link( list-of-SuperTasks )
  – Can be set up by defining a subclass of WorkFlowSeqTask whose constructor establishes the children and calls link()
  – Can also be configuration-driven

Passing data among SuperTasks
• A SuperTask communicates with its successors in a sequence by writing data to its Butler
  – This requires modifying the Butler so that it can handle the concept of temporaries and/or deferred persistence
  – It enables decisions on persisting temporaries to be controlled from “outside” a configured workflow
  – SuperTask-level provenance can be maintained in a generic way
  – Diagnostic data on the inputs and outputs of the whole set of SuperTasks run in a process can be recorded and visualized as ASCII, as .dot files, etc.
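A composite SuperTask that passes one dataRef to each child in turn, with the children communicating only through the Butler, might look like the sketch below. `WorkFlowSeqTask` and `link()` follow the names on the slide, but their bodies are assumptions. `RecordingButler`, its `current_task` attribute, `SubtractBackground`, `Detect`, and the `to_dot()` helper are hypothetical. Because every read and write goes through the Butler, a generic log of dataset flow comes for free and can be rendered as Graphviz .dot text. The in-memory store also stands in for the "temporaries" the Butler would need to support.

```python
class RecordingButler:
    """Hypothetical in-memory Butler that also logs which task read/wrote what."""

    def __init__(self):
        self._store = {}
        self.log = []
        self.current_task = None  # set by the composite while a child runs

    @staticmethod
    def _key(datasetType, dataId):
        return (datasetType, tuple(sorted(dataId.items())))

    def _record(self, op, datasetType):
        if self.current_task is not None:  # ignore set-up and inspection calls
            self.log.append((self.current_task, op, datasetType))

    def get(self, datasetType, dataId):
        self._record("get", datasetType)
        return self._store[self._key(datasetType, dataId)]

    def put(self, obj, datasetType, dataId):
        self._record("put", datasetType)
        self._store[self._key(datasetType, dataId)] = obj


class DataRef:
    """Hypothetical dataRef: a Butler bound to a single dataId."""

    def __init__(self, butler, dataId):
        self.butler, self.dataId = butler, dataId

    def get(self, datasetType):
        return self.butler.get(datasetType, self.dataId)

    def put(self, obj, datasetType):
        self.butler.put(obj, datasetType, self.dataId)


class SuperTask:
    def execute(self, dataRef):
        raise NotImplementedError


class WorkFlowSeqTask(SuperTask):
    """Composite SuperTask: runs its children in order and has no run()."""

    def __init__(self):
        self.children = []

    def link(self, tasks):
        self.children.extend(tasks)
        return self

    def execute(self, dataRef):
        for child in self.children:
            dataRef.butler.current_task = type(child).__name__
            try:
                child.execute(dataRef)  # the same dataRef goes to every child
            finally:
                dataRef.butler.current_task = None


class SubtractBackground(SuperTask):
    def execute(self, dataRef):
        image = dataRef.get("calexp")
        dataRef.put([p - 1.0 for p in image], "bgSubtracted")  # a temporary


class Detect(SuperTask):
    def execute(self, dataRef):
        image = dataRef.get("bgSubtracted")
        dataRef.put([i for i, p in enumerate(image) if p > 2.0], "sources")


def to_dot(log):
    """Render the recorded dataset flow as Graphviz .dot text."""
    lines = ["digraph workflow {"]
    for task, op, dataset in log:
        src, dst = (dataset, task) if op == "get" else (task, dataset)
        lines.append(f'  "{src}" -> "{dst}";')
    lines.append("}")
    return "\n".join(lines)


butler = RecordingButler()
butler.put([1.0, 5.0, 2.0, 4.0], "calexp", {"visit": 1})
pipeline = WorkFlowSeqTask().link([SubtractBackground(), Detect()])
pipeline.execute(DataRef(butler, {"visit": 1}))
print(butler.get("sources", {"visit": 1}))  # [1, 3]
print(to_dot(butler.log))
```

Inserting an extra passive step is then a matter of adding another child to the `link()` list, with no edits to any other Task's code.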
Expressing the transformations that can be performed
• A key question:
  – What should the signature of execute() be?
  – A single dataRef (the initial provisional interface) is inadequate: it allows a transformation to be performed only if all of its inputs and outputs can be referenced by a single dataId. It handles:
    • 1:1 transformations, such as calibrating an image, where flats, etc. can be referred to by the same dataId as the input image
    • many:1 transformations, where the “output” dataId (e.g., a patch) can be used to retrieve a set of inputs from the Butler
  – These cases alone are not sufficient.
  – Step 2 in this project will be to review the universe of CmdLineTasks in more detail and understand what capabilities are needed.

Some next steps
• Elaborate the execute()/run() layering so that the Activator can ask “if I were to run you, what would you ask for?”, intercepting the generated Butler calls without invoking the algorithmic code in run()
• Investigate the ability to parallelize
• Investigate the ability to perform scatter-gather
• Ask whether the existing boundary between the CmdLineTask level and the Task level is in exactly the right place
  – Are there capabilities that could be incorporated into SuperTask and the execute() signature that would make this easier?
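One way to get the "what would you ask for?" behaviour is to split execute() into an input-reading phase, run(), and an output-writing phase. The Activator can then drive only the first phase against a probe dataRef that records requests instead of serving data. The method names `read_inputs()` and `write_outputs()`, `RecordingDataRef`, and `what_would_you_ask_for()` are hypothetical choices for this sketch. It shows one possible layering, not a settled design.

```python
from types import SimpleNamespace as Struct  # stand-in for the Task "Struct"


class SuperTask:
    """Hypothetical layering: execute() = read_inputs() + run() + write_outputs()."""

    def execute(self, dataRef):
        inputs = self.read_inputs(dataRef)
        result = self.run(**inputs)
        self.write_outputs(dataRef, result)
        return result


class CalibrateTask(SuperTask):
    def read_inputs(self, dataRef):
        return {"raw": dataRef.get("raw"), "flat": dataRef.get("flat")}

    def run(self, raw, flat):
        return Struct(calexp=[r / f for r, f in zip(raw, flat)])

    def write_outputs(self, dataRef, result):
        dataRef.put(result.calexp, "calexp")


class RecordingDataRef:
    """Hypothetical probe: records requested datasets instead of reading them."""

    def __init__(self, dataId):
        self.dataId = dataId
        self.requests = []

    def get(self, datasetType):
        self.requests.append((datasetType, dict(self.dataId)))
        return None  # placeholder: nothing is actually read


def what_would_you_ask_for(task, dataId):
    """Drive only the input phase; run() and write_outputs() are never called."""
    probe = RecordingDataRef(dataId)
    task.read_inputs(probe)
    return probe.requests


print(what_would_you_ask_for(CalibrateTask(), {"visit": 1}))
# [('raw', {'visit': 1}), ('flat', {'visit': 1})]
```

This only works while a task's requests do not depend on the values it reads. A task that inspects one input to decide which others to fetch would receive `None` from the probe, which is one reason the execute()/run() layering needs further elaboration.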