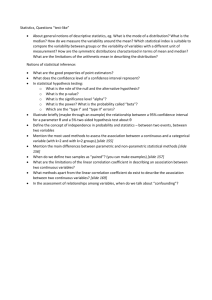

46-320-01 Tests and Measurements
Intersession 2006

More Correlation
- Spearman's rho: two sets of ranks
- Biserial correlation: continuous and artificial dichotomous variable
- Point biserial correlation: true dichotomous variable

Hypothesis Testing Review
- Independent and dependent variables
- In psychology we test hypotheses
- Null Hypothesis (H0): a statement of relationship between the IV and DV, usually a statement of no difference or no relationship; we assume there is no relationship between IV and DV
- Alternative/Research Hypothesis (Ha): states a relationship, or effect, of the IV on the DV

Hypothesis Examples
- H0: Men and women do not differ in IQ (men = women)
- Ha: Men and women do differ in IQ (men ≠ women)
- Any difference in value of the DV between the levels of the IV can be explained in 2 ways: the effect of the IV or sampling error

Hypothesis Testing with Correlations
- Null Hypothesis: there is no significant relationship between X and Y
- Alternative Hypothesis: there is a significant relationship between X and Y (r is significantly different from 0)
- We can use Appendix 3 (p. 641)
- $df = N - 2$
- $r_{\text{obs}} = .832$
- $r_{\text{crit}} = .195$
- Reject H0

Regression
- We know the degree to which 2 variables are related: correlation
- How do we predict the score on Y if we know X?
- Regression line
- Principle of least squares
- $Y' = a + bX$

Equation Explained
- $Y'$: predicted value of Y
- b: regression coefficient = slope
  - Describes how much change is expected in Y with one unit increase in X
- a: intercept = value of Y when X is 0

Line of Best Fit
- Actual (Y) and predicted (Y') scores are almost never the same
- Residual: $Y - Y'$
- $\sum (Y - Y')^2$: squared deviations from Y' at a minimum

Prediction
- Interpreting plot

More Correlation
- Standard error of estimate
- Coefficient of determination
- Coefficient of alienation
- Shrinkage
- Cross validation
- Correlation does not equal causation!
- Third variable

Multivariate Analysis
- 3 or more variables
- Many predictors, one outcome
- Linear regression: linear combination of variables
- $Y' = a + b_1 X_1 + b_2 X_2 + \dots + b_n X_n$
- Weights
- Raw regression coefficients
- Standardized regression coefficients
- Predictive power

More Multivariate
- Discriminant Analysis
  - Prediction of nominal category
  - Multiple discriminant analysis
- Factor Analysis
  - No criterion
  - Interrelation
  - Data reduction
  - Principal components
  - Factor loadings
  - Rotation

Reliability
- Assess sources of error
- Complex traits
- Relatively free from error = reliable
- Spearman, Thorndike 1904
- Coefficients
- Kuder and Richardson 1934
- Cronbach 1972 on IRT

True Score Reliability
- Error and true score
- $X = T + E$
- Random error produces a distribution
- Mean is the estimated true score

Reliability
- True score should not change with repeated administrations
- Standard error of measurement
- $S_m = S\sqrt{1 - r}$
- Larger = less reliable
- Use to create confidence intervals

Reliability
- Domain Sampling Model
- Shorter test estimate, but sample = error
- Reliability: usually expressed as a correlation
- Reliability: sampling distribution, correlations between all scores, average correlation

Reliability
- Reliability: $r = \dfrac{\sigma_T^2}{\sigma_X^2}$
- Percentage of observed variation attributable to variation in the true score
- r = .30: 70% of variance in scores due to random factors

Sources of Error
- Why are observed scores different from true scores?
- Situational factors
- Unrepresentative questions
- What else?

Test-Retest Reliability
- Error of repeated administration
- Correlation between 2 times
- Consider:
  - Carryover effects
  - Time interval
  - Changing characteristics

Parallel Forms Reliability
- 2 forms that measure the same thing
- Correlation between two forms
- Counterbalanced order
- Consider time interval
- Example: WRAT-3

Internal Consistency
- Split-half reliability
- Divide and correlate (internal consistency)
- Check method of dividing
- $r_{\text{corrected}} = \dfrac{2r}{1 + r}$
- Why use Spearman-Brown formula?
- Each test ½ length: decreases reliability
- Cronbach's alpha: unequal variances

Internal Consistency
- Intercorrelations among items within same test
- Extent to which items measure same ability/trait
- Low? Several characteristics?
- Use KR20, coefficient alpha
- Considers all ways of splitting data

Difference Scores
- Same trait: reliability = 0
- Use z-score transformations
- Generally low

Observer Differences
- Estimate reliability of observers
- Interrater reliability
- Percentage agreement
- Kappa
  - Corrects for chance agreement
  - 1 (perfect agreement) to -1 (less than chance alone)
  - Interpreting: > .75 = "excellent"; .40 to .75 = "fair to good"; < .40 = "poor"

Interpreting Reliability
- General rule of thumb: above 0.70 to 0.80 is good
- Higher the stakes, higher the r
- Use confidence intervals (from standard error of estimate)

Low Reliability
- Increase items
- Spearman-Brown prophecy formula
- Factor item analysis
- Omit items that do not load onto one factor
- Drop items
- Correct for attenuation (low correlations)

Validity
- Agreement between a test score and what it is intended to measure
- Face validity: looks like it's valid
- Content validity
- Representative/fair sample of items
- Construct underrepresentation
- Construct-irrelevant variance

Criterion-Related Validity
- How well a test corresponds with a criterion
- Predictive validity
- Concurrent validity
- Validity coefficient
- Coefficient of determination

Evaluating Validity Coefficients
- Changes in cause of relationship
- Meaning of criterion
- Validity population
- Sample size
- Criterion vs predictor
- Restricted range
- Validity generalization
- Differential prediction

Construct-Related Validity
- Define a construct and develop its measure
- Main type of validity needed
- Convergent evidence
  - Correlates with other measures of construct
  - Meaning from associated variables
- Discriminant evidence
  - Low correlations with unrelated constructs
- Criterion-referenced tests
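
Worked sketch: regression line

The Regression and Line of Best Fit slides describe a line Y' = a + bX chosen by the principle of least squares. The Python sketch below shows one way to compute a and b and to check that the squared residuals are at a minimum. The data and the function names (`fit_line`, `predict`, `sum_squared_residuals`) are made up for illustration and do not come from the course.

```python
# Minimal sketch of the least-squares line Y' = a + bX.
# The scores below are invented for illustration only.

def fit_line(xs, ys):
    """Return (a, b) for the least-squares line Y' = a + bX."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope b: sum of cross-products divided by sum of squared X deviations.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b = sxy / sxx
    # Intercept a: predicted value of Y when X is 0.
    a = mean_y - b * mean_x
    return a, b

def predict(a, b, x):
    """Predicted score Y' for a given X."""
    return a + b * x

def sum_squared_residuals(xs, ys, a, b):
    """Sum of (Y - Y')^2, the quantity least squares minimizes."""
    return sum((y - predict(a, b, x)) ** 2 for x, y in zip(xs, ys))

xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]
a, b = fit_line(xs, ys)
ssr = sum_squared_residuals(xs, ys, a, b)
print(f"Y' = {a:.2f} + {b:.2f}X, sum of squared residuals = {ssr:.2f}")
```

Shifting the intercept or slope away from the fitted values makes the sum of squared residuals larger, which is what "deviations from Y' at a minimum" means in practice.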
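
Worked sketch: standard error of measurement and Spearman-Brown

The Reliability slides give the standard error of measurement as S times the square root of (1 - r), and the Internal Consistency slide corrects a split-half correlation with 2r / (1 + r). The Python sketch below works through both. The numbers are invented, and two choices are assumptions rather than anything stated in the slides: the interval is centred on the observed score, and z = 1.96 is used for a 95% interval. The `length_factor` parameter is a hypothetical name for the general prophecy formula, which reduces to 2r / (1 + r) when the test length is doubled.

```python
import math

def standard_error_of_measurement(sd, reliability):
    """S_m = S * sqrt(1 - r); larger values mean a less reliable score."""
    return sd * math.sqrt(1 - reliability)

def confidence_interval(score, sem, z=1.96):
    """Interval around an observed score (assumption: z = 1.96 for 95%)."""
    return score - z * sem, score + z * sem

def spearman_brown(r, length_factor=2):
    """Estimated reliability when the test is length_factor times as long.

    With length_factor=2 this is the split-half correction 2r / (1 + r).
    """
    return length_factor * r / (1 + (length_factor - 1) * r)

# Invented example: test SD of 15 and reliability of .91.
sem = standard_error_of_measurement(15, 0.91)
low, high = confidence_interval(100, sem)
print(f"SEM = {sem:.2f}, 95% interval = {low:.2f} to {high:.2f}")

# Invented example: the two halves of a test correlate .60.
print(f"Corrected split-half reliability = {spearman_brown(0.60):.2f}")
```

The split-half correlation understates reliability because each half is only half as long as the full test, which is why the correction raises .60 to .75 here.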
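
Worked sketch: percentage agreement and kappa

The Observer Differences slide lists percentage agreement and kappa, which corrects for chance agreement. The Python sketch below assumes the two-rater form usually called Cohen's kappa, (p_o - p_e) / (1 - p_e), where p_o is observed agreement and p_e is agreement expected by chance. The slide names only "Kappa", so the two-rater form is an assumption, and the ratings are invented.

```python
from collections import Counter

def percentage_agreement(rater_a, rater_b):
    """Proportion of cases on which the two raters gave the same category."""
    matches = sum(1 for a, b in zip(rater_a, rater_b) if a == b)
    return matches / len(rater_a)

def cohens_kappa(rater_a, rater_b):
    """Kappa for two raters: (p_o - p_e) / (1 - p_e).

    Undefined (division by zero) when chance agreement p_e equals 1,
    i.e. when both raters use a single identical category throughout.
    """
    n = len(rater_a)
    p_o = percentage_agreement(rater_a, rater_b)
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    categories = set(rater_a) | set(rater_b)
    # Chance agreement: product of each rater's marginal proportions.
    p_e = sum((counts_a[c] / n) * (counts_b[c] / n) for c in categories)
    return (p_o - p_e) / (1 - p_e)

# Invented ratings of ten cases by two observers.
rater_a = ["yes", "yes", "yes", "yes", "yes", "no", "no", "no", "no", "no"]
rater_b = ["yes", "yes", "yes", "yes", "no", "no", "no", "no", "no", "yes"]

agreement = percentage_agreement(rater_a, rater_b)
kappa = cohens_kappa(rater_a, rater_b)
print(f"Agreement = {agreement:.2f}, kappa = {kappa:.2f}")
```

In this invented example the raters agree on 80% of cases, but half of that agreement would be expected by chance, so kappa is .60, which falls in the "fair to good" band on the slide.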