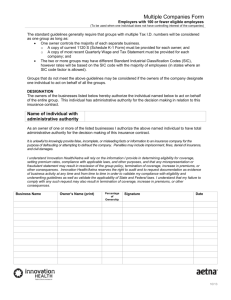

Simple View on Simple Interval Calculation (SIC)


Alexey Pomerantsev,

Oxana Rodionova

Institute of Chemical Physics, Moscow

and Kurt Varmuza

Vienna Technical University

© Kurt Varmuza

10.02.05

WSC-4


CAC, Lisbon, September 2004


Leisured Agenda

1. Why are errors limited?

2. Simple calculations, indeed! Univariate case

3. Complicated SIC. Bivariate case

4. Conclusions


Part 1. Why are errors limited?

Water in wheat. NIR spectra by Lumex Co

[Figure: NIR spectra of the wheat samples; horizontal axis from about 9058 to 10679, vertical axis from -2 to 2.]

Histogram for Y (water contents)

[Figure: histogram of Y for 141 samples; Y from 8 to 14 on the horizontal axis, counts from 0 to 40 on the vertical axis.]

Normal Probability Plot for Y

[Figure: normal probability plot of Y; Y from 8 to 14 on the horizontal axis, probability scale from 0.35 to 99.65 on the vertical axis; annotations: 38%, 21%, 3%.]

PLS Regression. Whole data set


PLS Regression. Marked “outliers”


PLS Regression. Revised data set


Histogram for Y. Revised data set

[Figure: histogram of Y for 124 samples; Y from 8 to 14 on the horizontal axis, counts from 0 to 40 on the vertical axis.]

Normal Probability Plot. Revised data set

[Figure: normal probability plot of Y for the revised data set; Y from 9 to 14 on the horizontal axis, probability scale from 0.40 to 99.60 on the vertical axis; annotations: 96%, 81%, 31%.]

Histogram for Y. Revised data set

[Figure: the histogram of Y for the revised data set, with the horizontal axis marked at m-3s, m-2s, m-s, m, m+s, m+2s and m+3s.]

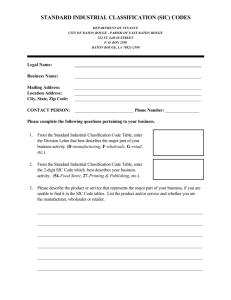

Error Distribution

[Figure: three panels with the horizontal axis in units of s and the limits -b and +b marked. Panel 1: normal distribution. Panel 2: truncated normal distribution, 3.5s. Panel 3: both distributions.]

Main SIC postulate

All errors are limited!

There exists a Maximum Error Deviation, b, such that for any error e

Prob{ |e| > b } = 0

[Figure: error distribution bounded by -b and +b.]
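The postulate can be illustrated with a small sketch that draws errors from a truncated normal distribution by rejection sampling. The function name, the seed and the sample size are hypothetical; the values b = 0.7 = 2.5s are borrowed from the univariate example later in the talk.

```python
import random

def sample_truncated_normal(sigma, b, rng):
    """Draw one error from a normal distribution truncated to [-b, +b]."""
    while True:
        e = rng.gauss(0.0, sigma)
        if abs(e) <= b:          # reject anything beyond the limit
            return e

rng = random.Random(0)           # fixed seed, chosen only for repeatability
b = 0.7                          # maximum error deviation
sigma = b / 2.5                  # so that b = 2.5 * sigma
errors = [sample_truncated_normal(sigma, b, rng) for _ in range(10_000)]

# By construction no error exceeds b, i.e. Prob{|e| > b} = 0.
max_abs_error = max(abs(e) for e in errors)
print(max_abs_error <= b)
```

Rejection sampling is used here only because it is short; any other way of generating bounded errors would serve the same purpose.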

Part 2. Simple calculations


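Case study. Simple Univariate Model

Data

| Set | Sample | x | y |
|---|---|---|---|
| Training | C1 | 1.0 | 1.28 |
| Training | C2 | 2.0 | 1.68 |
| Training | C3 | 4.0 | 4.25 |
| Training | C4 | 5.0 | 5.32 |
| Test | T1 | 3.0 | 3.35 |
| Test | T2 | 4.5 | 6.19 |
| Test | T3 | 5.5 | 5.40 |

Model: y = ax + e

[Figure: response y versus variable x for training samples C1 to C4 and test samples T1 to T3; inset: error distribution bounded by -b and +b.]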

OLS calibration

OLS calibration minimizes the sum of squared residuals.

[Figure: two panels. Sum of squares as a function of a, for a from 0.5 to 1.5, with the minimum marked at a = 1.044. Response y versus variable x with the fitted line and samples C1 to C4, T1 to T3.]
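As a minimal sketch of this step, the slope of the model y = ax can be computed in closed form from the four training samples. The variable and function names are illustrative. With the rounded table values the slope comes out near 1.049, close to the 1.044 marked on the slide; the small difference presumably reflects rounding of the tabulated data.

```python
# Training samples C1..C4 from the case-study table.
x = [1.0, 2.0, 4.0, 5.0]
y = [1.28, 1.68, 4.25, 5.32]

def sum_of_squares(a):
    """Sum of squared residuals for the model y = a*x (no intercept)."""
    return sum((yi - a * xi) ** 2 for xi, yi in zip(x, y))

# Setting the derivative of the sum of squares to zero gives
# a = sum(x*y) / sum(x*x).
a_ols = sum(xi * yi for xi, yi in zip(x, y)) / sum(xi * xi for xi in x)

print(round(a_ols, 3), round(sum_of_squares(a_ols), 4))
```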

Uncertainties in OLS

t3(P) is the quantile of Student's t-distribution for probability P with 3 degrees of freedom.

[Figure: response y versus variable x with the OLS line and its uncertainty limits; samples C1 to C4, T1 to T3.]

SIC calibration

|e| < b

The Maximum Error Deviation is known: b = 0.7 (= 2.5s)

[Figure: response y versus variable x; each training sample C1 to C4 is drawn with an error interval of width 2b.]

SIC calibration

| Training | x | y | amin | amax |
|---|---|---|---|---|
| C1 | 1.0 | 1.28 | 0.58 | 1.98 |
| C2 | 2.0 | 1.68 | 0.49 | 1.19 |
| C3 | 4.0 | 4.25 | 0.89 | 1.24 |
| C4 | 5.0 | 5.32 | 0.92 | 1.20 |

[Figure: response y versus variable x with training samples C1 to C4.]
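The amin and amax columns are consistent with dividing the ends of each error interval [y - b, y + b] by x. A short sketch under that reading (the function name is illustrative, and the formula assumes x > 0):

```python
b = 0.7  # maximum error deviation from the previous slide

training = {
    "C1": (1.0, 1.28),
    "C2": (2.0, 1.68),
    "C3": (4.0, 4.25),
    "C4": (5.0, 5.32),
}

def slope_bounds(x, y, b):
    """Slopes a for which |y - a*x| <= b, assuming x > 0."""
    return (y - b) / x, (y + b) / x

bounds = {name: slope_bounds(x, y, b) for name, (x, y) in training.items()}

for name, (a_lo, a_hi) in bounds.items():
    print(f"{name}: amin = {a_lo:.3f}, amax = {a_hi:.3f}")
```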

Region of Possible Values

The intervals [amin, amax] of the training samples C1 to C4 have a common part, the Region of Possible Values (RPV):

a min = 0.92, a max = 1.19

[Figure: the four intervals on the a axis with the RPV marked.]
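The RPV limits can be reproduced as the intersection of the per-sample slope intervals. This is a sketch for the univariate model with x > 0; the function name `rpv` is illustrative.

```python
def rpv(samples, b):
    """Intersection of the intervals [(y-b)/x, (y+b)/x]; None if it is empty."""
    a_min = max((y - b) / x for x, y in samples)   # largest lower bound
    a_max = min((y + b) / x for x, y in samples)   # smallest upper bound
    return (a_min, a_max) if a_min <= a_max else None

training = [(1.0, 1.28), (2.0, 1.68), (4.0, 4.25), (5.0, 5.32)]  # C1..C4
a_min, a_max = rpv(training, b=0.7)
print(round(a_min, 2), round(a_max, 2))
```

The lower limit comes from C4 and the upper limit from C2, which is why those two samples act as boundary objects.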

SIC prediction

| Test | x | y | v- | v+ |
|---|---|---|---|---|
| T1 | 3.0 | 3.35 | 2.77 | 3.57 |
| T2 | 4.5 | 6.19 | 4.16 | 5.36 |
| T3 | 5.5 | 5.40 | 5.08 | 6.55 |

[Figure: response y versus variable x with the prediction intervals [v-, v+] for test samples T1 to T3.]
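The tabulated v- and v+ agree with multiplying the RPV limits by x. A sketch under that reading, valid for x > 0 (names are illustrative):

```python
def rpv(samples, b):
    """RPV limits for the model y = a*x with |e| <= b, assuming x > 0."""
    return (max((y - b) / x for x, y in samples),
            min((y + b) / x for x, y in samples))

def predict_interval(x, a_min, a_max):
    """SIC prediction interval [v-, v+] for a new x > 0."""
    return a_min * x, a_max * x

training = [(1.0, 1.28), (2.0, 1.68), (4.0, 4.25), (5.0, 5.32)]
a_min, a_max = rpv(training, b=0.7)

test_x = {"T1": 3.0, "T2": 4.5, "T3": 5.5}
intervals = {name: predict_interval(x, a_min, a_max) for name, x in test_x.items()}

for name, (v_lo, v_hi) in intervals.items():
    print(f"{name}: v- = {v_lo:.3f}, v+ = {v_hi:.3f}")
```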

Object Status. Calibration Set

Samples C2 and C4 are the boundary objects. They form the RPV.

Samples C1 and C3 are insiders. They could be removed from the calibration set and the RPV would not change.

[Figure: response y versus variable x with training samples C1 to C4.]

Object Status. Test Set

Let's consider what happens when a new sample is added to the calibration set.

[Figure: response y versus variable x with boundary samples C2 and C4; the RPV on the a axis with a min = 0.92 and a max = 1.19.]

Object Status. Insider

If we add sample T1, the RPV doesn't change. This object is an insider.

The prediction interval lies inside the error interval.

[Figure: response y versus variable x with C2, C4 and T1; the RPV on the a axis with a min = 0.92 and a max = 1.19.]

Object Status. Outlier

If we add sample T2, the RPV disappears. This object is an outlier.

The prediction interval lies outside the error interval.

[Figure: response y versus variable x with C2, C4 and T2; the a axis with a min = 0.92 and a max = 1.19.]

Object Status. Outsider

If we add sample T3, the RPV becomes smaller. This object is an outsider.

The prediction interval overlaps the error interval.

[Figure: response y versus variable x with C2, C4 and T3; the RPV on the a axis with a min = 0.92 and a max = 1.11.]
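The three cases above (T1, T2, T3) can be sketched as a check of how the RPV reacts when a sample is added to the calibration set. The function names are illustrative, and the code assumes the univariate model y = ax with x > 0.

```python
def rpv(samples, b):
    """RPV limits, or None when the slope intervals have no common part."""
    a_min = max((y - b) / x for x, y in samples)
    a_max = min((y + b) / x for x, y in samples)
    return (a_min, a_max) if a_min <= a_max else None

def status_when_added(training, new_sample, b):
    """Classify a sample by the effect of adding it to the calibration set."""
    before = rpv(training, b)
    after = rpv(training + [new_sample], b)
    if after is None:
        return "outlier"      # RPV disappears
    if after == before:
        return "insider"      # RPV does not change
    return "outsider"         # RPV becomes smaller

training = [(1.0, 1.28), (2.0, 1.68), (4.0, 4.25), (5.0, 5.32)]
test = {"T1": (3.0, 3.35), "T2": (4.5, 6.19), "T3": (5.5, 5.40)}

statuses = {name: status_when_added(training, s, 0.7) for name, s in test.items()}
shrunk_rpv = rpv(training + [test["T3"]], 0.7)
print(statuses, shrunk_rpv)
```

Adding T3 lowers the upper limit to about 1.11 while the lower limit stays at 0.92, matching the values shown on the slide.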

SIC-Residual and SIC-Leverage

They characterize interactions between the prediction and error intervals.

Definition 1. The SIC-residual, r, is a characteristic of bias.

Definition 2. The SIC-leverage, h, is a normalized precision.

[Figure: the prediction interval [v-, v+] and the error interval [y-b, y+b] on one axis, with segments labeled bh and br.]
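One reading of the two definitions that is consistent with the diagram labels bh and br, and with the insider and outlier conditions stated in the talk, is given below. The sign convention for r is an assumption; the classification only uses |r|.

```latex
h(x) = \frac{v^{+}(x) - v^{-}(x)}{2b},
\qquad
r(x, y) = \frac{1}{b}\left( y - \frac{v^{+}(x) + v^{-}(x)}{2} \right)
```

Under this reading, b·h is the half-width of the prediction interval and b·|r| is the distance between y and the midpoint of that interval. The condition "prediction interval inside error interval", that is v- ≥ y - b and v+ ≤ y + b, then rearranges to |r| ≤ 1 - h.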

Object Status Plot

Using simple algebra one can prove the following statements.

Statement 1. An object (x, y) is an insider iff

$|r(x, y)| \le 1 - h(x)$

Presented by triangle BCD.

Statement 2. An object (x, y) is an outlier iff

$|r(x, y)| > 1 + h(x)$

Presented by lines AB and DE.

[Figure: object status plot of SIC-residual, r, versus SIC-leverage, h, with points A, B, C, D, E and samples C1 to C4, T1 to T3.]
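The two statements can be turned into a small classifier. This sketch assumes that h is the half-width of the prediction interval divided by b and that r is the offset of y from the interval midpoint divided by b; those formulas are an interpretation of the diagram labels, not a quotation from the slides. The RPV limits 0.924 and 1.19 are the unrounded values for the univariate example.

```python
def sic_residual_leverage(x, y, a_min, a_max, b):
    """Return (r, h) for the model y = a*x with x > 0 (assumed definitions)."""
    v_lo, v_hi = a_min * x, a_max * x
    h = (v_hi - v_lo) / (2 * b)            # normalized half-width of [v-, v+]
    r = (y - (v_hi + v_lo) / 2) / b        # normalized offset from the midpoint
    return r, h

def object_status(r, h):
    if abs(r) <= 1 - h:
        return "insider"                   # Statement 1
    if abs(r) > 1 + h:
        return "outlier"                   # Statement 2
    return "outsider"                      # everything in between

a_min, a_max, b = 0.924, 1.19, 0.7
samples = {"C1": (1.0, 1.28), "C3": (4.0, 4.25),
           "T1": (3.0, 3.35), "T2": (4.5, 6.19), "T3": (5.5, 5.40)}

status = {}
for name, (x, y) in samples.items():
    r, h = sic_residual_leverage(x, y, a_min, a_max, b)
    status[name] = object_status(r, h)
    print(f"{name}: r = {r:+.2f}, h = {h:.2f}, {status[name]}")
```

The boundary samples C2 and C4 are left out of the example because they sit on the edge |r| = 1 - h, where floating-point rounding decides the outcome.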

Object Status Classification

[Figure: object status plot divided into regions labeled insiders, outsiders, absolute outsiders and outliers.]

OLS Confidence versus SIC Prediction

The true response value, y, is always located within the SIC prediction interval. This has been confirmed by simulations repeated 100,000 times. Thus

Prob{ v- < y < v+ } = 1.00

Confidence intervals tend to infinity when P is increased.

Confidence intervals are unreasonably wide!

[Figure: response y versus variable x with OLS confidence intervals for P = 0.95, P = 0.99 and P = 0.999, and samples C1 to C4, T1 to T3.]
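A reduced version of such a simulation can be sketched as follows. The true slope, the uniform error distribution, the seed and the number of runs (2,000 rather than 100,000) are all assumptions made for illustration; only the design points x and the value b = 0.7 come from the example.

```python
import random

def rpv(samples, b):
    """RPV limits for y = a*x with |e| <= b, assuming x > 0."""
    return (max((y - b) / x for x, y in samples),
            min((y + b) / x for x, y in samples))

def coverage(n_runs, a_true, b, xs, x_new, rng):
    """Fraction of runs in which the true response lies inside [v-, v+]."""
    hits = 0
    for _ in range(n_runs):
        # Simulate a calibration set with bounded errors.
        samples = [(x, a_true * x + rng.uniform(-b, b)) for x in xs]
        a_min, a_max = rpv(samples, b)
        y_true = a_true * x_new
        if a_min * x_new <= y_true <= a_max * x_new:
            hits += 1
    return hits / n_runs

rng = random.Random(1)
result = coverage(n_runs=2000, a_true=1.0, b=0.7,
                  xs=[1.0, 2.0, 4.0, 5.0], x_new=3.0, rng=rng)
print(result)
```

The outcome does not depend on the error distribution as long as every error stays within ±b, because each calibration sample then keeps the true slope inside its own interval.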

Beta Estimation. Minimum b

[Figure: the intervals of samples C1 to C4 on the a axis, drawn for b = 0.7, 0.6, 0.5, 0.4 and 0.3, with the RPV marked; the RPV shrinks as b decreases.]

b > bmin = 0.3
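For the univariate example, the smallest b that still leaves a non-empty RPV can be computed directly. The sketch below uses the fact that the RPV is non-empty when every lower bound (y_i - b)/x_i stays below every upper bound (y_j + b)/x_j, which rearranges to b ≥ (y_i x_j - y_j x_i)/(x_i + x_j) for x > 0. The function name is illustrative. The result is 0.32, in line with the rounded value 0.3 on the slide.

```python
from itertools import permutations

def b_min(samples):
    """Smallest b for which the slope intervals still intersect (x > 0)."""
    return max((yi * xj - yj * xi) / (xi + xj)
               for (xi, yi), (xj, yj) in permutations(samples, 2))

training = [(1.0, 1.28), (2.0, 1.68), (4.0, 4.25), (5.0, 5.32)]  # C1..C4
b_minimum = b_min(training)
print(round(b_minimum, 2))
```

The binding pair is C4 and C2, the same two samples that form the RPV at b = 0.7.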

Beta Estimation from Regression Residuals

e = ymeasured - ypredicted

bOLS = max {|e1|, |e2|, ... , |en|}

bOLS = 0.4

bSIC = bOLS C(n)

bSIC = 0.8

Prob{ b < bSIC } = 0.90
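The first estimate can be sketched for the univariate example by fitting y = ax with OLS and taking the largest absolute residual. The names are illustrative. The factor C(n) is not specified on the slide, so it is not computed here; the slide values 0.4 and 0.8 imply a factor of about 2 for n = 4.

```python
x = [1.0, 2.0, 4.0, 5.0]          # training samples C1..C4
y = [1.28, 1.68, 4.25, 5.32]

# OLS slope for the no-intercept model y = a*x.
a_ols = sum(xi * yi for xi, yi in zip(x, y)) / sum(xi * xi for xi in x)

# Regression residuals e = y_measured - y_predicted.
residuals = [yi - a_ols * xi for xi, yi in zip(x, y)]

b_ols = max(abs(e) for e in residuals)
print(round(b_ols, 2))
```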

1-2-3-4 Sigma Rule

| Rule | Quantity | Value |
|---|---|---|
| 1s | RMSEC | 0.2 = 1s |
| 2s | bmin | 0.3 = 1.5s |
| 3s | bOLS | 0.4 = 2s |
| 4s | bSIC | 0.8 = 4s |

Part 3. Complicated SIC. Bivariate case


Octane Rating Example (by K. Esbensen)

Training set = 24 samples

Test set = 13 samples

X-values are NIR measurements over 226 wavelengths.

Y-values are reference measurements of octane number.

[Figure: NIR spectra of the test set and of the training set; horizontal axis from 1100 to 1500, vertical axis from 0 to 0.6.]

Calibration

[Figure: four panels of PLS output (RESULT4).

Scores, PC1 versus PC2. X-expl: 85%, 12%; Y-expl: 85%, 13%.

T Scores versus U Scores for PC 2 (X-expl 12%, Y-expl 13%). Elements: 37; Slope: 9.643866; Offset: 0.006391; Correlation: 0.991227.

Root Mean Square Error versus number of PCs (PC_01 to PC_04). Variable: c.octane, v.octane.

Predicted Y versus Measured Y for octane with 2 PCs, axes from 86 to 94. Slope: 0.981975 and 0.919002; Offset: 1.608816 and 7.082160; Corr.: 0.990947 and 0.972058.]

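PLS Decomposition

$X b = y$, where X is n × p, b is p × 1 and y is n × 1.

PLS with 2 PCs:

$T a = y - y_0 \mathbf{1}$, where T is n × 2, a is 2 × 1 and $\mathbf{1}$ is the n × 1 vector of ones.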

1-2-3-4 Sigma Rule for Octane Example

| Quantity | Value |
|---|---|
| RMSEC | 0.27 = 1s |
| bmin | 0.48 = 1.8s |
| bOLS | 0.58 = 2.2s |
| bSIC | 0.88 = 3.3s |

b = bSIC = 0.88

RPV in Two-Dimensional Case

$$
\begin{aligned}
y_1 - y_0 - b &\le t_{11} a_1 + t_{12} a_2 \le y_1 - y_0 + b \\
y_2 - y_0 - b &\le t_{21} a_1 + t_{22} a_2 \le y_2 - y_0 + b \\
&\;\;\vdots \\
y_n - y_0 - b &\le t_{n1} a_1 + t_{n2} a_2 \le y_n - y_0 + b
\end{aligned}
$$

We have a system of 2n = 48 inequalities in the two parameters a1 and a2.

Region of Possible Values

[Figure: the RPV in the (a1, a2) plane; both axes from 0 to 40.]

Close view on RPV. Calibration Set

[Figure: two panels. RPV in parameter space: the RPV polygon in the (a1, a2) plane, with its bounding lines labeled by sample number. Object Status Plot: SIC-residual versus SIC-leverage for the calibration samples.]

Boundary Samples: C7, C9, C13, C14, C18, C23

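SIC Prediction with Linear Programming

| Vertex # | a1 | a2 | t^t a | y |
|---|---|---|---|---|
| 1 | 13.91 | 16.36 | -0.40 | 88.86 |
| 2 | 14.22 | 18.36 | -0.35 | 88.90 |
| 3 | 16.79 | 26.66 | -0.24 | 89.01 |
| 4 | 19.91 | 26.61 | -0.46 | 88.79 |
| 5 | 20.41 | 13.16 | -0.96 | 88.30 |
| 6 | 17.44 | 13.52 | -0.74 | 88.52 |

[Figure: the RPV polygon with its six vertices; v+ and v- are marked, and the task is stated as a Linear Programming Problem.]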
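Since a linear function over a bounded polygon attains its extremes at vertices, v- and v+ for two parameters can be sketched by enumerating the vertices of the RPV. The code below is a brute-force illustration, not the authors' solver: the scores `T`, the true parameters, the new score vector `t_new` and the seed are made-up data, and only b = 0.88 and the vertex table at the end come from the slides. Reading v- and v+ as the smallest and largest y in that table is an interpretation.

```python
import random
from itertools import combinations

def rpv_vertices(T, y, y0, b, tol=1e-9):
    """Vertices of {a : |y_i - y0 - (t_i1*a1 + t_i2*a2)| <= b for all i}."""
    rows, rhs = [], []
    for (t1, t2), yi in zip(T, y):
        rows.append((t1, t2));   rhs.append(yi - y0 + b)     # t.a <= y - y0 + b
        rows.append((-t1, -t2)); rhs.append(-(yi - y0 - b))  # t.a >= y - y0 - b
    vertices = []
    for i, j in combinations(range(len(rows)), 2):
        (p, q), (r, s) = rows[i], rows[j]
        det = p * s - q * r
        if abs(det) < 1e-12:
            continue                                  # parallel boundary lines
        a1 = (rhs[i] * s - q * rhs[j]) / det          # Cramer's rule
        a2 = (p * rhs[j] - r * rhs[i]) / det
        if all(u * a1 + v * a2 <= c + tol for (u, v), c in zip(rows, rhs)):
            vertices.append((a1, a2))                 # keep feasible corners
    return vertices

def sic_interval(t_new, vertices, y0):
    """v- and v+ as the extremes of the linear prediction over the vertices."""
    values = [t_new[0] * a1 + t_new[1] * a2 + y0 for a1, a2 in vertices]
    return min(values), max(values)

# Hypothetical data: 8 samples, two scores each, bounded errors.
rng = random.Random(0)
a_true, y0, b = (17.0, 20.0), 89.25, 0.88
T = [(rng.uniform(-0.2, 0.2), rng.uniform(-0.2, 0.2)) for _ in range(8)]
y = [y0 + t1 * a_true[0] + t2 * a_true[1] + rng.uniform(-b, b) for t1, t2 in T]

vertices = rpv_vertices(T, y, y0, b)
t_new = (0.05, -0.03)
v_minus, v_plus = sic_interval(t_new, vertices, y0)
y_true_new = y0 + t_new[0] * a_true[0] + t_new[1] * a_true[1]

# The same min/max step applied to the y column of the slide's vertex table.
slide_y = [88.86, 88.90, 89.01, 88.79, 88.30, 88.52]
slide_v_minus, slide_v_plus = min(slide_y), max(slide_y)
print(v_minus, v_plus, slide_v_minus, slide_v_plus)
```

Enumerating all pairs of boundary lines costs O(n²) intersections with an O(n) feasibility check each, which is fine for 48 inequalities; a general linear programming solver would be the natural choice for more parameters.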

Octane Prediction. Test Set

[Figure: two panels. Prediction intervals, SIC & PLS: octane number (86 to 94) for test samples 1 to 13, showing reference values, PLS 2RMSEP and SIC prediction. Object Status Plot: SIC-residual versus SIC-leverage for test samples 1 to 13.]

Conclusions

• Real errors are limited. The truncated normal distribution is a much more realistic model for practical applications than an unlimited error distribution.

• Postulating that all errors are limited, we can derive a new concept of data modeling, the SIC method. It is based on this single assumption and nothing else.

• The SIC approach gives a new view on old chemometrics problems such as outliers and influential samples. I think that this view is interesting and helpful.

OLS versus SIC

[Figure: four panels for samples C1 to C4 and T1 to T3. SIC-Residuals vs. OLS-Residuals. SIC-Leverages vs. OLS-Leverages. SIC Object Status Plot: SIC-residual versus SIC-leverage. OLS/PLS Influence Plot: OLS-variance versus OLS-leverage.]

Statistical view on OLS & SIC

Let's take a sample {x1, ..., xn} from a distribution with finite support [-1, +1]. The mean value a is unknown!

[Figure: the support from -1 to +1 around the unknown a, with the OLS and SIC statistics; below, the deviation of the OLS and SIC estimates (vertical axis from -0.3 to 0.3, horizontal axis from 1 to 100) for a 2.5s truncated normal distribution, n = 100.]
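A hedged sketch of this comparison: the sample is generated as x = a + e with e drawn from a 2.5s truncated normal on [-1, +1], the OLS estimate is taken as the sample mean, and the SIC result is taken as the interval of all a compatible with every observation, with its midpoint as a point estimate. The formulas for the SIC statistics are an assumption, as are the seed and the value of a used to generate the data.

```python
import random

def truncated_normal(sigma, bound, rng):
    """One draw from a normal distribution truncated to [-bound, +bound]."""
    while True:
        e = rng.gauss(0.0, sigma)
        if abs(e) <= bound:
            return e

rng = random.Random(42)
a_true = 0.0                 # hypothetical value, used only to generate data
bound = 1.0                  # half-width of the finite support
sigma = bound / 2.5          # 2.5s truncation
n = 100

xs = [a_true + truncated_normal(sigma, bound, rng) for _ in range(n)]

# OLS estimate of the mean: the sample average.
a_ols = sum(xs) / n

# SIC: every x_i requires |x_i - a| <= bound, so a lies in this interval.
sic_low, sic_high = max(xs) - bound, min(xs) + bound
a_sic = (sic_low + sic_high) / 2

print(f"OLS deviation: {a_ols - a_true:+.3f}")
print(f"SIC interval: [{sic_low:.3f}, {sic_high:.3f}], midpoint deviation: {a_sic - a_true:+.3f}")
```

The SIC interval always contains the value used to generate the data, while the OLS estimate carries no such guarantee and is judged by its variance instead.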