Bucket - University of South Carolina

CSCE 582

Computation of the Most Probable Explanation in Bayesian Networks using Bucket Elimination

-Hareesh Lingareddy

University of South Carolina

Bucket Elimination

- Algorithmic framework that generalizes dynamic programming to accommodate algorithms for many complex problem-solving and reasoning activities.

- Uses “buckets” to mimic the algebraic manipulations involved in each of these problems, resulting in an easily expressible algorithmic formulation.

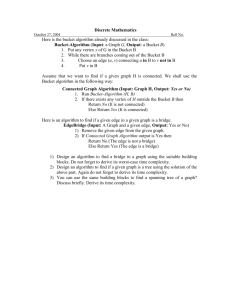

Bucket Elimination Algorithm

- Partition the functions on the graph into “buckets”, working backwards relative to the given node order

- The bucket of variable X receives all functions that mention X but do not mention any variable having a higher index

- Process the buckets backwards relative to the node order

- The function computed by eliminating a variable is placed in the bucket of the ‘highest’ variable in its scope
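The partitioning rule above can be sketched in a few lines of Python. This is only an illustrative sketch: the function name `partition_into_buckets`, the representation of a function by its scope (a tuple of variable names), and the three-variable chain A -> B -> C are assumptions made for the example and do not come from the slides.

```python
def partition_into_buckets(scopes, order):
    """Place each function in the bucket of the highest-indexed variable in its scope."""
    position = {var: i for i, var in enumerate(order)}
    buckets = {var: [] for var in order}
    for scope in scopes:
        # The variable of the scope that comes latest in the node order owns the function.
        highest = max(scope, key=position.get)
        buckets[highest].append(scope)
    return buckets

# Hypothetical chain A -> B -> C with functions P(A), P(B|A), P(C|B).
scopes = [("A",), ("B", "A"), ("C", "B")]
buckets = partition_into_buckets(scopes, order=["A", "B", "C"])
```

With the order A, B, C, each conditional table lands in the bucket of its child variable, because the child always has the higher index in this chain.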

Algorithms using Bucket Elimination

- Belief Assessment

- Most Probable Explanation (MPE)

- Maximum A Posteriori Hypothesis (MAP)

- Maximum Expected Utility (MEU)

Belief Assessment

• Definition

– Given a set of evidence, compute the posterior probability of all the variables

– The belief assessment task of $X_k = x_k$ is to find

$$bel(x_k) = P(X_k = x_k \mid e) = k \sum_{X - \{x_k\}} \prod_{i=1}^{n} P(x_i \mid pa(x_i), e)$$

where the leading factor $k$ is a normalizing constant

• In the Visit to Asia example, the belief assessment problem answers questions like

– What is the probability that a person has tuberculosis, given that he/she has dyspnoea and has visited Asia recently?

Belief Assessment Overview

• In reverse node ordering:

– Create the bucket function by multiplying all functions (given as tables) containing the current node

– Perform variable elimination by summing over the current node

– Place the newly created function table into the appropriate bucket
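The three steps above can be sketched as follows. This is a minimal sketch under stated assumptions, not the implementation used in the course: a function is represented as a pair `(scope, table)` with the table keyed by value tuples in scope order, the query variable is placed first in the node order, evidence is handled by restricting a variable's domain to its observed value, and the chain A -> B -> C with its numbers is invented for illustration.

```python
from itertools import product

def eliminate(functions, var, domains, reduce_fn):
    """Multiply all functions in a bucket, then eliminate `var` with `reduce_fn`."""
    scope = tuple(sorted({v for s, _ in functions for v in s} - {var}))
    table = {}
    for values in product(*(domains[v] for v in scope)):
        env = dict(zip(scope, values))
        terms = []
        for x in domains[var]:
            env[var] = x
            p = 1.0
            for s, t in functions:
                p *= t[tuple(env[v] for v in s)]
            terms.append(p)
        table[values] = reduce_fn(terms)
    return scope, table

def belief(functions, order, domains, evidence):
    """Posterior of order[0] given the evidence, by bucket elimination with summation."""
    domains = {v: [evidence[v]] if v in evidence else list(d) for v, d in domains.items()}
    pos = {v: i for i, v in enumerate(order)}
    buckets = {v: [] for v in order}
    for f in functions:
        buckets[max(f[0], key=pos.get)].append(f)
    for var in reversed(order[1:]):          # process buckets in reverse node order
        if buckets[var]:
            scope, table = eliminate(buckets[var], var, domains, sum)
            target = max(scope, key=pos.get) if scope else order[0]
            buckets[target].append((scope, table))
    query, unnorm = order[0], {}
    for x in domains[query]:
        p = 1.0
        for s, t in buckets[query]:
            p *= t[(x,) if s else ()]
        unnorm[x] = p
    z = sum(unnorm.values())                 # normalizing constant
    return {x: p / z for x, p in unnorm.items()}

# Hypothetical chain A -> B -> C; table keys follow the scope order.
p_a = (("A",), {(0,): 0.6, (1,): 0.4})
p_b = (("B", "A"), {(0, 0): 0.9, (1, 0): 0.1, (0, 1): 0.5, (1, 1): 0.5})
p_c = (("C", "B"), {(0, 0): 0.8, (1, 0): 0.2, (0, 1): 0.1, (1, 1): 0.9})
domains = {"A": [0, 1], "B": [0, 1], "C": [0, 1]}
posterior = belief([p_a, p_b, p_c], ["A", "B", "C"], domains, {"C": 1})
```

For these invented numbers the posterior probability of A = 1 given C = 1 comes out at about 0.576, which matches summing the joint distribution directly.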

Most Probable Explanation (MPE)

• Definition

– Given evidence, find the maximum probability assignment to the remaining variables

– The MPE task is to find an assignment $x^o = (x^o_1, \ldots, x^o_n)$ such that

$$P(x^o) = \max_{X} \prod_{i=1}^{n} P(x_i \mid pa(x_i), e)$$

Differences from Belief Assessment

– Replace sums with max

– Keep track of the maximizing value at each stage

– “Forward step” to determine the maximizing assignment tuple

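Elimination Algorithm for Most Probable Explanation

Variables of the Visit to Asia network: $\alpha$ (visit to Asia), $\tau$ (tuberculosis), $\sigma$ (smoking), $\lambda$ (lung cancer), $\beta$ (bronchitis), $\varepsilon$ (tuberculosis or lung cancer), $\xi$ (positive X-ray), $\delta$ (dyspnoea)

Finding MPE $= \max_{\alpha,\tau,\sigma,\lambda,\beta,\varepsilon,\xi,\delta} P(\alpha,\tau,\sigma,\lambda,\beta,\varepsilon,\xi,\delta)$

MPE $= \max_{\alpha,\tau,\sigma,\lambda,\beta,\varepsilon,\xi,\delta} \big( P(\tau \mid \alpha) \cdot P(\xi \mid \varepsilon) \cdot P(\varepsilon \mid \tau,\lambda) \cdot P(\delta \mid \varepsilon,\beta) \cdot P(\alpha) \cdot P(\lambda \mid \sigma) \cdot P(\beta \mid \sigma) \cdot P(\sigma) \big)$

Each bucket produces the function $H_n(u) = \max_{x_n} \big( \prod_{x_n \in F_n} C(x_n \mid x_{pa}) \big)$

Bucket $\alpha$: $P(\tau \mid \alpha) \cdot P(\alpha)$

Bucket $\xi$: $P(\xi \mid \varepsilon)$

Bucket $\delta$: $P(\delta \mid \varepsilon,\beta)$, $\delta$ = “no”

Bucket $\varepsilon$: $P(\varepsilon \mid \tau,\lambda)$, $H_\delta(\varepsilon,\beta)$, $H_\xi(\varepsilon)$

Bucket $\lambda$: $P(\lambda \mid \sigma)$, $H_\varepsilon(\tau,\lambda,\beta)$

Bucket $\sigma$: $P(\beta \mid \sigma) \cdot P(\sigma)$, $H_\lambda(\tau,\beta,\sigma)$

Bucket $\beta$: $H_\sigma(\tau,\beta)$

Bucket $\tau$: $H_\alpha(\tau)$, $H_\beta(\tau)$

MPE probability: $\max_{\tau} H_\alpha(\tau) \cdot H_\beta(\tau)$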

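Elimination Algorithm for Most Probable Explanation

Forward part

Bucket $\alpha$: $P(\tau \mid \alpha) \cdot P(\alpha)$

$\alpha' = \arg\max_{\alpha} P(\tau' \mid \alpha) \cdot P(\alpha)$

Bucket $\xi$: $P(\xi \mid \varepsilon)$

$\xi' = \arg\max_{\xi} P(\xi \mid \varepsilon')$

Bucket $\delta$: $P(\delta \mid \varepsilon,\beta)$, $\delta$ = “no”

$\delta'$ = “no”

Bucket $\varepsilon$: $P(\varepsilon \mid \tau,\lambda)$, $H_\delta(\varepsilon,\beta)$, $H_\xi(\varepsilon)$

$\varepsilon' = \arg\max_{\varepsilon} P(\varepsilon \mid \tau',\lambda') \cdot H_\delta(\varepsilon,\beta') \cdot H_\xi(\varepsilon)$

Bucket $\lambda$: $P(\lambda \mid \sigma)$, $H_\varepsilon(\tau,\lambda,\beta)$

$\lambda' = \arg\max_{\lambda} P(\lambda \mid \sigma') \cdot H_\varepsilon(\tau',\lambda,\beta')$

Bucket $\sigma$: $P(\beta \mid \sigma) \cdot P(\sigma)$, $H_\lambda(\tau,\beta,\sigma)$

$\sigma' = \arg\max_{\sigma} P(\beta' \mid \sigma) \cdot P(\sigma) \cdot H_\lambda(\tau',\beta',\sigma)$

Bucket $\beta$: $H_\sigma(\tau,\beta)$

$\beta' = \arg\max_{\beta} H_\sigma(\tau',\beta)$

Bucket $\tau$: $H_\alpha(\tau)$, $H_\beta(\tau)$

$\tau' = \arg\max_{\tau} H_\alpha(\tau) \cdot H_\beta(\tau)$

Return: $(\alpha', \tau', \sigma', \lambda', \beta', \varepsilon', \xi', \delta')$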

MPE Overview

• In reverse node ordering

– Create the bucket function by multiplying all functions (given as tables) containing the current node

– Perform variable elimination using the maximization operation over the current node (recording the maximizing state function)

– Place the newly created function table into the appropriate bucket

• In forward node ordering

– Determine the maximum-probability assignment using the maximizing state functions
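The backward and forward passes summarized above can be sketched as follows. This is a hedged sketch rather than the course's implementation: functions are `(scope, table)` pairs keyed by value tuples in scope order, evidence restricts a variable's domain to the observed value, and the chain A -> B -> C with its numbers is hypothetical. Instead of storing a maximizing state function per bucket, the forward pass recomputes the arg max from the functions left in each bucket, which yields the same assignment.

```python
from itertools import product

def mpe(functions, order, domains, evidence=None):
    """Return (MPE probability, maximizing assignment) by bucket elimination with max."""
    evidence = evidence or {}
    domains = {v: [evidence[v]] if v in evidence else list(d) for v, d in domains.items()}
    pos = {v: i for i, v in enumerate(order)}
    buckets = {v: [] for v in order}
    for f in functions:
        buckets[max(f[0], key=pos.get)].append(f)

    def score(var, x, env):
        """Product of all functions in var's bucket with var = x and the rest from env."""
        env = {**env, var: x}
        p = 1.0
        for s, t in buckets[var]:
            p *= t[tuple(env[v] for v in s)]
        return p

    mpe_prob = 1.0
    for var in reversed(order):              # backward pass: maximize instead of summing
        scope = tuple(sorted({v for s, _ in buckets[var] for v in s} - {var}))
        table = {}
        for values in product(*(domains[v] for v in scope)):
            env = dict(zip(scope, values))
            table[values] = max(score(var, x, env) for x in domains[var])
        if scope:
            buckets[max(scope, key=pos.get)].append((scope, table))
        else:
            mpe_prob *= table[()]            # a constant contributes to the MPE value

    assignment = {}
    for var in order:                        # forward pass: pick the maximizing values
        assignment[var] = max(domains[var], key=lambda x: score(var, x, assignment))
    return mpe_prob, assignment

# Hypothetical chain A -> B -> C; table keys follow the scope order.
p_a = (("A",), {(0,): 0.6, (1,): 0.4})
p_b = (("B", "A"), {(0, 0): 0.9, (1, 0): 0.1, (0, 1): 0.5, (1, 1): 0.5})
p_c = (("C", "B"), {(0, 0): 0.8, (1, 0): 0.2, (0, 1): 0.1, (1, 1): 0.9})
domains = {"A": [0, 1], "B": [0, 1], "C": [0, 1]}
mpe_prob, assignment = mpe([p_a, p_b, p_c], ["A", "B", "C"], domains, {"C": 1})
```

With the evidence C = 1, the most probable completion for these invented numbers is A = 1, B = 1, with joint probability of about 0.18.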

Maximum A Posteriori Hypothesis (MAP)

• Definition

– Given evidence, find an assignment to a subset of “hypothesis” variables that maximizes their probability

– Given a set of hypothesis variables $A = \{A_1, \ldots, A_k\}$, $A \subseteq X$, the MAP task is to find an assignment $a^o = (a^o_1, \ldots, a^o_k)$ such that

$$P(a^o) = \max_{A} \sum_{X - A} \prod_{i=1}^{n} P(x_i \mid pa(x_i), e)$$
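To make the MAP definition concrete, the sketch below evaluates it by direct enumeration: for each assignment to the hypothesis variables it sums the product of all tables over the remaining unobserved variables and keeps the maximum. This is brute-force enumeration, not bucket elimination, and it is only practical for very small networks. The function name `map_by_enumeration`, the `(scope, table)` representation, and the chain A -> B -> C with its numbers are assumptions made for illustration.

```python
from itertools import product

def map_by_enumeration(functions, domains, hypothesis, evidence):
    """Return (best hypothesis assignment, its joint probability with the evidence)."""
    hidden = [v for v in domains if v not in hypothesis and v not in evidence]
    best, best_p = None, -1.0
    for a in product(*(domains[v] for v in hypothesis)):      # max over A
        total = 0.0
        for h in product(*(domains[v] for v in hidden)):      # sum over X - A
            env = {**evidence, **dict(zip(hypothesis, a)), **dict(zip(hidden, h))}
            p = 1.0
            for scope, table in functions:                    # product of all tables
                p *= table[tuple(env[v] for v in scope)]
            total += p
        if total > best_p:
            best, best_p = dict(zip(hypothesis, a)), total
    return best, best_p

# Hypothetical chain A -> B -> C; table keys follow the scope order.
p_a = (("A",), {(0,): 0.6, (1,): 0.4})
p_b = (("B", "A"), {(0, 0): 0.9, (1, 0): 0.1, (0, 1): 0.5, (1, 1): 0.5})
p_c = (("C", "B"), {(0, 0): 0.8, (1, 0): 0.2, (0, 1): 0.1, (1, 1): 0.9})
domains = {"A": [0, 1], "B": [0, 1], "C": [0, 1]}
best, best_p = map_by_enumeration([p_a, p_b, p_c], domains, ["A"], {"C": 1})
```

Here the single hypothesis variable is A and B is summed out. For these invented numbers the maximizing value is A = 1, with an unnormalized probability of about 0.22.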