IL Best Practices - University Libraries


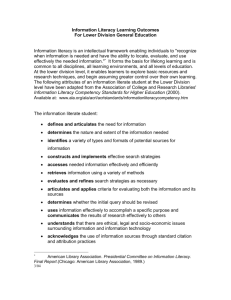

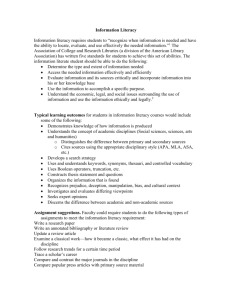

IL Best Practices: Innovative Approaches to Fit Your Institutional Needs

Sheril Hook, Instruction Coordinator, University of Toronto Mississauga
Stephanie Sterling Brasley, Manager, Information Literacy Initiatives, California State University, Office of the Chancellor

Participants will be able to:
• Articulate the major ideas from Categories 5 and 10 of the Best Practices Guidelines
• Identify assessment tools for information literacy development
• Identify basic strategies for applying Categories 5 and 10 of the Best Practices Guidelines to their own instructional and institutional environments

Agenda
• Category 5: articulation with the curriculum
  • Playing with the IL Standards
  • IL by design: embedding IL into course outcomes
• Category 10: assessment and evaluation
  • Instruction statistics
  • Horizontal and vertical integration

ALA/ACRL Characteristics of Programs of Information Literacy that Illustrate Best Practices
Category 5: Articulation with the Curriculum
Articulation with the curriculum for an information literacy program:
• is formalized and widely disseminated;
• emphasizes student-centered learning;
• uses local governance structures to ensure institution-wide integration into academic or vocational programs;
• identifies the scope (i.e., depth and complexity) of competencies to be acquired on a disciplinary level as well as at the course level;
• sequences and integrates competencies throughout a student’s academic career, progressing in sophistication; and
• specifies programs and courses charged with implementation.
http://www.ala.org/ala/acrl/acrlstandards/characteristics.htm

IL Standards
Standard One: The information literate student determines the nature and extent of the information needed.
Performance Indicator 2: The information literate student identifies a variety of types and formats of potential sources for information.
Outcomes include:
• Knows how information is formally and informally produced, organized, and disseminated
• Recognizes that knowledge can be organized into disciplines that influence the way information is accessed
• Identifies the value and differences of potential resources in a variety of formats (e.g., multimedia, database, website, data set, audio/visual, book)
• Differentiates between primary and secondary sources, recognizing how their use and importance vary with each discipline
• Realizes that information may need to be constructed with raw data from primary sources
"Information Literacy Competency Standards for Higher Education." American Library Association. 2006. http://www.ala.org/acrl/ilcomstan.html (Accessed 15 May, 2007)

Let’s play with the standards
IL Standards
Standard One: The information literate student determines the nature and extent of the information needed.
Performance Indicator 2: The information literate student _________ a variety of types and formats of potential sources for information.
Outcomes include:
• ________ information is formally and informally produced, organized, and disseminated
• _________ knowledge can be organized into disciplines that influence the way information is accessed
• __________ the value and differences of potential resources in a variety of formats (e.g., multimedia, database, website, data set, audio/visual, book)
• __________ between primary and secondary sources, recognizing how their use and importance vary with each discipline
• __________ that information may need to be constructed with raw data from primary sources
"Information Literacy Competency Standards for Higher Education." American Library Association. 2006. http://www.ala.org/acrl/ilcomstan.html (Accessed 15 May, 2007)

Student Engagement: IL depth & complexity
• research-based learning
• problem-based learning
• inquiry-based learning
• case-based learning
• discovery learning
• knowledge building
Scardamalia, M., & Bereiter, C. (2003).
Research Question (inquiry-based)
How have myths changed over time?

Humanities 2.

Assignment: Myth over Time
Outcomes:
• Explore the dynamism of myth by comparing and contrasting a selection of ancient and modern primary sources of a myth (at least one literary, one material)
• Identify the most significant changes from ancient to modern source and discuss those changes in light of the context in which each source was created
• Interpret those changes in terms of how they affect the meaning of the myth and how they came about in the first place

Humanities 1.
Outcomes:
• compare and contrast a selection of primary sources (art)
• Students begin by finding primary sources (art works, music, scripts, opera) and background information on artists
Google has images, but no provenance information.
CAMIO has images, plus provenance and usage rights information.

Humanities 3.
Outcomes:
• identify the most significant changes...in light of the context in which each source was created.
Students build on the learning acquired by finding background information on a time period/place.

Humanities 4.
Outcomes:
• identify the most significant changes...in light of the context in which each source was created.
Students place a myth in the cultural context in which it’s being used or retold.

Humanities 5.
Outcomes:
• compare and contrast a selection of primary sources (music)
Students listen to a symphony to identify the dynamism of the myth and interpret its significance.

Humanities 6.
ALA/ACRL Characteristics of Programs of Information Literacy that Illustrate Best Practices
Category 10: Assessment/Evaluation
Assessment/evaluation of information literacy includes program performance and student outcomes and:
for program evaluation:
• establishes the process of ongoing planning/improvement of the program;
• measures directly progress toward meeting the goals and objectives of the program;
• integrates with course and curriculum assessment as well as institutional evaluations and regional/professional accreditation initiatives; and
• assumes multiple methods and purposes for assessment/evaluation: formative and summative, short term and longitudinal;
http://www.ala.org/ala/acrl/acrlstandards/characteristics.htm

ALA/ACRL Characteristics of Programs of Information Literacy that Illustrate Best Practices
Category 10: Assessment/Evaluation (cont’d)
Assessment/evaluation of information literacy includes program performance and student outcomes and:
for student outcomes:
• acknowledges differences in learning and teaching styles by using a variety of appropriate outcome measures, such as portfolio assessment, oral defense, quizzes, essays, direct observation, anecdotal, peer and self review, and experience;
• focuses on student performance, knowledge acquisition, and attitude appraisal;
• assesses both process and product;
• includes student-, peer-, and self-evaluation;
http://www.ala.org/ala/acrl/acrlstandards/characteristics.htm

Assessment
Assessment is the process of gathering and discussing information for multiple and diverse purposes in order to develop a deep understanding of what students know, understand, and can do with their knowledge as a result of their educational experiences; the process culminates when assessment results are used to improve subsequent learning.
Mary E. Huba and Jann E. Freed. Learner-Centered Assessment on College Campuses: Shifting the Focus from Teaching to Learning. Allyn & Bacon, 2000.
Evaluation
Evaluation is “any effort to use assessment evidence to improve institutional, departmental, divisional, or institutional effectiveness”

Program Evaluation Components

An Assessment/Evaluation Program Plan
• What are we assessing?
• What are our IL instruction goals?
• What are the measurable outcomes?
• How will we measure at the course/curricular level?
• Who are our campus partners?
• How can we tie into institutional efforts?
• What data do we need to collect?

Program Evaluation: Snapshot of Course Penetration
100/200-level course penetration
• Where: core courses, instructor status
• What: Standards 1-5
• How: concept-based or tool-based

Instruction Database

Instruction Statistics
Stats tell a story:
• Redundancy
• Gaps
• Planning
Stats identify approaches.
[Fig. 5: Number of unique instruction sessions given by type]

Class Snapshot
Class Snapshot, cont’d
Interpreting Statistics

Assessment of Student Outcomes

Direct Assessment
Definition:
• Direct evidence of student performance collected from students
• Actual samples of student work
• Assess student performance by looking at their work products
Examples:
• Assignments
• Research papers
• Portfolios
• Dissertations/theses
• Oral presentations
• Websites
• Posters/videos
• Instructor-designed exams or quizzes

Indirect Assessment
Definition:
• Provides perspectives and perceptions about what has been learned
• Faculty must infer students’ skills, knowledge, and abilities rather than observing them from direct evidence
Examples:
• Exit interviews
• Surveys: student satisfaction, course instruction
• Focus groups
• Self-reported reflections
• Research journals or diaries
• Student ratings of skills
• Graduation rates
• Job placement rates

Formative Assessment
• Ongoing measure of student learning
• Provides feedback to student and instructor on the learning process
• Takes place during the learning process
Examples: classroom assessment techniques, targeted questions, in-class exercises, research journals/diaries, concept maps

Summative Assessment
• Information gathered at the end of instruction
• Used to evaluate the efficacy of the learning activity
• Answers the question of whether learners learned what you had hoped they would
• Typically quantitative
Examples: test scores, letter grades, graduation rates

Formative vs. Summative
“When the cook tastes the soup, that’s formative; when the guests taste the soup, that’s summative.” - Robert Stake
http://library.cpmc.columbia.edu/cere/web/ACGME/doc/formative_summative.pdf

Classroom Assessment
Provides a continuous flow of accurate information on student learning:
• Learner-centered
• Teacher-directed
• Mutually beneficial
• Formative
• Context-specific
• Ongoing
• Rooted in good teaching practice

Classroom Techniques: Examples
• 1-minute paper
• Muddiest point
• 1-sentence summary
• 3-2-1

CATs: Web Form Example
http://www2.library.ucla.edu/libraries/college/11306.cfm
One Minute Paper form
Fields: Quarter, Class, Instructor, Librarian, Your name, Your phone number, Your email address
1. What is the most significant or meaningful thing you have learned during the session? (Feel free to add more items)
2. What question(s) remain uppermost in your mind?

Example: Indiana University Bloomington Libraries
http://www.indiana.edu/~libinstr/Information_Literacy/assessment.html
Basic Goal 1. Appreciates the richness and complexity of the information environment.
Objective 1.1. You will be able to describe the wide array of information sources available and discuss their appropriateness for a given information problem.
Possible Measurement Techniques:
• Essay examination
• Oral report
• Practicum in the library**
• Written evaluation assignment

Basic Goal 4. Design and use a search strategy tailored to a specific information need.
Objective 4.1. You will be able to describe and execute an appropriate search strategy in a given information source or in multiple information sources.
Possible Measurement Techniques:
• Annotated bibliography with search strategy discussion included
• Collaborative learning exercise in class
• Practical exercise
• Practicum examination**
• Research journal
• Research paper proposal
• Research portfolio
• Research worksheet**

Diagnostic Assessment
• Assesses students’ knowledge and skills before instruction is designed
• Determines where students are in terms of learning, identifying gaps in student learning
• Can provide a baseline for student achievement in a subject area
Examples: standardized tests, review of students’ prior work, pre-tests/instructor-developed tests

Assessment in Practice: iSkills, SAILS, iLIT, ILT, ICDL

Assessment Tools: Evaluation Considerations
• Audience
• Test development: reliability and validity
• Costs: development and administration
• Test type: performance-based? Multiple-choice?
• Delivery mechanism: web? Print? Simulations?
• Content/standards
• Scoring and reporting features

SAILS (Standardized Assessment of Information Literacy Skills)
• Developed by librarians at Kent State University, 2000
• Test of information literacy skills
• Web-based test
• Multiple-choice questions
• 142 whole items in American English
• Students answer 40 items + 5 pilot items
• Based on 4 of the 5 ACRL Standards (not Standard 4)

iSkills Assessment (formerly ICT Literacy Assessment)
• Developed by ETS staff with CSU and 7 core institutions
• Assesses Information and Communication Technology (ICT) literacy
• Web-based, performance-based, scenario-based test
• Core and Advanced versions; 75 minutes
• 15 tasks: 14 short (1-3 min) and 1 long (15 min)
• Test tasks aligned with and informed by ACRL Standards and ISTE NETS standards

Information Literacy Test (ILT)
• Developed by James Madison University’s Center for Assessment and Research Studies and the Library
• Designed to assess the ACRL standards (not Standard 4)
• Web-based multiple-choice test
• 60 operational items and 5 pilot items
• Password-protected, secure administration
• Reliability: 0.88
• Cost: ??

iLIT
• Developed by California Community College librarians, CSU subject matter experts, test development experts, and psychometricians
• Web-based
• Multiple-choice questions
• Aligned to ACRL standards
• “Affordable”
• High-stakes, proctored

Your Institution’s Needs

The Characteristics in Practice