Practice Makes Perfect


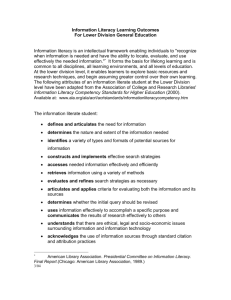

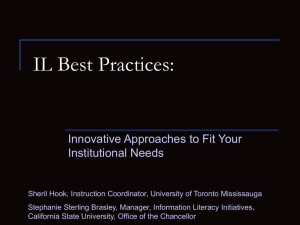

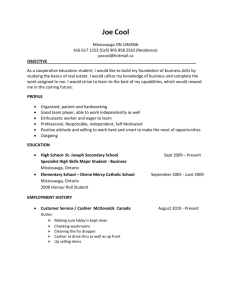

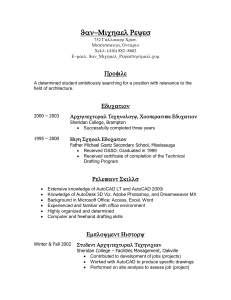

Practice Makes Perfect: applying and adapting best practices in information literacy

Sheril Hook, Instruction Coordinator
Esther Atkinson, Liaison Librarian
Andrew Nicholson, GIS/Data Librarian
University of Toronto Mississauga
WILU Conference, May 18, 2007

Agenda
• IL Program Development (Sheril)
• Examples of BP Category 5 (Andrew)
• research-based learning
• IL learning outcomes
• IL Program Development (Sheril)
• Category 5: articulation with the curriculum
• Category 10: Assessment/Evaluation
• Examples of BP Category 10 (Esther)
• data and its impact on instruction and planning

ALA/ACRL Characteristics of Programs of Information Literacy that Illustrate Best Practices
Category 5: Articulation with the Curriculum

Articulation with the curriculum for an information literacy program:
• is formalized and widely disseminated;
• emphasizes student-centered learning;
• uses local governance structures to ensure institution-wide integration into academic or vocational programs;
• identifies the scope (i.e., depth and complexity) of competencies to be acquired on a disciplinary level as well as at the course level;
• sequences and integrates competencies throughout a student’s academic career, progressing in sophistication; and
• specifies programs and courses charged with implementation.
http://www.ala.org/ala/acrl/acrlstandards/characteristics.htm

IL Program Development: Planning Part 1
• ACRL Best Practices Document
  • environmental scan
  • internal scan & internal development
  • external scan & external development
  • current state & next steps
• Shared Philosophical Framework
  • training & development
  • informing our pedagogical practices
  • developing expertise as shared responsibility
  • use of IL Standards and terminology

Environmental Scan
• Core curricula (horizontal/vertical integration in Part 2)
• Departmental goals
• Required courses for baseline expectations
• Representation on curriculum committees
• Movements in teaching/learning
  • student engagement

Environmental Scan: Student Engagement
• NSSE
  http://nsse.iub.edu/
• Peer learning, aka peer assisted learning, supplemental instruction
  http://www.peerlearning.ac.uk/
  http://www.umkc.edu/cad/SI/index.htm
• Re-invention Center
  http://www.sunysb.edu/Reinventioncenter/
• Inquiry-based, discovery, problem-based, or research-based learning
  http://www.reinventioncenter.miami.edu/BoyerSurvey/index.html
  http://www.reinventioncenter.miami.edu/pdfs/2001BoyerSurvey.pdf

Student Engagement
• research-based learning
• problem-based learning
• inquiry-based learning
• discovery learning
• knowledge building
Scardamalia, M., & Bereiter, C. (2003).

Shared Philosophical Framework
• information literacy as concept
• tool-based vs. concept-based teaching
• other literacies, e.g., technology, media, spatial, data
• inventory of current practices and outreach activities
• articles & workshops that help develop framework
• Learning theory
  • Bloom’s taxonomy
  • SOLO Taxonomy (Biggs)
• development & use of assessment tools

What is embedded IL?

Embedded: Assignment(s) collaboratively developed with instructor. IL stated learning outcomes in instructor's course materials. Session by librarian may or may not have been delivered during class time (e.g., series of walk-in workshops).

Integrated: Session content tailored to course assignment in consultation with instructor.
Session may or may not have been delivered during class time (e.g., series of open workshops available to students). Session may or may not have been optional.

Supplemental: Generic information literacy instruction; not tied directly to course outcomes or an assignment. Session may or may not have been optional for students. Session may or may not have been delivered during class time.

Sources: ANZIIL, p. 6; ANZIIL Framework, 2004; ACRL, 2007; Learning Commons, University of Guelph, n.d.

IL Standards

Standard One: The information literate student determines the nature and extent of the information needed.

Performance Indicator 2: The information literate student identifies a variety of types and formats of potential sources for information.

Outcomes include:
• Knows how information is formally and informally produced, organized, and disseminated
• Recognizes that knowledge can be organized into disciplines that influence the way information is accessed
• Identifies the value and differences of potential resources in a variety of formats (e.g., multimedia, database, website, data set, audio/visual, book)
• Differentiates between primary and secondary sources, recognizing how their use and importance vary with each discipline
• Realizes that information may need to be constructed with raw data from primary sources

"Information Literacy Competency Standards for Higher Education." American Library Association. 2006. http://www.ala.org/acrl/ilcomstan.html (Accessed 15 May, 2007)

Examples of IL Standards tailored and embedded into course curricula

U of T Mississauga Library

When we collaborate with our instructors on designing a class assignment, we emphasize:
• the Library Vision, “Leading for Learning”
• the availability of thousands of Research and Information Resources through the U of T Libraries: as of May 15, 2007, 395,184 e-holdings including e-books, journals, newspapers, etc.
• the key role of these resources in enhancing student engagement with their learning.
U of T Mississauga Library

We also stress to instructors that our electronic resources can be utilized:
• to enhance their instructional content.
• to foster an active learning environment in the course. Students will begin to think both conceptually and critically about the material.
• to develop information literacy competencies among the students, such as retrieving and critically evaluating information in any format.

More details about information literacy can be found at the Association of College & Research Libraries (ACRL) website.
http://www.ala.org/ala/acrl/acrlstandards/informationliteracycompetency.htm

Many disciplines are now releasing their own information literacy standards, based on the ACRL model.

Examples from
• Social Sciences
• Sciences
• Humanities

Assignment: Changes in Canadian Society
Outcomes
• identify and locate statistics needed
• evaluate statistics for use (do they cover the correct geography, time period, etc.?)
• analyze statistics
• communicate the results in term paper and presentation
• acknowledge the use of information
Social Sciences 1.

Research Question
By examining census data related to occupation, how have women’s working lives changed in a 100-year period?
Social Sciences 2.

Outcomes: identify and locate statistics needed.
• Students recognize that the Census collects statistics on occupation.
Social Sciences 3.

Outcomes: evaluate statistics for use.
• Students differentiate between census years and census geographies available.
• Students identify value and differences of resources in a variety of formats.
Social Sciences 4.

Outcomes: analyze statistics
• Students recognize the occupation categories being used.
Social Sciences 5.

Outcomes: analyze statistics
• Students create a cross tabulation table between Occupation and Sex.
1901 Census of Canada: Occupation by Sex
Social Sciences 6.
Outcomes: analyze statistics
2001 Census of Canada: Occupation by Sex
• Students next identify and locate the 2001 Census variables relating to occupation and sex.
On the next slide:
• A 2001 Census cross tabulation is then compared with the 1901 Census cross tabulation.
• Students will recognize that occupation categories will have changed in the 100-year time span.
• Students realize that the data can be extrapolated into multiple categories.
Social Sciences 7.

1901 Census of Canada: Occupation by Sex
2001 Census of Canada: Occupation by Sex
Outcomes: analyze statistics
Social Sciences 8.

Outcomes: communicate the results in term paper and presentation
• Students add tables to term paper and also to a class slideshow presentation.
Outcomes: acknowledge the use of information

1901 Census of Canada Bibliographic Entry
Canada. Statistics Canada. Census of Canada, 1901: public use microdata file – individuals file [computer file]. Victoria, B.C.: University of Victoria; Canadian Families Project [producer] [distributor]. January 2002. <http://myaccess.library.utoronto.ca/login?url=http://r1.chass.utoronto.ca/sdaweb/html/canpumf.htm>

2001 Census of Canada Bibliographic Entry
Canada. Statistics Canada. Census of Canada, 2001: public use microdata file - individuals file [computer file]. Revision 2. Ottawa, Ont.: Statistics Canada [producer]; Statistics Canada. Data Liberation Initiative [distributor], 2006/04/26. (STC 95M0016XCB) <http://myaccess.library.utoronto.ca/login?url=http://r1.chass.utoronto.ca/sdaweb/html/canpumf.htm>
Social Sciences 9.

Examples from
• Social Sciences
• Sciences
• Humanities

Assignment: Cited Reference Searching in the Sciences
Outcomes
• evaluate available resources to see if their scope will include citation tracking statistics and journal impact factor
• locate and interpret the citation information
Sciences 1.

Research Question
WYTTENBACH, R. and HOY, R. “DEMONSTRATION OF THE PRECEDENCE EFFECT IN AN INSECT” JOURNAL OF THE ACOUSTICAL SOCIETY OF AMERICA; 94 (2): 777-784 AUG 1993.
• Before including this reference in a paper, check to see how “reputable” both the article and the journal are in the discipline. Should it be included?
Sciences 2.

Outcomes: evaluate available resources to see if their scope includes citation tracking.
• Students recognize that journal articles have value in a particular discipline and that they can be measured in a variety of ways, including specialized citation indexes.
Sciences 3.

Outcomes: evaluate available resources.
• Students recognize the ability to perform cited reference searching in a variety of ways.
Sciences 4.

Outcomes: locate and interpret the citation information.
• Students locate the citation and realize that the authors consulted a variety of sources (“Cited References”) and, more importantly, that this citation has been cited frequently (“Times Cited”) in the years since publication.
Sciences 5.

Outcomes: interpret the citation information.
• Students can review the cited references from the article and examine the origins of the research.
Sciences 6.

Outcomes: interpret the citation information.
• By checking the “Times Cited”, students gain insight into the impact of the article in the discipline.
Sciences 7.

Outcomes: interpret the citation information.
• Students also access the JCR to check the “Impact Factor”.
Sciences 8.

Outcomes: interpret the citation information.
• Students can also rank other journals in the discipline by impact factor.
Sciences 9.

Examples from
• Social Sciences
• Sciences
• Humanities

Assignment: Myth over Time
Outcomes
• Explore the dynamism of myth by comparing and contrasting a selection of ancient and modern primary sources of a myth (at least one literary, one material)
• Identify the most significant changes from ancient to modern source and discuss those changes in light of the context in which each source was created
• Interpret those changes in terms of how they affect the meaning of the myth and how they came about in the first place
Humanities 1.
Research Question
How have myths changed over time?
Humanities 2.

Outcomes: compare and contrast a selection of primary sources (art)
• Students begin by finding primary sources: art works, music, scripts, opera and background information on artists.
• Google has images, but no provenance information.
• Camio has images, plus provenance and usage rights information.
Humanities 3.

Outcomes: identify the most significant changes...in light of the context in which each source was created.
• Students build on the learning acquired by finding background information on a time period/place.
Humanities 4.

Outcomes: identify the most significant changes...in light of the context in which each source was created.
• Students place a myth in the cultural context in which it’s being used or retold.
Humanities 5.

Outcomes: compare and contrast a selection of primary sources (music)
• Students listen to a symphony to identify the dynamism of the myth and interpret its significance.
Humanities 6.

Summary
• The U of T Mississauga Library provides access to thousands of digital and interactive resources for a variety of active and conceptual based learning activities.
• These resources can be utilized to promote both student engagement and the embedding of IL standards and outcomes.
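The cross tabulation step in the Social Sciences example (Occupation by Sex) can be illustrated with a minimal Python sketch. This is not the tool the students used: the records, occupation labels, and the `cross_tabulate` helper below are hypothetical and invented purely for illustration; they are not census figures.

```python
from collections import Counter

# Hypothetical records standing in for rows of a census microdata file.
# The labels and counts are invented for illustration, not real census data.
records = [
    {"sex": "Female", "occupation": "Domestic service"},
    {"sex": "Female", "occupation": "Domestic service"},
    {"sex": "Female", "occupation": "Teaching"},
    {"sex": "Male", "occupation": "Farming"},
    {"sex": "Male", "occupation": "Farming"},
    {"sex": "Male", "occupation": "Teaching"},
]


def cross_tabulate(rows, row_var, col_var):
    """Count how many records fall into each (row_var, col_var) combination."""
    counts = Counter((r[row_var], r[col_var]) for r in rows)
    row_labels = sorted({r[row_var] for r in rows})
    col_labels = sorted({r[col_var] for r in rows})
    # Counter returns 0 for combinations that never occur, so every cell is filled.
    return {rl: {cl: counts[(rl, cl)] for cl in col_labels} for rl in row_labels}


table = cross_tabulate(records, "occupation", "sex")
for occupation, by_sex in table.items():
    print(occupation, by_sex)
```

Running the same function on records from two census years would give two tables that can be compared side by side, which is where changes in the occupation categories become visible.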
ALA/ACRL Characteristics of Programs of Information Literacy that Illustrate Best Practices
Category 10: Assessment/Evaluation

Assessment/evaluation of information literacy includes program performance and student outcomes and:

for program evaluation:
• establishes the process of ongoing planning/improvement of the program;
• measures directly progress toward meeting the goals and objectives of the program;
• integrates with course and curriculum assessment as well as institutional evaluations and regional/professional accreditation initiatives; and
• assumes multiple methods and purposes for assessment/evaluation
  -- formative and summative
  -- short term and longitudinal;

http://www.ala.org/ala/acrl/acrlstandards/characteristics.htm

Category 10: Assessment/Evaluation (cont’d)

for student outcomes:
• acknowledges differences in learning and teaching styles by using a variety of appropriate outcome measures, such as portfolio assessment, oral defense, quizzes, essays, direct observation, anecdotal, peer and self review, and experience;
• focuses on student performance, knowledge acquisition, and attitude appraisal;
• assesses both process and product;
• includes student-, peer-, and self-evaluation;

http://www.ala.org/ala/acrl/acrlstandards/characteristics.htm

How are we teaching/Who are we reaching?
• Reflective teaching practices
  • Teaching portfolios
  • Sharing with colleagues and course instructors
• Evaluation and assessment
  • Student focus groups

Inventory of outreach & teaching
• How are you reaching students? How many?
• Who are current campus partners?
• Who are potential campus partners?
• Who will keep these relationships going?
• As a group where are you teaching?
Horizontally and vertically

IL Program Development: Planning Part 2
Assessment
• standardized assessments (ETS, SAILS, JMU)
• creation, use and reflection of assessments (background knowledge probe, muddiest point, observation, dialogue)
• instruction database

National standardized tools

iSkills™ (aka Information and Communication Technology (ICT) Literacy Assessment), developed by the Educational Testing Service.
http://www.ets.org/
• $35.00 US per student
• Measures all 5 ACRL Standards.
• Two test options: Core and Advanced.
• Computerized, task-based assessment in which students complete several tasks of varying length, i.e., not multiple choice.
• Intended for individual and cohort testing.
• 75 minutes to complete.

Standardized Assessment of Information Literacy Skills (SAILS), developed by Kent State University Library and Office of Assessment. It is also endorsed by the Association of Research Libraries.
https://www.projectsails.org/
• $3.00 US per student (capped at $2,000), but we can also administer it ourselves for free.
• Measures ACRL Standards 1, 2, 3, 5.
• Paper or computerized, multiple-choice.
• Intended for cohort testing only.
• 45 questions, 35 minutes to complete.

Information Literacy Test (ILT), developed by James Madison University (JMU Libraries and Center for Assessment and Research Studies).
http://www.jmu.edu/icba/prodserv/instruments_ilt.htm
• Measures ACRL Standards 1, 2, 3, 5.
• Computerized, multiple-choice.
• Intended for cohort and individual testing.
• 60 questions, 50 minutes to complete.

NPEC Sourcebook on Assessment: http://nces.ed.gov/pubs2005/2005832.pdf

ETS: Advanced Level – Access
http://www.ets.org/Media/Products/ICT_Literacy/demo2/index.html

ETS: Core Level - Manage
http://www.ets.org/Media/Products/ICT_Literacy/demo2/index.html

ETS: sample score report
Access: Find and retrieve information from a variety of sources.
What was I asked to do? Search a store’s database in response to a customer’s inquiry.
How did I do?
• You chose the correct store database on your first search.
• You selected the most appropriate category for searching.
• You chose the best search term for the database you selected.
• You selected one inappropriate item for the customer in addition to appropriate ones.
http://www.ets.org

ETS Pilot at UTM: Evaluating the Results
• The relationship between the Core and Advanced score ranges is not clear. Are the two tests on a continuous scale (e.g., with Core representing 100 – 300 and Advanced 400 – 700)?
• The University of Toronto Mississauga norms seem to be consistent with the norms from other institutions, and they all seem to be clustering in the middle.
• Though students received written feedback on their performance within each category, it is unclear how this feedback relates to their aggregate score and how it is derived from the students’ performance on the test (e.g., time taken to perform each task, number of clicks).
• It is unclear if students are being tested on the same variables within each category across all different versions of the test (e.g., the student reports suggest that some students were evaluated on different criteria in certain categories).
• The institution does not receive any granular statistical data (e.g., by performance within each category or by question), and only has access to individual student reports and the aggregate score for each student.

Learning Outcomes Assessment
• classroom assessment techniques (CATs)
• self-awareness inventories
• in-class pre-/post-assessments
• class assignments

Instruction Database

U of T Mississauga Library Information Literacy Program Data
• Records various characteristics of the instruction sessions
• May 2005 to April 2007
• Early data reflects what is being done and what needs to be addressed

Assessing Our Program
• Market penetration
• Reflective of current teaching practices

1. Market Penetration
• Number of students reached
• Departmental contact
• Number of instruction sessions given
• Level of vertical integration

| No. of Courses | Summer 2005 | Fall 2005 | Winter 2006 | Summer 2006 | Fall 2006 | Winter 2007 | Totals |
|---|---|---|---|---|---|---|---|
| One | 77 | 2279 | 1640 | 285 | 2718 | 1393 | 8392 |
| Two | 0 | 1118 | 151 | 8 | 850 | 232 | 2359 |
| Three | 0 | 248 | 8 | 0 | 113 | 18 | 387 |
| Four | 0 | 17 | 1 | 0 | 12 | 1 | 31 |
| Five | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

Table 1: Number of students reached per course

Fig. 1 Number of students reached per department
Fig. 2 Number of unique instruction sessions taught per department
Fig. 3 Number of instruction sessions per course level
Fig. 4 Number of instruction sessions per course level per department

What next?
• How do we gain further access to underserved departments?
• How do we add new departments to our IL program?
• Would we abandon classes with little impact on student experience?
• Developing stronger vertical integration by including more upper year courses

2. Reflective of current teaching practices
• Type of session
• Which ACRL Standards are addressed
• What tools are covered in the sessions
• Building a class profile

Fig. 5 Number of unique instruction sessions given by type

| Standard | Learning Goal | 2005 | 2006 | 2007 |
|---|---|---|---|---|
| Standard 1 | Scope | 20 | 30 | 8 |
| Standard 1 | Topic | 1 | 7 | 1 |
| Standard 2 | Search | 14 | 17 | 5 |
| Standard 2 | Tools | 29 | 24 | 23 |
| Standard 3 | Evaluation | 21 | 50 | 23 |
| Standard 5 | Legal | 35 | 38 | 17 |

Table 2 Number of instruction sessions with stated ACRL Standards

| Tools Covered | 2005 | 2006 | 2007 |
|---|---|---|---|
| Catalogue | 36 | 40 | 17 |
| Databases | 2 | 17 | 17 |
| GIS | 17 | 13 | 9 |
| Scholars Portal | 6 | 29 | 15 |
| Library Website | 46 | 51 | 21 |
| Other Tools | 8 | 11 | 8 |
| No Tools Covered | 2 | 4 | 2 |

Table 3 Number of instruction sessions teaching specific tools

Tools Covered, 2005 Summer to 2007 Winter

| Department | Catalogue | Databases | GIS | Scholars Portal | Library Website | Other Tools | No Tools Covered |
|---|---|---|---|---|---|---|---|
| Anthropology | 17 | 5 | 16 | 11 | 17 | 5 | 0 |
| Biology | 6 | 4 | 2 | 0 | 13 | 3 | 4 |
| Chemical & Physical Sciences | 2 | 2 | 0 | 0 | 4 | 0 | 0 |
| Economics | 1 | 0 | 0 | 1 | 1 | 1 | 0 |
| English & Drama | 15 | 4 | 0 | 6 | 12 | 1 | 0 |
| Geography | 13 | 3 | 18 | 9 | 25 | 6 | 2 |
| Historical Studies | 16 | 8 | 0 | 5 | 15 | 3 | 0 |
| Management | 1 | 1 | 0 | 1 | 2 | 0 | 0 |
| Mathematics & Computational Sciences | 2 | 1 | 1 | 2 | 3 | 0 | 0 |
| Philosophy | 1 | 0 | 0 | 0 | 1 | 0 | 0 |
| Political Science | 0 | 1 | 0 | 1 | 2 | 0 | 0 |
| Psychology | 3 | 1 | 0 | 3 | 5 | 0 | 0 |
| Sociology | 6 | 4 | 2 | 5 | 10 | 3 | 0 |
| ICC | 10 | 2 | 0 | 6 | 8 | 5 | 1 |
| Erindale Courses | 0 | 0 | 0 | 0 | 0 | 0 | 1 |

Table 4 Number of instruction sessions teaching specific tools by department

Tools Covered: Anthropology

| Course | Catalogue | Databases | GIS | Scholars Portal | Library Website | Other Tools | No Tools Covered |
|---|---|---|---|---|---|---|---|
| ANT101H5 | 1 | 1 | 0 | 1 | 1 | 1 | 0 |
| ANT102H5 | 1 | 0 | 0 | 0 | 1 | 0 | 0 |
| ANT200Y5 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |
| ANT204Y5 | 1 | 0 | 0 | 0 | 1 | 0 | 0 |
| ANT205H5 | 1 | 0 | 0 | 0 | 1 | 0 | 0 |
| ANT306H5 | 0 | 0 | 8 | 0 | 0 | 1 | 0 |
| ANT310H5 | 1 | 0 | 0 | 0 | 1 | 0 | 0 |
| ANT312H5 | 0 | 0 | 2 | 0 | 0 | 0 | 0 |
| ANT338H5 | 3 | 2 | 6 | 2 | 3 | 3 | 0 |
| ANT432H5 | 1 | 1 | 0 | 1 | 1 | 0 | 0 |
| FSC239Y5 | 8 | 0 | 0 | 7 | 8 | 0 | 0 |

Table 5 Tools taught in instruction sessions: Department of Anthropology 2005-2007

Reflective of teaching practices
• Identify strengths and weaknesses
• Gain an understanding of current teaching sessions
• Develop strategies to address the goals of an embedded program across the curriculum
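One way to sanity-check figures like these is to confirm that the per-year counts in Table 3 and the per-department counts in Table 4 add up to the same total for each tool. The short Python sketch below does that with the numbers as they appear in the two tables; the variable names are illustrative and are not part of the instruction database itself.

```python
# Table 3: sessions per tool for 2005, 2006, 2007 (values as given in the slides).
by_year = {
    "Catalogue": [36, 40, 17],
    "Databases": [2, 17, 17],
    "GIS": [17, 13, 9],
    "Scholars Portal": [6, 29, 15],
    "Library Website": [46, 51, 21],
    "Other Tools": [8, 11, 8],
    "No Tools Covered": [2, 4, 2],
}

# Table 4: sessions per tool for the 15 departments, in the order listed in the slides.
by_department = {
    "Catalogue": [17, 6, 2, 1, 15, 13, 16, 1, 2, 1, 0, 3, 6, 10, 0],
    "Databases": [5, 4, 2, 0, 4, 3, 8, 1, 1, 0, 1, 1, 4, 2, 0],
    "GIS": [16, 2, 0, 0, 0, 18, 0, 0, 1, 0, 0, 0, 2, 0, 0],
    "Scholars Portal": [11, 0, 0, 1, 6, 9, 5, 1, 2, 0, 1, 3, 5, 6, 0],
    "Library Website": [17, 13, 4, 1, 12, 25, 15, 2, 3, 1, 2, 5, 10, 8, 0],
    "Other Tools": [5, 3, 0, 1, 1, 6, 3, 0, 0, 0, 0, 0, 3, 5, 0],
    "No Tools Covered": [0, 4, 0, 0, 0, 2, 0, 0, 0, 0, 0, 0, 0, 1, 1],
}

# Total sessions per tool, summed over the three years.
totals = {tool: sum(counts) for tool, counts in by_year.items()}

# Tools whose yearly total disagrees with the departmental total.
mismatches = [
    tool for tool in by_year
    if sum(by_year[tool]) != sum(by_department[tool])
]

print(totals)
print("Mismatched tools:", mismatches)
```

An empty mismatch list suggests the two views were drawn from the same underlying session records; any tool that did appear there would be a prompt to recheck how sessions were coded.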
Building a class profile
• First year Classics course
• 55 students enrolled
• Summer session

| Previous instruction sessions | Unique Students |
|---|---|
| Once | 18 |
| Twice | 4 |
| 3 Times | 3 |
| 4 Times | 2 |
| 5 Times | 1 |
| Total | 28 |

Therefore 51% of students enrolled (28 of 55) have had previous instruction.

Table 6 Number of students enrolled in a first year Classics course with previous instruction sessions

Courses Taken with instruction
CCT100H5 PSY100Y5 ENG110Y5 ENG110Y5 ANT101H5 PSY100Y5 ANT101H5 HIS101H5 ENG110Y5 ENG110Y5
ENG120Y5 GGR117Y5 HIS101H5 PSY100Y5 CCT100H5 GGR117Y5 HIS101H5 FSC239Y5 PSY311H5 SOC209H5
ANT101H5 ENG110Y5 ENG110Y5 PSY100Y5 ANT102H5 ANT200Y5 FSC239Y5 GGR117Y5 SOC307H5 PSY100Y5

Table 7 Courses with previous instruction taken by students enrolled in a Classics course

Course profile
• About 50% (28 of 55) have already had at least one instruction session
• 10 students have had two or more
Questions
• What were our assumptions?
• How do we approach this class?

Course profile continued
• No easy answer
• The data allows us to look closely at our sessions:
  • Is there repetition across classes? Year after year?
  • What were the learning outcomes?
  • What type of session was it?
• We are now in the process of reflection and learning to build in time to work towards an embedded program

Thank you! Questions?

References

ACRL, Information Literacy Glossary. Last updated March 2007. Online at http://www.ala.org/ala/acrl/acrlissues/acrlinfolit/infolitoverview/infolitglossary/infolitglossary.htm

Anderson & Krathwohl, 2001

ANZIIL, Australian and New Zealand Information Literacy Framework. 2nd edition. Adelaide, AU, 2004. http://www.anziil.org/resources/Info%20lit%202nd%20edition.pd

Biggs, J. (1999). Teaching for quality learning at university. Buckingham, U.K.: Society for Research into Higher Education (SRHE) & Open University Press.

Learning Commons, University of Guelph, (n.d.). Framework for the design and delivery of learning commons programs and services.
Scardamalia, M., & Bereiter, C. (2003). Knowledge Building. In J. W. Guthrie (Ed.), Encyclopedia of Education, Second Edition (pp.). New York: Macmillan Reference, USA. Retrieved from http://ikit.org/fulltext/2003_knowledge_building.pdf

Bereiter, C., & Scardamalia, M. (2003). Learning to work creatively with knowledge. In E. De Corte, L. Verschaffel, N. Entwistle, & J. van Merriënboer (Eds.), Unravelling basic components and dimensions of powerful learning environments. EARLI Advances in Learning and Instruction Series. Retrieved from http://ikit.org/fulltext/inresslearning.pdf

Reinvention Center. http://www.reinventioncenter.miami.edu/pdfs/2001BoyerSurvey.pdf