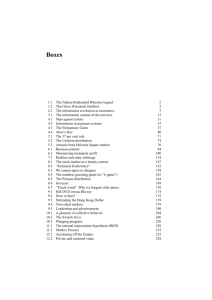

Sparse Coding in Sparse Winner Networks


ISNN 2007: The 4th International Symposium on Neural Networks

Janusz A. Starzyk¹, Yinyin Liu¹, David Vogel²
¹ School of Electrical Engineering & Computer Science, Ohio University, USA
² Ross University School of Medicine, Commonwealth of Dominica

Outline
• Sparse coding
• Sparse structure
• Sparse winner network with winner-take-all (WTA) mechanism
• Sparse winner network with oligarchy-take-all (OTA) mechanism
• Experimental results
• Conclusions
[Figure: map of cortical areas: motor cortex, somatosensory cortex, pars opercularis, Broca's area, sensory associative cortex, visual associative cortex, visual cortex, primary auditory cortex, Wernicke's area]

Sparse Coding
• How do we take in sensory information and make sense of it?
[Figures: Kandel Fig. 30-1 (Richard Axel, 1995); Kandel Fig. 23-5, body map labeling hip, trunk, arm, hand, foot, face, tongue, and larynx]

Sparse Coding
• Neurons become active to represent objects and concepts.
• Two factors produce a sparse neural representation ("sparse coding"):
  – the metabolic demands of the human sensory system and brain;
  – the statistical properties of the environment: not every single bit of information matters.
  (Source: http://gandalf.psych.umn.edu/~kersten/kersten-lab/CompNeuro2002/)
• "Grandmother cell" (J. V. Lettvin): in the extreme case, only one neuron on the top level represents and recognizes an object.
• More generally, a small group of neurons on the top level represents an object (C. Connor, "Friends and grandmothers," Nature, vol. 435, June 2005).

Sparse Structure
• The roughly 10¹² neurons in the human brain are sparsely connected.
• On average, each neuron is connected to other neurons through about 10⁴ synapses.
• A sparse structure enables efficient computation and saves energy and cost.

Sparse Coding in Sparse Structure
• Cortical learning: unsupervised learning
• Finding the sensory input activation pathway
[Figure: activation pathway from the sensory input upward, labeled "Increasing connection's adaptability"]
• Competition is needed: find the neurons with stronger activities and suppress those with weaker activities.
• Winner-take-all (WTA): a single neuron is the winner.
• Oligarchy-take-all (OTA): a group of neurons with strong activities are the winners.

Sparse winner network with winner-take-all (WTA)
• Local network model of cognition: R-net
• Primary layer and secondary layer
• Random sparse connections
• Intended for associative memories, not for feature extraction
• Not a hierarchical structure
[Figure: secondary layer above primary layer]
David Vogel, "A neural network model of memory and higher cognitive functions in the cerebrum"

Hierarchical learning network:
• Secondary neurons provide "full connectivity" in a sparse structure.
• More secondary levels can increase the sparsity.
• The network has primary levels and secondary levels.
Finding neuronal representations:
• Find the global winner, i.e. the neuron with the strongest signal.
• For a large number of neurons, this is very time-consuming.
[Figure: input pattern feeding primary level h, secondary level s, and primary level h+1 with the winner; the overall number of neurons increases from level to level]

Finding the global winner using localized WTA:
• Data transmission: feed-forward computation
• Winner tree finding: local competition and feedback
• Winner selection: feed-forward computation and weight adjustment
[Figure: input pattern, primary level h, secondary levels s1 and s2, and primary level h+1 with the global winner]

Data transmission: feed-forward computation
• Signal calculation:
$$s_i^{layer+1} = \sum_{j=1}^{l_i^{layer+1}} w_{ij}\, s_j^{layer}$$
where $l_i^{layer+1}$ is the number of input links of neuron $i$ and $s_j^{layer}$ are the signals of its pre-synaptic neurons (on the first layer, the input pattern).
• Transfer function: the output $s_i^{layer+1}$ depends on its input through an activation threshold.
[Figure: transfer function, output vs. input, with the activation threshold marked]

Winner tree finding: local competition and feedback
• Local competition is implemented with a current-mode WTA circuit (signals represented as currents).
• For each neuron $i$ on a level, the local winner among its post-synaptic neurons $N_i^{level+1}$ is
$$s_{winner,i}^{level+1} = \max_{j \in N_i^{level+1}} \sum_{k \in N_j^{level}} w_{jk}\, s_k^{level}, \qquad i = 1, 2, \ldots, N_{level}$$
where $N_j^{level}$ is the set of pre-synaptic neurons of neuron $j$. For example, $N_4^{level+1}$ is the winner among neurons 4, 5, 6, 7, and 8, the post-synaptic set of $N_4^{level}$.
• Branches to the losing neurons are logically cut off.
• Local competitions run across the whole network.
[Figure: local competitions on the network; in the local neighborhood $n_1^{h+1}, n_2^{h+1}, n_3^{h+1}$, the signal on $n_2^{h+1}$ goes to $n_1^{s2}$, and local winners are marked]

The winner network is found: it consists of all the neurons directly or indirectly connected with the global winner neuron.
[Figure: winner tree, distinguishing input neurons, winners and losers of local competitions, and inactive neurons]

Winner selection: feed-forward computation and weight adjustment
• Signals are recalculated through the logically connected links.
• Weights are adjusted using the concept of Hebbian learning. For neuron $n_1^{h+1}$ with inputs $x_1, x_2, x_3$ from $n_1^h, n_2^h, n_3^h$:
$$\Delta w_{1j}^{h+1} = \eta_{1j}^{h+1}\,(x_j - w_{1j}^{h+1}), \qquad j = 1, 2, 3$$
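The three WTA steps above (thresholded feed-forward signal calculation, local competition, and Hebbian weight adjustment on the winner tree) can be sketched in Python. This is a minimal, hypothetical sketch, not the authors' implementation. The layer sizes, the random weights in [0, 1), the threshold-linear transfer function, the learning rate `eta`, and all function names (`build_network`, `feed_forward`, `local_competition`, `winner_tree`, `run_wta`) are assumptions. The primary/secondary distinction and the circuit-level feedback are also collapsed into a single round of local competitions on every level.

```python
import random

def build_network(sizes, fan_in, seed=0):
    """Hypothetical sparse hierarchy: every neuron in layer l+1 receives
    `fan_in` randomly chosen pre-synaptic links from layer l."""
    rng = random.Random(seed)
    layers = []
    for n_prev, n_next in zip(sizes, sizes[1:]):
        # layers[l][i] is a list of [j, w_ij] pairs (mutable so weights can learn)
        layers.append([[[j, rng.random()] for j in rng.sample(range(n_prev), fan_in)]
                       for _ in range(n_next)])
    return layers

def feed_forward(layers, x, theta, active=None):
    """s_i^{l+1} = sum_j w_ij * s_j^l, then an assumed threshold-linear transfer.
    If `active` is given, only links (l, i, j) in it carry signal."""
    signals = [list(x)]
    for l, links in enumerate(layers):
        out = []
        for i, inputs in enumerate(links):
            s = sum(w * signals[l][j] for j, w in inputs
                    if active is None or (l, i, j) in active)
            out.append(s if s > theta else 0.0)
        signals.append(out)
    return signals

def local_competition(layers, signals):
    """Each active neuron j on level l keeps its link only to the post-synaptic
    neuron with the largest summed input; the other branches are cut off."""
    kept = set()
    for l, links in enumerate(layers):
        net = [sum(w * signals[l][j] for j, w in inputs) for inputs in links]
        post = {}  # j -> indices of its post-synaptic neurons on level l+1
        for i, inputs in enumerate(links):
            for j, _ in inputs:
                post.setdefault(j, []).append(i)
        for j, targets in post.items():
            if signals[l][j] > 0:
                kept.add((l, max(targets, key=lambda i: net[i]), j))
    return kept

def winner_tree(layers, kept, winner):
    """Walk down from the global winner, collecting every kept link that
    feeds it directly or indirectly."""
    tree, frontier = [], {winner}
    for l in reversed(range(len(layers))):
        below = set()
        for i in frontier:
            for j, _ in layers[l][i]:
                if (l, i, j) in kept:
                    tree.append((l, i, j))
                    below.add(j)
        frontier = below
    return tree

def run_wta(layers, x, theta=0.0, eta=0.1):
    """One step: forward pass, local competition, recalculation over the kept
    links, global winner, then Hebbian update dw_ij = eta * (x_j - w_ij)."""
    kept = local_competition(layers, feed_forward(layers, x, theta))
    signals = feed_forward(layers, x, theta, active=kept)
    top = signals[-1]
    winner = max(range(len(top)), key=lambda i: top[i])
    tree = winner_tree(layers, kept, winner)
    for l, i, j in tree:
        for link in layers[l][i]:
            if link[0] == j:
                link[1] += eta * (signals[l][j] - link[1])
    return winner, tree

rng = random.Random(1)
network = build_network([64, 256, 1024], fan_in=8)  # hypothetical sizes
pattern = [float(rng.randint(0, 1)) for _ in range(64)]
winner, tree = run_wta(network, pattern, theta=0.5)
print("global winner:", winner, "links in winner tree:", len(tree))
```

Weights are stored as mutable `[j, w]` pairs so the Hebbian step can update them in place, and only links on the winner tree are adjusted; the global winner is simply the strongest recalculated top-level signal.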
Number of global winners found is typically 1 with sufficient input links
[Figure: number of active neurons on the top level vs. number of input links to each neuron (2 to 10)]
• 64-256-1028-4096 network
[Figure: average signal strength of the active top-level neurons other than the global winner, relative to that of the global winner, vs. number of input links to each neuron]
• A single global winner is found with over 8 input connections per neuron.

Sparse winner network with oligarchy-take-all (OTA)
• The signal goes through the network layer by layer.
• Local competition (local WTA) is done after a layer is reached.
• There are multiple local winner neurons on each level.
• There are multiple winner neurons on the top level: oligarchy-take-all.
• The oligarchy represents the sensory input.
• OTA provides coding redundancy and is more reliable than WTA.
[Figure: OTA network distinguishing active neurons, winners and losers of local competitions, and inactive neurons]
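The OTA variant can be sketched in the same way. In this minimal Python sketch, which is hypothetical and not the authors' code, the signal is propagated one layer at a time. Once a layer is reached, every active neuron below runs a local WTA over its post-synaptic neurons, and any neuron that wins at least one local competition stays active; the surviving top-level neurons form the oligarchy. The layer sizes, random weights, threshold `theta`, and the overlap-based readout `recognize` are assumptions made for illustration.

```python
import random

def build_network(sizes, fan_in, seed=0):
    """Hypothetical sparse hierarchy: each neuron in layer l+1 has `fan_in`
    random pre-synaptic links from layer l with weights in [0, 1)."""
    rng = random.Random(seed)
    return [[[(j, rng.random()) for j in rng.sample(range(n_prev), fan_in)]
             for _ in range(n_next)]
            for n_prev, n_next in zip(sizes, sizes[1:])]

def ota_encode(layers, x, theta=0.0):
    """Propagate layer by layer; once a layer is reached, each active neuron
    below runs a local WTA over its post-synaptic neurons. Every neuron that
    wins at least one local competition stays active, so several winners
    survive on each level; the surviving top-level set is the oligarchy."""
    signals = list(x)
    for links in layers:
        net = [sum(w * signals[j] for j, w in inputs) for inputs in links]
        net = [s if s > theta else 0.0 for s in net]  # assumed threshold
        post = {}  # j -> post-synaptic neurons of j in this layer
        for i, inputs in enumerate(links):
            for j, _ in inputs:
                post.setdefault(j, []).append(i)
        winners = set()
        for j, targets in post.items():
            if signals[j] > 0:
                best = max(targets, key=lambda i: net[i])
                if net[best] > 0:
                    winners.add(best)
        signals = [net[i] if i in winners else 0.0 for i in range(len(links))]
    return {i for i, s in enumerate(signals) if s > 0}

def recognize(code, memory):
    """Hypothetical readout: return the stored label whose oligarchy shares
    the most neurons with `code`."""
    return max(memory, key=lambda label: len(code & memory[label]))

rng = random.Random(2)
network = build_network([64, 256, 1024, 4096], fan_in=8)  # hypothetical sizes
patterns = {d: [float(rng.randint(0, 1)) for _ in range(64)] for d in range(10)}
memory = {d: ota_encode(network, p, theta=0.5) for d, p in patterns.items()}

noisy = list(patterns[3])
for k in rng.sample(range(64), 5):  # flip 5 of the 64 input bits
    noisy[k] = 1.0 - noisy[k]
print(sorted(memory[3])[:10], recognize(ota_encode(network, noisy, 0.5), memory))
```

Because the code is a set of neurons rather than a single winner, a readout such as `recognize` can tolerate part of the oligarchy changing when input bits are flipped; the sketch makes no attempt to reproduce the recognition rates reported in the experiments.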
Experimental Results

WTA scheme in sparse network (input size: 8 x 8)
[Figure, two panels of neuronal activity vs. neuron index (0 to 5000), each showing the output neuronal activities, the activation threshold, and the global winner: (1) initial output signal strength for the original image; (2) winner selected in testing, where the winner is neuron 3728]

OTA scheme in sparse network (64-bit input)
Active neuron indices in the OTA network for each input digit:

| Digit 0 | Digit 1 | Digit 2 | Digit 3 | Digit 4 | Digit 5 | Digit 6 | Digit 7 | Digit 8 | Digit 9 |
|---|---|---|---|---|---|---|---|---|---|
| 72 | 237 | 294 | 109 | 188 | 103 | 68 | 237 | 35 | 184 |
| 91 | 291 | 329 | 122 | 199 | 175 | 282 | 761 | 71 | 235 |
| 365 | 377 | 339 | 237 | 219 | 390 | 350 | 784 | 695 | 237 |
| 371 | 730 | 771 | 350 | 276 | 450 | 369 | 1060 | 801 | 271 |
| 1103 | 887 | 845 | 353 | 307 | 535 | 423 | 1193 | 876 | 277 |
| 1198 | 1085 | 1163 | 564 | 535 | 602 | 523 | 1218 | 1028 | 329 |
| 1432 | 1193 | 1325 | 690 | 800 | 695 | 538 | 1402 | 1198 | 759 |
| 1639 | 1218 | 1382 | 758 | 1068 | 1008 | 798 | 1479 | 1206 | 812 |
| … | … | … | … | … | … | … | … | … | … |

On average, 28.3 active neurons represent each object; the number varies from 26 to 34.

Percentage of correct recognition
[Figure: percentage of correct recognition vs. number of bits changed in the pattern (0 to 50), for the OTA network, the winner of the WTA network, and the accuracy level of random recognition]
• OTA has better fault tolerance than WTA.

Conclusions & Future work
• Sparse coding is built in sparsely connected networks.
• WTA scheme: local competition accomplishes the global competition using primary and secondary layers, which allows an efficient hardware implementation.
• OTA scheme: local competition reduces neuronal activity.
• OTA provides redundant coding, which makes it more reliable and robust.
• WTA & OTA: learning memory for developing machine intelligence.
Future work:
• Introduce temporal sequence learning.
• Build a motor pathway on such a learning memory.
• Combine it with a goal-creation pathway to build an intelligent machine.