Dialog Management - Intelligent Software Lab.

Dialog Management

Intelligent Robot Lecture Note

Dialog System & Architectures

Dialogue System

• A system that provides an interface between the user and a computer-based application

• Interacts on a turn-by-turn basis

• Dialogue manager

► Controls the flow of the dialogue

► Main flow
◦ gathering information from the user
◦ communicating with the external application
◦ communicating information back to the user

► Three types of dialogue system
◦ finite state- (or graph-) based
◦ frame-based
◦ agent-based

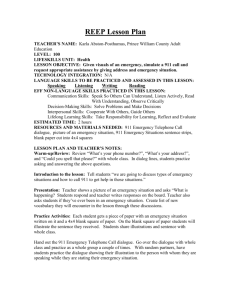

Dialog System Architecture

• A typical dialog system has the following components

► User Interface
◦ Input: speech recognition, keyboard, pen-gesture recognition, ...
◦ Output: display, sound, vibration, ...

► Context Interpretation
◦ Natural language understanding (NLU)
◦ Reference resolution
◦ Anaphora resolution

► Dialog Management
◦ History management
◦ Discourse management

• Many dialog system architectures have been introduced.

► DARPA Communicator
► GALAXY Communicator, etc.

Dialog System Architecture

• The DARPA Communicator program was designed to support the creation of speech-enabled interfaces that scale gracefully across modalities, from speech-only to interfaces that include graphics, maps, pointing and gesture.

[Figure: sites grouped around the DARPA Communicator program: MIT, AT&T, CMU, SRI, CU, Bell Lab, BBN]

Galaxy Communicator

• The Galaxy Communicator software infrastructure is a distributed, message-based, hub-and-spoke infrastructure optimized for constructing spoken dialogue systems.

• An open source architecture for constructing dialogue systems

• History
► MIT Galaxy system
► Developed and maintained by MITRE Corporation
► Current version is 4.0

Galaxy Communicator

• The architecture

Galaxy Communicator

• Message Passing Protocol

CU Communicator

• Dialogue management in CU Communicator

► Event-driven approach
◦ The current context of the system is used to decide what to do next
◦ Does not need a dialogue script
◦ A general engine operates on the semantic representations and the current context to control the interaction flow

► Mixed-initiative approach
◦ Does not separate “user initiative” from “system initiative”

CMU Communicator

• Dialogue management in CMU Communicator

► Frame-based approach
◦ Form-filling method
◦ Does not specify a particular order in which slots need to be filled
◦ Loosens the requirement for the system designer to correctly intuit the natural order in which information is supplied

► Agenda-based approach
◦ Treats the task as one of cooperatively constructing a complex data structure, a product
◦ Uses a product tree which is developed dynamically
◦ Supports topic shifts

Queen’s Communicator

• Object-oriented architecture, distributed and inherited functionality: generic and domain-specific

• Uses discourse history and confirmation status to determine how to confirm (explicit or implicit)

Dialog System Approaches

Dialog System approaches

• There are many approaches to representing dialog

► Frame based
► Agent based
► VoiceXML based
► Information State approach

Frame-based Approach

• Frame-based system

► Asks the user questions to fill slots in a template in order to perform a task (form-filling task)
► Permits the user to respond more flexibly to the system’s prompts (as in Example 2)
► Recognizes the main concepts in the user’s utterance

Example 1)
• System: What is your destination?
• User: London.
• System: What day do you want to travel?
• User: Friday.

Example 2)
• System: What is your destination?
• User: London on Friday around 10 in the morning.
• System: I have the following connection …
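The form-filling behaviour of Examples 1 and 2 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the slot names, the prompts, the tiny city and weekday vocabularies, and the keyword-based `extract_slots` function are all hypothetical stand-ins for a real language-understanding component.

```python
# Hypothetical travel frame; slot names, prompts and vocabularies are illustrative.
PROMPTS = {
    "destination": "What is your destination?",
    "day": "What day do you want to travel?",
}
CITIES = {"london", "paris"}
DAYS = {"monday", "tuesday", "wednesday", "thursday",
        "friday", "saturday", "sunday"}

def extract_slots(utterance):
    """Toy concept spotter: pick out known cities and weekdays."""
    found = {}
    for word in utterance.lower().replace(".", " ").split():
        if word in CITIES:
            found["destination"] = word
        elif word in DAYS:
            found["day"] = word
    return found

def next_prompt(frame):
    """Return the prompt for the first unfilled slot, or None if the frame is full."""
    for slot, prompt in PROMPTS.items():
        if frame.get(slot) is None:
            return prompt
    return None

def run_dialog(user_turns):
    """Fill the frame from a list of user utterances; return (frame, prompts asked)."""
    frame = {slot: None for slot in PROMPTS}
    asked = []
    turns = iter(user_turns)
    while (prompt := next_prompt(frame)) is not None:
        asked.append(prompt)
        utterance = next(turns, None)
        if utterance is None:        # no more user input
            break
        frame.update(extract_slots(utterance))
    return frame, asked
```

With the turns of Example 1 the sketch asks two questions; with the single over-informative answer of Example 2 both slots are filled after one question, because every slot found in an utterance is accepted regardless of which slot was asked for.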

Frame-based Approach

• Advantages
► The ability to use natural language and to fill multiple slots at once
► The system processes the user’s over-informative answers and corrections

• Disadvantages
► Appropriate only for well-defined tasks in which the system takes the initiative in the dialog
► Difficult to predict which rule is likely to fire in a particular context

• Related systems
► CU Communicator
► CMU Communicator

Agent-based Approach

• Properties
► Complex communication using unrestricted natural language
► Mixed-initiative
► Co-operative problem solving
► Theorem proving, planning, distributed architectures
► Conversational agents

• Example

User: I’m looking for a job in the Calais area. Are there any servers?

System: No, there aren’t any employment servers for Calais. However, there is an employment server for Pas-de-Calais and an employment server for Lille. Are you interested in one of these?

The system attempts to provide a more co-operative response that might address the user’s needs.

Agent-based Approach

• Advantages
► Suitable for more complex dialogues
► Mixed-initiative dialogues

• Disadvantages
► Much more complex resources and processing
► Requires sophisticated natural language capabilities
► Complicated communication between dialogue modules

• Related work
► TRAINS project
► TRIPS project

TRAINS project

• TRAINS (1995~1997)

► CISD research group at the University of Rochester
◦ http://www.cs.rochester.edu/research/cisd/projects/trains/

► Task
◦ Finding efficient routes for trains

► Goal
◦ Robust performance on a very simple task

► Approach
◦ Speech acts, plan reasoning

► Demo
◦ http://www.cs.rochester.edu/research/cisd/projects/trains/movies/TRAINS95-v1.3-Pia.qt.gz

TRIPS Project

• TRIPS

► The Rochester Interactive Planning System
◦ http://www.cs.rochester.edu/research/cisd/projects/trips/

► Goal
◦ An intelligent planning assistant (natural language + graphical display)
◦ Extending the TRAINS system to several domains

► Domains (currently supported)
◦ Pacifica: evacuating people from an island
◦ Airlift: organizing airlift scheduling
◦ TRIPS-911: managing the resources in small 911 emergencies
◦ Underwater Survey: planning in collaboration with semi-autonomous robot agents

► Demo (Pacifica)
◦ http://www.cs.rochester.edu/research/cisd/projects/trips/movies/TRIPS-98_v4.0/200K/TRIPS-98_v4.0_200K.html

TRIPS Architecture

The TRIPS System Architecture

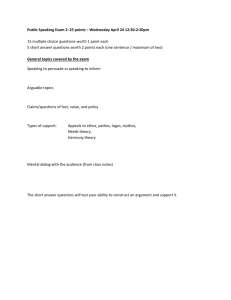

VoiceXML-based System

• What is VoiceXML?
► The HTML (XML) of the voice web
► The open standard markup language for voice applications

• What it can do
► Rapid implementation and management
► Integration with the World Wide Web
► Mixed-initiative dialogue
► Push-button (touch-tone) input on the telephone
► A simple solution for implementing dialogues

Dialogue by VoiceXML

• Most VoiceXML dialogues are built from
► <menu>
► <form> (form-based dialog)

• Form-based dialogue is similar to a “slot-filling” system

• Limiting the user’s response
► Goal
◦ Verification, and help for invalid responses
◦ Good speech recognition accuracy

Example - <Menu>

Browser: Say one of: Sports scores; Weather information; Log in.

User: Sports scores

<vxml version="2.0" xmlns="http://www.w3.org/2001/vxml">
  <menu>
    <prompt>Say one of: <enumerate/></prompt>
    <choice next="http://www.example.com/sports.vxml">
      Sports scores
    </choice>
    <choice next="http://www.example.com/weather.vxml">
      Weather information
    </choice>
    <choice next="#login">
      Log in
    </choice>
  </menu>
</vxml>

Example – <Form>

Browser: Please say your complete phone number

User: 800-555-1212

Browser: Please say your PIN code

User: 1 2 3 4

<vxml version="2.0" xmlns="http://www.w3.org/2001/vxml">
  <form id="login">
    <field name="phone_number" type="phone">
      <prompt>
        Please say your complete phone number
      </prompt>
    </field>
    <field name="pin_code" type="digits">
      <prompt>
        Please say your PIN code
      </prompt>
    </field>
    <block>
      <submit next="http://www.example.com/servlet/login" namelist="phone_number pin_code"/>
    </block>
  </form>
</vxml>

Information State Approach

• A method of specifying a dialogue theory that makes it straightforward to implement

• Consists of the following five constituents

► Information Components
◦ Including aspects of common context
◦ (e.g., participants, common ground, linguistic and intentional structure, obligations and commitments, beliefs, intentions, user models, etc.)

► Formal Representations
◦ How to model the information components
◦ (e.g., as lists, sets, typed feature structures, records, etc.)

► Dialogue Moves
◦ Trigger the update of the information state
◦ Are correlated with externally performed actions

► Update Rules
◦ Govern the updating of the information state

► Update Strategy
◦ For deciding which rules to apply at a given point from the set of applicable ones
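To make the five constituents concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the two information components (`common_ground`, `agenda`), the tuple encoding of dialogue moves, the two update rules, and the "try every rule once, in order" update strategy are hypothetical and are not taken from TRINDI or any other toolkit.

```python
from dataclasses import dataclass, field

@dataclass
class InformationState:
    # Information components, formally represented as a set and a list.
    common_ground: set = field(default_factory=set)   # (attribute, value) pairs
    agenda: list = field(default_factory=list)        # pending system moves

def rule_integrate_answer(state, move):
    """Update rule: an 'answer' dialogue move adds its content to the common ground."""
    if move[0] == "answer":
        state.common_ground.add(move[1])
        return True
    return False

def rule_schedule_question(state, move):
    """Update rule: once the destination is known, plan to ask for the day."""
    known = {attribute for attribute, _ in state.common_ground}
    if ("destination" in known and "day" not in known
            and ("ask", "day") not in state.agenda):
        state.agenda.append(("ask", "day"))
        return True
    return False

UPDATE_RULES = [rule_integrate_answer, rule_schedule_question]

def update(state, move):
    """Update strategy: try every rule once, in list order; return the rules that fired."""
    return [rule.__name__ for rule in UPDATE_RULES if rule(state, move)]
```

Calling `update(state, ("answer", ("destination", "London")))` on a fresh state fires both rules in turn: the answer enters the common ground, and a question about the day is placed on the agenda.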

Example Dialogue

Reading Lists

• B. Pellom, W. Ward, S. Pradhan. 2000. The CU Communicator: An Architecture for Dialogue Systems. International Conference on Spoken Language Processing (ICSLP), Beijing, China.

• Rudnicky, A., Thayer, E., Constantinides, P., Tchou, C., Shern, R., Lenzo, K., Xu, W., Oh, A. 1999. Creating natural dialogs in the Carnegie Mellon Communicator system. Proceedings of Eurospeech, 1531-1534.

• Ian M. O’Neill and Michael F. McTear. 2000. Object-Oriented Modelling of Spoken Language Dialogue Systems. Natural Language Engineering, Special Issue on Best Practice in Spoken Language Dialogue System Engineering, Volume 6, Part 3.

• George Ferguson and James Allen. 1998. TRIPS: An Intelligent Integrated Problem-Solving Assistant. Proceedings of the Fifteenth National Conference on Artificial Intelligence (AAAI-98), Madison, WI, July 26-30, pp. 567-573.

• S. Larsson, D.R. Traum. 2001. Information state approach to dialogue management. Current and New Directions in Discourse & Dialogue, Kluwer Academic Publishers.

• S. Larsson, D.R. Traum. 2003. Information state and dialogue management in the TRINDI dialogue move engine toolkit. Natural Language Engineering.

Dialog Modeling Techniques


Reinforcement Learning

Supervised Learning
• Training info = desired (target) outputs
• Inputs (Feature, Target Label) → Supervised Learning System → Outputs
• Objective: to minimize error (Target Output – Actual Output)

Reinforcement Learning (RL)
• Training info = evaluations (“rewards”/“costs”)
• Inputs (State, Action, Reward) → RL System → Outputs (“actions”)
• Objective: to get as much reward as possible

Stochastic Modeling Approach

• Stochastic Dialog Modeling [E. Levin et al, 2000]

► Optimization Problem
◦ Minimization of the expected cost $C_D$

$$C_D = \sum_i w_i \langle C_i \rangle$$

◦ Each $C_i$ measures the effectiveness and the achievement of the application goal

► Mathematical Formalization
◦ Markov Decision Process
– Defining state spaces, action sets, and a cost function
– Formalizing dialog design criteria as an objective function

► Automatic Dialog Strategy Learning from Data
◦ Reinforcement Learning

Mathematical Formalization

• Markov Decision Process (MDP)

► Problems with a cost (or reward) objective function are well modeled as a Markov Decision Process.
► An MDP is the specification of a sequential decision problem for a fully observable environment that satisfies the Markov assumption and yields additive rewards.

[Figure: the Dialog Manager sends a Dialog Action (prompts, queries, etc.) to the Environment (user, external DB or other servers) and receives back the Dialog State and a Cost (turn, error, DB access, etc.).]

Dialog as a Markov Decision Process

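[Figure, after [S. Young, 2006]: the user holds a user goal $s_u$ and produces a user dialog act $a_u$. Speech understanding yields a noisy estimate $\tilde{a}_u$ of the user dialog act. The state estimator combines this with the dialog history $s_d$ into the machine state $\tilde{s}_m = (\tilde{s}_u, \tilde{a}_u, \tilde{s}_d)$. The dialog policy maps the machine state to a machine dialog act $a_m$, which speech generation renders for the user. Each turn $k$ earns a reward $r(\tilde{s}_m, a_m)$, and reinforcement learning optimizes the policy with respect to the reward accumulated over the turns of this MDP.]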

Month and Day Example

• State Space

► State $S_t$ represents all the knowledge of the system at time t (values of the relevant variables).
◦ $S_t = (d, m)$ where $d = -1, \ldots, 31$ and $m = -1, \ldots, 12$
◦ 0 : not yet filled
◦ -1 : completely filled
◦ (0,0) = Initial State
◦ (-1,-1) = Final State

Month and Day Example

• State Space

[Figure: the initial state with neither slot filled; the states with only the month filled (Month: 1 … Month: 12); the states with only the day filled (Day: 1 … Day: 31); the states with both slots filled (Day: 1, Month: 1 … Day: 31, Month: 12); and the final state.]

1 (initial) + 12 (months) + 31 (days) + 365 (dates) + 1 (final)

Total dialog states: 410
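The count of 410 states can be checked by enumerating the state space directly. The sketch below uses the (d, m) encoding from the previous slide (0 for "not yet filled", (-1, -1) for the final state); the choice of a non-leap `YEAR` is an assumption made to match the 365 dates on the slide.

```python
import calendar

YEAR = 2023  # assumption: any non-leap year, so that there are 365 valid dates

months_only = [(0, m) for m in range(1, 13)]     # month filled, day not yet filled
days_only = [(d, 0) for d in range(1, 32)]       # day filled, month not yet filled
dates = [(d, m)
         for m in range(1, 13)
         for d in range(1, calendar.monthrange(YEAR, m)[1] + 1)]  # valid (day, month) pairs

# initial state + partially filled states + fully filled states + final state
states = [(0, 0)] + months_only + days_only + dates + [(-1, -1)]
total = len(states)
```

Only valid calendar dates are counted among the fully filled states, which is why the term is 365 rather than 31 × 12 = 372.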

Month and Day Example

• Action Set

► At each state, the system can choose an action $a_t$.
◦ Dialog Actions
– Asking the user for input, providing the user with some output, confirmation, etc.
◦ Actions available at state $S_t$
– Which month? ($A_m$)
– Which day? ($A_d$)
– Which date? ($A_{dm}$)
– Thank you. Good Bye. ($A_f$)

Month and Day Example

• State Transitions

► When an action is taken, the system changes its state.

[Figure: after SYSTEM: "Which month?", the state with neither slot filled moves to one of the states Month: 1, …, Month: 11, Month: 12.]

► The new state might depend on external inputs: the transition is not deterministic.

► Transition probability: $P_T(S_{t+1} \mid S_t, a_t)$

Month and Day Example

• Action Costs and Objective Function

► A cost $C_t$ is associated with action $a_t$ at state $S_t$.

[Figure: after SYSTEM: "Which month?", the state with neither slot filled moves to one of the states Month: 1, …, Month: 11, Month: 12.]

► Cost distribution: $P_c(C_t \mid S_t, a_t)$

$$C_D = w_i \cdot \#\text{interactions} + w_e \cdot \#\text{errors} + w_f \cdot \#\text{unfilled slots}$$

Month and Day Example

Strategy 1. Close immediately: "Good Bye." with both slots still unfilled.

$$C_1 = w_i \cdot 1 + w_f \cdot 2$$

Strategy 2. Ask "Which date?", obtain day and month in one answer, then "Good Bye."

$$C_2 = w_i \cdot 2 + w_e \cdot 2 \cdot P_1 + w_f \cdot 0$$

Strategy 3. Ask "Which day?", then "Which month?", then "Good Bye."

$$C_3 = w_i \cdot 3 + w_e \cdot 2 \cdot P_2 + w_f \cdot 0$$

The optimal strategy is the one that minimizes the cost.

• Strategy 1 is optimal if $w_i + P_2 \cdot w_e - w_f > 0$
► The recognition error rate is too high.

• Strategy 3 is optimal if $2 (P_1 - P_2) \cdot w_e - w_i > 0$
► $P_1$ is much higher than $P_2$, which outweighs the cost of a longer interaction.
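The comparison between the three strategies of the month-and-day example can be reproduced numerically. The sketch below evaluates the costs $C_1 = w_i + 2 w_f$, $C_2 = 2 w_i + 2 P_1 w_e$ and $C_3 = 3 w_i + 2 P_2 w_e$ and picks the cheapest. Reading $P_1$ and $P_2$ as the error rates of the "Which date?" question and of the single-slot questions is an interpretation, and the weights used in the usage note are made-up values.

```python
def strategy_costs(w_i, w_e, w_f, p1, p2):
    """Expected cost of each strategy, following the three cost expressions."""
    return {
        "strategy 1": w_i * 1 + w_f * 2,        # one turn, two unfilled slots
        "strategy 2": w_i * 2 + w_e * 2 * p1,   # two turns, errors at rate p1
        "strategy 3": w_i * 3 + w_e * 2 * p2,   # three turns, errors at rate p2
    }

def best_strategy(w_i, w_e, w_f, p1, p2):
    """Return the name of the strategy with the minimum expected cost."""
    costs = strategy_costs(w_i, w_e, w_f, p1, p2)
    return min(costs, key=costs.get)
```

For example, `best_strategy(1, 5, 10, 0.5, 0.1)` selects strategy 3: here $2 (P_1 - P_2) w_e - w_i = 3 > 0$, so asking two separate questions pays for the extra turn. Lowering the penalty for unfilled slots to `w_f = 1` makes strategy 1 the cheapest instead.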

Policy

• The goal of MDP is to learn a policy, π : S→A

► The policy selects the next action $a_t$ based on the current observed state $s_t$.
► But we have no training examples of the form <s,a>
► Training examples are of the form <s,a,s’,r>

$$s_0 \xrightarrow{a_0,\; r_0} s_1 \xrightarrow{a_1,\; r_1} s_2 \xrightarrow{a_2,\; r_2} \dots$$

Goal: learn to choose actions that maximize the reward function

$$r_t + \gamma r_{t+1} + \gamma^2 r_{t+2} + \dots = \sum_{i=0}^{\infty} \gamma^i r_{t+i}$$

where $0 \le \gamma < 1$ is the discount factor.

Policy

• Discounted Cumulative Reward

► Infinite-Horizon Model

$$V^{\pi}(s_t) = r_t + \gamma r_{t+1} + \gamma^2 r_{t+2} + \dots = \sum_{i=0}^{\infty} \gamma^i r_{t+i} \quad (\text{where } 0 \le \gamma < 1)$$

◦ γ = 0 : $V^{\pi}(s_t) = r_t$
– Only the immediate reward is considered.
◦ γ closer to 1 : delayed reward
– Future rewards are given greater emphasis relative to the immediate reward.

• Optimal Policy (π*)

► The policy π that maximizes $V^{\pi}(s)$ for all states s.

$$\pi^* = \arg\max_{\pi} V^{\pi}(s) \quad \text{for all } s$$

Q-Learning

• Define the Q-function.

► As an evaluation function.

$$Q(s,a) = r(s,a) + \gamma V^*(\delta(s,a))$$

• Rewrite the optimal policy.

$$\pi^*(s) = \arg\max_a \left[ r(s,a) + \gamma V^*(\delta(s,a)) \right]$$

$$\pi^*(s) = \arg\max_a Q(s,a)$$

• Why is this rewrite important?

► It shows that if the agent learns the Q-function instead of the V* function,
◦ it will be able to select optimal actions even when it has no knowledge of the functions r and δ.

Q-Learning

• How can Q be learned?

► Learning the Q function corresponds to learning the optimal policy.
◦ The close relationship between Q and V*:

$$V^*(s) = \max_{a'} Q(s,a')$$

► It can be written recursively as

$$Q(s,a) = r(s,a) + \gamma \max_{a'} Q(\delta(s,a), a')$$

◦ This recursive definition of Q provides the basis for algorithms that iteratively approximate Q.

► The agent updates the table entry for $\hat{Q}(s,a)$ following each transition, according to the rule

$$\hat{Q}(s,a) \leftarrow r + \gamma \max_{a'} \hat{Q}(s',a')$$

Q-Learning

• Q-Learning algorithm for deterministic MDP.
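A minimal Python sketch of tabular Q-learning for a deterministic MDP is given below, using the table update Q̂(s,a) ← r + γ max Q̂(s',a'). The four-cell corridor world, its reward of 100 for reaching the goal, the purely random action choice during training, and all function names are assumptions made for illustration; the algorithm figure of the original slide is not reproduced here.

```python
import random

def q_learning(states, actions, delta, reward, goal,
               gamma=0.9, episodes=500, seed=0):
    """Tabular Q-learning for a deterministic MDP with an absorbing goal state."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in states for a in actions}   # initialise Q-hat to zero
    for _ in range(episodes):
        s = rng.choice(states)                  # random start state
        while s != goal:
            a = rng.choice(actions)             # explore with random actions
            s_next = delta(s, a)                # deterministic transition
            r = reward(s, a)
            # Q-hat(s, a) <- r + gamma * max_a' Q-hat(s', a')
            q[(s, a)] = r + gamma * max(q[(s_next, b)] for b in actions)
            s = s_next
    return q

# Hypothetical toy world: cells 0..3 in a row, cell 3 is the goal.
STATES = [0, 1, 2, 3]
ACTIONS = ["left", "right"]
GOAL = 3

def delta(s, a):
    return min(s + 1, GOAL) if a == "right" else max(s - 1, 0)

def reward(s, a):
    return 100.0 if delta(s, a) == GOAL else 0.0

q_hat = q_learning(STATES, ACTIONS, delta, reward, GOAL)
policy = {s: max(ACTIONS, key=lambda a: q_hat[(s, a)]) for s in STATES if s != GOAL}
```

In this toy world the learned greedy policy moves right from every non-goal cell, and the learned values decay by the factor γ with each step of distance from the goal.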


Action Selection in Q-Learning

• How actions are chosen by the agent.

► Select the action that maximizes $\hat{Q}$.
◦ Thereby exploiting its current approximation $\hat{Q}$.
◦ Biased toward the previously trained $\hat{Q}$.

► Probability assignment
◦ Actions with higher $\hat{Q}$ values are assigned higher probabilities.
◦ But every action is assigned a nonzero probability.

$$P(a_i \mid s) = \frac{k^{\hat{Q}(s,a_i)}}{\sum_j k^{\hat{Q}(s,a_j)}}$$

◦ k > 0 is a constant that determines how strongly the selection favors actions with high $\hat{Q}$ values.
– Larger values of k assign higher probabilities to actions with above-average $\hat{Q}$.
– This causes the agent to exploit what it has learned and seek actions it believes will maximize its reward.
◦ k is varied with the number of iterations.
– Exploitation vs. exploration
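The probabilistic selection rule, P(a_i | s) proportional to k raised to the power Q̂(s, a_i), can be sketched as follows. The function names and the dictionary representation of one state's Q̂ values are assumptions made for this example.

```python
import random

def action_probabilities(q_values, k):
    """P(a_i | s) = k**Q(s, a_i) / sum_j k**Q(s, a_j) for one state's Q-hat values."""
    weights = {a: k ** q for a, q in q_values.items()}
    total = sum(weights.values())
    return {a: w / total for a, w in weights.items()}

def choose_action(q_values, k, rng=random):
    """Sample an action according to the probabilities above."""
    probs = action_probabilities(q_values, k)
    actions = list(probs)
    return rng.choices(actions, weights=[probs[a] for a in actions])[0]
```

With Q̂ values of 0 for "left" and 2 for "right", k = 2 gives "right" a probability of 4/5, while k = 10 gives it 100/101, so a larger k means more exploitation. With k = 1 every action is equally likely, which is pure exploration.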

Example-based Dialogue Modeling

• Limitation of Rule-based Dialogue Modeling

► For situation-action rules, there are about $2^{13}$ possible states in the EPG domain.
◦ Problems
– Much human effort
– Inconsistency
– Unreliability

• How to automatically design situation-based rules

► We have developed example-based dialogue modeling.
◦ It uses dialogue examples indexed from a dialogue corpus.
◦ It is more effective and domain portable,
– because it can automatically generate system responses from dialogue examples.

Example-based Dialogue Modeling

• Dialogue Example Database

► Semantic-based indexing of dialogue examples
◦ A lexical-based example database needs many more examples.
◦ The SLU result is the most important index key.

► Automatic indexing from a dialogue corpus.

Input: User Utterance
  Utterance           그럼 SBS 드라마는 언제 하지? (Then, when do the SBS dramas start?)

Index Keys
  Dialog Act          Wh-question
  Main Action         Search_start_time
  Component Slots     [channel = SBS, genre = 드라마 (drama)]
  Discourse History   [1,0,1,0,0,0,0,0,0]

Output: System Concept
  System Action       Inform(date, start_time, program)

Example-based Dialogue Modeling

• Utterance Similarity

► When the retrieved dialogue examples are not unique
◦ We choose the best one using the utterance similarity measure.

► How to define the similarity measure for a dialogue system
◦ Lexico-Semantic Similarity
– Morpheme-level similarity between utterances in which slot values are replaced by their slot names, computed with normalized edit distance.
◦ Discourse History Similarity
– The cosine similarity between the slot-filling vectors
– An element has the value 1 if the slot has been filled up to the current dialogue state,
– and the value 0 otherwise.

Example-based Dialogue Modeling

• Example of Utterance Similarity

► Lexico-Semantic Representation

  User Utterance                   그럼 SBS 드라마는 언제 하지? (Then, when do the SBS dramas start?)
  Component Slots                  [channel = SBS, genre = 드라마 (drama)]
  Lexico-Semantic Representation   그럼 [channel] [genre] 는 언제 하 지 (Then, when do the [channel] [genre] start)

► Utterance Similarity Measure

  Current User Utterance           그럼 [channel] [genre] 는 언제 하 지
                                   Slot-Filling Vector: [1,0,1,0,0,0,0,0,0]
  Retrieved Example                [date] [genre] 는 몇 시에 하 니 (What time is the [date] [genre] on?)
                                   Slot-Filling Vector: [1,0,0,1,0,0,0,0,0]

  Lexico-semantic similarity compares the two utterances; discourse history similarity compares the two slot-filling vectors.
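The two similarity measures can be computed for the utterance pair above with a short Python sketch. Normalizing the edit distance by the length of the longer morpheme sequence is an assumption (the slides do not state the normalization), and the function names are hypothetical.

```python
import math

def edit_distance(a, b):
    """Levenshtein distance between two token sequences."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        cur = [i]
        for j, y in enumerate(b, 1):
            cur.append(min(prev[j] + 1,              # deletion
                           cur[j - 1] + 1,           # insertion
                           prev[j - 1] + (x != y)))  # substitution or match
        prev = cur
    return prev[-1]

def lexico_semantic_similarity(a, b):
    """1 - normalized edit distance (normalized by the longer sequence; an assumption)."""
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1.0 - edit_distance(a, b) / longest

def discourse_history_similarity(u, v):
    """Cosine similarity between two binary slot-filling vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm if norm else 0.0

current = ["그럼", "[channel]", "[genre]", "는", "언제", "하", "지"]
retrieved = ["[date]", "[genre]", "는", "몇", "시에", "하", "니"]
lex = lexico_semantic_similarity(current, retrieved)
hist = discourse_history_similarity([1, 0, 1, 0, 0, 0, 0, 0, 0],
                                    [1, 0, 0, 1, 0, 0, 0, 0, 0])
```

For this pair the two slot-filling vectors share one filled slot out of two each, so the cosine similarity is 0.5; the morpheme sequences differ by five edits out of seven tokens.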

Strategy of EBDM

[Figure: strategy of EBDM, using lexico-semantic similarity and discourse history similarity]

Advantages of EBDM

• Generic Dialogue Modeling
► By automatically constructing the dialogue example database from the dialogue corpus

• Easy development of an effective and practical dialogue system
► Needs only a small dialogue corpus.

• High Domain Portability
► Can be applied to various domains at low cost.
◦ Goal-oriented dialogue systems
– EPG, Navigation, Weather Information Center
◦ Chat agents

Case Study I: Example-based Multi-domain Dialogue System Development

POSSDM

• The Basic Idea

► Situation-based dialogue management
◦ State-free dialogue management based on the current situation of the dialogue
– The dialogue situation is the dialogue information state,
– including user intention, semantic frame, and discourse history.

► Object-oriented architecture
◦ Improves domain portability
– Separation of domain-independent and domain-dependent dialogue modules.

► Example-based dialogue modeling
◦ Generates the system responses according to the current situation using generic dialogue modeling

POSSDM

• Overall Architecture

Chat Expert

[Figure: processing flow of the chat expert; the chat dialog example database (DEDB) is built from the chat dialog corpus.]

USER: 어제 여친이랑 싸웠어. (I had a fight with my girlfriend yesterday.)

• Dialog Act Identification
► Dialog Act = statement-non-opinion

• Frame-Slot Extraction
► Main Action = Fight
► Date = 어제 (yesterday)

• Agent Spotter, Domain Spotter
► Agent = Chat
► Domain = Friend

• Discourse Inference with the Discourse History Stack
► previous user utterance
► previous dialog act and semantic frame
► previous scenario session

• Retrieved Dialog Examples (from the chat DEDB)
► Calculate utterance similarity

• Chat Meta-Rule (XML rule parser)
► When no example is retrieved, meta-rules are used.

• System Response

SYSTEM: 왜? 무슨 일 있어? (Why? What happened?)

Goal-oriented Dialog Expert

[Figure: processing flow of the EPG expert; the EPG dialog example database (DEDB) is built from the EPG dialog corpus, and the TV schedule database is filled from web contents.]

USER: TV 에서 지금 뭐 하지? (What is on TV now?)

• Dialog Act Identification
► Dialog Act = Wh-question

• Frame-Slot Extraction (EPG)
► Main Action = Search_Program
► Start_Time = 지금 (now)

• Agent Spotter, Domain Spotter
► Agent = Task
► Domain = EPG

• Discourse Inference with the Discourse History Stack
► previous user utterance
► previous dialog act and semantic frame
► previous slot-filling vector

• Retrieved Dialog Examples (from the EPG DEDB)
► Calculate utterance similarity

• EPG Expert: Database Manager with the TV Schedule Database

• EPG Meta-Rule (XML rule parser)
► When no example is retrieved, meta-rules are used.

• System Response

SYSTEM: 현재 “KBS” 에서는 “해피선데이”가, “MBC” 에서는 “일요일 일요일 밤에”가, “SBS” 에서는 “일요일이 좋다”가 방송 중 입니다. (Right now “Happy Sunday” is on KBS, “Sunday Sunday Night” is on MBC, and “Good Sunday” is on SBS.)

Experiment and Result

• Dialog Corpus & Experiment Setup

► Chat corpus: 2377 user utterances in 10 domains
► Goal-oriented dialog corpus: 513 user utterances in the EPG and Navigation domains
► Average number of words per utterance: 3.22
► Distribution of the domains in the dialog corpus

Experiment and Result

• Spotting Evaluation

► 10-fold cross validation using a Maximum Entropy classifier

  Feature Set                                Accuracy (%)
  Baseline (only linguistic features)        96.69
  Semantic features: + Dialog Act            97.39
  Semantic features: + Main Action           98.09

► For the baseline performance of the domain spotter, we used TF*IDF weighting alone.

  Feature Set                                Accuracy (%)
  Baseline (TF*IDF)                          72.88
  Linguistic Features                        77.47
  Semantic Features                          77.92
  Keyword Features: + 2-best keyword         78.87
  Keyword Features: + 2-best domain class    86.18

Experiment and Result

• Dialog Modeling Evaluation

► Human evaluation: 4 test volunteers (422 user utterances)
► EMR designates the average ratio of each example match type over the user utterance inputs.
► STR designates the average success turn rate, i.e. the rate of correct responses.
► An exact match means that the dialog examples were successfully retrieved when using all indexing keys.
► A partial match means that the dialog examples were retrieved when using only part of the indexing keys after the exact match query failed.

Experiment and Result

• Dialog Modeling Evaluation

► Example Matching Rate (EMR) and Success Turn Rate (STR)

  Example Match Type    EMR     STR
  Exact Match           0.60    0.69
  Partial Match         0.36    0.52
  No Example            0.04    0.06

► Goal-oriented dialog evaluation of UMDM

  Evaluation              Goal-Oriented Dialog
  Success Turn Rate       0.75
  Task Completion Rate    0.81

Case Study II: Statistical Dialog System Design

Air Travel Information System (ATIS)

• The ATIS dialog system helps the user find flight information in an efficient way.

► The efficiency here involves:
◦ The duration of the dialog
◦ The cost of external resources
◦ The effectiveness of the system output to the user

► Objective function

$$C = w_i \langle N_i \rangle + w_r \langle N_r \rangle + w_o \langle f(N_o) \rangle + w_s \langle F_s \rangle$$

◦ $\langle N_i \rangle$ = the expected length of the whole interaction in number of turns
◦ $\langle N_r \rangle$ = the expected number of tuples retrieved from the database during the session
◦ $f(N_o)$ = the data presentation cost as a function of $N_o$
◦ $F_s$ = an overall task success measure

$$f(N_o) = \begin{cases} 0 & \text{if } N_o \le N^* \\ k \cdot N_o & \text{if } N_o > N^* \end{cases} \qquad F_s = \begin{cases} 1 & \text{if no information was given} \\ 0 & \text{otherwise} \end{cases}$$

◦ $N^*$ is the reasonable value for data presentation (small for a voice-based system, higher for a display)

The Actions in ATIS

• Greeting: This is ATIS Travel service. How can I help you?

• Constraining:
  Where are you departing from? (Constrain ORIGIN)
  What is the airline? (Constrain AIRLINE)
  …
  What time are you leaving? (Constrain DEPARTURE_TIME)

• Releasing constraints:
  There are no flights with AA. Do you want to see flights with other airlines? (Relax AIRLINE)
  ...

• Database retrieval

• Output data: There are 58 flights: Flight 111 leaves …, Flight 222 …

• Closing: Thank you for using ATIS. Good Bye.

The State in ATIS

• State Space

► The state includes three templates
◦ A template is a set of keyword-value pairs.

► User Query: represents the accumulated information from the user
◦ ORIGIN: X
◦ DESTINATION: X
◦ AIRLINE: X

► History of system actions: records a partial history of actions
◦ GREETING
◦ CONSTRAINING
◦ RELAXATION
◦ …

► Data retrieved: the number of data tuples retrieved
◦ Ndata: Y

User Model

• Simulated User

► Assumption
◦ The user response depends only on the current system action and not on the state.

► The simulated user is parameterized in the following way
◦ 1) Response to Greeting
– P(n), n = 0, 1, 2, …: the number of attributes specified by the user in a single utterance.
– P(attribute) (e.g. ORIGIN, DESTINATION, AIRLINE, …)
– P(value|attribute) (e.g. P(Boston|ORIGIN), P(Delta|AIRLINE))
◦ 2) Response to Constraining Questions
– $P(k_R \mid k_G)$: the probability of the user specifying a value for attribute $k_R$ when asked for the value of attribute $k_G$, e.g. P(airline|departure_time)
– $P(N \mid k_G)$: the probability of providing N additional unsolicited attributes in the same response.
◦ 3) Response to a Relaxation Prompt
– $P(\text{yes} \mid k_G) = 1 - P(\text{no} \mid k_G)$: the probability of accepting (or rejecting) the proposed relaxation of attribute $k_G$.

► We can obtain these probability distributions from a dialog corpus.
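A simulated user of this kind can be sketched by sampling from the parameter tables. All probability values below are made-up placeholders (the slide says the real distributions are estimated from a dialog corpus), the function names are hypothetical, and only the responses to the greeting and to a relaxation prompt are modelled.

```python
import random

# Placeholder distributions; real values would be estimated from a dialog corpus.
P_N_GREETING = {0: 0.1, 1: 0.5, 2: 0.4}                  # P(n)
P_ATTRIBUTE = {"ORIGIN": 0.4, "DESTINATION": 0.4, "AIRLINE": 0.2}
P_VALUE = {                                              # P(value | attribute)
    "ORIGIN": {"Boston": 0.6, "Denver": 0.4},
    "DESTINATION": {"Boston": 0.3, "Denver": 0.7},
    "AIRLINE": {"Delta": 0.5, "AA": 0.5},
}
P_YES_RELAX = {"AIRLINE": 0.8}                           # P(yes | k_G)

def sample(dist, rng):
    """Draw one key of `dist` with probability proportional to its value."""
    keys = list(dist)
    return rng.choices(keys, weights=[dist[k] for k in keys])[0]

def respond_to_greeting(rng):
    """Specify n distinct attributes, each with a sampled value."""
    n = sample(P_N_GREETING, rng)
    response = {}
    while len(response) < n:
        attribute = sample(P_ATTRIBUTE, rng)
        response[attribute] = sample(P_VALUE[attribute], rng)
    return response

def respond_to_relaxation(attribute, rng):
    """Accept the relaxation with P(yes | k_G), otherwise reject it."""
    return "yes" if rng.random() < P_YES_RELAX[attribute] else "no"
```

Because the response depends only on the system action, the simulator needs no access to the dialog state, which is what makes it cheap to run for the many simulated dialogs that reinforcement learning requires.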

Incrementally More Complex Strategies

1. Closing
   Cost1 = 1405

2. Greeting → Retrieval → Output → Closing
   Cost2 = 469.24

3. Greeting → Constrain → Retrieval → Output → Closing, with a return to Constrain when there is too much data
   Cost3 = 231.95

4. Greeting → Constrain → Retrieval → Output → Closing, with a return to Constrain when there is too much data and a Release when there is no data
   Cost4 = 123.93

The Learned Optimal Strategy

[Figure: Greeting → Constrain → (enough constraints) → Retrieval → Output → Closing. From Retrieval, "No data" leads to Release and "Too much data" leads back to Constrain.]

* The same strategy was independently handcrafted at many DARPA ATIS sites: BBN, CMU, AT&T…

Example of Dialog

[Figure: example dialogs with the untrained system and with the trained system]

Reading Lists

• R. S. Sutton and A. G. Barto. 1998. Reinforcement Learning: An Introduction. MIT Press.

• S. Young. 2006. Reinforcement Learning for Spoken Dialog Systems: Using POMDPs for Dialog Management. SLT.

• L. P. Kaelbling, M. L. Littman, and A. W. Moore. 1996. Reinforcement Learning: A Survey. Journal of Artificial Intelligence Research 4:237-285.

• E. Levin, R. Pieraccini, and W. Eckert. January 2000. A Stochastic Model of Human-Machine Interaction for Learning Dialogue Strategies. IEEE Transactions on Speech and Audio Processing 8(1):11-23.

• Cheongjae Lee, Sangkeun Jung, Jihyun Eun, Minwoo Jeong, and Gary Geunbae Lee. 2005. Example and situation based dialog management for spoken dialog system. Proceedings of the IEEE Automatic Speech Recognition and Understanding Workshop.

• Cheongjae Lee, Sangkeun Jung, Jihyun Eun, Minwoo Jeong, and Gary Geunbae Lee. 2006. A Situation-based Dialogue Management using Dialogue Examples. Proceedings of the 2006 IEEE International Conference on Acoustics, Speech and Signal Processing.

• Cheongjae Lee, Sangkeun Jung, Minwoo Jeong, and Gary Geunbae Lee. 2006. Chat and Goal-Oriented Dialog Together: A Unified Example-based Architecture for Multi-Domain Dialog Management. Proceedings of the IEEE/ACL 2006 Workshop on Spoken Language Technology.