Current NAND Flash Architecture

advertisement

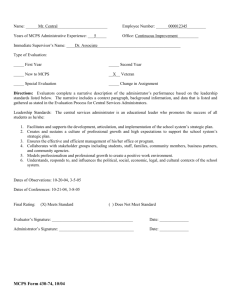

Improving System Performance and Longevity with a New NAND Flash Architecture Jin-Ki Kim Vice President, Research & Development MOSAID Technologies Inc. Santa Clara, CA USA August 2009 1 Agenda Context Core Architecture Innovation High Performance Interface Summary Santa Clara, CA USA August 2009 2 Computing Storage Hierarchy CPU Cycles CPU Cycles 1 1 Register Register 10 10 Cache 100 DRAM (10.6 ~ 12.8GB/s per Channel) DRAM (5.3 ~ 6.4GB/s per Channel) 100x > Cache 100 Memory BW Gap 105 ~ 106 10x SCM (500MB/s ~ 2GB/s) 10x 107 ~ 108 HDD (20 ~ 70MB/s) Historical Storage Hierarchy Santa Clara, CA USA August 2009 107 ~ 108 HDD (20 ~ 70MB/s) New Storage Hierarchy * SCM: Storage Class Memory R.Freitas and W.Wilcke, “Storage-class memory: The next storage system technology”, IBM J. RES. & DEV. VOL. 52 NO. 4/5 JULY/SEPTEMBER 2008. 3 NAND Flash Progression 32Gb MLC Density The single-minded focus on bit density improvements has brought Flash technology to current multi-Gb NAND devices 16Gb MLC 8Gb MLC 4Gb MLC 2Gb SLC 1Gb SLC 120nm Santa Clara, CA USA August 2009 90nm 73nm 65nm Process Technology 51nm 35nm 4 NAND Architecture Trend Primary focus is density for cost and market adoption A: # of Bit / Cell 1 2 4 B: # of Bitline / Page Buffer 1 2 4 C: # of Cell / NAND String 8 16 32 64 512B 2KB 4KB 8KB D: Page Size 16KB Block Size = (A * B * C) * D Santa Clara, CA USA August 2009 Cell Array Mismatch Factor (CAMF) 5 NAND Block Size Trend Cell Array Mismatch Factor (CAMF) is a key parameter to degrade write efficiency (i.e. increase write amplification factor) CAMF = 128 (SLC) & 256(MLC) • 64 cells/NAND • 8KB Page Buf. • 2 BLs/PB 512KB 8Gb/16Gb/32Gb MLC CAMF = 64 (SLC) & 128(MLC) 256KB 2Gb/4Gb/8Gb MLC CAMF = 32 128KB 4Gb/8Gb/16Gb CAMF = 16 CAMF = 8 16KB 8KB 4KB 8Mb/16Mb 90 Santa Clara, CA USA August 2009 1Gb/2Gb/4Gb 91 92 93 128Mb/256Mb/ 512Mb 32Mb/64Mb 94 • 8 cells/NAND • 512B Page Buf. 95 96 97 98 99 00 01 • 16 cells/NAND • 16 cells/NAND • 512B Page Buf. • 512B Page Buf. • 2 BLs/PB 02 03 04 05 06 • 32 cells/NAND • 2KB Page Buf. • 2 BLs/PB 07 08 09 • 32 cells/NAND • 4KB Page Buf. • 2 BLs/PB 10 6 NAND Challenges in Computing Apps Endurance: 100K 10K 5K 3K 1K Retention: 10 years 5 years 2.5 years Current NAND architecture trend accelerates reliability degradation Computing Multi Media Applications A: # of Bit / Cell 1 2 4 B: # of Bitline / Page Buffer 1 2 4 C: # of Cell / NAND String 8 16 32 64 512B 2KB 4KB 8KB D: Page Size 16KB Block Size = (A * B * C) * D Santa Clara, CA USA August 2009 Cell Array Mismatch Factor 7 Flash System Lifetime Metric System lifetime heavily relies on NAND architecture and features, primarily cell array mismatch factor Write Efficiency = Total Data Written by Host Total Data Written to NAND Total Host Writes = NAND Endurance Cycle * System Capacity * Write Efficiency * Wear Leveling Efficiency Santa Clara, CA USA August 2009 System Lifetime = Total Host Writes Host Writes per Day 8 NAND Architecture Innovation Current NAND Flash Architecture B/L0 Plane 1 Plane 2 B/L(j*8-1) B/L1 B/L(j*8) B/L(j+k)*8-2 B/L(j+k)*8-1 SSL W/L31 W/L30 W/L29 W/L28 W/L27 W/L26 W/L2 W/L1 Page Buffer: 512B 2K 4KB 8KB W/L0 GSL CSL NAND Cell String Page Block Santa Clara, CA USA August 2009 9 NAND Architecture Innovation FlexPlane with 2-tier Row Decoder Scheme Smaller & Flexible Page Size (2KB/4KB/6KB/8KB) Smaller & Flexible Erase Size Lower power consumption due to segmented pp-well 2KB Page Buffer Santa Clara, CA USA August 2009 2KB Page Buffer 2KB Page Buffer FlexPlane Operations NAND Cell Array on Sub PP-Well Segment Row Decoder Global Row Decoder NAND Cell Array on Sub PP-Well Segment Row Decoder NAND Cell Array on Sub PP-Well Segment Row Decoder NAND Cell Array on Sub PP-Well Segment Row Decoder FlexPlane 2KB Page Buffer 10 NAND Architecture Innovation 2-Dimensional Page Buffer Scheme Upper Plane 2-Dimensional Page Buffer (Shared Page Buffer) 0.5x Bitline Length compared to conventional NAND architecture Santa Clara, CA USA August 2009 Lower Plane Micron 32Gb NAND Flash in 34nm, 2009 ISSCC 11 NAND Core Innovation Page-pair & Partial Block Erase Minimize cell array mismatch factor Improve write efficiency Block Erase Partial Block Erase B/L Page-pair Erase B/L B/L SSL SSL SSL W/L31 W/L31 W/L31 W/L30 W/L30 W/L30 W/L29 W/L29 W/L29 W/L28 W/L28 W/L28 W/L27 W/L27 W/L27 W/L26 W/L26 W/L26 W/L2 W/L2 W/L2 W/L1 W/L1 W/L1 W/L0 W/L0 W/L0 GSL GSL GSL CSL CSL CSL Santa Clara, CA USA August 2009 • MOSAID’s Erase Scheme 12 NAND Core Innovation Lower Operating Voltage 2.7 ~ 3.3V 2.7 ~ 3.3V H.V. Gen 3.3V H.V. Gen H.V. Gen 1.8V Core and Peri. Core and Peri. Core and Peri. 1.8V I/O I/O I/O • MOSAID’s Low Stress Program Scheme Santa Clara, CA USA August 2009 13 NAND Core Innovation Low Stress Program Precharge NAND string to voltage higher than Vcc prior to wordline boost and bitline data load Reduces program stress (Vpgm & Vpass stress) during programming Eliminates Vcc dependency to achieve low Vcc operation Minimizes background data dependency Eliminates W/L to SSL Coupling Addresses Gate Induced Drain Leakage (GIDL) Santa Clara, CA USA August 2009 14 HyperLink (HLNAND™) Flash Monolithic HLNAND • Device interface independent of memory technology and density • Packet protocol with low pin count • Configurable data width link architecture • Flexible modular command structure • Simultaneous Read and Write • EDC for command and ECC for registers • Daisy-chain cascade with point-to-point connection of up to virtually Unlimited number of devices New Device Architecture N Ar e w ch Lin ite ctu k re e rit W e w m Ne che S • Fully independent bank operations • Multiple, simultaneous data transactions • Data throughput independent of core operations • Device architecture for performance and longevity FlexPlane Architecture Two-dimensional Page Buffer Two-tier Row Decoder • • • • • • NAND flash cell technology Page/multipage erase function Partial block erase function Low stress program scheme Random page program in SLC Low Vcc operations MCP HLNAND Santa Clara, CA USA August 2009 15 HyperLink Interface Point-to-point ring topology • • • • • Host Interface Santa Clara, CA USA August 2009 Synchronous DDR signaling with source termination only Up to 255 devices in a ring without speed degradation Dynamically configurable bus width from 1-8 bits HL1 parallel clock distribution to 266MB/s HL2 source synchronous clocking to 800MB/s, backward compatible to HL1 HLNAND HLNAND HLNAND HLNAND Controller 16 32Gb SLC/64Gb MLC HLNAND MCP HL1 (DDR-200/266) using NAND in MCP - Conform SLC/MLC HLNAND spec HLNAND MCP 8Gb SLC/16Gb MCL 8Gb SLC NAND/ 16Gb MLC NAND x8b HL1 Interface HLNAND Bridge Chip developed by MOSAID and implemented in a MCP x8b HLNAND Bridge Chip x8b 8Gb SLC NAND/ 16Gb MLC NAND Santa Clara, CA USA August 2009 8Gb SLC NAND/ 16Gb MLC NAND x8b 8Gb SLC NAND/ 16Gb MLC NAND 17 32Gb SLC/64Gb MLC HLNAND MCP MCP Package – 12 x 18 100-Ball BGA Cross sectional view (A-A) Santa Clara, CA USA August 2009 Cross sectional view (B-B) 18 32Gb SLC/64Gb MLC HLNAND MCP Performance DDR Output Timing (Oscilloscope Signals) – will be inserted Santa Clara, CA USA August 2009 DDR-300 Operations 151MHz (tCK = 6.6ns) @Vcc = 1.8V 19 HLNAND Flash Module (HLDIMM) Use cost effective DDR2 SDRAM 200-pin SODIMM form factor and sockets 8 x 64Gb or 8 x 128Gb HLNAND MCP (4 on each side) Santa Clara, CA USA August 2009 20 HLDIMM Port Configuration 2 x HyperLink HL1 interfaces with 533MB/s read + 533MB/s write = 1066MB/s aggregate throughput front back Pin 1 Pin 2 HL1 MCP CH0 in CH0 out HL1 MCP HL1 MCP HL1 MCP CH0 out CH0 in to controller next HLDIMM HL1 MCP CH1 in CH1 out Santa Clara, CA USA August 2009 HL1 MCP HL1 MCP Pin 199 HL1 MCP HLDIMM CH1 out CH1 in Pin 200 21 System Configurations with HLDIMM 1066MB/s Aggregate Throughput CH0 in CH0 out HL1 MCPs HL1 MCPs HL1 MCPs HL1 MCPs HL1 MCPs HL1 MCPs HL1 MCPs HL1 MCPs CH0 in CH0 out HL1 MCPs HL1 MCPs HL1 MCPs HL1 MCPs controller CH1 in CH1 out HL1 MCPs HL1 MCPs HLDIMM HL1 MCPs HL1 MCPs HLDIMM HL1 MCPs HL1 MCPs HLDIMM HL1 MCPs HL1 MCPs CH1 in CH1 out HLDIMM HL1 MCPs HL1 MCPs HL1 MCPs HL1 MCPs HLDIMM HLDIMM HL1 MCPs HL1 MCPs HLDIMM Loop-around empty socket 2133MB/s Aggregate Throughput CH0 in HL1 MCPs HL1 MCPs CH2 in CH0 out HL1 MCPs HL1 MCPs HL1 MCPs HL1 MCPs HL1 MCPs HL1 MCPs HL1 MCPs HL1 MCPs HL1 MCPs HL1 MCPs HL1 MCPs HL1 MCPs HL1 MCPs HL1 MCPs HL1 MCPs HLDIMM Santa Clara, CA USA August 2009 HL1 MCPs HL1 MCPs HL1 MCPs CH3 out CH0 in HL1 MCPs HL1 MCPs CH3 in CH1 out HL1 MCPs HL1 MCPs CH2 out CH1 in HL1 MCPs HL1 MCPs HLDIMM CH0 out HL1 MCPs HLDIMM HL1 MCPs HLDIMM HL1 MCPs HLDIMM HL1 MCPs HLDIMM 22 Summary The driving forces in future NVM are the memory architecture & feature innovation that will support emerging system architectures and applications Using HLNAND Flash, Storage Class Memory is viable today using proven HLNAND flash technology Santa Clara, CA USA August 2009 23 Resource for HLNAND Flash www.HLNAND.com Available • • • • • • • 64Gb MLC MCP sample 64GB HLDIMM sample Architectural Specification Datasheets White papers Technical papers Verilog Behavioral model Santa Clara, CA USA August 2009 24