CF-1 - SEAS


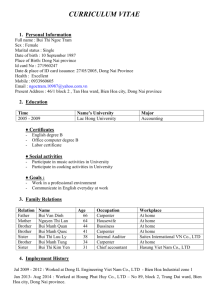

The BellKor 2008 Solution to the Netflix Prize
by Leenarat Leelapanyalert

Netflix Dataset
• Over 100 million movie ratings with date-stamp (100,480,507 ratings)
• M = 17,770 movies
• N = 480,189 customers
• 1 (star) = no interest, 5 (stars) = strong interest
• Dec 31, 1999 – Dec 31, 2005
• The user-item matrix has N × M = 8,532,958,530 elements; about 98.8% of the values are missing

Netflix Competition
• About 4.2 million of the 100 million ratings are held out
  – Training set (contains the Probe set)
  – Qualifying set (Quiz set & Test set)
• Scoring
  – Show RMSE achieved on the Quiz set
  – Best RMSE on the Test set → THE WINNER!!

Outline
• Necessary index letters
• Baseline predictors → adjust deviations of each user (rater, customer) and item (movie)
  – with temporal effects
• Latent factor models → compare between items and users by SVD
  – with temporal effects
• Neighborhood models → compute the relationship between items (or users)
  – with temporal effects
• Integrated models → combine latent factor models and neighborhood models together
• Extra: Other methods → new ideas
  – Shrinking towards recent actions
  – Blending multiple solutions

Index Letters
• $u, v$ → users, raters, or customers
• $i, j$ → movies, or items
• $r_{ui}$ → the score given by user $u$ to movie $i$
• $\hat{r}_{ui}$ → the predicted value of $r_{ui}$
• $t_{ui}$ → the time of rating $r_{ui}$
• $K$ → the training set, i.e. the pairs $(u, i)$ for which $r_{ui}$ is known
• $R(u)$ → all the items rated by user $u$
• $R(i)$ → the set of users who rated item $i$
• $N(u)$ → all items for which $u$ showed an implicit preference, which can be used to estimate $u$'s scores

Baseline Predictors ($b_{ui}$)

$$b_{ui} = \mu + b_u + b_i$$

• $\mu$ → the overall average rating
• $b_u$ → deviation of user $u$
• $b_i$ → deviation of item $i$
• Example: $\mu = 3.7$, Simha ($b_u$) $= -0.3$, Titanic ($b_i$) $= 0.5$, so $b_{ui} = 3.7 - 0.3 + 0.5 = 3.9$ stars

Estimate Parameter ($b_u$, $b_i$) – Formula

$$b_{ui} = \mu + b_u + b_i$$

$$b_i = \frac{\sum_{u \in R(i)} (r_{ui} - \mu)}{\lambda_1 + |R(i)|}$$

$$b_u = \frac{\sum_{i \in R(u)} (r_{ui} - \mu - b_i)}{\lambda_2 + |R(u)|}$$

The regularization parameters ($\lambda_1$, $\lambda_2$) are determined by validation on the Probe set.
In this case: $\lambda_1 = 25$, $\lambda_2 = 10$

Estimate Parameter ($b_u$, $b_i$) – The Least Squares Problem

$$b_{ui} = \mu + b_u + b_i$$

$$\min_{b_*} \sum_{(u,i) \in K} (r_{ui} - \mu - b_u - b_i)^2 + \lambda_1 \Big( \sum_u b_u^2 + \sum_i b_i^2 \Big)$$

• First term: to fit the given ratings
• Second term: to avoid overfitting by penalizing the magnitudes of the parameters

Time Change VS Baseline Predictors
• An item's popularity may change over time
• Users change their baseline rating over time

$$b_{ui} = \mu + b_u + b_i \quad\longrightarrow\quad b_{ui} = \mu + b_u(t_{ui}) + b_i(t_{ui})$$

$b_i(t_{ui})$
• We do not expect movie likeability to fluctuate on a daily basis
• Time periods → Bins
• 30 bins

$$b_i(t) = b_i + b_{i,\mathrm{Bin}(t)}$$

$b_u(t_{ui})$
• Unlike movies, user effects can change on a daily basis
• Time deviation:

$$\mathrm{dev}_u(t) = \mathrm{sign}(t - t_u) \cdot |t - t_u|^{\beta}$$

• $t_u$ → the mean date of rating by user $u$
• $t$ → the date that user $u$ rated the movie
• $\beta = 0.4$ by validation on the Probe set

$$b_u^{(1)}(t) = b_u + \alpha_u \cdot \mathrm{dev}_u(t)$$

• Suits gradual drifts well

$b_u(t_{ui})$
• How about sudden drifts?
  – We found that multiple ratings a user gives in a single day tend to concentrate around a single value

$$b_u^{(3)}(t) = b_u + \alpha_u \cdot \mathrm{dev}_u(t) + b_{u,t}$$

• A user rates on 40 different days on average
• Thus, $b_{u,t}$ requires about 40 parameters per user

Baseline Predictors

$$b_{ui}(t) = \mu + \underbrace{b_u + \alpha_u \cdot \mathrm{dev}_u(t_{ui}) + b_{u,t_{ui}}}_{\text{user bias}} + \underbrace{b_i + b_{i,\mathrm{Bin}(t_{ui})}}_{\text{movie bias}}$$

• Movie bias is not completely user-independent

$$b_{ui}(t) = \mu + b_u + \alpha_u \cdot \mathrm{dev}_u(t_{ui}) + b_{u,t_{ui}} + \big(b_i + b_{i,\mathrm{Bin}(t_{ui})}\big) \cdot c_u(t_{ui})$$

• $c_u(t)$ → time-dependent scaling feature, $c_u(t) = c_u + c_{ut}$
• $c_u$ → stable part
• $c_{ut}$ → day-specific variable
• RMSE = 0.9555

Frequencies (additional)
• The number of ratings a user gave on a specific day is SIGNIFICANT

$$f_{ui} = \lfloor \log_a F_{ui} \rfloor$$

• $F_{ui}$ → the overall number of ratings that user $u$ gave on day $t_{ui}$

$$b_{ui}(t) = \mu + b_u + \alpha_u \cdot \mathrm{dev}_u(t_{ui}) + b_{u,t_{ui}} + \big(b_i + b_{i,\mathrm{Bin}(t_{ui})}\big) \cdot c_u(t_{ui}) + b_{i,f_{ui}}$$

• $b_{i,f}$ → the bias specific to item $i$ at log-frequency $f$
• RMSE 0.9555 → 0.9278

Why Frequencies Work?
• Bad when used with user-movie interaction terms
• No effect when used with user-related parameters
• Rating a lot in a bulk → usually not close to the actual watching day
  – Positive approach: people who liked a movie remember it and include it in the bulk
  – Negative approach: people who disliked it tend to forget it and leave it out
• High frequencies (or bulk ratings) do not represent much change in people's taste, but mostly a biased selection of movies

Predicting Future Days
• The day-specific parameters should be set to their default values
  – $c_u(t_{ui}) = c_u$
  – $b_{u,t} = 0$
• The transient temporal model doesn't attempt to capture future changes.
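As a rough illustration of the baseline slides, the sketch below computes the decoupled estimates of $b_i$ and $b_u$ with $\lambda_1 = 25$, $\lambda_2 = 10$, and the time deviation $\mathrm{dev}_u(t)$ with $\beta = 0.4$. The function names, the `(user, item, rating)` tuple layout, the use of plain day numbers for dates, and the toy data are all assumptions made for this example; it is not the BellKor implementation.

```python
import math
from collections import defaultdict


def fit_baselines(ratings, lam1=25.0, lam2=10.0):
    """Decoupled baseline estimates from (user, item, rating) tuples.

    Returns (mu, b_u, b_i), where b_u and b_i are dicts.
    """
    mu = sum(r for _, _, r in ratings) / len(ratings)

    # b_i = sum_{u in R(i)} (r_ui - mu) / (lam1 + |R(i)|)
    item_sum, item_cnt = defaultdict(float), defaultdict(int)
    for _, i, r in ratings:
        item_sum[i] += r - mu
        item_cnt[i] += 1
    b_i = {i: item_sum[i] / (lam1 + item_cnt[i]) for i in item_sum}

    # b_u = sum_{i in R(u)} (r_ui - mu - b_i) / (lam2 + |R(u)|)
    user_sum, user_cnt = defaultdict(float), defaultdict(int)
    for u, i, r in ratings:
        user_sum[u] += r - mu - b_i[i]
        user_cnt[u] += 1
    b_u = {u: user_sum[u] / (lam2 + user_cnt[u]) for u in user_sum}
    return mu, b_u, b_i


def dev_u(t, t_mean, beta=0.4):
    """sign(t - t_u) * |t - t_u|**beta, with dates given as day numbers."""
    diff = t - t_mean
    return math.copysign(abs(diff) ** beta, diff)


def user_bias_at(b_u, alpha_u, t, t_mean, b_ut=0.0):
    """b_u + alpha_u * dev_u(t) + b_{u,t}.

    b_ut defaults to 0, the value used for days with no fitted parameter
    (for example future days).
    """
    return b_u + alpha_u * dev_u(t, t_mean) + b_ut


# Hypothetical toy data, only to show the call pattern.
toy = [("simha", "titanic", 5), ("simha", "alien", 3), ("ateeq", "titanic", 4)]
mu, b_u, b_i = fit_baselines(toy)
print(mu, b_i["titanic"], b_u["simha"], dev_u(32, 0))
```

With only a handful of ratings the shrinkage terms dominate, so the fitted deviations stay close to zero; that is the intended effect of $\lambda_1$ and $\lambda_2$ on sparsely rated items and users.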
Latent Factor Models
• To transform both items and users to the same latent factor space
  – Obvious dimensions
    • Comedy VS Drama
    • Amount of action
    • Orientation to children
  – Less well defined dimensions
    • Depth of character development
• Tool → SVD

Singular Value Decomposition (SVD)
• Factoring matrices into a series of linear approximations that expose the underlying structure of the matrix

Singular Value Decomposition (SVD)
Predicted Score = User Baseline Rating * Movie Average Score

Ratings when every user scores all movies alike:

| User | A | B | C | User baseline rating |
|-------|---|---|---|----------------------|
| Simha | 4 | 4 | 4 | 4 |
| Ateeq | 5 | 5 | 5 | 5 |
| Smith | 3 | 3 | 3 | 3 |
| Greg | 4 | 4 | 4 | 4 |
| Mcq | 4 | 4 | 4 | 4 |
| Ramin | 4 | 4 | 4 | 4 |
| Xiao | 4 | 4 | 4 | 4 |
| Wu | 3 | 3 | 3 | 3 |
| Riz | 5 | 5 | 5 | 5 |

Movie average score (A, B, C) = (1, 1, 1)

Singular Value Decomposition (SVD)
Actual ratings:

| User | A | B | C |
|-------|---|---|---|
| Simha | 4 | 4 | 5 |
| Ateeq | 4 | 5 | 5 |
| Smith | 3 | 3 | 2 |
| Greg | 4 | 5 | 4 |
| Mcq | 4 | 4 | 4 |
| Ramin | 3 | 5 | 4 |
| Xiao | 4 | 4 | 3 |
| Wu | 2 | 4 | 4 |
| Riz | 5 | 5 | 5 |

Singular Value Decomposition (SVD)
Predicted Score = User Baseline Rating * Movie Average Score
First approximation of the actual ratings:

| User | A | B | C | User baseline rating |
|-------|------|------|------|------|
| Simha | 3.95 | 4.64 | 4.34 | 4.34 |
| Ateeq | 4.27 | 5.02 | 4.69 | 4.69 |
| Smith | 2.42 | 2.85 | 2.66 | 2.66 |
| Greg | 3.97 | 4.67 | 4.36 | 4.36 |
| Mcq | 3.64 | 4.28 | 4.00 | 4.00 |
| Ramin | 3.69 | 4.33 | 4.05 | 4.05 |
| Xiao | 3.33 | 3.92 | 3.66 | 3.66 |
| Wu | 3.08 | 3.63 | 3.39 | 3.39 |
| Riz | 4.55 | 5.35 | 5.00 | 5.00 |

Movie average score (A, B, C) = (0.91, 1.07, 1.00)

Singular Value Decomposition (SVD)
Residual (actual rating minus first approximation), factored the same way:

| User | A | B | C | User factor 2 |
|-------|-------|-------|-------|-------|
| Simha | 0.05 | -0.64 | 0.66 | -0.18 |
| Ateeq | -0.28 | -0.02 | 0.31 | -0.38 |
| Smith | 0.58 | 0.15 | -0.66 | 0.80 |
| Greg | 0.03 | 0.33 | -0.36 | 0.15 |
| Mcq | 0.36 | -0.28 | 0.00 | 0.35 |
| Ramin | -0.69 | 0.67 | -0.05 | -0.67 |
| Xiao | 0.67 | 0.08 | -0.66 | 0.89 |
| Wu | -1.08 | 0.37 | 0.61 | -1.29 |
| Riz | 0.45 | -0.35 | 0.00 | 0.44 |

Movie factor 2 (A, B, C) = (0.82, -0.20, -0.53)

Singular Value Decomposition (SVD)
Full decomposition: actual ratings = user factors * movie factors

| User | Factor 1 | Factor 2 | Factor 3 |
|-------|------|-------|-------|
| Simha | 4.34 | -0.18 | -0.90 |
| Ateeq | 4.69 | -0.38 | -0.15 |
| Smith | 2.66 | 0.80 | 0.40 |
| Greg | 4.36 | 0.15 | 0.47 |
| Mcq | 4.00 | 0.35 | -0.29 |
| Ramin | 4.05 | -0.67 | 0.68 |
| Xiao | 3.66 | 0.89 | 0.33 |
| Wu | 3.39 | -1.29 | 0.14 |
| Riz | 5.00 | 0.44 | -0.36 |

| Movie factor | A | B | C |
|---|-------|-------|-------|
| 1 | 0.91 | 1.07 | 1.00 |
| 2 | 0.82 | -0.20 | -0.53 |
| 3 | -0.21 | 0.76 | -0.62 |

Latent Factor Models

$$\hat{r}_{ui} = b_{ui} + p_u^T q_i$$

• $p_u$ → user-factors vector
• $q_i$ → item-factors vector
• Add implicit feedback
  – Asymmetric-SVD

$$\hat{r}_{ui} = b_{ui} + q_i^T \Big( |R(u)|^{-1/2} \sum_{j \in R(u)} (r_{uj} - b_{uj})\, x_j + |N(u)|^{-1/2} \sum_{j \in N(u)} y_j \Big)$$

  – SVD++

$$\hat{r}_{ui} = b_{ui} + q_i^T \Big( p_u + |N(u)|^{-1/2} \sum_{j \in N(u)} y_j \Big)$$

• 60 factors: RMSE = 0.8966

Temporal Effects
• Time
  – Movie biases – go in and out of popularity over time → $b_i$
  – User biases – users change their baseline ratings over time → $b_u$
  – User preferences – genre, perception of actors and directors, household → $p_u$

$$\hat{r}_{ui} = b_{ui}(t) + q_i^T \Big( p_u(t) + |N(u)|^{-1/2} \sum_{j \in N(u)} y_j \Big)$$

Temporal Effects

$$b_u^{(1)}(t) = b_u + \alpha_u \cdot \mathrm{dev}_u(t)$$

$$b_u^{(3)}(t) = b_u + \alpha_u \cdot \mathrm{dev}_u(t) + b_{ut}$$

• The same way we treat the user bias, we can also treat the user preferences

$$p_u(t)^T = \big( p_{u1}(t), p_{u2}(t), \ldots, p_{uf}(t) \big)$$

$$p_{uk}^{(1)}(t) = p_{uk} + \alpha_{uk} \cdot \mathrm{dev}_u(t), \qquad k = 1, 2, \ldots, f$$

$$p_{uk}^{(3)}(t) = p_{uk} + \alpha_{uk} \cdot \mathrm{dev}_u(t) + p_{uk,t}, \qquad k = 1, 2, \ldots, f$$

RMSE

$$\hat{r}_{ui} = b_{ui}(t) + q_i^T \Big( p_u(t) + |N(u)|^{-1/2} \sum_{j \in N(u)} y_j \Big)$$

• With $p_{uk}^{(1)}(t)$: f = 500, RMSE = 0.8815
• With $p_{uk}^{(3)}(t)$: f = 500, RMSE = 0.8841 !!
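To make the factor-model formulas above concrete, here is a minimal sketch of the SVD++ prediction rule $\hat{r}_{ui} = b_{ui} + q_i^T ( p_u + |N(u)|^{-1/2} \sum_{j \in N(u)} y_j )$ together with the linear drift $p_{uk}^{(1)}(t) = p_{uk} + \alpha_{uk} \cdot \mathrm{dev}_u(t)$. It covers prediction only, not training; the function names, the dictionary holding the $y_j$ vectors, and all numbers are hypothetical values chosen for illustration.

```python
import math


def drifted_user_factors(p_u, alpha_u, dev):
    """p_uk(t) = p_uk + alpha_uk * dev_u(t) for every factor k."""
    return [p + a * dev for p, a in zip(p_u, alpha_u)]


def svdpp_predict(b_ui, q_i, p_u, y, implicit_items):
    """b_ui + q_i^T (p_u + |N(u)|^(-1/2) * sum_{j in N(u)} y_j).

    y maps an item id to its implicit-feedback factor vector y_j;
    implicit_items plays the role of N(u).
    """
    profile = list(p_u)
    if implicit_items:
        norm = 1.0 / math.sqrt(len(implicit_items))
        for j in implicit_items:
            for k, y_jk in enumerate(y[j]):
                profile[k] += norm * y_jk
    return b_ui + sum(q * p for q, p in zip(q_i, profile))


# Hypothetical two-factor example.
y = {"a": [0.1, 0.1], "b": [0.3, -0.1], "c": [0.0, 0.2], "d": [-0.2, 0.0]}
p_t = drifted_user_factors([0.2, -0.4], [0.0, 0.0], dev=4.0)
print(svdpp_predict(3.9, [1.0, 0.5], p_t, y, ["a", "b", "c", "d"]))
```

Passing the output of `drifted_user_factors` as `p_u` gives the time-aware variant; passing the static vector gives plain SVD++.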
• Most accurate factor model (add frequencies)

$$\hat{r}_{ui} = b_{ui}(t) + \big( q_i^T + q_{i,f_{ui}}^T \big) \Big( p_u(t) + |N(u)|^{-1/2} \sum_{j \in N(u)} y_j \Big)$$

  – f = 500, RMSE = 0.8784
  – f = 2000, RMSE = 0.8762

Neighborhood Models
• To compute the relationship between items
• Evaluate the score of a user for an item based on ratings of similar items by the same user

The Similarity Measure
• The Pearson correlation coefficient, $\rho_{ij}$

$$s_{ij} \overset{\text{def}}{=} \frac{n_{ij}}{n_{ij} + \lambda_2}\, \rho_{ij}, \qquad \lambda_2 = 100$$

• $s_{ij}$ – similarity
• $n_{ij}$ – the number of users that rated both $i$ and $j$
• A weighted average of the ratings of neighborhood items:

$$\hat{r}_{ui} = b_{ui} + \frac{\sum_{j \in S^k(i;u)} s_{ij} (r_{uj} - b_{uj})}{\sum_{j \in S^k(i;u)} s_{ij}}$$

• $S^k(i;u)$ – the set of $k$ items rated by $u$ which are most similar to $i$

Problem With The Model

$$\hat{r}_{ui} = b_{ui} + \frac{\sum_{j \in S^k(i;u)} s_{ij} (r_{uj} - b_{uj})}{\sum_{j \in S^k(i;u)} s_{ij}}$$

• Isolates the relations between 2 items
• Fully relies on the neighbors, even if they are absent

Improving The Model

$$\hat{r}_{ui} = b_{ui} + \sum_{j \in R(u)} (r_{uj} - b_{uj})\, w_{ij}$$

• The $w_{ij}$'s are not user specific
• Sum over all items rated by $u$

$$\hat{r}_{ui} = b_{ui} + \sum_{j \in R(u)} (r_{uj} - b_{uj})\, w_{ij} + \sum_{j \in N(u)} c_{ij}$$

• Not only what he rated, but also what he did not rate
• $c_{ij}$ is expected to be high if $j$ is predictive of $i$

Improving The Model
• The current model somewhat overemphasizes the dichotomy between heavy raters and those that rarely rate
• Moderate this behavior by normalization

$$\hat{r}_{ui} = b_{ui} + |R(u)|^{-\alpha} \sum_{j \in R(u)} (r_{uj} - b_{uj})\, w_{ij} + |N(u)|^{-\alpha} \sum_{j \in N(u)} c_{ij}$$

• $\alpha = 0$ → non-normalized rule – encourages greater deviations
• $\alpha = 1$ → fully normalized rule – eliminates the effect of the number of ratings
• In this case, $\alpha = 0.5$, i.e. the exponent is $-1/2$
• RMSE = 0.9002

Improving The Model
• Reduce the model by pruning parameters

$$\hat{r}_{ui} = b_{ui} + |R^k(i;u)|^{-1/2} \sum_{j \in R^k(i;u)} (r_{uj} - b_{uj})\, w_{ij} + |N^k(i;u)|^{-1/2} \sum_{j \in N^k(i;u)} c_{ij}$$

• $S^k(i)$ – the set of $k$ items most similar to $i$
• $R^k(i;u) \overset{\text{def}}{=} R(u) \cap S^k(i)$
• $N^k(i;u) \overset{\text{def}}{=} N(u) \cap S^k(i)$
• k = 17,770 → RMSE = 0.8906
• k = 2000 → RMSE = 0.9067

Integrated Models
• Baseline predictors + Factor models + Neighborhood models

$$\hat{r}_{ui} = \mu + b_u^{(1)}(t) + b_i(t) + q_i^T \Big( p_u^{(1)}(t) + |N(u)|^{-1/2} \sum_{j \in N(u)} y_j \Big) + |R^k(i;u)|^{-1/2} \sum_{j \in R^k(i;u)} (r_{uj} - b_{uj})\, w_{ij} + |N^k(i;u)|^{-1/2} \sum_{j \in N^k(i;u)} c_{ij}$$

• f = 170, k = 300 → RMSE = 0.8827
• To further improve accuracy, we add a more elaborate temporal model for the user bias:

$$\hat{r}_{ui} = \mu + b_u^{(3)}(t) + b_i(t) + q_i^T \Big( p_u^{(1)}(t) + |N(u)|^{-1/2} \sum_{j \in N(u)} y_j \Big) + |R^k(i;u)|^{-1/2} \sum_{j \in R^k(i;u)} (r_{uj} - b_{uj})\, w_{ij} + |N^k(i;u)|^{-1/2} \sum_{j \in N^k(i;u)} c_{ij}$$

• f = 170, k = 300 → RMSE = 0.8786

EXTRA: Shrinking Towards Recent Actions
• To correct $\hat{r}_{ui}$
• Shrink $\hat{r}_{ui}$ towards the average rating of $u$ on day $t$
• The single day effect is among the strongest temporal effects in the data

$$\hat{r}_{ui} \leftarrow \frac{\hat{r}_{ui} + c_{ut} \cdot \bar{r}_{ut}}{1 + c_{ut}}$$

$$c_{ut} = \alpha \cdot n_{ut} \cdot \exp(-\beta \cdot V_{ut}), \qquad \alpha = 8, \quad \beta = 11$$

• $n_{ut}$ – the number of ratings $u$ gave on day $t$
• $\bar{r}_{ut}$ – the mean rating of $u$ on day $t$
• $V_{ut}$ – the variance of $u$'s ratings on day $t$

Shrinking Towards Recent Actions
• A stronger correction accounts for periods longer than a single day
• And tries to characterize the recent user behavior on similar movies

$$w_{ij}^u = s_{ij} \cdot \exp(-\gamma \cdot |t_{ui} - t_{uj}|)$$

$$n_{ui} = \sum_{j:\, u \text{ rated } j} w_{ij}^u, \qquad c_{ui} = \alpha \cdot n_{ui} \cdot \exp(-\beta \cdot V_{ui})$$

$$\hat{r}_{ui} \leftarrow \frac{\hat{r}_{ui} + c_{ui} \cdot \bar{r}_{ui}}{1 + c_{ui}}$$

$$\bar{r}_{ui} = \frac{\sum_{j:\, u \text{ rated } j} w_{ij}^u\, r_{uj}}{\sum_{j:\, u \text{ rated } j} w_{ij}^u}, \qquad V_{ui} = \frac{\sum_{j:\, u \text{ rated } j} w_{ij}^u\, (r_{uj})^2}{\sum_{j:\, u \text{ rated } j} w_{ij}^u} - (\bar{r}_{ui})^2$$

• $\gamma$ – time-decay constant (its value is not given on the slide)

Q&A
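For reference, the basic item-item rule from the Neighborhood Models slides (shrunk similarity $s_{ij} = \frac{n_{ij}}{n_{ij} + \lambda_2} \rho_{ij}$ followed by a similarity-weighted average of the neighbors' residuals) can be sketched as below. The function names and the `(s_ij, r_uj, b_uj)` tuple layout are assumptions for this example, the neighbor set $S^k(i;u)$ is taken as already selected, and the fallback to the baseline when the weights sum to zero is a choice made here rather than something stated on the slides.

```python
def shrunk_similarity(rho_ij, n_ij, lam2=100.0):
    """s_ij = n_ij / (n_ij + lam2) * rho_ij.

    Correlations supported by few common raters are pulled towards zero.
    """
    return n_ij / (n_ij + lam2) * rho_ij


def knn_predict(b_ui, neighbors):
    """b_ui + sum(s_ij * (r_uj - b_uj)) / sum(s_ij) over j in S^k(i;u).

    neighbors: list of (s_ij, r_uj, b_uj) tuples for the k most similar
    items that the user has rated (assumed to be picked beforehand).
    """
    total = sum(s for s, _, _ in neighbors)
    if total == 0:
        # No usable neighbors: fall back to the baseline (assumption).
        return b_ui
    weighted = sum(s * (r - b) for s, r, b in neighbors)
    return b_ui + weighted / total


# Hypothetical numbers: two neighbors of the target item.
s1 = shrunk_similarity(0.8, 100)   # halved, since n_ij equals lam2
s2 = shrunk_similarity(0.6, 20)
print(knn_predict(3.9, [(s1, 5, 4.0), (s2, 3, 3.5)]))
```

Because the weights are normalized by their own sum, this rule relies fully on whatever neighbors exist, which is the weakness the later slides address with the global weights $w_{ij}$ and $c_{ij}$.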