This disaster recovery plan provides

advertisement

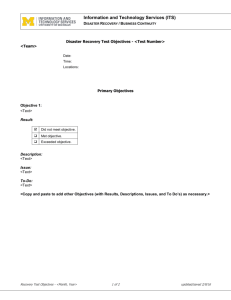

Running Head: Disaster Recovery Plan Broadband Solutions Disaster Recovery Plan Broadband Solutions Brandon Ebert University of Advancing Technology NTW440 Kevin McLaughlin June 21, 2013 1 2 Disaster Recovery Plan Broadband Solutions Disaster Recovery Plan (DRP) for ISP Systems Plan and related Business Processes Business Process Information Systems Information Systems Information Systems Information Systems System Support Software Engineering Accounting Accounting Human Resources Feature Web Hosting, Email, DNS Customer Database Customer Support Trouble Ticket System Phone Systems Code repository Accounts receivable, Account payable Payroll Employee Data Relevant Technical Components Server, email software, Web software, user data base Hardware, User Database, COTs software User Database, Server hardware, Remedy Software, Server Hardware Cisco hardware, software, PBX, VOIP systems Server Hardware, Code repository, file server Server Hardware, Accounting Software, Business partner databases, Customer database Server Hardware, employee database Server Hardware, employee database, insurance and health providers March 14, 2016 Disaster Recovery Plan 3 Table of Contents 1. Purpose and Objective ............................................................................................................................................ 4 Scope ............................................................................................................................................................................ 4 2. Dependencies.......................................................................................................................................................... 4 3. Disaster Recovery Strategies................................................................................................................................... 6 4. Disaster Recovery Procedures ................................................................................................................................ 6 Response Phase............................................................................................................................................................ 6 Resumption Phase........................................................................................................................................................ 7 Data Center Recovery ............................................................................................................................................... 7 Internal or External Dependency Recovery .............................................................................................................. 9 Significant Network or Other Issue Recovery (Defined by quality of service guidelines) ...................................... 10 Restoration Phase ...................................................................................................................................................... 10 Data Center Recovery ............................................................................................................................................. 10 Internal or External Dependency Recovery ............................................................................................................ 11 Significant Network or Other Issue Recovery (Defined by quality of service guidelines) ...................................... 12 Appendix A: Disaster Recovery Contacts - Admin Contact List ..................................................................................... 13 Appendix B: Document Maintenance Responsibilities and Revision History ............................................................... 15 Appendix C: Server Farm Details ...................................................................................... Error! Bookmark not defined. Appendix D: Glossary/Terms ......................................................................................................................................... 20 Disaster Recovery Plan 1. 4 Purpose and Objective Broadband Solutions developed this disaster recovery plan (DRP) to be used in the event of a significant disruption to the features listed in the table above. The goal of this plan is to outline the key recovery steps to be performed during and after a disruption to return to normal operations as soon as possible. Scope The scope of this DRP document addresses technical recovery only in the event of a significant disruption. The intent of the DRP is to be used in conjunction with the business continuity plan (BCP) Broadband Solutions has. A DRP is a subset of the overall recovery process contained in the BCP. Plans for the recovery of people, infrastructure, and internal and external dependencies not directly relevant to the technical recovery outlined herein are included in the Business Continuity Plan and/or the Corporate Incident Response and Incident Management plans Broadband solutions has in place. This disaster recovery plan provides: - Guidelines for determining plan activation; - Technical response flow and recovery strategy; - Guidelines for recovery procedures; - References to key Business Resumption Plans and technical dependencies; - Rollback procedures that will be implemented to return to standard operating state; - Checklists outlining considerations for escalation, incident management, and plan activation. The specific objectives of this disaster recovery plan are to: - Immediately mobilize a core group of leaders to assess the technical ramifications of a situation; - Set technical priorities for the recovery team during the recovery period; - Minimize the impact of the disruption to the impacted features and business groups; - Stage the restoration of operations to full processing capabilities; - Enable rollback operations once the disruption has been resolved if determined appropriate by the recovery team. Within the recovery procedures there are significant dependencies between and supporting technical groups within and outside Broadband Solutions. This plan is designed to identify the steps that are expected to take to coordinate with other groups / vendors to enable their own recovery. This plan is not intended to outline all the steps or recovery procedures that other departments need to take in the event of a disruption, or in the recovery from a disruption. 2. Dependencies This section outlines the dependencies made during the development of this disaster recovery plan. If and when needed the DR TEAM will coordinate with their partner groups as needed to enable recovery. 5 Disaster Recovery Plan Dependency User Interface / Rendering Assumptions Presentation components Business Intelligence / Reporting Processing components Network Layers Infrastructure components Users (end users, power users, administrators) are unable to access the system through any part of the instance (e.g. client or server side, web interface or downloaded application). Infrastructure and back-end services are still assumed to be active/running. The collection, logging, filtering, and delivery of reported information to end users is not functioning (with or without the user interface layer also being impacted). Standard backup processes (e.g. tape backups) are not impacted, but the active / passive or mirrored processes are not functioning. Specific types of disruptions could include components that process, match and transforms information from the other layers. This includes business transaction processing, report processing and data parsing. Connectivity to network resources is compromised and/or significant latency issues in the network exist that result in lowered performance in other layers. Assumption is that terminal connections, serially attached devices and inputs are still functional. Loss of SAN, local area storage, or other storage component. Storage Layer Infrastructure components Database Layer Database storage components Data within the data stores is compromised and is either inaccessible, corrupt, or unavailable Hardware/Host Layer Hardware components Virtualizations (VM's) Virtual Layer Physical components are unavailable or affected by a given event Virtual components are unavailable Hardware and hosting services are accessible Administration Infrastructure Layer Support functions are disabled such as management services, backup services, and log transfer functions. Other services are presumed functional Interfaces and intersystem communications corrupt or compromised Internal/External Dependencies 6 Disaster Recovery Plan 3. Disaster Recovery Strategies The overall DR strategy of Broadband Solutions is summarized in the table below and documented in more detail in the supporting sections. These scenarios and strategies are consistent across the technical layers (user interface, reporting, etc.) Data Center Disruption Significant Dependency (Internal or External) Disruption Failover to alternate Data Center Reroute core processes to another Data Center (without full failover) Operate at a deprecated service level Take no action 4. Reroute core functions to backup / alternate provider Significant network or other issues Reroute operations to backup processing unit / service (load balancing, caching) Participate in recovery strategies as available Wait for the restoration of service, provide communication as needed to stakeholders Wait for service to be restored, communicate with core stakholders as needed Disaster Recovery Procedures A disaster recovery event can be broken out into three phases, the response, the resumption, and the restoration. These phases are also managed in parallel with any corresponding business continuity recovery procedures summarized in the business continuity plan. Response Phase: The immediate actions following a significant event. •On call personnel paged •Decision made around recovery strategies to be taken •Full recovery team identified Resumption Phase: Activities necessary to resume services after team has been notified. •Recovery procedures implemented •Coordination with other departments executed as needed Restoration Phase: Tasks taken to restore service to previous levels. •Rollback procedures implemented •Operations restored Response Phase 7 Disaster Recovery Plan The following are the activities, parties and items necessary for a DR response in this phase. Please note these procedures are the same regardless of the triggering event (e.g. whether caused by a Data Center disruption or other scenario). Response Phase Recovery Procedures – All DR Event Scenarios Step Identify issue, page on call / Designated Responsible Individual (DR TEAM) Identify the team members needed for recovery Owner Emergency Response Team (ERT) ERT Duration 20 minutes Components Issue communicated / escalated Priority set 30 minutes Selection of core team members required for restoration phase from among the following groups: Service Delivery Human Resources Tier 1 Support Tier 2 Support Change Management Software Engineering Systems Engineering IT Accounting Legal System Support & Maintenance (SS&M) QA Security Primary bridge line: 480-441-9660 Secondary bridge line: 480-726-1280 Alternate / backup communication tools: email, Instant Message Documentation / tracking of timelines and next decisions Creation of disaster recovery event command center / “war room” as needed Establish a conference line for a bridge call to coordinate next steps Communications 10 minutes Communicate the specific recovery roles and determine which recovery strategy will be pursued. Communications 30 minutes This information is also summarized by feature in Appendix A: Disaster Recovery Contacts - Admin Contact List. Resumption Phase During the resumption phase, the steps taken to enable recovery will vary based on the type of issue. The procedures for each recovery scenario are summarized below. Data Center Recovery Full Data Center Failover Step Initiate Failover Owner ERT Duration TBD Components Restoration procedures identified Risks assessed for each procedure Coordination points between groups defined Issue communication process and triage efforts 8 Disaster Recovery Plan Step Owner Duration Complete Failover ERT TBD Test Recovery ERT TBD Failover deemed successful ERT TBD Components established Recovery steps executed, including handoffs between key dependencies Tests assigned and performed Results summarized and communicated to group Timeline for recovery actions associated with the failover the technical components between different data centers to provide geo-redundant operations. Coordination of recovery actions is crucial. A timeline is necessary in order to manage recovery between different groups and layers. Recovery Timeline T+30 Recovery T+45 Complete T+ 15 Failback Begins T+ 15 T+30 Failback Ends T+45 Detect/Report Event Redirect Traffic to Alternate Data Center Redirect traffic to rebuilt DB and servers Failover Database to Alternate Data Center Redirect Internal Interfaces to Failover DB Redirect Internal Interfaces to Rebuilt Primary DB Redirect External Interfaces to Failover DB Redirect External Interfaces to Rebuilt Primary DB <Function> Internal Dependencies Rebuild Primary DB External Dependencies DB Layer GFS/Network Layer Operations Event Instantiation Failover UI layer to backup services and redirect to failover DB Failback UI Layer to Rebuilt Primary DB Reroute critical processes to alternate Data Center Step Change Router configuration Owner IT Duration 40 min Components Backup Router configurations Install DR Router configurations 9 Disaster Recovery Plan Step Change DNS configuration Owner IT Duration 30 min Test changes to DNS and Router configuration IT 30 min Components Backup DNS Modify DNS records to point to new location Take no action – monitor for Data Center recovery This recovery procedure would only be the chosen alternative in the event no other options were available to (e.g. the cause and recovery of the Data Center is fully in the control of another department or vendor). Step Owner Duration Components Track communication and SS&M As needed status with the core recovery team. Send out frequent updates to ERT As needed core stakeholders with the status. Internal or External Dependency Recovery Reroute operations to backup provider Step Move support systems to backup location Test functionality of backup location Owner Tier Support, SS&M Tier support, SS&M Duration 1 day Components Relocate users to backup location 1 hour Execute available recovery procedures Step Inform other teams about technical dependencies Owner Duration Communications As needed Components Take no action – monitor status This recovery procedure would only be the chosen alternative in the event no other options were available to (e.g. the cause and recovery of the disruption is fully in the control of another department or vendor). Step Owner Duration Components Track communication and ERT As needed Provide feedback about system availability status with the core recovery team. Send out frequent updates to ERT As needed core stakeholders with the status. 10 Disaster Recovery Plan Significant Network or Other Issue Recovery (Defined by quality of service guidelines) Execute available recovery procedures Step Inform other teams about technical dependencies Owner DR TEAM Duration As needed Components Hardware (CPU, Memory, Hard disk, Network requirements) Take no action – monitor status This recovery procedure would only be the chosen alternative in the event no other options were available to (e.g. the cause and recovery of the internal or external dependency is fully in the control of another department or vendor). Step Owner Duration Components Track communication and DR TEAM As needed Provide feedback about SharePoint service availability status with the core recovery team. Send out frequent updates to DR TEAM As needed core stakeholders with the status. Restoration Phase During the restoration phase, the steps taken to enable recovery will vary based on the type of issue. The procedures for each recovery scenario are summarized below. Data Center Recovery Full Data Center Restoration Step Determine whether failback to original Data Center will be pursued Original data center restored Complete Failback Owner DR TEAM Duration TBD Components Restoration procedures determined DR TEAM DR Team TBD TBD Server Farm level recovery Failback steps executed, including handoffs between key dependencies Test Failback DR Team TBD Tests assigned and performed Results summarized and communicated to group Issues (if any) communicated to group Determine whether failback DR TEAM TBD Declaration of successful failback and communication was successful to stakeholder group. Disaster recovery procedures closed. Results summarized, post mortem performed, and DRP updated (as needed). The following section contains steps for the restoration procedures. Full Server Farm Recovery 11 Disaster Recovery Plan This section describes the process for recovering from a farm-level failure, for a: three-tier server farm consisting of a database server, an application server that provides Index, Query, InfoPath Forms Services and Excel Calculation Services, and two front-end Web servers that service search queries and provide Web content. Front-End Web Servers (Queries, Content) Application Server (Excel Calculation Services, Indexing Services Dedicated SQL Server The following diagram describes the recovery process for a farm. Full Farm Recovery Process SQL Server 2. Install Operating System and patches Install Office SharePoint Server (type: Complete) Run SharePoint Products and Technologies Wizard to create Central Administration site, Configuration database Front-End Web Server 1. Install Operating System and patches Install SQL Server and patches Application Server Prepare servers and install 3. Install Operating System and patches Install IIS, ASP.NET, and the .NET framework 3.0 Install Office SharePoint Server (type: front-end Web) Prepare to restore Restore backups Redeploy customizations 7a. Restore Office SharePoint Server or SQL Server backups, highest priority first. 4. Start Search services 5. Recreate Web applications 7b. Restore Office SharePoint Server backups for Search, other applications, highest priority first. 11. Optional. Reconfigure alternate access mappings 12. Restart timer jobs. 10. Reconfigure IIS settings 6. Run SharePoint Products and Technologies Wizard 9. Optional. Set one or more front-end Web servers to serve queries 13. (Optional) Redeploy solutions and reactivate features or restore 12 hive files Overview of a farm-level recovery Add Farm restore steps Internal or External Dependency Recovery Take no action – monitor status This recovery procedure would only be the chosen alternative in the event no other options were available to (e.g. the cause and recovery of the disruption is fully in the control of another department or vendor). Step Owner Duration Components Track communication and Communications As needed Provide feedback about SharePoint service status with the core recovery availability 12 Disaster Recovery Plan Step team. Send out frequent updates to core stakeholders with the status. Owner Duration Components Communications As needed Significant Network or Other Issue Recovery (Defined by quality of service guidelines) Execute available recovery procedures Step Communicate with Network Provider Owner System Engineering Assess impact to network IT Determine need to move to backup site ERT Duration As needed Components ISP Sys eng ISP IT Routers, Switches Demarc access ERT Take no action – monitor status This recovery procedure would only be the chosen alternative in the event no other options were available to (e.g. the cause and recovery of the internal or external dependency is fully in the control of another department or vendor). Step Owner Duration Components Track communication and Communications As needed Phone, email systems status with the core recovery team. Send out frequent updates to Communications As needed core stakeholders with the status. 13 Disaster Recovery Plan Appendix A: Disaster Recovery Contacts - Admin Contact List The critical team members who would be involved in recovery procedures for feature sets are summarized below. Feature Name Contact Lists Web Hosting, Email, DNS Customer Database Customer Support Trouble Ticket System Phone Systems Code repository Accounts receivable, Account payable Payroll Employee Data IT, Communications IT, Communications IT, Communications IT, Communications IT, Communications IT, Communications Finance, IT, HR Finance, IT, HR IT, HR For the key internal and external dependencies identified, the following are the primary contacts. Emergency Response Team The Emergency Response Team (ERT) is managed and control by the Crisis Management Team (CMT) the CMT will is responsible for activating all required team and managing those teams during a crisis. CMT team consists of upper management this would include the CEO, CIO, CFO, and President. These individuals will be responsible also for assisting emergency response teams like the fire and police if necessary and identifying a alternate location if necessary. ERT is consists of the following organizations within the company: Sue Patterson: Communication Terri Murray: CMT, Tom Nicastro:HR Volney Douglas: Legal Rich Edding/Brandon Ebert: IT and Security Communications: These individuals consist of public relations and individuals that are needed for communication between all the other ER Team within the company. They are responsible for relaying vital information and any orders from the CMT. Human Resources: These individuals are responsible for making sure the needs of the employees are met during a crisis this could me making sure that there is staff available to meet vital resource needs. They are also responsible for emergency medical insurance in case there is a need to go out of network from the current plan. They will also field employee concerns and make sure employees are getting paid and dealing with any other employee needs. Legal: Is responsible for making sure that the company is compliant with any laws and regulations. They could also be needed to make sure that when the company has had a disaster that the company is protected from criminal or civil actions from the employees or the public. Finance: Is responsible for making sure that all accounts payable and receivable are still being paid and received. They also are needed to verify expenditures that employees are making during a disaster are legitimate and not an employee or vendor taking advantage during a time of disaster that can be chaotic and disorganized. IT: is responsible for bringing systems back online and that there is as minimal disruption within the company as possible. They would also be responsible handling all computer incidents that include malicious code, denial of service, data proliferation, inappropriate use, etc and will be able to respond and remove threats and return the data systems back to organizations as soon as possible. Business Units: will have members assigned to work with each of the above mentioned groups in order to return the business unit back to regular operations. These units will coordinate the needs of the group to the Disaster Recovery Plan 14 appropriate groups. The following people will work directly with a specific group to make sure the needs of the business group are met. In addition the key BCP individuals are: Running Head: Disaster Recovery Plan Broadband Solutions 1 Appendix B: Risk Assessment RISK ASSESSMENT WORKSHEET Program Process or Business Practice General description of the program, process or business practice under review. Separate action steps that could expose the process to various types of risk would be described in individual rows. Information Type/Sensitivity Level Description of the types of information stored, level of sensitivity, how the information is stored (pc, laptop, paper, file cabinet, etc), who has access. Associated Risks What could go wrong? What would be the impact be to the organization? Where is the organization vulnerable? How could this information be compromised? Examples of Current Controls Description of control activities currently in place to mitigate the potential risks. Determination of the Effectiveness of this controls currently in place Assessment by individuals involved in the business practice, procedures, risks and current control activities, assessment should be as accurate as possible and completely documented. This is to determine if the control is effective. Typically a Yes or No answer. Regulation or Standard Referred to Reference Regulation or Standard that this control is to address of mitigate. Next Action; Require by whom and when If control improvements need to be made, document what will be accomplished, by whom and when. 16 Disaster Recovery Plan Customer 1 web hosting User email accounts and email. Top priority since this holds user data and PII which if not handled carefully can cause Identity theft. Adminsrators have access along with customer it is stored on email servers Data loss, Data poliferation, spam sent from hacked accounts, Identity theft from hacked web accounts, fire, administrator steals data,hardware failure, loss of power Firewalls, Raid 5 for redundancy, onsite backups of data. Generator for loss of power The current controls in place to protect customer email data in not complete and uneffective Currently no regulation on customer data retention There are many things to make customer email more secure. 1. technical controls: IDS, Log auditing, Offsite backup. 2.Management Controls: Process for handling email data, Least privilege practice, background checks for employees. Determine data owners,process for restoration of data incident handling process, user awareness. 17 Disaster Recovery Plan Customer 2 Web hosting User web accounts and web. Top priority since this holds user data and PII which if not handled carefully can cause Identity theft. Adminsrators have access along with customer it is stored on web servers Data loss, Data poliferation, spam sent from hacked accounts, Identity theft from hacked web accounts, fire, administrator steals data,hardware failure, loss of power Firewalls, Raid 5 for redundancy, onsite backups of data. Generator for loss of power The current controls in place to protect customer web data in not complete and uneffective Currently no regulation on customer data retention There are many things to make customer Web more secure. 1. technical controls: IDS, Log auditing, Offsite backup. 2.Management Controls: Process for handling email data, Least privilege practice, background checks for employees. Determine data owners, process for restoration of data incident handling process, user awareness. 18 Disaster Recovery Plan Customer 3 database database of customers their credit card information along with address, phone number all stored in on server harddrive. Loss of customer data base due to fire, media failure theft of customer data by hackers or internal employee,hardware failure, loss of power. Firewalls, Raid 5 for redundancy, onsite backups of data. Generator for loss of power The current controls in place to protect customer data in not complete and uneffective Currently no regulation on customer data retention There are many things to make customer database more secure. 1. technical controls: IDS, Log auditing, Offsite backup,process for restoration of data 2.Management Controls: Process for handling Customer credit card data, Least privilege practice, background checks for employees. Determine data owners, , Identify PII incident handling process, user awareness. 19 Disaster Recovery Plan Customer Internet 4 connectivity Customer 5 Support As an ISP it is impariative to maintain customer internet connectivity in order to survive there are currently to gateways out of the Broadband Solutions network and several networks connected to the backbone of the network providing customer connectivity. Customer support is vital in order to maintain customer satisfactions extended loss of connectivity to internet for customers, due to fire and datacenter where backbone located, hardware failure, Upstream provider having network issues, routing issues. loss of power. denial of service redundant providers incase on provider has issues, spare routers for hardware failures, Generator for loss of power The current controls in place to protect customer connectivity not complete and uneffective Currently no regulation loss of phone system, customer database, or intenet connectivity, fire in support office, Redundant phone lines, cold site to move to in order to continue to support customers. The current controls in place to protect customer connectivity not complete and uneffective No regulations 1. Technical controls: Redundant upstream providers, Redundant hardware to prevent outages due to power failures, cold datacenter for fires or other natural disasters, 2. Management controls: SLA with upstream providers, contact lists for emergencies like DOS or loss of connectivity, process to replace hardware during failures, 1.Techinical controls:Redundant phone systems, completion of controls for customer database 2. Management controls:SLA with phone providers, process for cold site, contact list for cold site and phone providers. 20 Disaster Recovery Plan Trouble 6 ticket system inablity to track customer issues stored in a database and central office data center. Hardwar failure, data loss, fire, compromise of data Raid on server to protect against hard drive failure, firewalls, onsite backup of database. The current controls in place to protect customer connectivity not complete and uneffective no regulations 1. Technical controls: Redundant hardware to prevent outages due to power failures, cold datacenter for fires or other natural disasters, offsite backups of data, IPS, and audit of logs on firewalls, IPS, routers, workstations, servers 2. Management controls: Identify data owner, process for restoration of data, incident handling process, user awareness. Appendix C: Business Impact Analyses Degree Political of or Financial Probability Overall Activity Owner Impact Sensitivity Costs of Loss Weight Unit Process ID Activity (Type of Data) Service Delivery SD-13-001 Sales Sue Patterson SD-13-002 RMA of hardware Sue Patterson SD-13-003 Repair of hardware Sue Patterson 2 2 2 3 2.25 3 2 3 2 2.5 1 2 3 3 2.25 21 Disaster Recovery Plan Human Resources SD-13-004 Replacement of hardware Sue Patterson SD-13-005 Shipping of Hardware Sue Patterson HR-13-001 HR-13-002 HR-13-003 HR-13-004 Teir 1 support TS1-13-001 TS1-13-002 TS1-13-003 TS1-13-004 TS1-13-005 Teir 2 support TS2-13-001 TS2-13-003 TS2-13-004 TS2-13-005 TS2-13-006 TS2-13-007 TS2-13-008 Change Management CM-13-001 CM-13-002 CM-13-003 Employee Pay Employee Health Care New Hire Public Relations Technical Support level 1 (Initial Customer Contact) Field Customer emails Direct calls to approriate Organizations Initial customer product support Customer Account Setup Technical Support level 2 Upgrade system software Deploy software patches Dispatch SS&M Tech Monitor system for failures or events Troubleshoot hardware issues Deploy third party software Change Control Documentation Control Process Control 1 2 3 3 2.25 1 2 3 3 2.25 1 1 2 2 1.5 1 3 1 2 2 1 1 3 2 2 3 3 1.5 2.75 1.75 1 1 2 1 1.25 1 2 2 2 1.75 2 2 2 2 2 1 1 2 3 1.75 2 1 1 2 1.5 1 1 2 2 1.5 3 3 2 2 2.5 3 3 2 2 2.5 1 1 2 3 1.75 1 1 1 2 1.25 2 2 2 3 2.25 3 1 3 1 2 2 3 2 2.75 1.5 1 2 1 2 2 2 2 3 1.5 2.25 Terri Murray Terri Murray Terri Murray Terri Murray Ted Milzarski Tom Nicastro Tom Nicastro Ted Milzarski Ted Milzarski Rich Eddings Rich Eddings Rich Eddings Rich Eddings Rich Eddings Rich Eddings Rich Eddings Volney Douglas Volney Douglas Volney Douglas 22 Disaster Recovery Plan Software Engineering CM-13-004 Continuous Improvement Volney Douglas SWEng-13-001 New software development Sam Buckwalter SWEng-13-002 patch development to existing software. Sam Buckwalter troubleshoot bugs in software Sam Buckwalter SWEng-13-003 Systems Engineering SYSEng-13-001 SYSEng-13-002 SYSEng-13-003 Physical Security Technical Security Operations Management Accounting Patch Management PS-13-008 TechSec-13-009 OM-13-010 AC-13-011 PM-13-012 New System Development Develop upgrades to system hardware Obsolecence review of Third party apps and hardware Development of physical Security controls and mainatian physical security of campus Security of information systems (firewalls, access controls, log analysys and auditing of technical controls.) Initial Deployment of systems and software to customer locations accounts billiable and accounts payable responsible for third party software patching and 3 3 3 3 3 3 3 1 2 2.25 1 1 2 2 1.5 2 2 2 1 1.75 2 2 3 3 2.5 2 2 3 3 2.5 3 3 3 3 3 1 1 1 1 1 1 1 1 1 1 2 3 2 3 2.5 1 1 1 2 1.25 1 1 3 3 2 Christian Woolf Christian Woolf Christian Woolf Derek Johnson Brandon Ebert Steve Arnet Bill Romans Glen Carlish 23 Disaster Recovery Plan testing. Third Party Software System Support & Maintance Quality Assurance TPS-13-013 SS&M-13-014 track licencing of Thirdparty software and where it is installed. Maintain physical hardware at customer location and support customer location. Verify project is following company policies and procedures verifing end user products. Sue Olsen 1 1 2 3 1.75 1 2 2 2 1.75 2 2 2 3 2.25 Dan Tomlinson Weldon Charles Running Head: Disaster Recovery Plan Broadband Solutions 1 Appendix D: Glossary/Terms Standard Operating State: Production state where services are functioning at standard state levels. In contrast to recovery state operating levels, this can support business functions at minimum but deprecated levels. Presentation Layer: Layer which users interact with. This typically encompasses systems that support the UI, manage rendering, and captures user interactions. User responses are parsed and system requests are passed for processing and data retrieval to the appropriate layer. Processing Layer: System layer which processes and synthesizes user input, data output, and transactional operations within an application stack. Typically this layer processes data from the other layers. Typically these services are folded into the presentation and database layer, however for intensive applications; this is usually broken out into its own layer. Database Layer: The database layer is where data typically resides in an application stack. Typically data is stored in a relational database such as SQL Server, Microsoft Access, or Oracle, but it can be stored as XML, raw data, or tables. This layer typically is optimized for data querying, processing and retrieval. Network Layer: The network layer is responsible for directing and managing traffic between physical hosts. It is typically an infrastructure layer and is usually outside the purview of most business units. This layer usually supports load balancing, geo-redundancy, and clustering. Storage Layer: This is typically an infrastructure layer and provides data storage and access. In most environments this is usually regarded as SAN or NAS storage. Hardware/Host Layer: This layer refers to the physical machines that all other layers are reliant upon. Depending on the organization, management of the physical layer can be performed by the stack owner or the purview of an infrastructure support group. Virtualization Layer: In some environments virtual machines (VM's) are used to partition/encapsulate a machine's resources to behave as separate distinct hosts. The virtualization layer refers to these virtual machines. Administrative Layer: The administrative layer encompasses the supporting technology components which provide access, administration, backups, and monitoring of the other layers.