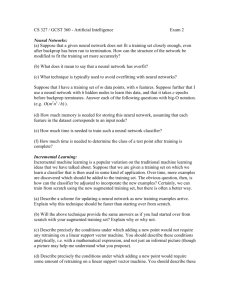

From linear classifiers to neural networks

Skeleton
• Recap - linear classifier
• Nonlinear discriminant function
• Nonlinear cost function
• Example linear classifiers
• Introduction to neural networks
• Representation power of sigmoidal neural networks

Linear Classifier: Recap & Notation
• We focus on two-class classification for the entire class
• $\boldsymbol{x}^{(n)} = [x_1^{(n)}, x_2^{(n)}, \dots, x_D^{(n)}]^T$ - Input feature
• $n$ - index of training tokens, $D$ - feature dimension
• $\boldsymbol{w} = [w_1, w_2, \dots, w_D]^T$ - Weight vector
• $a^{(n)} = b + \boldsymbol{w}^T \boldsymbol{x}^{(n)}$ - Linear output
• $y^{(n)}$ - Predicted class, using either label convention:
$$y^{(n)} = \begin{cases} 1 & \text{if } a^{(n)} \ge 0 \\ 0 & \text{otherwise} \end{cases} \qquad \text{or} \qquad y^{(n)} = \begin{cases} 1 & \text{if } a^{(n)} \ge 0 \\ -1 & \text{otherwise} \end{cases}$$
• $t^{(n)}$ - Labelled class

Discriminant function
• $a^{(n)} = b + \boldsymbol{w}^T \boldsymbol{x}^{(n)}$ - Linear output
• $y^{(n)} = g(a^{(n)})$, that is, $y^{(n)} = 1$ if $a^{(n)} \ge 0$ and $-1$ otherwise
• $g(a)$ - Nonlinear discriminant function:
$$g(a) = \begin{cases} 1 & \text{if } a \ge 0 \\ -1 & \text{otherwise} \end{cases}$$
[Figure: step function $g(a)$, jumping from $-1$ to $1$ at $a = 0$]

Loss function
• To evaluate the performance of the classifier:
$$\mathcal{L}\left(y^{(1:N)}, t^{(1:N)}\right) = \sum_{n=1}^{N} \ell\left(y^{(n)}, t^{(n)}\right)$$
• Loss function $\ell(y, t) = \mathbb{1}[y \ne t]$
[Figure: $\ell(y, 1)$ and $\ell(y, -1)$ plotted against $y$]

Structure of linear classifier
[Diagram: inputs $1, x_1^{(n)}, \dots, x_D^{(n)}$ feed $a^{(n)} = b + \boldsymbol{w}^T \boldsymbol{x}^{(n)}$, then $y^{(n)} = g(a^{(n)})$, then the loss $\ell(\cdot, t^{(n)})$]

Alternatively
• Nonlinear function $g(a) = a$
• Loss function $\ell(y, t) = u(-yt)$, where $u(\cdot)$ is the unit step function
[Figure: $\ell(y, 1)$ and $\ell(y, -1)$ plotted against $y$; the classifier structure is the same as in the previous diagram]

Ideal case - Problem?
• Nonlinear function: a step between $-1$ and $1$
• Loss function: a step in $y$ for both $\ell(y, 1)$ and $\ell(y, -1)$
• Not differentiable. Cannot train using gradient methods
• We need a proxy for both.

Nonlinear Discriminant function
• Our Goal: Find a function that is
  • Differentiable
  • Approximates the step function
• Solution: Sigmoid function
• Definition: A bounded, differentiable and monotonically increasing function.
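To make the "not differentiable" problem concrete, here is a minimal sketch of the recap classifier with the step discriminant and the 0-1 loss. The data points, weights, and helper names are made up for illustration and are not from the slides. A small nudge to a weight leaves the total loss unchanged, so a finite-difference slope carries no training signal.

```python
def linear_output(w, b, x):
    """a = b + w^T x"""
    return b + sum(wi * xi for wi, xi in zip(w, x))


def step(a):
    """Step discriminant g(a): 1 if a >= 0, else -1."""
    return 1 if a >= 0 else -1


def zero_one_loss(y, t):
    """0-1 loss: 1 when the prediction differs from the label."""
    return 1 if y != t else 0


def total_loss(w, b, xs, ts):
    """Sum of per-token 0-1 losses over the training set."""
    return sum(zero_one_loss(step(linear_output(w, b, x)), t)
               for x, t in zip(xs, ts))


# Hypothetical toy data: four 2-D tokens with labels in {-1, +1}.
xs = [[2.0, 1.0], [1.0, 3.0], [-1.0, -2.0], [-2.0, 0.5]]
ts = [1, 1, -1, 1]
w, b = [1.0, 0.5], 0.0

loss = total_loss(w, b, xs, ts)

# Finite-difference slope of the loss with respect to w[0].
eps = 1e-6
nudged = total_loss([w[0] + eps, w[1]], b, xs, ts)
finite_diff = (nudged - loss) / eps

print("total 0-1 loss:", loss)
print("finite-difference slope:", finite_diff)
```

The loss is piecewise constant in the weights, which is why the slides look for differentiable proxies for both $g$ and $\ell$.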
Sigmoid Function - Examples
• Logistic Function:
$$g(a) = \frac{1}{1 + e^{-a}}, \qquad g'(a) = \frac{e^{-a}}{\left(1 + e^{-a}\right)^2}$$
[Figure: $g(a)$ and $g'(a)$ for $a \in [-5, 5]$; $g$ rises from 0 to 1, $g'$ peaks at 0.25 at $a = 0$]

Sigmoid Function - Examples
• Hyperbolic Tangent:
$$g(a) = \tanh(a) = \frac{e^{a} - e^{-a}}{e^{a} + e^{-a}}$$
$$g'(a) = \frac{e^{a} + e^{-a}}{e^{a} + e^{-a}} - \frac{\left(e^{a} - e^{-a}\right)\left(e^{a} - e^{-a}\right)}{\left(e^{a} + e^{-a}\right)^2} = 1 - \tanh^2(a)$$
[Figure: $g(a)$ and $g'(a)$ for $a \in [-5, 5]$; $g$ rises from $-1$ to 1, $g'$ peaks at 1 at $a = 0$]

Nonlinear Loss function
• Our Goal: Find a function that is
  • Differentiable
  • An UPPER BOUND of the step function
• Why?
  • For training: minimizing the loss keeps the error from being large
  • For test: generalized error < generalized loss < upper bounds

Loss function example
• Square loss:
$$\ell(y, t) = (y - t)^2, \qquad \frac{\partial \ell}{\partial y} = 2(y - t)$$
[Figure: $\ell(y, 1)$ and $\ell(y, -1)$ plotted against $y$]
• Advantage: easy to solve, common for regression
• Disadvantage: punishes 'right' tokens

Loss function example
• Hinge loss:
$$\ell(y, t) = \begin{cases} 1 - ty & \text{if } ty < 1 \\ 0 & \text{otherwise} \end{cases} \qquad \frac{\partial \ell}{\partial y} = -t\, u(1 - ty)$$
where $u(\cdot)$ is the unit step function.
[Figure: $\ell(y, 1)$ and $\ell(y, -1)$ plotted against $y$]
• Advantage: easy to solve, good for classification

Linear classifiers example
• Nonlinear discriminant function $g(\cdot)$: Linear or Sigmoid
• Loss function $\ell(\cdot, t)$: Square or Hinge
• Structure: $\boldsymbol{z}^{(n)} = [1, x_1^{(n)}, \dots, x_D^{(n)}]^T$, $a^{(n)} = \boldsymbol{w}^T \boldsymbol{z}^{(n)}$, $y^{(n)} = g(a^{(n)})$, loss $\ell(y^{(n)}, t^{(n)})$
• Linear + Square: MSE classifier
• Sigmoid + Square: Nonlinear MSE classifier
• Linear + Hinge + Regularization: SVM

MSE Classifier
$$\sum_n \ell\left(y^{(n)}, t^{(n)}\right) = \sum_n \left(y^{(n)} - t^{(n)}\right)^2 = \sum_n \left(\boldsymbol{w}^T \boldsymbol{z}^{(n)} - t^{(n)}\right)^2$$
$$\nabla_{\boldsymbol{w}} \sum_n \left(\boldsymbol{w}^T \boldsymbol{z}^{(n)} - t^{(n)}\right)^2 = 2 \sum_n \left(\boldsymbol{w}^T \boldsymbol{z}^{(n)} - t^{(n)}\right) \boldsymbol{z}^{(n)} = 0$$
$$\sum_n \boldsymbol{z}^{(n)} \boldsymbol{z}^{(n)T} \boldsymbol{w} = \sum_n \boldsymbol{z}^{(n)} t^{(n)}$$
With $\boldsymbol{Z} = [\boldsymbol{z}^{(1)}, \dots, \boldsymbol{z}^{(N)}]$ and $\boldsymbol{t} = [t^{(1)}, \dots, t^{(N)}]^T$:
$$\boldsymbol{Z}\boldsymbol{Z}^T \boldsymbol{w} = \boldsymbol{Z}\boldsymbol{t}, \qquad \boldsymbol{w} = \left(\boldsymbol{Z}\boldsymbol{Z}^T\right)^{-1} \boldsymbol{Z}\boldsymbol{t}$$

Nonlinear MSE Classifier
$$\sum_n \ell\left(y^{(n)}, t^{(n)}\right) = \sum_n \left(y^{(n)} - t^{(n)}\right)^2 = \sum_n \left(g\left(\boldsymbol{w}^T \boldsymbol{x}^{(n)}\right) - t^{(n)}\right)^2$$

Training a Nonlinear MSE Classifier
$$\sum_n \ell\left(y^{(n)}, t^{(n)}\right) = \sum_n \left(g\left(\boldsymbol{w}^T \boldsymbol{x}^{(n)}\right) - t^{(n)}\right)^2$$
Chain rule:
$$\nabla_{\boldsymbol{w}} \sum_n \ell\left(y^{(n)}, t^{(n)}\right) = 2 \sum_n \left(g\left(\boldsymbol{w}^T \boldsymbol{x}^{(n)}\right) - t^{(n)}\right) g'\left(\boldsymbol{w}^T \boldsymbol{x}^{(n)}\right) \boldsymbol{x}^{(n)}$$
Disadvantage: Can be stagnant. Where $g'$ is close to 0 (the flat tails of the sigmoid), the gradient is close to 0 even if the prediction is wrong.

Introduction of neural network
• $y^{(n)}$ is a function of $\boldsymbol{x}^{(n)}$, $F(\boldsymbol{x}^{(n)})$
• For a linear classifier, this function takes a simple form
• What if we need more complicated functions?
[Diagrams: the network is built up one layer at a time, from the input $\boldsymbol{x}_0^{(n)}$ through hidden layers $\boldsymbol{a}_1^{(n)}, \boldsymbol{x}_1^{(n)}, \boldsymbol{a}_2^{(n)}, \boldsymbol{x}_2^{(n)}, \dots$ up to $\boldsymbol{x}_{L-1}^{(n)}$, $a_L^{(n)}$ and the output $y^{(n)}$]

Notation
• Input: $\boldsymbol{x}_0^{(n)}$, with $D_0$ components
• Hidden layer 1 ($D_1$ nodes): $\boldsymbol{a}_1^{(n)} = \boldsymbol{b}_1 + \boldsymbol{W}_1 \boldsymbol{x}_0^{(n)}$, $\boldsymbol{x}_1^{(n)} = g_1\left(\boldsymbol{a}_1^{(n)}\right)$
• Hidden layer 2 ($D_2$ nodes): $\boldsymbol{a}_2^{(n)} = \boldsymbol{b}_2 + \boldsymbol{W}_2 \boldsymbol{x}_1^{(n)}$, $\boldsymbol{x}_2^{(n)} = g_2\left(\boldsymbol{a}_2^{(n)}\right)$

Notation
• Hidden layer $L-1$ ($D_{L-1}$ nodes): $\boldsymbol{a}_{L-1}^{(n)} = \boldsymbol{b}_{L-1} + \boldsymbol{W}_{L-1} \boldsymbol{x}_{L-2}^{(n)}$, $\boldsymbol{x}_{L-1}^{(n)} = g_{L-1}\left(\boldsymbol{a}_{L-1}^{(n)}\right)$
• Output layer: $a_L^{(n)} = b_L + \boldsymbol{w}_L^T \boldsymbol{x}_{L-1}^{(n)}$, $y^{(n)} = g_L\left(a_L^{(n)}\right)$

Question
• $y^{(n)}$ is a function of $\boldsymbol{x}_0^{(n)}$, $F(\boldsymbol{x}_0^{(n)})$. How many kinds of function can be represented by a neural net?
• Are sigmoid functions good candidates for $g(\cdot)$?
• Answer: Given enough nodes, a 3-layer network with sigmoid or linear activation functions can approximate ANY function with bounded support sufficiently accurately.

Proof: representation power
• For simplicity, we only consider functions of 1 variable, with real input and real output. In this case, two layers are enough.
$$F(x) \approx \sum_{d=1}^{D} \left[F\left((d+1)\Delta\right) - F(d\Delta)\right] u(x - d\Delta)$$
• First Layer: $D$ hidden nodes, each node represents $x_1(d) = u(x_0 - d\Delta)$
• $g_1(\cdot)$ - logistic function, $w_1(d) = 1$, $b_1(d) = -d\Delta$
• Second (Output) layer: $w_2(d) = F\left((d+1)\Delta\right) - F(d\Delta)$
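The construction can be checked numerically with a small sketch. Everything below is illustrative rather than taken from the slides: the target function `target`, the grid, and the values of `DELTA`, `D`, and `GAIN` are assumptions. Two choices are worth flagging. First, the target is chosen so that $F(\Delta) = 0$, since the sum of increments starting at $d = 1$ telescopes to $F((k+1)\Delta) - F(\Delta)$. Second, the logistic gate is sharpened by a hypothetical gain factor so that it behaves like a step at the scale of $\Delta$; with a first-layer weight of exactly 1 the logistic would be much too smooth for this $\Delta$.

```python
import math


def unit_step(v):
    """u(v): 1 for v >= 0, else 0."""
    return 1.0 if v >= 0 else 0.0


def logistic(v):
    """Logistic function, written to avoid overflow for large |v|."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def target(x):
    """Hypothetical F with bounded support [0.2, 0.8]."""
    if 0.2 <= x <= 0.8:
        return math.sin(math.pi * (x - 0.2) / 0.6) ** 2
    return 0.0


def two_layer(F, x, delta, num_hidden, gate):
    """Sum over d of [F((d+1)*delta) - F(d*delta)] * gate(x - d*delta)."""
    total = 0.0
    for d in range(1, num_hidden + 1):
        hidden = gate(x - d * delta)                # first layer: x_1(d)
        weight = F((d + 1) * delta) - F(d * delta)  # second layer: w_2(d)
        total += weight * hidden
    return total


DELTA, D, GAIN = 0.01, 100, 2000.0  # GAIN is an assumption, not in the slides
grid = [i / 1000 for i in range(1001)]


def soft_gate(v):
    """Logistic gate sharpened by GAIN so that it approximates u(v)."""
    return logistic(GAIN * v)


err_step = max(abs(two_layer(target, x, DELTA, D, unit_step) - target(x))
               for x in grid)
err_soft = max(abs(two_layer(target, x, DELTA, D, soft_gate) - target(x))
               for x in grid)

print("max error with step gates:    ", err_step)
print("max error with logistic gates:", err_soft)
```

Shrinking `DELTA` (and raising `D` and `GAIN` to match) should tighten both errors, which is the sense in which enough hidden nodes give an arbitrarily accurate approximation.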