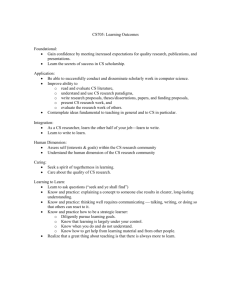

Embedding Metric Spaces in Their Intrinsic Dimension


Ittai Abraham, Yair Bartal*, Ofer Neiman

The Hebrew University

* also Caltech

Embedding Metric Spaces

► Metric spaces $(X,d_X)$, $(Y,d_Y)$.

► An embedding is a function $f : X \to Y$.

► The distortion is the minimal $\alpha$ such that, for all $x,y \in X$,

$d_X(x,y) \le d_Y(f(x),f(y)) \le \alpha \cdot d_X(x,y)$
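
For a finite metric space the distortion can be computed directly from the definition. The following is a minimal sketch, not taken from the talk: the function name `distortion` and the 4-cycle example are illustrative assumptions, and the embedding is allowed to be rescaled so that it becomes non-contracting.

```python
from itertools import combinations

def distortion(points, d_x, d_y, f):
    """Distortion of f up to rescaling: (max expansion) * (max contraction)."""
    expansion = 0.0    # largest ratio d_Y(f(x), f(y)) / d_X(x, y)
    contraction = 0.0  # largest ratio d_X(x, y) / d_Y(f(x), f(y))
    for x, y in combinations(points, 2):
        dx, dy = d_x(x, y), d_y(f(x), f(y))
        if dy == 0:
            return float("inf")  # f collapses two distinct points
        expansion = max(expansion, dy / dx)
        contraction = max(contraction, dx / dy)
    return expansion * contraction

# Illustrative example: the 4-cycle (shortest-path metric) mapped onto the line.
cycle = [0, 1, 2, 3]
d_cycle = lambda a, b: min(abs(a - b), 4 - abs(a - b))
d_line = lambda a, b: abs(a - b)
alpha = distortion(cycle, d_cycle, d_line, lambda v: v)
print(alpha)
```

Here the pair (0, 3) is adjacent on the cycle but far apart on the line, so that pair alone determines the distortion.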

Intrinsic Dimension

► Doubling constant: the minimal λ such that any ball of radius r>0 can be covered by λ balls of radius r/2.

► Doubling dimension: $\dim(X) = \log_2 \lambda$.

► The problem: the relation between metric dimension and intrinsic dimension.
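
To make the definition concrete, the sketch below estimates the doubling constant of a finite point set. Computing the minimal cover exactly is expensive, so this uses a greedy cover and therefore returns only an upper bound on λ; the helper names and the choice to center the small balls at points of X are assumptions made for illustration.

```python
import math

def ball(points, dist, center, radius):
    """Closed ball B(center, radius) restricted to the point set."""
    return [p for p in points if dist(center, p) <= radius]

def greedy_half_cover(points, dist, center, radius):
    """Number of radius/2 balls a greedy pass uses to cover B(center, radius)."""
    uncovered = ball(points, dist, center, radius)
    count = 0
    while uncovered:
        c = uncovered[0]  # pick any uncovered point as the next center
        uncovered = [p for p in uncovered if dist(c, p) > radius / 2]
        count += 1
    return count

def doubling_upper_bound(points, dist):
    """Greedy upper bound on the doubling constant and on log2 of it."""
    # Only radii equal to pairwise distances need checking: between two
    # consecutive distances the ball is unchanged while the half-radius grows.
    radii = {dist(x, y) for x in points for y in points if x != y}
    lam = max(greedy_half_cover(points, dist, x, r) for x in points for r in radii)
    return lam, math.log2(lam)

line = list(range(8))
lam, dim = doubling_upper_bound(line, lambda a, b: abs(a - b))
print(lam, dim)
```

Because the cover is greedy, the reported value can exceed the true doubling constant; it is meant only as a quick sanity check on small examples.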

Previous Results

► Given a λ-doubling finite metric space (X,d) and 0<γ<1, its snowflake version $(X,d^\gamma)$ can be embedded into $L_p$ with distortion and dimension depending only on λ [Assouad 83].

► Conjecture (Assouad): this holds for γ=1.

► Disproved by Semmes.

► A lower bound of $\Omega(\sqrt{\log n})$ on the distortion for $L_2$, with a matching upper bound [GKL 03].

Rephrasing the Question

► Is there a low-distortion embedding for a finite metric space in its intrinsic dimension?

Main result: Yes.

Main Results

► Any finite metric space (X,d) embeds into $L_p$:

With distortion $O(\log^{1+\theta} n)$ and dimension $O(\dim(X)/\theta)$, for any θ>0.

With constant average distortion and dimension $O(\dim(X)\log(\dim(X)))$.

Additional Result

► Any finite metric space (X,d) embeds into $L_p$:

► With distortion $O\big(\log^{1/p} n \cdot ((\log n)/D)^{1-1/p}\big)$ and dimension $\tilde{O}\big(D \cdot \dim(X) \cdot \log((\log n)/D)\big)$ (for all $D \le (\log n)/\dim(X)$).

In particular, $\tilde{O}(\log^{2/3} n)$ distortion and dimension into $L_2$.

Matches the best known distortion result [KLMN 03] for $D = (\log n)/\dim(X)$, with dimension $O(\log n \cdot \log(\dim(X)))$.
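
As a quick check of the "in particular" claim, here is a short worked instance. It assumes the tradeoff bounds read as distortion $O(\log^{1/p} n \cdot ((\log n)/D)^{1-1/p})$ and dimension $\tilde{O}(D \cdot \dim(X))$, takes p=2 and dim(X)=O(1), and picks $D = \log^{2/3} n$; that choice of D is an illustrative assumption, not stated on the slide.

```latex
\begin{align*}
\text{distortion} &= O\!\left(\log^{1/2} n \cdot \left(\frac{\log n}{D}\right)^{1/2}\right)
  = O\!\left(\frac{\log n}{\sqrt{D}}\right)
  = O\!\left(\frac{\log n}{\log^{1/3} n}\right)
  = O\!\left(\log^{2/3} n\right),\\
\text{dimension} &= \tilde{O}\!\left(D \cdot \dim(X)\right)
  = \tilde{O}\!\left(\log^{2/3} n\right).
\end{align*}
```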

Distance Oracles

► Compact data structure that approximately answers distance queries.

► For general n-point metrics:

[TZ 01] O(k) stretch with $O(kn^{1/k})$ bits per label.

► For a finite λ-doubling metric:

O(1) average stretch with $\tilde{O}(\log \lambda)$ bits per label.

O(k) stretch with $\tilde{O}(\lambda^{1/k})$ bits per label.

Follows from a variation on the "snowflake" embedding (Assouad).

First Result

► Thm: For any finite λ-doubling metric space (X,d) on n points and any 0<θ<1 there exists an embedding of (X,d) into $L_p$ with distortion $O(\log^{1+\theta} n)$ and dimension $O((\log \lambda)/\theta)$.

Probabilistic Partitions

► $P=\{S_1,S_2,\dots,S_t\}$ is a partition of X if $\forall i \ne j : S_i \cap S_j = \emptyset$ and $\bigcup_i S_i = X$.

► P(x) is the cluster containing x.

► P is Δ-bounded if $\mathrm{diam}(S_i) \le \Delta$ for all i.

► A probabilistic partition P is a distribution over a set of partitions.

► A Δ-bounded P is η-padded if for all $x \in X$:

$\Pr_P[B(x,\eta\Delta) \subseteq P(x)] \ge 1/2$
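
The definitions above can be exercised on a small example. The sketch below samples a Δ-bounded partition by simple ball carving (random center order, random radius in [Δ/4, Δ/2], in the spirit of the partitions cited as [CKR01]) and estimates the padding probability empirically. It is a simplified stand-in, not the uniformly padded local partition constructed in the talk, and all function names are assumptions.

```python
import random

def random_partition(points, dist, delta, rng):
    """Ball carving: each point joins the first center (in random order) within the radius."""
    radius = rng.uniform(delta / 4, delta / 2)  # cluster diameter <= 2 * radius <= delta
    order = list(points)
    rng.shuffle(order)
    cluster_of = {}
    for center in order:
        for p in points:
            if p not in cluster_of and dist(center, p) <= radius:
                cluster_of[p] = center
    return cluster_of  # maps x to the center that identifies its cluster P(x)

def padding_probability(points, dist, delta, eta, x, trials, rng):
    """Empirical estimate of Pr[B(x, eta * delta) is contained in P(x)]."""
    near = [p for p in points if dist(x, p) <= eta * delta]
    hits = 0
    for _ in range(trials):
        cluster_of = random_partition(points, dist, delta, rng)
        hits += all(cluster_of[p] == cluster_of[x] for p in near)
    return hits / trials

pts = list(range(20))
d = lambda a, b: abs(a - b)
rng = random.Random(0)
sample = random_partition(pts, d, 8, rng)
estimate = padding_probability(pts, d, 8, 0.05, 10, 200, rng)
```

Every point is assigned because it is within distance 0 of itself when its own turn as a center comes, so the result is always a partition of the whole set.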

η-padded Partitions

► The parameter η determines the quality of the embedding.

► [Bartal 96]: η=Ω(1/log n) for any metric space.

► [CKR01+FRT03]: Improved partitions with η(x)=1/log(ρ(x,Δ)).

► [GKL 03]: η=Ω(1/log λ) for λ-doubling metrics.

► [KLMN 03]: Used to embed general + doubling metrics into $L_p$: distortion $O((\log \lambda)^{1-1/p}(\log n)^{1/p})$, dimension $O(\log^2 n)$.

The local growth rate of x at radius r is:

$\rho(x,r) = \dfrac{|B(x,64r)|}{|B(x,r/64)|}$
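
The local growth rate is a simple ratio of ball sizes and is easy to compute for a finite point set. A minimal sketch, assuming closed balls and using hypothetical helper names:

```python
def ball_size(points, dist, x, r):
    """Number of points in the closed ball B(x, r)."""
    return sum(1 for p in points if dist(x, p) <= r)

def local_growth_rate(points, dist, x, r):
    """rho(x, r) = |B(x, 64 r)| / |B(x, r / 64)|."""
    return ball_size(points, dist, x, 64 * r) / ball_size(points, dist, x, r / 64)

# Illustrative example: 1000 evenly spaced points on a line.
pts = list(range(1000))
rho = local_growth_rate(pts, lambda a, b: abs(a - b), 500, 64)
print(rho)
```

The denominator is never zero because x always belongs to its own ball.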

Uniform Local Padding Lemma

► A local padding: the padding probability for x is independent of the partition outside B(x,Δ).

► A uniform padding: the padding parameter η(x) is equal for all points in the same cluster.

► There exists a Δ-bounded prob. partition with local uniform padding parameter η(x):

η(x) = Ω(1/log λ)

η(x) = Ω(1/log(ρ(x,Δ)))

[Figure: clusters C1 and C2 containing points v1, v2, v3, with padding parameters η(v1) and η(v3).]

Plan:

► A simpler result of:

Distortion O(log n).

Dimension O(loglog n·log λ).

► Obtaining lower dimension of O(log λ).

► Brief overview of:

Constant average distortion.

Distortion-dimension tradeoff.

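Embedding into one dimension

► For each scale $i \in \mathbb{Z}$, create a uniformly padded local probabilistic $8^i$-bounded partition $P_i$.

► For each cluster choose $\sigma_i(S) \sim \mathrm{Ber}(½)$ i.i.d.

$f_i(x) = \sigma_i(P_i(x)) \cdot \min\{\eta_i^{-1}(x) \cdot d(x, X \setminus P_i(x)),\ 8^i\}$

$f(x) = \sum_i f_i(x)$

► Deterministic upper bound:

$|f(x)-f(y)| \le O(\log n \cdot d(x,y))$,

using $\sum_i \eta_i^{-1}(x) = O\big(\sum_i \log \rho(x, 8^i)\big) = O(\log n)$.

[Figure: a point x inside its cluster $P_i(x)$, with the distance $d(x, X \setminus P_i(x))$ to the rest of the space.]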
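
The construction of a single coordinate can be sketched for points on the real line. This toy version makes several simplifying assumptions that are not in the talk: the partition at scale i is a randomly shifted grid of intervals of width $8^i$, the padding parameter η is one fixed constant instead of the point-dependent $\eta_i(x)$, and only finitely many scales are used.

```python
import random

def one_coordinate(points, scales, eta, rng):
    """f(x) = sum_i sigma_i(P_i(x)) * min(d(x, X minus P_i(x)) / eta, 8**i)."""
    f = {x: 0.0 for x in points}
    for i in scales:
        width = 8.0 ** i
        shift = rng.uniform(0, width)
        # P_i(x): index of the shifted interval of width 8**i containing x
        cell = {x: (x + shift) // width for x in points}
        # sigma_i(S) ~ Ber(1/2), drawn independently for every cluster
        sigma = {c: rng.randint(0, 1) for c in set(cell.values())}
        for x in points:
            outside = [abs(x - y) for y in points if cell[y] != cell[x]]
            d_out = min(outside) if outside else float("inf")
            f[x] += sigma[cell[x]] * min(d_out / eta, width)
    return f

pts = [0.0, 1.0, 2.5, 7.0, 20.0, 64.0, 65.0, 300.0]
scales = range(0, 4)
eta = 0.25
f = one_coordinate(pts, scales, eta, random.Random(1))
```

With a constant η the deterministic upper bound becomes $|f(x)-f(y)| \le (\text{number of scales}/\eta) \cdot d(x,y)$: within a cluster each term is $1/\eta$-Lipschitz, and across clusters each term is at most $d(x,y)/\eta$ because y lies outside $P_i(x)$.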

Lower Bound - Overview

► Create an $r_i$-net for all integers i.

► Define a success event for a pair (u,v) in the $r_i$-net with $d(u,v) \approx 8^i$: having contribution $> 8^i/4$ for many coordinates.

► In every coordinate, a constant probability of having contribution for a net pair (u,v).

► Use the Lovász Local Lemma.

► Show lower bound for other pairs.
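
An r-net can be built greedily: scan the points and keep each one that is at distance at least r from everything kept so far. A minimal sketch, with `greedy_net` as an illustrative name:

```python
def greedy_net(points, dist, r):
    """Greedy r-net: net points are pairwise >= r apart, every point is < r from the net."""
    net = []
    for p in points:
        if all(dist(p, q) >= r for q in net):
            net.append(p)
    return net

pts = list(range(30))
d = lambda a, b: abs(a - b)
net = greedy_net(pts, d, 4)
print(net)
```

A point is skipped only when some net point is already closer than r, which gives the covering property; the separation property holds by construction.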

Lower Bound – Other Pairs?

► x,y some pair, $d(x,y) \approx 8^i$. u,v the nearest points in the $r_i$-net to x,y.

► Suppose that $|f(u)-f(v)| > 8^i/4$.

► We want to choose the net such that $|f(u)-f(x)| < 8^i/16$: choose $r_i = 8^i/(16 \cdot \log n)$.

► Using the upper bound, $|f(u)-f(x)| \le \log n \cdot d(u,x) \le 8^i/16$.

► $|f(x)-f(y)| \ge |f(u)-f(v)| - |f(u)-f(x)| - |f(v)-f(y)| \ge 8^i/4 - 2 \cdot 8^i/16 = 8^i/8$.

[Figure: points x, y with their nearest net points u, v at distance at most $8^i/(16 \log n)$.]

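Lower Bound:

► $r_i$-net pair (u,v). Can assume that $8^i \approx d(u,v)/4$.

► It must be that $P_i(u) \ne P_i(v)$.

► With probability ½: $d(u, X \setminus P_i(u)) \ge \eta_i 8^i$.

► With probability ¼: $\sigma_i(P_i(u)) = 1$ and $\sigma_i(P_i(v)) = 0$.

$f_i(u) - f_i(v) \ge \eta_i^{-1}(u) \cdot \eta_i(u) \cdot 8^i - 0 = 8^i$

[Figure: net points u and v, with the scale $8^i$ marked.]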

Lower Bound – Net Pairs

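► $d(u,v) \approx 8^i$. Consider $R = \big|\sum_{j>i} (f_j(u) - f_j(v))\big|$.

► If $R < 8^i/2$: with prob. 1/8, $f_i(u) - f_i(v) \ge 8^i$.

► If $R \ge 8^i/2$: with prob. 1/4, $f_i(u) = f_i(v) = 0$.

► In any case $\big|\sum_{j \ge i} (f_j(u) - f_j(v))\big| \ge 8^i/2$.

► The good event for a pair in scale i depends on higher scales, but has constant probability given any outcome for them.

Oblivious to lower scales.

Lower scales do not matter

$\big|\sum_{j<i} (f_j(u) - f_j(v))\big| \le \sum_{j<i} 8^j \le 8^i/4$

[Figure: net pair u, v with the padded radius $\eta_i(u) \cdot 8^i$ around u.]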
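
The bounds for scales at and above i and for scales below i combine into the contribution required of a net pair. A short derivation, assuming that $|f_j(u)-f_j(v)| \le 8^j$ for every scale j (each $f_j$ takes values in $[0, 8^j]$) and that the good event gives at least $8^i/2$ from scales $j \ge i$:

```latex
\begin{align*}
\Big|\sum_{j<i} \big(f_j(u)-f_j(v)\big)\Big|
  &\le \sum_{j<i} 8^j = \frac{8^i}{7} \le \frac{8^i}{4},\\
|f(u)-f(v)|
  &\ge \Big|\sum_{j\ge i} \big(f_j(u)-f_j(v)\big)\Big|
     - \Big|\sum_{j<i} \big(f_j(u)-f_j(v)\big)\Big|
  \ge \frac{8^i}{2} - \frac{8^i}{4} = \frac{8^i}{4}.
\end{align*}
```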

Local Lemma

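► Lemma (Lovász): Let $A_1,\dots,A_n$ be "bad" events. Let G=(V,E) be a directed graph with vertices corresponding to events, with out-degree at most d. Let $c : V \to \mathbb{N}$ be a "rating" function of events such that if $(A_i,A_j) \in E$ then $c(A_i) \ge c(A_j)$. If

$\Pr\big[A_i \mid \bigwedge_{j \in Q} \neg A_j\big] \le p$ for all $Q \subseteq \{ j : (A_i,A_j) \notin E \wedge c(A_i) \ge c(A_j) \}$

and

$ep(d+1) \le 1$

then

$\Pr\big[\bigwedge_{j \in [n]} \neg A_j\big] > 0$

Rating = radius of scale.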
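
The numeric condition of the lemma, $ep(d+1) \le 1$, is easy to check for given parameters. A minimal sketch with an illustrative function name:

```python
import math

def lll_condition_holds(p, d):
    """True when e * p * (d + 1) <= 1, the numeric hypothesis of the local lemma."""
    return math.e * p * (d + 1) <= 1

print(lll_condition_holds(0.01, 10))  # e * 0.01 * 11 is about 0.30
print(lll_condition_holds(0.1, 10))   # e * 0.1 * 11 is about 2.99
```

This only checks the inequality on p and d; the conditional-probability hypothesis on the events has to be argued separately.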

Lower Bound – Net Pairs

► A success event E(u,v) for a net pair u,v: there is contribution from at least 1/16 of the coordinates.

► Locality of partition: the net pair depends only on "nearby" points, with distance $< 8^i$.

► Doubling constant λ, and $r_i \approx 8^i/\log n$: there are at most $\lambda^{\log\log n}$ such points, so $d = \lambda^{\log\log n}$.

► Taking D=O(log λ·loglog n) coordinates will give roughly $e^{-D} = \lambda^{-\log\log n}$ failure probability.

► By the local lemma, there exists an embedding such that E(u,v) holds for all net pairs.
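
To see how the numbers fit together, the sketch below takes the slide's rough estimates at face value (dependency degree $d = \lambda^{\log\log n}$ and failure probability about $e^{-D}$) and finds the smallest D satisfying $e \cdot e^{-D} \cdot (d+1) \le 1$. The constants, the base-2 logarithms, and the function name are assumptions for illustration only.

```python
import math

def coordinates_needed(lam, n):
    """Smallest integer D with e * exp(-D) * (d + 1) <= 1, where d = lam ** loglog(n)."""
    loglog_n = math.log2(math.log2(n))   # base-2 logs are an assumption
    d = lam ** loglog_n                  # rough bound on the dependency degree
    return math.ceil(1 + math.log(d + 1)), d

D, d = coordinates_needed(lam=4, n=2 ** 16)
print(D, d)
```

Since $\ln(d+1) \approx \ln\lambda \cdot \log\log n$, the value of D grows like log λ·loglog n, in line with the dimension stated on the slide.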

Obtaining Lower Dimension

► To use the LLL, the probability to fail in more than 15/16 of the coordinates must be $< \lambda^{-\log\log n}$.

► Instead of taking more coordinates, increase the success probability in each coordinate.

► If the probability to obtain contribution in each coordinate is $> 1 - 1/\log n$, it is enough to take O(log λ) coordinates.

Similarly, if the failure prob. in each coordinate is $< \log^{-\theta} n$, it is enough to take O((log λ)/θ) coordinates.

Using Several Scales

► Create nets only every θloglog n scales.

► A pair (x,y) in scale i' (i.e. $d(x,y) \approx 8^{i'}$) will find a close net pair in the nearest smaller scale i.

► $8^{i'} < \log^\theta n \cdot 8^i$, so lose a factor of $\log^\theta n$ in the distortion.

► Consider scales i-θloglog n,…,i.

[Figure: scale axis marking i-θloglog n, i, i' and i+θloglog n, with consecutive nets θloglog n scales apart.]

Using Several Scales

► Take u,v in the net with $d(u,v) \approx 8^i$.

► A success in one of these scales will give contribution $> 8^{i-\theta\log\log n} = 8^i/\log^\theta n$.

Lose a factor of $\log^\theta n$ in the distortion.

► The success for u,v in each scale is:

Unaffected by events in higher scales.

Independent of events "far away" in the same scale.

Oblivious to events in lower scales.

► Probability that all scales failed $< (7/8)^{\theta\log\log n}$.

► Take only D=O((log λ)/θ) coordinates.

[Figure: scale axis marking i-θloglog n, i and i+θloglog n.]

Constant Average Distortion

► Scaling distortion: for every 0<ε<1, at most $\varepsilon \cdot n^2$ pairs with distortion > polylog(1/ε).

► Upper bound of log(1/ε), by standard techniques.

► Lower bound:

Define a net for any scale i>0 and $\varepsilon = \exp\{-8^j\}$.

Every pair (x,y) needs contribution that depends on:

► d(x,y).

► The ε-value of x,y.

Sieve the nets to avoid dependencies between different scales and different values of ε.

Show that if a net pair succeeded, the points near it will also succeed.
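
Average distortion and the scaling-distortion profile can both be read off the list of per-pair distortions. The sketch below assumes a non-contracting embedding, takes the average over all unordered pairs, and uses an 8-cycle mapped onto the line as an illustrative example; the function names are hypothetical.

```python
from itertools import combinations

def pair_distortions(points, d_x, d_y, f):
    """Per-pair expansion d_Y(f(x), f(y)) / d_X(x, y), for a non-contracting f."""
    return [d_y(f(x), f(y)) / d_x(x, y) for x, y in combinations(points, 2)]

def average_distortion(ratios):
    return sum(ratios) / len(ratios)

def fraction_above(ratios, threshold):
    """Fraction of pairs whose distortion exceeds the threshold."""
    return sum(r > threshold for r in ratios) / len(ratios)

# Illustrative example: the 8-cycle (shortest-path metric) mapped onto the line.
cycle = list(range(8))
d_cycle = lambda a, b: min(abs(a - b), 8 - abs(a - b))
d_line = lambda a, b: abs(a - b)
ratios = pair_distortions(cycle, d_cycle, d_line, lambda v: v)
print(max(ratios), average_distortion(ratios), fraction_above(ratios, 1.0))
```

In this example the worst-case distortion is large while most pairs are preserved exactly, which is the kind of gap that average and scaling distortion are meant to capture.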

Constant Average Distortion

► Lower bound cont…

The local lemma graph depends on ε; use the general case of the local lemma.

For a net pair (u,v) in scale $8^i$, consider scales:

$8^{i-\log\log(1/\varepsilon)},\dots,8^{i-\log\log(1/\varepsilon)/2}$.

Requires dimension O(log λ·loglog λ).

The net depends on λ.

Distortion-Dimension Tradeoff

For $D \le (\log n)/\log \lambda$:

► Distortion: $O\big(\log^{1/p} n \cdot ((\log n)/D)^{1-1/p}\big)$

► Dimension: $\tilde{O}\big(D \cdot \log \lambda \cdot \log((\log n)/D)\big)$

► Instead of assigning all scales to a single coordinate:

For each point x:

Divide the scales into D bunches of coordinates, in each

$\sum_{i \in \mathrm{Bunch}} \eta_i^{-1}(x) \le (\log n)/D$

Create a hierarchical partition.

Upper bound needs the x,y scales to be in the same coordinates.

Conclusion

► Main result: Embedding metrics into their intrinsic dimension.

► Open problem: Best distortion in dimension O(log λ).

For p>2 there is a doubling metric space requiring dimension at least Ω(log n) for embedding into $L_p$ with distortion $O(\log^{1/p} n)$.

► Dimension reduction in $L_2$: for a doubling subset of $L_2$, is there an embedding into $L_2$ with O(1) distortion and dimension O(dim(X))?