rec04


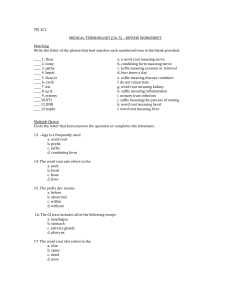

Comp. Genomics, Recitation 3 (week 4), 26/3/2009
Multiple Hypothesis Testing + Suffix Trees
Based in part on slides by William Stafford Noble

What p-value is significant?
• The most common thresholds are 0.01 and 0.05.
• A threshold of 0.05 means that, when the null hypothesis is true, a result this extreme arises by chance only 5% of the time. Loosely, you are "95% sure" that the result is significant.
• Is 95% enough? It depends upon the cost associated with making a mistake.
• Examples of costs:
  • Doing expensive wet lab validation.
  • Making clinical treatment decisions.
  • Misleading the scientific community.
• Most sequence analysis uses more stringent thresholds because the p-values are not very accurate.

Multiple testing
• Say that you perform a statistical test with a 0.05 threshold, but you repeat the test on twenty different observations.
• Assume that all of the observations are explainable by the null hypothesis.
• What is the chance that at least one of the observations will receive a p-value less than 0.05?
  • Pr(making a mistake) = 0.05
  • Pr(not making a mistake) = 0.95
  • Pr(not making any mistake) = $0.95^{20}$ = 0.358
  • Pr(making at least one mistake) = 1 - 0.358 = 0.642
• There is a 64.2% chance of making at least one mistake.

Bonferroni correction
• Assume that individual tests are independent. (Is this a reasonable assumption?)
• Divide the desired p-value threshold by the number of tests performed.
• For the previous example, 0.05 / 20 = 0.0025.
  • Pr(making a mistake) = 0.0025
  • Pr(not making a mistake) = 0.9975
  • Pr(not making any mistake) = $0.9975^{20}$ = 0.9512
  • Pr(making at least one mistake) = 1 - 0.9512 = 0.0488
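
The multiple-testing arithmetic for twenty tests at a 0.05 threshold, with and without the Bonferroni-corrected threshold of 0.0025, can be reproduced with a short Python sketch. It assumes the tests are independent and that every null hypothesis is true, as the slides do; the function names are illustrative and do not come from the slides.

```python
def family_wise_error_rate(threshold: float, n_tests: int) -> float:
    """Chance that at least one of n_tests independent tests gets a
    p-value below threshold when every null hypothesis is true."""
    return 1.0 - (1.0 - threshold) ** n_tests


def bonferroni_threshold(alpha: float, n_tests: int) -> float:
    """Per-test threshold: the desired threshold divided by the test count."""
    return alpha / n_tests


# Twenty tests, each at the uncorrected 0.05 threshold.
uncorrected = family_wise_error_rate(0.05, 20)

# The same twenty tests at the Bonferroni threshold 0.05 / 20 = 0.0025.
corrected = family_wise_error_rate(bonferroni_threshold(0.05, 20), 20)

print(f"{uncorrected:.3f}")  # 0.642
print(f"{corrected:.4f}")    # 0.0488
```

The corrected family-wise error rate lands just under the desired 0.05, which is the point of dividing the threshold by the number of tests.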

Database searching
• Say that you search the non-redundant protein database at NCBI, containing roughly one million sequences. What p-value threshold should you use?
• Say that you want to use a conservative p-value of 0.001.
• Recall that you would observe such a p-value by chance approximately once in every 1,000 comparisons against a random database.
• A Bonferroni correction would suggest using a p-value threshold of 0.001 / 1,000,000 = 0.000000001 = $10^{-9}$.

E-values
• A p-value is the probability of making a mistake on a single test.
• The E-value is a version of the p-value that is corrected for multiple tests; it is essentially the converse of the Bonferroni correction.
• The E-value is computed by multiplying the p-value by the size of the database.
• The E-value is the expected number of times that the given score would appear in a random database of the given size.
• Thus, for a p-value of 0.001 and a database of 1,000,000 sequences, the corresponding E-value is 0.001 × 1,000,000 = 1,000.

E-value vs. Bonferroni
• Among n repetitions of a test you observe a particular p-value p. You want a significance threshold α.
• Bonferroni: Divide the significance threshold by n.
  • p < α/n.
• E-value: Multiply the p-value by n.
  • pn < α.
* BLAST actually calculates E-values in a slightly more complex way.

False discovery rate
• The false discovery rate (FDR) is the percentage of examples above a given position in the ranked list that are expected to be false positives.
• Example: 5 FP, 13 TP, 33 TN, 5 FN. FDR = FP / (FP + TP) = 5/18 = 27.8%

Bonferroni vs. FDR
• Bonferroni controls the family-wise error rate; i.e., the probability of at least one false positive.
• FDR is the proportion of false positives among the examples that are flagged as true.

Controlling the FDR
• Order the unadjusted p-values $p_1 \le p_2 \le \dots \le p_m$.
• To control FDR at level α, let $j^* = \max\{\, j : p_j \le \frac{j}{m}\,\alpha \,\}$.
• Reject the null hypothesis for j = 1, …, j*.
• This approach is conservative if many examples are true.
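
The step-up rule for controlling the FDR (sort the p-values, find the largest rank j with p_j ≤ (j/m)·α, reject everything up to that rank) can be sketched in a few lines of Python. This is a minimal illustration, not the Q-value software; the function name and the p-values in the usage example are made up for demonstration.

```python
from typing import List


def benjamini_hochberg(p_values: List[float], alpha: float) -> List[bool]:
    """Return, for each input p-value, whether its null hypothesis is
    rejected when controlling the FDR at level alpha."""
    m = len(p_values)
    # Indices of the p-values in increasing order of p-value.
    order = sorted(range(m), key=lambda i: p_values[i])

    # j* is the largest rank j (1-based) with p_j <= (j / m) * alpha.
    j_star = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank * alpha / m:
            j_star = rank

    # Reject the hypotheses ranked 1 .. j*, reported in the input order.
    rejected = [False] * m
    for idx in order[:j_star]:
        rejected[idx] = True
    return rejected


# Made-up p-values, deliberately unsorted.
decisions = benjamini_hochberg([0.2, 0.005, 0.035, 0.03], alpha=0.05)
print(decisions)  # [False, True, True, True]
```

In this example the sorted p-values are 0.005, 0.03, 0.035, 0.2 and the thresholds are 0.0125, 0.025, 0.0375, 0.05. The value 0.03 misses its own threshold, but it is still rejected because the next-ranked value 0.035 passes, so j* = 3.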
(Benjamini & Hochberg, 1995)

Q-value software
http://faculty.washington.edu/~jstorey/qvalue/

Significance Summary
• Selecting a significance threshold requires evaluating the cost of making a mistake.
• Bonferroni correction: Divide the desired p-value threshold by the number of statistical tests performed.
• The E-value is the expected number of times that the given score would appear in a random database of the given size.

Longest common substring
• Input: two strings S1 and S2
• Output: the longest substring S common to S1 and S2
• Example:
  • S1 = common-substring
  • S2 = common-subsequence
  • Then S = common-subs
• Build a generalized suffix tree for S1 and S2
• Mark each internal node v with a 1 (2) if there is a leaf in the subtree of v representing a suffix from S1 (S2)
• The path-label of any internal node marked both 1 and 2 is a substring common to both S1 and S2, and the longest such string is the LCS.
• Example: S1$ = xabxac$, S2$ = abx$, S = abx

Lowest common ancestor
• A lot more can be gained from the suffix tree if we preprocess it so that we can answer LCA queries on it.
• The path-label of the LCA of two leaves is the longest common prefix (LCP) of the two corresponding suffixes.
[Figure: generalized suffix tree with edge labels over a, b, $, # and numbered leaves]

Finding maximal palindromes
• A palindrome: cbaabc, "A Santa dog lived as a devil god at NASA", "Tel Aviv erases a revival: E.T." (http://www.palindromelist.com/)
• Want to find all maximal palindromes in a string S
• Let S = cbaaba
• Observation: The maximal palindrome with center between i-1 and i is given by the LCA of the suffix at position i of S and the suffix at position m-i+1 of Sr
• Prepare a generalized suffix tree for S = cbaaba$ and Sr = abaabc#
• For every i find the LCA of suffix i of S and suffix m-i+1 of Sr
[Figure: generalized suffix tree for S = cbaaba$ and Sr = abaabc#, with leaves numbered 1 to 7 for each string]

Maximum k-cover substring
• Input: m sequences S1, S2, S3, …, Sm
• Problem: Find t, the longest substring common to at least k of the strings
• Solution:
  • Build a GST for the m strings
  • Update "string depths"
  • Traverse the tree (what order?)
  • Update a 0/1 vector of appearance in the m strings for every node
  • Find the deepest node with at least k "1"s in its vector

Lexicographically smallest cleavage
• Input: Circular string S
• Problem: Find an index i such that S[i..n]+S[1..i-1] is "smallest" lexicographically
• Solution:
  • Cut S at an arbitrary site to obtain a linear string, and concatenate two copies: SS
  • Build a suffix tree
  • Traverse the suffix tree (How?)
    • Select the "smallest" branching option
    • What about the "stop sign" $?
    • Make $ the largest lexicographically
  • Depth n is always found (why?)

Finding overrepresented substrings
• Input: String S
• Problem: Find all the substrings of S which are "overrepresented"
• Overrepresented: decided by a function f(length, number) of the substring's length and its number of occurrences
• Solution:
  • Build a suffix tree for S
  • Compute the number of leaves below every node (How?)
  • Compute the string depth of every node (How?)
  • Check f(length, number) at every node
  • Why is checking nodes enough?

Implementation Issues
• Theoretical time/space: O(n)
• Why is practical space important?
  • Problem: when the size of the alphabet grows
  • Large sequences are difficult to store entirely in memory
  • A lot of paging significantly harms practical runtime
• Implementing ST to reduce practical space use can be a serious concern.
• Main design issue: how to represent and search the branches going out of the nodes
• Practical design: must balance between space and speed
• Basic choices to represent branches:
  • An array of size Θ(|Σ|) at each non-leaf node v
  • A linked list at node v of characters that appear at the beginning of the edge-labels out of v.
    • If kept in sorted order it reduces the average time to search for a given character
    • In the worst case, it adds time |Σ| to every node operation.
    • If the number of children of v is large, then little space is saved over the array while noticeably degrading performance
  • A balanced tree implements the list at node v
    • Additions and searches take O(log k) time and O(k) space, where k is the number of children of v. This alternative makes sense only when k is fairly large.
  • A hashing scheme. The challenge is to find a scheme balancing space with speed. For large trees and alphabets hashing is very attractive, at least for some of the nodes
• When m and |Σ| are large enough, the best design is probably a mixture of the above choices.
  • Nodes near the root of the tree tend to have the most children, so arrays are a sensible choice at those nodes.
  • For nodes in the middle of a suffix tree, hashing or balanced trees may be the best choice.
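
As a rough illustration of the hashing choice for outgoing branches, the sketch below stores each node's children in a Python dictionary and uses the resulting structure to solve the longest common substring problem from earlier in the recitation. It is only a sketch under simplifying assumptions: it builds an uncompressed generalized suffix trie in quadratic time and space, not a linear-time suffix tree, and all class and function names are hypothetical.

```python
from typing import Dict, List, Set


class Node:
    """Trie node whose outgoing branches live in a hash map keyed by
    the next character (the "hashing scheme" option)."""

    def __init__(self) -> None:
        self.children: Dict[str, "Node"] = {}
        self.sources: Set[int] = set()  # input strings with a suffix through here


def build_generalized_suffix_trie(strings: List[str]) -> Node:
    """Insert every suffix of every string, marking each visited node
    with the index of the string the suffix came from."""
    root = Node()
    for source, text in enumerate(strings):
        for start in range(len(text)):
            node = root
            for ch in text[start:]:
                child = node.children.get(ch)
                if child is None:
                    child = Node()
                    node.children[ch] = child
                child.sources.add(source)
                node = child
    return root


def longest_common_substring(strings: List[str]) -> str:
    """Path-label of the deepest node marked by every input string."""
    root = build_generalized_suffix_trie(strings)
    everyone = set(range(len(strings)))
    best = ""
    stack = [(root, "")]  # iterative depth-first traversal
    while stack:
        node, label = stack.pop()
        for ch, child in node.children.items():
            if child.sources == everyone:  # marked by all strings
                child_label = label + ch
                if len(child_label) > len(best):
                    best = child_label
                stack.append((child, child_label))
    return best


print(longest_common_substring(["common-substring", "common-subsequence"]))
# common-subs
```

A dictionary gives expected constant-time lookup per character without reserving |Σ| slots at every node, which is the space and speed trade-off discussed above; a real implementation would compress unary paths into edge labels and might still use arrays near the root.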