Combining tree results using several trees: Tree 1 (weight .2) Tree 2

advertisement

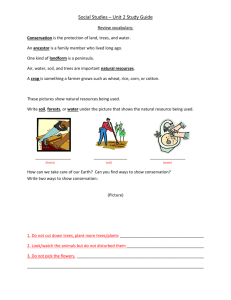

Combining tree results using several trees:

Tree 1 (weight .2)

Tree 2 (weight .3)

X1

X3

Pr{Y1 =1}

Yi

1

0

1

1

X1i

18

4

3

11

Tree 3 (weight .5)

X4

X2

X1

.6

Weights

X2i X3i X4i X5i

3 5 7 4

16 12 3 8

8 9 12 2

10 2 7 12

X2

.3

.2 .3

p1 p2

.6 .3

.4 .4

.2 .1

.9 .8

.5

p3

.8

.5

.3

.7

X1

X5

X1

.8

Y-p

combined Decsn. Error

.61

1

1-.61

.45

0

0-.45

.22

0

1-.22

.77

1

1-.77

.

Misclass

0

0

1

0

The error sum of squares for this tree is .392+…+.232 and the misclassification rate is ¼. The blue dot in

each tree indicates the leaf in which observation 1 lands, based on its X values (features). In tree 1, it

lands in a leaf with 60% 1s so the probability of 1 is 0.6. The combined result for observation Y1 is

.1(.6)+.3(.3)+.5(.8)=.61. The weights for each tree are related to the tree’s accuracy with more accurate

trees getting more weight. Trees with larger error sums of squares or misclassification rates would get

less weight. Bagging and random forests use equal weights but boosting uses weights based on

accuracy.

A program to compute the above table:

data a;

input p1 p2 p3 Y;

combinedp = .2*p1+.3*p2+.5*p3;

D = (combinedp>1/2);

misclass=0; if D ne Y then misclass=1;

error = (Y-combinedp);

Sq = error*error;

example+1;

datalines;

.6 .3 .8 1

.4 .4 .5 0

.2 .1 .3 1

.9 .8 .7 1

;

proc print; sum misclass Sq;

var example p1 p2 p3 combinedp Y D misclass error Sq;

Title "Tree weights are .2, .3, and .5";

run;

Methods for obtaining multiple trees. BOOSTING BAGGING and RANDOM FORESTS.

Bagging (Bootstrap AGGregation) Sample the data with replacement so some observations will enter

more than once, some not at all most likely - almost certainly in very large training data sets. We

sample all variables (columns) but in sample 1, observations 2,5, and 7 are “out of bag” and act

something like a validation set though the assessments seem to be pessimistic when computed in the

out of bag data.

Sample 1

X X X X X

X X X X X

X X X X X

X X X X X

Sample 2

X X X X X

X X X X X

X X X X X

X X X X X

X X X X X

Etc.

Do this sampling multiple times resulting in multiple trees.

Random Forests – This is perhaps the most popular of these methods. Recall that observations are the

rows of our data set, one row for each item (e.g. each credit card applicant) and variables are the

columns so age, gender, salary, etc. are variables. The idea is to take a random sample of both the

variables and of the observations and grow a tree on this subset. Notice that this is a sort of bagging but

using a different subset of the variables for each “bag.” Do this sampling multiple times resulting in

several trees.

X

X X

X

X

X X

X X

X

X X

Boosting (basic idea) For decisions, run a sequence of trees each time giving increased weight to the

observations that were previously misclassified in hopes of getting them right this time. For estimation

predictions, you take the deviations from the previous model’s predicted probabilities (1-p or 0-p) for

each point. Perhaps there is a pattern to these residuals so you then grow a tree on these residuals to

improve on the original prediction. In either case the sequence of trees is combined in a weighted

combination to classify a point or estimate its probability of yielding an event. Larger weights are given

to more accurate trees in this weighted combination of trees. These methods try to sequentially

improve on the previous decisions or predictions. There are some variations on this. One variant,

gradient boosting, is available from the Model subtab. A more detailed reference for this is chapter 10

in The Elements of Statistical Learning by Hastie, Tibshirani, and Friedman.

Notice that for all of these methods, you end up with multiple trees. How do you then predict

observations? The idea of combining multiple models is called ensemble modelling. The models can

even be of different types. This will be discussed in a more general setting later. For trees, if you are

interested in decisions one method is to run an observation through all of the trees, as in the book

illustration with the yellow and blue dots, and then decide based on the majority decision. This is called

the “voting” method of combining models. For estimates, you can just average the probabilities from

the models. You can also do a weighted combination with weights computed using the accuracy of each

tree so trees with larger error mean square or misclassification rate are given less weight which is

typically applied for boosting. See the optional weights section below for details. Because there are

many trees, this method will not produce some of the output that we are used to, such as a tree

diagram (there isn’t just one tree). In random forests, you will also see statistics labelled as “out of bag.”

Recall that each sample most likely does not include all the observations. The observations from the

training data that are not included in the sample (the bag) are called out of bag observations and thus

they form a sort of validation data set, a different one for each sample. For boosting a weighted

average of the tree results, based on the tree accuracy, is used as noted previously.

Enterprise Miner provides several HP programs where HP stands for high performance. These have

been sped up with programming and by taking advantage of distributed processing when available. If

you have several processors, each sample can be assigned to a different processor so that the time for

processing all of the samples is the same as for growing a single tree. The procedure HPFOREST is one

such program for random forests. Here is a quick random forest demo for our veterans data.

(1) Find the HPDM subtab and select the HP forest icon.

(2) Inspect the properties panel and note that some of the tree defaults are a bit different than those for

the ordinary tree node.

(3) Connect the HP forest node to the data partition node in your veterans diagram.

(4) Run the node and inspect the results. Did it take a long time to run?

(5) Later we will see how to export the score code to score future data sets. The gain is in accuracy of

the results but we also loose the simple interpretability of a single tree.

Optional: Similarly try a gradient boosting approach (model subtab) to the veterans data. In this demo

you will see at least one variable (demcluster) that is character with 54 levels. When you split, one part

will involve level A (let’s say) of this class variable. For that part, each of the 53 other levels can be

included or not. That means there are 253 ways of constructing the part of the split containing level A

which is also the total number of possible splits except for the fact that it includes a “split” where all the

levels are included with A so the total number of feasible splits is 253 – 1. This variable would have lots

of chances to give a high Chi-square (& logworth). You will see that demcluster has importance 1 in the

training data and 0 in the validation data for this reason. Use the metadata node to change the role of

demcluster to “rejected.”

Optional: Weights for individual trees in a boosting sequence using misclassification:

wi = weight for observation i in tree m. Start with weight 1/N where there are N observations.

Relabel the two classes using Y=1 for an event, Y=-1 for a non-event. Same for classification (classify as 1

or -1). This way Y times classification is 1 if correct, -1 if incorrect.

1. Sum the weights wi over all misclassified observations. Divide this by the sum of all N weights. This

we will call error_m (for tree m out of a list of M trees). It is between 0 and 1 and thus can be thought

of as a weighted probability of misclassification in tree m. You would prune to minimize error_m as you

build tree m.

2. Compute the logit of the weighted probability of correct classification.

This is =ln((1-err_m)/err_m) > ln(1)=0. It is nonnegative since you’d never misclassify more than half of

the observations.

3. For the next tree, leave wi the same for correctly classified observations and multiply the old wi by e

for observations incorrectly classified. This focuses the next tree on the observations its predecessor

misclassified. Repeat for m=1,2,…,M to get M trees.

4. At the end, consider a point and its set of features. Run that point through all M trees to get your

decision. Multiply each tree’s decision (1 or -1) by the tree’s weight and add. If the weighted sum is

>0, call it an event (1) and if not call it a nonevent (-1).

Boosting for estimates follows a similar process.