Lecture Presentation Chp-6

advertisement

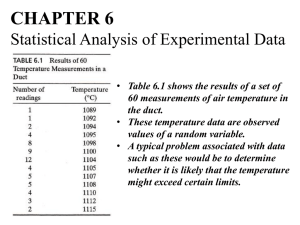

CHAPTER 6 Statistical Analysis of Experimental Data • Table 6.1 shows the results of a set of 60 measurements of air temperature in the duct. • These temperature data are observed values of a random variable. • A typical problem associated with data such as these would be to determine whether it is likely that the temperature might exceed certain limits. • A typical problem associated with data such as these would be to determine whether it is likely that the temperature might exceed certain limits. • Although these data show no temperatures less than 1089 C or greater than 1115 C, we might, for example, ask if there is a significant chance that the temperature will ever exceed 1117 C or be less than 1085 C (either of which might affect the manufacturing process in some applications). • This example illustrates a random variable that can vary continuously and can take any real value in a certain domain. Such a variable is called a continuous random variable. • Some experiments produce discrete (noncontinuous) results, which are considered to be values of a discrete random variable. • Examples of discrete random variables are the outcome of tossing a die (which has the only possible values of 1.,2,3,4,5,or 6) and fail/no-fail products in a quality control process. • To apply statistical analysis to experimental data, the data are usually characterized by determining parameters that specify the central tendency and the dispersion of the data. • The next step is to select a theoretical distribution function that is most suitable for explaining the behavior of the data. The theoretical function can then be used to make predictions about various properties of the data. GENERAL CONCEPTS AND DEFINITIONS Population. The population comprises the entire collection of objects, measurements, observations, and so on whose properties are under consideration and about which some generalizations are to be made. Examples of population are the entire set of 60-W electric bulbs that have been produced in a production batch and values of wind speed at a certain point over a defined period of time. The mode is the value of the variable that corresponds to the peak value of the probability of occurrence of the event. The Median is a value or quantity lying at the midpoint of a frequency distribution of observed values or quantities, such that there is an equal probability of falling above or below it PROBABILITY Probability is a numerical value expressing the likelihood of occurrence of an event relative to all possibilities in a sample space. The probability of occurrence of an event A is defined as the number of successful occurrences (m) divided by the total number of possible outcomes (n) in a sample space, evaluated for n>>1 For particular situations, experience has shown that the distribution of the random variable follows certain mathematical functions. Sample data are used to compute parameters in these mathematical functions, and then we use the mathematical functions to predict properties of the parent population. For discrete random variables, these functions are called probability mass functions. For continuous random variables, the functions are called probability density functions. (a) (a) Calculate the expected life of the bearings. (b) If we pick a bearing at random from this batch, what is the probability that its life (x) will be less that 20 h, greater than 20 h, and finally, exactly 20 h? (a) The Cumulative distribution function Also, we find that the probability that the life time is less than 15 h is 0.55. 6.3.2 Some Probability Distribution Functions with Engineering Applications Binomial Distribution The binomial distribution is a distribution which describes discrete random variables that can have only two possible outcomes: "success" and "failure." This distribution has application in production quality control, when the quality of a product is either acceptable or unacceptable. The following conditions need to be satisfied for the binomial distribution to be applicable to a certain experiment: 1. Each trial in the experiment can have only the two possible outcomes of success or failure. 2. The probability of success remains constant throughout the experiment. This probability is denoted by p and is usually known or estimated for a given population. 3. The experiment consists of n independent trials. The expected number of successes in n trials for binomial distribution is The standard deviation of the binomial distribution is Example 6.5 For the data of Example 6.4, calculate the probability of finding up to and including two defective light bulbs in the sample of four. Solution: We use E,q. (6.21) for this purpose: Poisson Distribution The Poisson distribution is used to estimate the number of random occurrences of an event in a specified interval of time or space if the average number of occurrences is already known. The following two assumptions underline the Poisson distribution: 1. The probability of occurrence of an event is the same for any two intervals of the same length. 2. The probability of occurrence of an event is independent of the occurrence of other events. The probability of occurrence of x events is given by Where is the expected or mean number of occurrences during the interval of interest. The expected value of x for the Poisson distribution, the same as the mean, is given by Example 6.8 It has been found in welds joining pipes that there is an average of five defects per 10 linear meters of weld (0.5 defects per meter). What is the probability that there will be (a) a single defect in a weld that is 0.5 m long or (b) more than one defect in a weld that is 0.5 m long. Normal Distribution A normal distribution is a very important statistical data distribution pattern occurring in many natural phenomena, such as height, blood pressure, lengths of objects produced by machines, etc. Certain data, when graphed as a histogram (data on the horizontal axis, amount of data on the vertical axis), creates a bell-shaped curve known as a normal curve, or normal distribution. Normal distributions are symmetrical with a single central peak at the mean (average) of the data. The shape of the curve is described as bell-shaped with the graph falling off evenly on either side of the mean. Fifty percent of the distribution lies to the left of the mean and fifty percent lies to the right of the mean. The spread of a normal distribution is controlled by the standard deviation, . The smaller the standard deviation the more concentrated the data. The mean and the median are the same in a normal distribution. Example: The lifetime of a battery is normally distributed with a mean life of 40 hours and a standard deviation of 1.2 hours. Find the probability that a randomly selected battery lasts longer than 42 hours. Example 6.9 The results of a test that follows a normal distribution have a mean value of 10.0 and a standard deviation of 1. Find the probability that a single reading is (a) between 9 and 12. (b) between 8 and 9.55. Reading from the chart, we see that approximately 19.1% of normally distributed data is located between the mean (the peak) and 0.5 standard deviations to the right (or left) of the mean. (The percentages are represented by the area under the curve.) • 50% of the distribution lies within 0.67448 standard deviations of the mean. PARAMETER ESTIMATION In some experiments, it happens that one or more measured values appear to be out of line with the rest of the data. If some clear faults can be detected in measuring those specific values, they should be discarded. But often the seemingly faulty data cannot be traced to any specific problem. There exist a number of statistical methods for rejecting these wild or outlier data points. The basis of these methods is to eliminate values that have a low probability of occurrence. For example, data values that deviate from the mean by more than two or more than three standard deviations might be rejected. It has been found that so-called two-sigma or three-sigma rejection criteria normally must be modified to account for the sample size. Furthermore, depending on how strong the rejection criterion is, good data might be eliminated or bad data included. The method recommended in the document Measurement (Uncertainty (ASME, 1998) is the modified Thompson r technique. In this method, if we have ,? measurements that have a mean x and standard deviation S, the data can be arranged in ascending order xb x2, . .. , xn. The extreme values (the highest and lowest) are suspected outliers. For these suspected points, the deviation is calculated as • Correlation Coefficient • Scatter due to random errors is a common characteristic of virtually all measurements. However, in some cases the scatter may be so large that it is difficult to detect a trend. Consider an experiment in which an independent variable x is varied systematically and the dependent variable y is then measured. • The coefficient of determination is the ratio of the explained variation to the total variation. The coefficient of determination is such that 0 < r ^2 < 1, and denotes the strength of the linear association between x and y. • The coefficient of determination represents the percent of the data that is the closest to the line of best fit. For example, if r = 0.922, then r ^2 = 0.850, which means that 85% of the total variation in y can be explained by the linear relationship between x and y (as described by the regression equation). The other 15% of the total variation in y remains unexplained. • The coefficient of determination is a measure of how well the regression line represents the data. If the regression line passes exactly through every point on the scatter plot, it would be able to explain all of the variation. The further the line is away from the points, the less it is able to explain. • No correlation: If there is no linear correlation or a weak linear correlation, r is close to 0. A value near zero means that there is a random, nonlinear relationship between the two variables Note that r is a dimensionless quantity; that is, it does not depend on the units employed. • A perfect correlation of ± 1 occurs only when the data points all lie exactly on a straight line. If r = +1, the slope of this line is positive. If r = -1, the slope of this line is negative. • A correlation greater than 0.8 is generally described as strong, whereas a correlation less than 0.5 is generally described as weak. 6.6.2 Least-Squares Linear Fit It is a common requirement in experimentation to correlate experimental data by fitting mathematical functions such as straight lines or exponentials through the data. One of the most common functions used for this purpose is the straight line. Linear fits are often appropriate for the data, and in other cases the data can be transformed to be approximately linear. As shown in Figure 6.12, if we have n pairs of data (xi, yi), we seek to fit a straight line of the form