Communication-Avoiding Krylov Subspace Methods in Finite Precision

advertisement

Communication-avoiding

Krylov subspace methods in finite

precision

Erin Carson, UC Berkeley

Advisor: James Demmel

ICME LA/Opt Seminar, Stanford University

December 4, 2014

Model Problem: 2D Poisson on 2562 grid, κ(𝐴) = 3.9𝐸4, 𝐴 2 = 1.99. Equilibration

(diagonal scaling) used. RHS set s.t. elements of true solution 𝑥𝑖 = 1/256.

Residual

replacement

strategy

of with

vaninder

Vorst andaccuracy

Ye variants.

(1999)

We We

can

can

combine

extend

residual

this

strategy

replacement

communication-avoiding

inexpensive

techniques

Roundoff

error

cause

decrease

attainable

of for

This

affect

can

becan

worse

forato

“communication-avoiding”

(𝑠-step)

improves

attainable

accuracy

for

classical

methods

maintaining

Accuracy

convergence

can

beKrylov

improved

rate

closer

for

to

minimal

that

of performance

theKrylov

classical

method!

cost!

subspace

methods.

Krylov

subspace

methods

– limits

practice

applicability!

2

What is communication?

• Algorithms have two costs: communication and computation

• Communication : moving data between levels of memory hierarchy

(sequential), between processors (parallel)

sequential comm.

parallel comm.

• On modern computers, communication is expensive, computation is cheap

– Flop time << 1/bandwidth << latency

– Communication bottleneck a barrier to achieving scalability

– Also expensive in terms of energy cost!

• Towards exascale: we must redesign algorithms to avoid communication

3

Communication bottleneck in KSMs

• A Krylov Subspace Method is a projection process onto the Krylov subspace

𝒦𝑚 𝐴, 𝑟0 = span 𝑟0 , 𝐴𝑟0 , 𝐴2 𝑟0 , … , 𝐴𝑚−1 𝑟0

• Linear systems, eigenvalue problems, singular value problems, least squares, etc.

• Best for: 𝐴 large & very sparse, stored implicitly, or only approximation needed

Projection process in each iteration:

1. Add a dimension to 𝒦𝑚

• Sparse Matrix-Vector Multiplication (SpMV)

• Parallel: comm. vector entries w/ neighbors

• Sequential: read 𝐴/vectors from slow memory

2. Orthogonalize (with respect to some ℒ𝑚 )

• Inner products

• Parallel: global reduction

• Sequential: multiple reads/writes to slow memory

SpMV

orthogonalize

Dependencies between

communication-bound

kernels in each iteration

limit performance!

4

Example: Classical conjugate gradient (CG)

Given: initial approximation 𝑥0 for solving 𝐴𝑥 = 𝑏

Let 𝑝0 = 𝑟0 = 𝑏 − 𝐴𝑥0

for 𝑚 = 1, 2, … , until convergence do

𝛼𝑚−1 =

𝑇

𝑟𝑚−1

𝑟𝑚−1

𝑇

𝑝𝑚−1

𝐴𝑝𝑚−1

𝑥𝑚 = 𝑥𝑚−1 + 𝛼𝑚−1 𝑝𝑚−1

𝑟𝑚 = 𝑟𝑚−1 − 𝛼𝑚−1 𝐴𝑝𝑚−1

𝛽𝑚 =

𝑇𝑟

𝑟𝑚

𝑚

𝑇

𝑟𝑚−1

𝑟𝑚−1

SpMVs and inner products

require communication in

each iteration!

𝑝𝑚 = 𝑟𝑚 + 𝛽𝑚 𝑝𝑚−1

end for

5

Communication-avoiding CA-KSMs

• Krylov methods can be reorganized to reduce communication cost by 𝑶(𝒔)

• Many CA-KSMs (𝑠-step KSMs) in the literature: CG, GMRES, Orthomin,

MINRES, Lanczos, Arnoldi, CGS, Orthodir, BICG, CGS, BICGSTAB, QMR

Related references:

(Van Rosendale, 1983), (Walker, 1988), (Leland, 1989), (Chronopoulos and

Gear, 1989), (Chronopoulos and Kim, 1990, 1992), (Chronopoulos, 1991),

(Kim and Chronopoulos, 1991), (Joubert and Carey, 1992), (Bai, Hu, Reichel,

1991), (Erhel, 1995), GMRES (De Sturler, 1991), (De Sturler and Van der

Vorst, 1995), (Toledo, 1995), (Chronopoulos and Kinkaid, 2001), (Hoemmen,

2010), (Philippe and Reichel, 2012), (C., Knight, Demmel, 2013),

(Feuerriegel and Bücker, 2013).

• Reduction in communication can translate to speedups on practical problems

• Recent results: 𝟒. 𝟐x speedup with 𝑠 = 4 for CA-BICGSTAB in GMG

bottom-solve; 2.5x in overall GMG solve (24,576 cores, 𝑛 = 10243 on

Cray XE6) (Williams, et al., 2014).

6

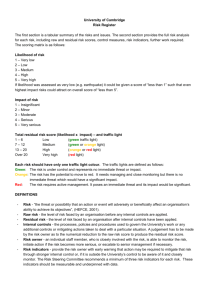

Benchmark timing breakdown

• Plot: Net time spent on different operations over one MG solve using

24,576 cores

• Hopper at NERSC (Cray XE6), 4 6-core Opteron chips per node, Gemini

network, 3D torus

• 3D Helmholtz equation

• CS-BICGSTAB with 𝑠 = 4

7

CA-CG overview

Starting at iteration 𝑠𝑘 for 𝑘 ≥ 0, 𝑠 > 0, it can be shown that to compute the

next 𝑠 steps (iterations 𝑠𝑘 + 𝑗 where 𝑗 ≤ 𝑠),

𝑝𝑠𝑘+𝑗 , 𝑟𝑠𝑘+𝑗 , 𝑥𝑠𝑘+𝑗 − 𝑥𝑠𝑘 ∈ 𝒦𝑠+1 𝐴, 𝑝𝑠𝑘 + 𝒦𝑠 𝐴, 𝑟𝑠𝑘 .

1. Compute basis matrix

𝑌𝑘 = 𝜌0 𝐴 𝑝𝑠𝑘 , … , 𝜌𝑠 𝐴 𝑝𝑠𝑘 , 𝜌0 𝐴 𝑟𝑠𝑘 , … , 𝜌𝑠−1 𝐴 𝑟𝑠𝑘

of dimension 𝑛-by-(2𝑠+1), where 𝜌𝑗 is a polynomial of degree 𝑗.

This gives the recurrence relation

𝐴𝑌𝑘 = 𝑌𝑘 𝐵𝑘 ,

where 𝐵𝑘 is (2𝑠+1)-by-(2𝑠+1) and is 2x2 block diagonal with upper

Hessenberg blocks, and 𝑌𝑘 is 𝑌𝑘 with columns 𝑠+1 and 2𝑠+1 set to 0.

• Communication cost: Same latency cost as one SpMV using matrix powers

kernel (e.g., Hoemmen et al., 2007), assuming sufficient sparsity structure

(low diameter)

8

The matrix powers kernele Matrix Powers

Avoids communication:

• In serial, by exploiting temporal locality:

• Reading 𝐴, reading 𝑌 = [𝑥, 𝐴𝑥, 𝐴2𝑥, … , 𝐴𝑠𝑥]

• In parallel, by doing only 1 ‘expand’ phase

(instead of 𝑠).

• Requires sufficiently low ‘surface-to-volume’

ratio

Also works for

Tridiagonal Example:

general graphs!

black = local elements

red = 1-level dependencies

green = 2-level dependencies

blue = 3-level dependencies

A3v

A2v

Av

v

Sequential

A3v

A2v

Av

v

Parallel

9

CA-CG overview

2. Orthogonalize: Encode dot products between basis vectors by

computing Gram matrix 𝐺𝑘 = 𝑌𝑘𝑇 𝑌𝑘 of dimension (2𝑠+1)-by- 2𝑠+1

(or compute Tall-Skinny QR)

• Communication cost: of one global reduction

3. Perform 𝒔 iterations of updates

• Using 𝑌𝑘 and 𝐺𝑘 , this requires no communication

• Represent 𝑛-vectors by their length-(2𝑠 + 1) coordinates in 𝑌𝑘 :

𝑥𝑠𝑘+𝑗 − 𝑥𝑠𝑘 = 𝑌𝑘 𝑥𝑗′ ,

𝑟𝑠𝑘+𝑗 = 𝑌𝑘 𝑟𝑗′ , 𝑝𝑠𝑘+𝑗 = 𝑌𝑘 𝑝𝑗′

• Perform 𝑠 iterations of updates on coordinate vectors

4. Basis change to recover CG vectors

′

′

′

• Compute 𝑥𝑠𝑘+𝑠 −𝑥𝑠𝑘 , 𝑟𝑠𝑘+𝑠 , 𝑝𝑠𝑘+𝑠 = 𝑌𝑘 [𝑥𝑘,𝑠

, 𝑟𝑘,𝑠

, 𝑝𝑘,𝑠

] locally,

no communication

10

No communication in inner loop!

CG

for 𝑗 = 1, … , 𝑠 do

𝛼𝑠𝑘+𝑗−1 =

CA-CG

Compute 𝑌𝑘 such that 𝐴𝑌𝑘 = 𝑌𝑘 𝐵𝑘

Compute 𝐺𝑘 = 𝑌𝑘𝑇 𝑌𝑘

for 𝑗 = 1, … , 𝑠 do

𝑇

𝑟𝑠𝑘+𝑗−1

𝑟𝑠𝑘+𝑗−1

𝛼𝑠𝑘+𝑗−1 =

𝑇

𝑝𝑠𝑘+𝑗−1

𝐴𝑝𝑠𝑘+𝑗−1

′𝑇

′

𝑟𝑘,𝑗−1

𝐺𝑘 𝑟𝑘,𝑗−1

′𝑇

′

𝑝𝑘,𝑗−1

𝐺𝑘 𝐵𝑘 𝑝𝑘,𝑗−1

𝑥𝑠𝑘+𝑗 = 𝑥𝑠𝑘+𝑗−1 + 𝛼𝑠𝑘+𝑗−1 𝑝𝑠𝑘+𝑗−1

′

′

′

𝑥𝑘,𝑗

= 𝑥𝑘,𝑗−1

+ 𝛼𝑠𝑘+𝑗−1 𝑝𝑘,𝑗−1

𝑟𝑠𝑘+𝑗 = 𝑟𝑠𝑘+𝑗−1 − 𝛼𝑠𝑘+𝑗−1 𝐴𝑝𝑠𝑘+𝑗−1

′

′

′

𝑟𝑘,𝑗

= 𝑟𝑘,𝑗−1

− 𝛼𝑠𝑘+𝑗−1 𝐵𝑘 𝑝𝑘,𝑗−1

𝛽𝑠𝑘+𝑗 =

𝑇

𝑟𝑠𝑘+𝑗

𝑟𝑠𝑘+𝑗

𝛽𝑠𝑘+𝑗 =

𝑇

𝑟𝑠𝑘+𝑗−1

𝑟𝑠𝑘+𝑗−1

′𝑇

′

𝑟𝑘,𝑗−1

𝐺𝑘 𝑟𝑘,𝑗−1

′

′

′

𝑝𝑘,𝑗

= 𝑟𝑘,𝑗

+ 𝛽𝑠𝑘+𝑗 𝑝𝑘,𝑗−1

𝑝𝑠𝑘+𝑗 = 𝑟𝑠𝑘+𝑗 +𝛽𝑠𝑘+𝑗 𝑝𝑠𝑘+𝑗−1

end for

′𝑇

′

𝑟𝑘,𝑗

𝐺𝑘 𝑟𝑘,𝑗

end for

′

′

′

𝑥𝑠𝑘+𝑠 −𝑥𝑠𝑘 , 𝑟𝑠𝑘+𝑠 , 𝑝𝑠𝑘+𝑠 = 𝑌𝑘 [𝑥𝑘,𝑠

, 𝑟𝑘,𝑠

, 𝑝𝑘,𝑠

]

Krylov methods in finite precision

• Finite precision errors have effects on algorithm behavior (known to

Lanczos (1950); see, e.g., Meurant and Strakoš (2006) for in-depth survey)

• Lanczos:

• Basis vectors lose orthogonality

• Appearance of multiple Ritz approximations to some eigenvalues

• Delays convergence of Ritz values to other eigenvalues

• CG:

• Residual vectors (proportional to Lanczos basis vectors) lose

orthogonality

• Appearance of duplicate Ritz values delays convergence of CG

approximate solution

• Residual vectors 𝑟𝑘 and true residuals 𝑏 − 𝐴𝑥𝑘 deviate

• Limits the maximum attainable accuracy of approximate solution

12

CA-Krylov methods in finite precision

• CA-KSMs mathematically equivalent to classical KSMs

• But convergence delay and loss of accuracy gets worse with

increasing 𝒔!

• Obstacle to solving practical problems

• Decrease in attainable accuracy → some problems that

KSM can solve can’t be solved with CA variant

• Delay of convergence → if # iterations increases more than

time per iteration decreases due to CA techniques, no

speedup expected!

13

This raises the questions…

For CA-KSMs,

For solving linear systems:

• How bad can the effects of roundoff error be?

• Bound on maximum attainable accuracy for CA-CG and CABICG

• And what can we do about it?

• Residual replacement strategy: uses bound to improve

accuracy to 𝑂(𝜀) 𝐴 𝑥 in CA-CG and CA-BICG

14

This raises the questions…

For CA-KSMs,

For eigenvalue problems:

• How bad can the effects of roundoff error be?

• Extension of Chris Paige’s analysis of Lanczos to CA variants:

• Bounds on local rounding errors in CA-Lanczos

• Bounds on accuracy and convergence of Ritz values

• Loss of orthogonality convergence of Ritz values

• And what can we do about it?

• Some ideas on how to use this new analysis…

• Basis orthogonalization

• Dynamically select/change basis size (𝒔 parameter)

• Mixed precision variants

15

Maximum attainable accuracy of CG

• In classical CG, iterates are updated by

𝑥𝑚 = 𝑥𝑚−1 + 𝛼𝑚−1 𝑝𝑚−1

and

𝑟𝑚 = 𝑟𝑚−1 − 𝛼𝑚−1 𝐴𝑝𝑚−1

• Formulas for 𝑥𝑚 and 𝑟𝑚 do not depend on each other - rounding errors

cause the true residual, 𝑏 − 𝐴𝑥𝑚 , and the updated residual, 𝑟𝑚 , to deviate

• The size of the true residual is bounded by

𝑏 − 𝐴𝑥𝑚 ≤ 𝑟𝑚 + 𝑏 − 𝐴𝑥𝑚 − 𝑟𝑚

• When 𝑟𝑚 ≫ 𝑏 − 𝐴𝑥𝑚 − 𝑟𝑚 , 𝑟𝑚 and 𝑏 − 𝐴𝑥𝑚 have similar

magnitude

• When 𝑟𝑚 → 0, 𝑏 − 𝐴𝑥𝑚 depends on 𝑏 − 𝐴𝑥𝑚 − 𝑟𝑚

• Many results on attainable accuracy, e.g.: Greenbaum (1989, 1994, 1997),

Sleijpen, van der Vorst and Fokkema (1994), Sleijpen, van der Vorst and

Modersitzki (2001), Björck, Elfving and Strakoš (1998) and Gutknecht and

Strakoš (2000).

16

Example: Comparison of convergence of

true and updated residuals for CG vs. CACG using a monomial basis, for various 𝑠

values

Model problem (2D Poisson on 2562 grid)

17

• Better conditioned polynomial bases

can be used instead of monomial.

• Two common choices: Newton and

Chebyshev - see, e.g., (Philippe and

Reichel, 2012).

• Behavior closer to that of classical CG

• But can still see some loss of attainable

accuracy compared to CG

• Clearer for higher 𝒔 values

18

Residual replacement strategy for CG

• van der Vorst and Ye (1999): Improve accuracy by replacing updated

residual 𝒓𝒎 by the true residual 𝒃 − 𝑨𝒙𝒎 in certain iterations, combined

with group update.

• Choose when to replace 𝑟𝑚 with 𝑏 − 𝐴𝑥𝑚 to meet two constraints:

1. Replace often enough so that at termination, 𝑏 − 𝐴𝑥𝑚 − 𝑟𝑚 is

small relative to 𝜀𝑁 𝐴

𝑥𝑚

2. Don’t replace so often that original convergence mechanism of

updated residuals is destroyed (avoid large perturbations to finite

precision CG recurrence)

19

Residual replacement condition for CG

Tong and Ye (2000): In finite precision classical CG, in iteration 𝑛, the

computed residual and search direction vectors satisfy

𝑟𝑚 = 𝑟𝑚−1 − 𝛼𝑚−1 𝐴𝑝𝑚−1 + 𝜂𝑚 ,

Then in matrix form,

1 𝑟𝑚+1 𝑇

𝐴𝑍𝑚 = 𝑍𝑚 𝑇𝑚 − ′

𝑒𝑚+1 + 𝐹𝑚

𝛼𝑚 𝑟0

𝑝𝑚 = 𝑟𝑚 + 𝛽𝑚 𝑝𝑚−1 + 𝜏𝑚

with 𝑍𝑚 =

𝑟0

𝑟𝑚

,…,

𝑟0

𝑟𝑚

′ = 𝑒 𝑇 𝑇 −1 𝑒 and 𝐹 =

where 𝑇𝑚 is invertible and tridiagonal, 𝛼𝑚

𝑚 𝑚 1

𝑚

𝑓0 , … , 𝑓𝑚 , with

𝐴𝜏𝑚

1 𝜂𝑚+1

𝛽𝑚 𝜂𝑚

𝑓𝑚 =

+

−

𝑟𝑚

𝛼𝑚 𝑟𝑚

𝛼𝑚−1 𝑟𝑚

20

Residual replacement condition for CG

Tong and Ye (2000): If sequence 𝑟𝑚 satisfies

𝐴𝑍𝑚 = 𝑍𝑚 𝑇𝑚 −

1 𝑟𝑚+1 𝑇

𝑒𝑚+1

′

𝛼𝑚

𝑟0

+ 𝐹𝑚 ,

𝑓𝑚 =

𝐴𝜏𝑚

𝑟𝑚

1 𝜂𝑚+1

+

𝛼𝑚 𝑟𝑚

𝛽𝑚 𝜂𝑚

−

𝛼𝑚−1 𝑟𝑚

then

𝑟𝑚+1 ≤ 1 + 𝒦𝑚

where 𝒦𝑚 =

min

𝜌∈𝒫𝑚 ,𝜌 0 =1

‖𝜌 𝐴 + Δ𝐴𝑚 𝑟1 ‖

+

+.

𝐴𝑍𝑚 − 𝐹𝑚 𝑇𝑚−1 ‖𝑍𝑚+1

‖ and Δ𝐴𝑚 = −𝐹𝑚 𝑍𝑚

• As long as 𝑟𝑚 satisfies recurrence, bound on its norm holds regardless of

how it is generated

• Can replace 𝑟𝑚 by fl(𝑏 − 𝐴𝑥𝑚 ) and still expect convergence whenever

𝜂𝑚 = fl 𝑏 − 𝐴𝑥𝑚 − 𝑟𝑚−1 − 𝛼𝑚−1 𝐴𝑝𝑚−1

is not too large relative to 𝑟𝑚 and 𝑟𝑚−1 (van der Vorst & Ye, 1999).

21

Residual replacement strategy for CG

• (van der Vorst and Ye, 1999): Use computable bound for ‖𝑏 − 𝐴𝑥𝑚 −

Pseudo-code for residual replacement with group update for CG:

if

end

𝑑𝑚−1 ≤ 𝜀 𝑟𝑚−1 𝐚𝐧𝐝 𝑑𝑚 > 𝜀 𝑟𝑚 𝐚𝐧𝐝 𝑑𝑚 > 1.1𝑑𝑖𝑛𝑖𝑡

𝑧 = 𝑧 + 𝑥𝑚

group update of approximate solution

𝑥𝑚 = 0

set residual to true residual

𝑟𝑚 = 𝑏 − 𝐴𝑧

𝑑𝑖𝑛𝑖𝑡 = 𝑑𝑚 = 𝜀 𝑁 𝐴 𝑧 + 𝑟𝑚

• Assuming a bound on condition number of 𝐴, if updated residual

converges to 𝑂(𝜀) 𝐴 𝑥 , true residual reduced to 𝑂(𝜀) 𝐴 𝑥

22

Sources of roundoff error in CA-CG

Computing the 𝑠-step Krylov basis:

𝐴𝑌𝑘 = 𝑌𝑘 𝐵𝑘 + ∆𝑌𝑘

Error in computing

𝑠-step basis

Updating coordinate vectors in the inner loop:

′

′

′

𝑥𝑘,𝑗

= 𝑥𝑘,𝑗−1

+ 𝑞𝑘,𝑗−1

+ 𝜉𝑘,𝑗

′

′

′

𝑟𝑘,𝑗

= 𝑟𝑘,𝑗−1

− 𝐵𝑘 𝑞𝑘,𝑗−1

+ 𝜂𝑘,𝑗

Error in updating

coefficient vectors

′

′

with 𝑞𝑘,𝑗−1

= fl(𝛼𝑠𝑘+𝑗−1 𝑝𝑘,𝑗−1

)

Recovering CG vectors for use in next outer loop:

′

𝑥𝑠𝑘+𝑗 = 𝑌𝑘 𝑥𝑘,𝑗

+ 𝑥𝑠𝑘 + 𝜙𝑠𝑘+𝑗

′

𝑟𝑠𝑘+𝑗 = 𝑌𝑘 𝑟𝑘,𝑗

+ 𝜓𝑠𝑘+𝑗

Error in

basis change

23

Maximum attainable accuracy of CA-CG

• We can write the deviation of the true and updated residuals in terms of

these errors:

𝑏−𝐴𝑥𝑠𝑘+𝑗 −𝑟𝑠𝑘+𝑗 = 𝑏−𝐴𝑥0 −𝑟0

𝑘−1

−

𝑠

′

𝐴𝑌ℓ 𝜉ℓ,𝑖 + 𝑌ℓ 𝜂ℓ,𝑖 − Δ𝑌ℓ 𝑞ℓ,𝑖−1

𝐴𝜙𝑠ℓ+𝑠 + 𝜓𝑠ℓ+𝑠 +

ℓ=0

𝑖=1

𝑗

′

𝐴𝑌𝑘 𝜉𝑘,𝑖 + 𝑌𝑘 𝜂𝑘,𝑖 − Δ𝑌𝑘 𝑞𝑘,𝑖−1

−𝐴𝜙𝑠𝑘+𝑗 − 𝜓𝑠𝑘+𝑗 −

𝑖=1

• Using standard rounding error results, this allows us to obtain an upper

bound on 𝑏−𝐴𝑥𝑠𝑘+𝑗 −𝑟𝑠𝑘+𝑗 .

24

A computable bound

• We extend van der Vorst and Ye’s residual replacement strategy to CA-CG

• Making use of the bound for 𝑏−𝐴𝑥𝑠𝑘+𝑗 −𝑟𝑠𝑘+𝑗 in CA-CG, update error

estimate 𝑑𝑠𝑘+𝑗 by:

Extra𝟑computation

all lower order terms, communication

𝟑

𝑶(𝒔

)

flops

per

𝒔

iterations;

≤1

reduction

per 𝒔 iterations

𝑶(𝒏 + 𝒔by

) flops

perfactor

𝒔 iterations;

no communication

only

once

increased

at Estimated

most

of 2.

𝑑𝑠𝑘+𝑗 ≡ 𝑑𝑠𝑘+𝑗−1

+𝜀 4+𝑁′

+𝜀

𝐴

𝐴

′

𝑌𝑘 ∙ 𝑥𝑘,𝑗

+

𝑥𝑠𝑘+𝑠 + 2+2𝑁 ′ 𝐴

′

𝑌𝑘 ∙ 𝐵𝑘 ∙ 𝑥𝑘,𝑗

+

′

𝑌𝑘 ∙ 𝑟𝑘,𝑗

′

′

𝑌𝑘 ∙ 𝑥𝑘,𝑠

+𝑁 ′ 𝑌𝑘 ∙ 𝑟𝑘,𝑠

,𝑗=𝑠

0, otherwise

where 𝑁 ′ = max 𝑁, 2𝑠 + 1 .

25

Residual replacement for CA-CG

• Use the same replacement condition as van der Vorst and Ye (1999):

𝑑𝑠𝑘+𝑗−1 ≤ 𝜀 𝑟𝑠𝑘+𝑗−1

𝐚𝐧𝐝

𝑑𝑠𝑘+𝑗 > 𝜀 𝑟𝑠𝑘+𝑗

𝐚𝐧𝐝 𝑑𝑠𝑘+𝑗 > 1.1𝑑𝑖𝑛𝑖𝑡

• (possible we could develop a better replacement condition based on

perturbation in finite precision CA-CG recurrence)

Pseudo-code for residual replacement with group update for CA-CG:

if 𝑑𝑠𝑘+𝑗−1 ≤ 𝜀 𝑟𝑠𝑘+𝑗−1 𝐚𝐧𝐝

′

𝑧 = 𝑧 + 𝑌𝑘 𝑥𝑘,𝑗

+ 𝑥𝑠𝑘

𝑥𝑠𝑘+𝑗 = 0

𝑟𝑠𝑘+𝑗 = 𝑏 − 𝐴𝑧

𝑑𝑠𝑘+𝑗 > 𝜀 𝑟𝑠𝑘+𝑗

𝐚𝐧𝐝 𝑑𝑠𝑘+𝑗 > 1.1𝑑𝑖𝑛𝑖𝑡

𝑑𝑖𝑛𝑖𝑡 = 𝑑𝑠𝑘+𝑗 = 𝜀 1 + 2𝑁′ 𝐴 𝑧 + 𝑟𝑠𝑘+𝑗

′

𝑝𝑠𝑘+𝑗 = 𝑌𝑘 𝑝𝑘,𝑗

break from inner loop and begin new outer loop

end

26

27

Residual

Replacement

Indices

Total Number

of Reductions

s=4

CACG Mono.

CACG

Newt.

CACG

Cheb.

354

203

353

196

365

197

340

102

353

99

326

68

346

71

401, 517

s=8 224, 334,

157

s=12

135,

2119

557

CG

355

669

•• #Inreplacements

small compared

to total

addition to attainable

accuracy,

iterations

RR

doesn’t

significantly affect

convergence

rate

is incredibly

communication

savings!

important in practical

implementations

•• Can

have bothrate

speed

and accuracy!

Convergence

depends

on basis

28

consph8, FEM/Spheres (from UFSMC)

n = 8.3 ⋅ 104 , 𝑛𝑛𝑧 = 6.0 ⋅ 106 , 𝜅 𝐴 = 9.7 ⋅ 103 , 𝐴 = 9.7

After

𝑠=8

# Replacements

Class.

2

M

12

N

2

C

2

# Replacements

𝑠 =12

Before

Class.

2

M

0

N

4

C

3

29

xenon1, materials (from UFSMC)

n = 4.9 ⋅ 104 , 𝑛𝑛𝑧 = 1.2 ⋅ 106 , 𝜅 𝐴 = 3.3 ⋅ 104 , 𝐴 = 3.2

After

𝑠=4

# Replacements

Class.

2

M

1

N

1

C

1

# Replacements

𝑠=8

Before

Class.

2

M

5

N

2

C

1

30

Preconditioning for CA-KSMs

• Tradeoff: speed up convergence, but increase time per iteration due to

communication!

• For each specific app, must evaluate tradeoff between preconditioner

quality and sparsity of the system

• Good news: many preconditioners allow communication-avoiding approach

• Block Jacobi – block diagonals

• Sparse Approx. Inverse (SAI) – same sparsity as 𝐴; recent work for

CA-BICGSTAB by Mehri (2014)

• Polynomial preconditioning (Saad, 1985)

• HSS preconditioning for banded matrices (Hoemmen, 2010),

(Knight, C., Demmel, 2014)

• CA-ILU(0) – recent work by Moufawad and Grigori (2013)

• Deflation for CA-CG (C., Knight, Demmel, 2014), based on Deflated

CG of (Saad et al., 2000); for CA-GMRES (Yamazaki et al., 2014)

31

Deflated CA-CG, model problem

Monomial Basis,

𝑠𝑠==10

4

8

Matrix: 2D Laplacian(512), 𝑁 = 262,144. Right hand side set such that true solution

has entries 𝑥𝑖 = 1/ 𝑛. Deflated CG algorithm (DCG) from (Saad et al., 2000).

32

Eigenvalue problems: CA-Lanczos

Problem: 2D Poisson, 𝑛 = 256, 𝐴 = 7.93, with random starting vector

As 𝒔 increases, both convergence rate and accuracy to which we can find

approximate eigenvalues within 𝒏 iterations decreases.

33

Eigenvalue problems: CA-Lanczos

Problem: 2D Poisson, 𝑛 = 256, 𝐴 = 7.93, with random starting vector

Improved by better-conditioned bases, but still see decreased

convergence rate and accuracy as 𝒔 grows.

34

Paige’s Lanczos convergence analysis

𝑇 + 𝛿𝑉

𝐴𝑉𝑚 = 𝑉𝑚 𝑇𝑚 + 𝛽𝑚+1 𝑣𝑚+1 𝑒𝑚

𝑚

𝑉𝑚 = 𝑣1 , … , 𝑣𝑚 ,

𝛿 𝑉𝑚 = 𝛿 𝑣1 , … , 𝛿 𝑣𝑚 ,

𝑇𝑚 =

𝛼1

𝛽2

𝛽2

⋱

⋱

⋱

⋱

𝛽𝑚

𝛽𝑚

𝛼𝑚

for 𝑖 ∈ {1, … , 𝑚},

Classic Lanczos rounding

error result of Paige (1976):

2

𝛽𝑖+1

where 𝜎 ≡ 𝐴

2,

𝜃𝜎 ≡

𝐴

𝛿 𝑣𝑖 2 ≤ 𝜀1 𝜎

𝛽𝑖+1 𝑣𝑖𝑇 𝑣𝑖+1 ≤ 2𝜀0 𝜎

𝑇

𝑣𝑖+1

𝑣𝑖+1 − 1 ≤ 𝜀0 2

+ 𝛼𝑖2 + 𝛽𝑖2 − 𝐴𝑣𝑖 22 ≤ 4𝑖 3𝜀0 + 𝜀1 𝜎 2

2 , 𝜀0

≡ 2𝜀 𝑛 + 4 , and 𝜀1 ≡ 2𝜀 𝑁𝜃 + 7

𝜀0 = 𝑂 𝜀𝑛

𝜀1 = 𝑂 𝜀𝑁𝜃

These results form the basis for Paige’s influential results in (Paige, 1980).

35

CA-Lanczos convergence analysis

Let Γ ≡ max 𝑌ℓ+

ℓ≤𝑘

2

∙

𝑌ℓ

2

≤ 2𝑠+1 ∙ max 𝜅 𝑌ℓ .

ℓ≤𝑘

for 𝑖 ∈ {1, … , 𝑚=𝑠𝑘+𝑗},

For CA-Lanczos,

we have:

2

𝛽𝑖+1

𝛿 𝑣𝑖 2 ≤ 𝜀1 𝜎

𝛽𝑖+1 𝑣𝑖𝑇 𝑣𝑖+1 ≤ 2𝜀0 𝜎

𝑇

𝑣𝑖+1

𝑣𝑖+1 − 1 ≤ 𝜀0 2

+ 𝛼𝑖2 + 𝛽𝑖2 − 𝐴𝑣𝑖 22 ≤ 4𝑖 3𝜀0 + 𝜀1 𝜎 2

𝜀0 ≡ 2𝜀 𝑛+11𝑠+15 Γ 2 = 𝑂 𝜀𝑛Γ 2 ,

(vs. 𝑂 𝜀𝑛 for Lanczos)

𝜀1 ≡ 2𝜀 N+2𝑠+5 𝜃 + 4𝑠+9 𝜏 + 10𝑠+16 Γ = 𝑂 𝜀𝑁𝜃Γ ,

where 𝜎 ≡ 𝐴

2,

𝜃𝜎 ≡

𝐴

2,

(vs. 𝑂 𝜀𝑁𝜃 for Lanczos)

𝜏𝜎 ≡ max 𝐵ℓ

ℓ≤𝑘

2

36

The amplification term

• Our definition of amplification term before was

Γ ≡ max 𝑌ℓ+ 2 ∙ 𝑌ℓ 2 ≤ 2𝑠+1 ∙ max 𝜅 𝑌ℓ

ℓ≤𝑘

ℓ≤𝑘

where we want 𝑌 |𝑦′| 2 ≤ Γ 𝑌𝑦′ 2 to hold for the computed

basis 𝑌 and any coordinate vector 𝑦′ in every iteration.

• Better, more descriptive estimate for Γ updated possible

w/tighter bounds; requires some light bookkeeping

𝑇

• Example: for bounds on 𝛽𝑖+1 𝑣𝑖𝑇 𝑣𝑖+1 and 𝑣𝑖+1

𝑣𝑖+1 − 1 , we

can use the definition

𝑌𝑘 𝑥 2

Γ𝑘,𝑗 =

max

′

′ ,𝑣 ′ ,𝑣 ′

𝑥∈{𝑤𝑘,𝑗 ,𝑢𝑘,𝑗

𝑌𝑘 𝑥 2

𝑘,𝑗 𝑘,𝑗−1 }

37

Local Rounding Errors, Classical Lanczos

𝛽𝑖+1 𝑣𝑖𝑇 𝑣𝑖+1

Measured value

𝑇

𝑣𝑖+1

𝑣𝑖+1 − 1

Upper bound (Paige 1976)

Problem: 2D Poisson, 𝑛 = 256, 𝐴 = 7.93, with random starting vector

38

Local Rounding Errors, CA-Lanczos, monomial basis, 𝑠 = 8

𝛽𝑖+1 𝑣𝑖𝑇 𝑣𝑖+1

Measured value

𝑇

𝑣𝑖+1

𝑣𝑖+1 − 1

Upper bound

Γ value

Problem: 2D Poisson, 𝑛 = 256, 𝐴 = 7.93, with random starting vector

39

Local Rounding Errors, CA-Lanczos, Newton basis, 𝑠 = 8

𝛽𝑖+1 𝑣𝑖𝑇 𝑣𝑖+1

Measured value

𝑇

𝑣𝑖+1

𝑣𝑖+1 − 1

Upper bound

Γ value

Problem: 2D Poisson, 𝑛 = 256, 𝐴 = 7.93, with random starting vector

40

Local Rounding Errors, CA-Lanczos, Chebyshev basis, 𝑠 = 8

𝛽𝑖+1 𝑣𝑖𝑇 𝑣𝑖+1

Measured value

𝑇

𝑣𝑖+1

𝑣𝑖+1 − 1

Upper bound

Γ value

Problem: 2D Poisson, 𝑛 = 256, 𝐴 = 7.93, with random starting vector

41

Paige’s results for classical Lanczos

• Using bounds on local rounding errors in Lanczos, Paige showed that

1. The computed Ritz values always lie between the extreme

eigenvalues of 𝐴 to within a small multiple of machine

precision.

2. At least one small interval containing an eigenvalue of 𝐴 is

found by the 𝑛th iteration.

3. The algorithm behaves numerically like Lanczos with full

reorthogonalization until a very close eigenvalue

approximation is found.

4. The loss of orthogonality among basis vectors follows a

rigorous pattern and implies that some Ritz values have

converged.

Do the same statements hold for CA-Lanczos?

42

Results for CA-Lanczos

• The answer is YES! …but

• Only if:

• 𝑌ℓ is numerically full rank for 0 ≤ ℓ ≤ 𝑘 and

2

• 𝜀0 ≡ 2𝜀 𝑛+11𝑠+15 Γ ≤

• i.e.,

Γ2

1

12

≤ 24𝜖 𝑛 + 11𝑠 + 15

−1

• Otherwise, e.g., can lose orthogonality due to computation

with rank-deficient basis

• How can we use this bound on Γ to design a better algorithm?

43

Ideas based on CA-Lanczos analysis

• Explicit basis orthogonalization

• Compute TSQR on 𝑌𝑘 in outer loop, use 𝑄 factor as basis

• Get Γ = 1 for only one reduction

• Dynamically changing basis size

• Run incremental condition estimate when computing 𝑠-step basis;

−1

stop when Γ 2 ≤ 24𝜖 𝑛 + 11𝑠′ + 15

is reached. Use this basis

of size 𝑠 ′ ≤ 𝑠 in this outer loop.

• Mixed precision

• For inner products, use precision 𝜖 (0) such that

−1

(0)

2

𝜖 ≤ 24Γ 𝑛 + 11𝑠 + 15

• For SpMVs, use precision 𝜖 (1) such that

𝜖 (1) ≤ 4Γ N+2𝑠+5 𝜃 + 4𝑠+9 𝜏 + 10𝑠+16

−1

44

Summary

• For high performance iterative methods, both time per iteration

and number of iterations required are important.

• CA-KSMs offer asymptotic reductions in time per iteration, but

can have the effect of increasing number of iterations required

(or causing instability)

• For CA-KSMs to be practical, must better understand finite

precision behavior (theoretically and empirically), and develop

ways to improve the methods

• Ongoing efforts in: finite precision convergence analysis for

CA-KSMs, selective use of extended precision, development

of CA preconditioners, other new techniques.

45

Thank you!

contact: erin@cs.berkeley.edu

http://www.cs.berkeley.edu/~ecc2z/