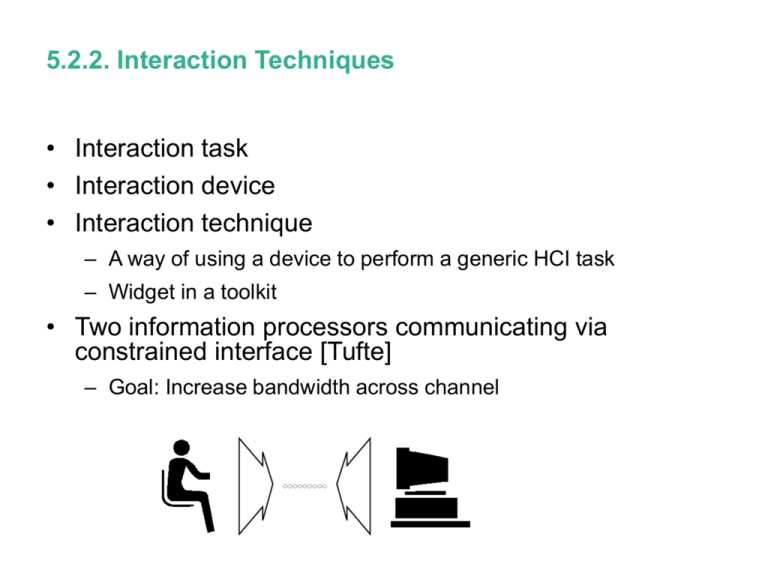

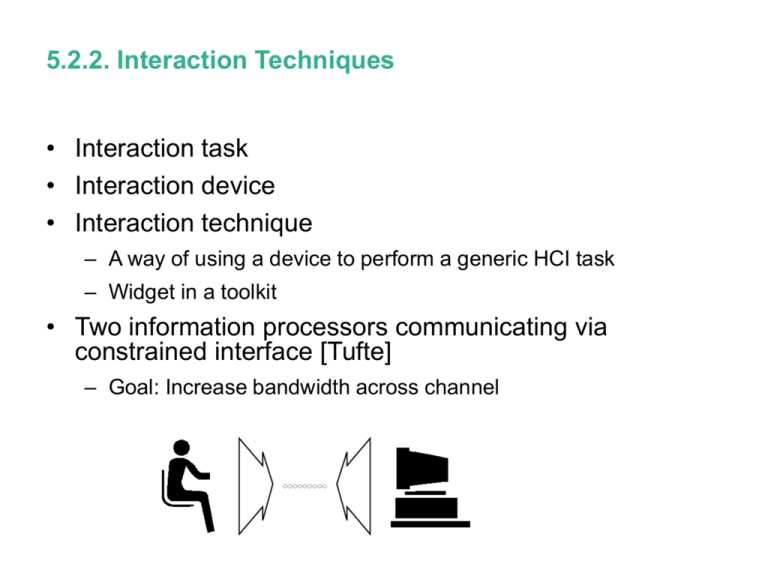

5.2.2. Interaction Techniques

• Interaction task

• Interaction device

• Interaction technique

– A way of using a device to perform a generic HCI task

– Widget in a toolkit

• Two information processors communicating via a constrained interface [Tufte]

– Goal: Increase bandwidth across channel

The Teletype

• 10 characters per second in both directions

• Photo shows Thompson and Ritchie, creators of Unix

Chord Keyboard

• Douglas Engelbart, “Mother of all Demos”

– http://sloan.stanford.edu/MouseSite/1968Demo.html

Image courtesy of Louisa Billeter on Flickr.

Image courtesy of Amarand Agasi on Flickr.

Image courtesy of Frau Bob on Flickr.

Image courtesy of Mike Marttila on Flickr.

Light Pen

Image by MIT OpenCourseWare.


Not Multi-touch

Fast accurate finger or stylus


Bare finger or conducting stylus

Not multi-touch


Other Manual Input

• Bier, Taxonomy of See-Through Tools

– https://open-video.org/details.php?videoid=4563

• Han, Perceptive Pixel

– https://www.youtube.com/watch?v=ysEVYwa-vHM

• Harrison, Skinput

– http://research.microsoft.com/enus/um/redmond/groups/cue/skinput/

Google Glass Predecessors, Steve Mann

Skype/Facetime Predecessor 1964 (!)

Interaction Tasks

• Select

• Position

• Orient

• Quantify

• Text

Selection Factors

• Continuity

• Parallelism

• Experimental results

• Costs

• Reliability

Design issues

• Control/display ratio

• Control/display compatibility

• Direction relations

• Fitts' law

• Position vs. velocity control

• Relative vs. absolute

• Direct vs. indirect

© ACM, Inc. All rights reserved.


Eye Movement-Based Interaction

• Highly-interactive, Non-WIMP, Non-command, Lightweight

– Continuous, but recognition algorithm quantizes

– Parallel, but implemented on coroutine UIMS

– Non-command, lightweight: user does not issue intentional commands

• Benefits

– Extremely rapid

– Natural, little conscious effort

– Implicitly indicate focus of attention

– “What You Look At is What You Get”

Issues

• Midas touch

– Eyes continually dart from point to point, unlike the relatively slow and deliberate operation of manual input devices

– People are not accustomed to operating devices simply by moving their eyes; if poorly done, could be very annoying

• Need to extract useful dialogue information from noisy eye data

• Need to design and study new interaction techniques

Approach to Using Eye Movements

• Philosophy

– Use natural eye movements as additional user input

– vs. trained movements as explicit commands

• Technical approach

– Process noisy, jittery eye tracker data stream to filter, recognize fixations, and turn into discrete dialogue tokens that represent user's higher-level intentions

– Then, develop generic interaction techniques based on the tokens

Other Work

• A taxonomy of approaches to eye movement-based interaction

| | Unnatural response | Natural (real-world) response |
|---|---|---|
| Unnatural (learned) eye movement | Most work, esp. disabled | N/A |
| Natural eye movement | Jacob | Starker & Bolt, Vertegaal |

Methods for Measuring Eye Movements

• Electronic

– Skin electrodes around eye

• Mechanical

– Non-slipping contact lens

• Optical/Video - Single Point

– Track some visible feature on eyeball; head stationary

• Optical/Video - Two Point

– Can distinguish between head and eye movements

Optical/Video Method

• Views of pupil, with corneal reflection

• Hardware components

Use CR-plus-pupil Method

• Track corneal reflection and outline of pupil, compute visual line of gaze from relationship of two tracked points

• Infrared illumination

• Image from pupil camera

The Eye

• Retina not uniform

– Sharp vision in fovea, approx. 1 degree

– Blurred vision elsewhere

• Must move eye to see object sharply

• Eye position thus indicates focus of attention

Types of Eye Movements Expected

• Saccade

– Rapid, ballistic, vision suppressed

– Interspersed with fixations

• Fixation

– Steady, but some jitter

• Other movements

• Eyes always moving; stabilized image disappears

Eye Tracker in Use

• Integrated with head-mounted display

Fixation Recognition

• Need to filter jitter, small saccades, eye tracker artifacts

• Moving average slows response speed; use a priori definition of fixation, then search incoming data for it

• Plot = one coordinate of eye position vs. time (3 secs.)

• Horizontal lines with o's represent fixations recognized by algorithm, when and where they would be reported
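A minimal sketch of the "a priori definition" approach above, for one coordinate of eye position. The thresholds (a spread of at most 0.5 degree over 100 ms to start a fixation, within 1 degree of the running mean to continue it) and the names `Fixation` and `find_fixations` are illustrative assumptions, not values taken from these slides.

```python
from dataclasses import dataclass
from typing import List, Tuple

Sample = Tuple[float, float]  # (time in ms, eye position in degrees)

@dataclass
class Fixation:
    start_ms: float
    end_ms: float
    position: float  # mean position over the fixation

def find_fixations(samples: List[Sample],
                   start_spread: float = 0.5,   # assumed threshold, degrees
                   stay_radius: float = 1.0,    # assumed threshold, degrees
                   min_ms: float = 100.0) -> List[Fixation]:
    fixations = []
    i, n = 0, len(samples)
    while i < n:
        # Grow a window from sample i until it spans at least min_ms.
        j = i
        while j < n and samples[j][0] - samples[i][0] < min_ms:
            j += 1
        if j >= n:
            break  # not enough data left to satisfy the definition
        window = [p for _, p in samples[i:j + 1]]
        if max(window) - min(window) > start_spread:
            i += 1  # eye was moving; slide the window forward
            continue
        # Fixation found: extend it while samples stay near the running mean.
        total, count = sum(window), len(window)
        k = j + 1
        while k < n and abs(samples[k][1] - total / count) <= stay_radius:
            total += samples[k][1]
            count += 1
            k += 1
        fixations.append(Fixation(samples[i][0], samples[k - 1][0], total / count))
        i = k
    return fixations
```

Unlike a moving average, this reports a fixation as soon as the definition is met and does not smear the position across saccades; jitter smaller than the thresholds is absorbed into the mean.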

User Interface Management System

• Turn output of recognition algorithm into stream of tokens

– EYEFIXSTART, EYEFIXCONT, EYEFIXEND, EYETRACK, EYELOST, EYEGOT

• Multiplex eye tokens into same stream as mouse, keyboard and send to coroutine-based UIMS

• Specify desired interface to UIMS as collection of concurrently executing objects; each has own syntax, which can accept eye, mouse, keyboard tokens
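The multiplexing step above can be sketched as a timestamp-ordered merge of token streams. The token names come from the slide; the `Token` type, the helper names, the mouse token name, and the 50 ms spacing of EYEFIXCONT tokens are assumptions made for illustration.

```python
import heapq
from typing import Iterable, Iterator, NamedTuple

class Token(NamedTuple):
    time_ms: int
    kind: str          # e.g. "EYEFIXSTART", or a hypothetical "MOUSEDOWN"
    data: tuple = ()

def fixation_tokens(start_ms: int, end_ms: int, x: float, y: float,
                    cont_every_ms: int = 50) -> Iterator[Token]:
    """Turn one recognized fixation into start / continue / end tokens."""
    yield Token(start_ms, "EYEFIXSTART", (x, y))
    t = start_ms + cont_every_ms  # assumed reporting interval
    while t < end_ms:
        yield Token(t, "EYEFIXCONT", (x, y))
        t += cont_every_ms
    yield Token(end_ms, "EYEFIXEND", (x, y))

def multiplex(*streams: Iterable[Token]) -> Iterator[Token]:
    """Merge time-sorted token streams (eye, mouse, keyboard) into one stream."""
    return heapq.merge(*streams, key=lambda tok: tok.time_ms)
```

Each interface object would then read from the single merged stream and react only to the token kinds its own syntax accepts.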

Interaction Techniques

• Eye tracker inappropriate as a straightforward substitute for a mouse

• Devise interaction techniques that are fast and use eye input in a natural and unobtrusive way

• Where possible, use natural eye movements as an implicit input

• Address “Midas Touch” problem

Eye as a Computer Input Device

• Faster than manual devices

• No training or coordination

• Implicitly indicates focus of attention, not just a pointing device

• Less conscious/precise control

• Eye moves constantly, even when user thinks he/she is staring at a single object

• Eye motion is necessary for perception of stationary objects

• Eye tracker is always "on"

• No analogue of mouse buttons

• Less accurate/reliable than mouse

Object Selection

• Select object from among several on screen

• After user is looking at the desired object, press button to indicate choice

• Alternative = dwell time: if look at object for sufficiently long time, it is selected without further commands

– Dwell time method is convenient, but could reduce some of speed advantage

• Poor alternative = blink.

Object Selection (continued)

• Found: Prefer dwell time method with very short time for operations where wrong choice immediately followed by correct choice is tolerable

• Long dwell time not useful in any cases, because unnatural

• Built on top of all preprocessing stages: calibration, filtering, fixation recognition

• Found: 150-250 ms. dwell time feels instantaneous, but provides enough time to accumulate data for accurate fixation recognition
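A minimal sketch of dwell time selection, assuming the earlier stages already report which object (if any) the filtered gaze is on. The function name `dwell_select`, the input format, and the 200 ms default (chosen inside the 150-250 ms range above) are illustrative assumptions.

```python
from typing import Hashable, Iterable, Iterator, Optional, Tuple

def dwell_select(gaze: Iterable[Tuple[int, Optional[Hashable]]],
                 dwell_ms: int = 200) -> Iterator[Tuple[int, Hashable]]:
    """gaze: time-ordered (time_ms, object_id or None) reports.

    Yields (time_ms, object_id) once per continuous look at an object,
    as soon as the look has lasted at least dwell_ms.
    """
    current = None   # object currently under the gaze
    since = 0        # time the gaze arrived on it
    fired = False    # whether this look has already selected it
    for t, obj in gaze:
        if obj != current:
            current, since, fired = obj, t, False
        elif obj is not None and not fired and t - since >= dwell_ms:
            fired = True
            yield (t, obj)
```

With a short dwell time, a glance at the wrong object may select it, which is why the slide recommends this method only where a wrong choice followed immediately by the right one is tolerable.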

Continuous Attribute Display

• Continuous display of attributes of selected object, instead of user requesting them explicitly

• Whenever user looks at attribute window, will see attributes for the last object looked at in main window

• If user does not look at attribute window, need not be aware that eye movements in the main window constitute commands

• Double-buffered refresh of attribute window, hardly visible unless user were looking at that window

– But of course user isn't

Moving an Object

• Two methods, both use eye position to select which object to be moved

– Hold button down, “drag” object by moving eyes, release button to stop dragging

– Eyes select object, but moving is done by holding button, dragging with mouse, then releasing button

• Found: Surprisingly, first works better

• Use filtered “fixation” tokens, not raw eye position, for dragging

Menu Commands

• Eye pull-down type menu

• Use dwell time to pop menu, then to highlight choices

– If look still longer at a choice, it is executed; else if look away, menu is removed

– Alternative: button to execute highlighted menu choice without waiting for second, longer dwell time

• Found: Better with button than long dwell time

– Long dwell time is longer than people normally fixate on one spot, hence requires “unnatural” eye movement

Eye-Controlled Scrolling Text

• Indicator appears above or below text, indicating that there is additional material not shown

• If user looks at indicator, text itself starts to scroll

• But never scrolls while user is looking at text

• User can read down to end of window, then look slightly lower, at arrow, in order to retrieve next lines

• Arrow visible above and/or below text display indicates additional scrollable material
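The scrolling rule above can be sketched as a small per-update function. The region labels (`"up_arrow"`, `"down_arrow"`, `"text"`), the function names, and the one-line scroll step are assumptions for illustration.

```python
from typing import Optional, Tuple

def visible_arrows(top_line: int, total_lines: int, window_lines: int) -> Tuple[bool, bool]:
    """Return (show_up_arrow, show_down_arrow) for the current view."""
    return (top_line > 0, top_line + window_lines < total_lines)

def scroll_step(top_line: int, total_lines: int, window_lines: int,
                gaze_region: Optional[str]) -> int:
    """Return the new top line given where the user is looking.

    Text scrolls only while the gaze is on a visible arrow; looking at
    the text itself (or anywhere else) never scrolls.
    """
    show_up, show_down = visible_arrows(top_line, total_lines, window_lines)
    if gaze_region == "down_arrow" and show_down:
        return top_line + 1
    if gaze_region == "up_arrow" and show_up:
        return top_line - 1
    return top_line
```

Calling `scroll_step` once per fixation report gives the behavior described: reading to the bottom of the window and glancing slightly lower brings in the next lines, and scrolling stops when the gaze returns to the text.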

Listener Window

• Window systems use explicit mouse command to designate active window (the one that receives keyboard inputs)

• Instead, use eye position: The active window is the one the user is looking at

• Add delays, so can look briefly at another window without changing active window designation

• Implemented on regular Sun window system (not ship display testbed)
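A minimal sketch of the delayed listener-window rule, assuming gaze reports already name the window being looked at. The class name, the `update` method, and the 500 ms delay are hypothetical; the slides do not give a delay value.

```python
from typing import Hashable, Optional

class ListenerWindow:
    """Tracks which window receives keyboard input, based on gaze."""

    def __init__(self, initial: Hashable, delay_ms: int = 500):
        self.active = initial
        self.delay_ms = delay_ms      # assumed value, for illustration only
        self._candidate: Optional[Hashable] = None
        self._since = 0

    def update(self, time_ms: int, looked_at: Optional[Hashable]) -> Hashable:
        """Report the window under the gaze; returns the active window."""
        if looked_at is None or looked_at == self.active:
            self._candidate = None    # brief glance elsewhere is forgotten
        elif looked_at != self._candidate:
            self._candidate, self._since = looked_at, time_ms
        elif time_ms - self._since >= self.delay_ms:
            self.active, self._candidate = looked_at, None
        return self.active
```

The delay means a quick look at another window and back leaves the active window unchanged; only a sustained look moves keyboard focus.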

Object Selection Experiment

• Compare dwell time object selection interaction technique to conventional selection by mouse pick

• Use simple abstract display of array of circle targets, instead of ships

• Subject must find and select one target with eye (dwell time method) or mouse

• Circle task: Highlighted item

• Letter task: Spoken name

Results

• Eye gaze selection significantly and substantially faster than mouse selection in both tasks

• Fitts slope almost flat (1.7 eye vs 117.7 mouse)

Task (time in msec.)

| Device | Circle | Letter |
|---|---|---|
| Eye gaze | 503.7 (50.56) | 1103.0 (115.93) |
| Mouse | 931.9 (97.64) | 1441.0 (114.57) |
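For context, the Fitts slope quoted in the results above is the coefficient $b$ in Fitts' law. One common statement is the Shannon formulation below, where $MT$ is movement time, $D$ is the distance to the target, $W$ is the target width, and $a$ and $b$ are fitted constants. The slides do not say which formulation the experiment used, so the exact form, and the reading of the slope as msec per bit, are assumptions.

```latex
MT = a + b \log_2\!\left(\frac{D}{W} + 1\right)
```

A slope near zero means selection time barely changes as the index of difficulty $\log_2(D/W + 1)$ grows, that is, as targets get farther away or smaller.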

Time-integrated Selection

• Alternative to “stare harder”

– Subsumed into same implementation

• Retrieve data on 2 or 3 most looked-at objects over last few minutes

• Integrate over time which objects user has looked at

• Select objects by weighted, integrated time function (vs. instantaneous look)

• Matches lightweight nature of eye input
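One way to realize a "weighted, integrated time function" is to sum fixation durations per object with a weight that decays with age. The exponential decay, the 60-second half-life, and the name `most_looked_at` are assumptions for illustration; the slides do not specify the weighting.

```python
from collections import defaultdict
from typing import Hashable, Iterable, List, Tuple

def most_looked_at(fixations: Iterable[Tuple[Hashable, float, float]],
                   now_ms: float,
                   half_life_ms: float = 60_000,   # assumed decay rate
                   top: int = 3) -> List[Hashable]:
    """fixations: (object_id, end_ms, duration_ms) for each past fixation.

    Scores each object by total looking time, halving the weight of a
    fixation for every half_life_ms that has passed since it ended,
    and returns the `top` highest-scoring objects.
    """
    scores = defaultdict(float)
    for obj, end_ms, duration_ms in fixations:
        age_ms = now_ms - end_ms
        scores[obj] += duration_ms * 0.5 ** (age_ms / half_life_ms)
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    return [obj for obj, _ in ranked[:top]]
```

Because the score accumulates over many glances, the user never has to hold a deliberate stare on any one object.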

X-ray Vision

• 1. Entire virtual room

• 2. Portion of virtual room

– No object currently selected

• 3. Stare at purple object near top

– Internal details become visible

Experimental Results

• Task: Find object with given letter hidden inside

• Result: Eye faster than Polhemus, more so for distant objects

• Extra task: Spatial memory better with Polhemus

[Bar chart: time (sec), axis 0 to 150, for Eye vs. Polhemus, with bars for close, distant, and overall]

Laptop prototype

• Lenovo + Tobii

– http://www.youtube.com/watch?v=6bYOBXIb3cQ

– http://www.youtube.com/watch?v=GFwhx0Wy8PI