Distributed Shared Memory and Sequential Consistency

Outline

Consistency Models

Memory Consistency Models

Distributed Shared Memory

Implementing Sequential Consistency in Distributed Shared Memory

Consistency

Consistency has many aspects, but at its core it is the way people reason about a system: what behavior should be considered “correct” or “suitable”.

A consistency model is the set of constraints on a system's behavior that can be observed from outside the system.

Consistency problems arise in many distributed-system settings, including DSM (distributed shared memory), multiprocessors with shared memory (where the model is called a memory model), and replicas stored on multiple servers.

Examples for consistency

Memory:

◦ step1: write x=5; step2: read x;

◦ The read in step 2 should return 5, because it follows the write and should reflect the write's effect. This is single-object consistency, also called “coherence”.

Database: Bank Transaction

◦ (transfer 1000 from acctA to acctB)

◦ ACT1: acctA=acctA-1000; ACT2: acctB=acctB+1000;

◦ acctA+acctB should stay the same. No intermediate state should be visible from the outside.

Replicas in a Distributed System

◦ All replicas of the same data should be identical despite network or server problems.
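The bank transaction can be sketched as follows. This is a minimal illustration, not a database implementation: the `Bank` class, its single lock, and the starting balances are assumptions made for the example. Both the transfer and the audit take the same lock, so an outside observer never sees the state between ACT1 and ACT2.

```python
import threading


class Bank:
    """Hypothetical two-account bank; one lock makes each transfer look atomic."""

    def __init__(self, acct_a, acct_b):
        self._lock = threading.Lock()
        self._balances = {"acctA": acct_a, "acctB": acct_b}

    def transfer(self, src, dst, amount):
        with self._lock:
            self._balances[src] -= amount  # ACT1
            self._balances[dst] += amount  # ACT2

    def total(self):
        # Taking the same lock hides the state between ACT1 and ACT2.
        with self._lock:
            return sum(self._balances.values())


bank = Bank(acct_a=5000, acct_b=5000)
observed_totals = []


def mover():
    for _ in range(1000):
        bank.transfer("acctA", "acctB", 1000)
        bank.transfer("acctB", "acctA", 1000)


def auditor():
    for _ in range(1000):
        observed_totals.append(bank.total())


threads = [threading.Thread(target=mover), threading.Thread(target=auditor)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(set(observed_totals))
```

If `total()` read the balances without the lock, the auditor could in principle observe a total that is 1000 short.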

Consistency Challenges

There is no right or wrong consistency model. Choosing one is often a tradeoff between ease of programming and efficiency.

There is no consistency problem when a single thread reads and writes the data, because a read always returns the result of the most recent write.

Thus, consistency problems arise with concurrent access to either a single object or multiple objects.

Note that these problems can be less obvious than you might think.

We will focus on building a distributed shared memory system.

Many systems involve consistency

Many systems have storage/memory with concurrent readers and writers, and all of them face consistency problems.

◦ Multiprocessors, databases, AFS, lab extent server, lab YFS

You often want to improve a system in ways that risk changing its behavior:

◦ add caching

◦ split over multiple servers

◦ replication for fault tolerance

How can we tell whether such optimizations are “correct”?

We need a way to think about correct execution of distributed programs.

Most of these ideas come from multiprocessors (memory models) and databases (transactions) 20 to 30 years ago.

The following discussion focuses on correctness and efficiency, not fault tolerance.

Distributed Shared Memory

Multiple processes connect to a virtually shared memory. The virtual shared memory may be physically located on distributed hosts connected by a network.

So, how do we implement a distributed shared memory system?

Naive Distributed Shared Memory

Each machine has a local copy of all memory (mem0, mem1, and mem2 should be kept identical).

Read: from local memory

Write: send an update message to every other host (but don’t wait)

This is fast because a process never waits for communication.

Does this memory work well?

Example 1:

M0:

v0=f0();

done0=1;

M1:

while(done0==0) ;

v1=f1(v0);

done1=1;

M2:

while(done1==0) ;

v2=f2(v0,v1);

Intuitive intent: M2 should execute f2() with results from M0 and M1; waiting for M1 implies waiting for M0.

Will the naive distributed memory work for Example 1?

Problem A

M0's writes of v0 and done0 may be reordered by the network, leaving v0 unset but done0=1.

How to fix?
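Problem A can be reproduced with a small deterministic simulation. This is only a sketch: the `Replica` class, the value 42 standing in for the result of f0(), and the explicit `delivery_order` argument that plays the role of the network are all assumptions made for illustration.

```python
class Replica:
    """One machine's local copy of the shared variables (naive DSM)."""

    def __init__(self):
        self.mem = {"v0": None, "done0": 0}

    def apply(self, update):
        name, value = update
        self.mem[name] = value


def m0_updates():
    # M0's program order: write v0 first, then done0.
    # 42 is a placeholder for the result of f0().
    return [("v0", 42), ("done0", 1)]


def v0_seen_by_m1(delivery_order):
    """Return the v0 value M1 reads right after its spin loop on done0 exits."""
    m1 = Replica()
    updates = m0_updates()
    for index in delivery_order:
        m1.apply(updates[index])
        if m1.mem["done0"] == 1:  # while(done0==0) ends here
            return m1.mem["v0"]   # this is what f1(v0) would receive
    return None


in_order = v0_seen_by_m1([0, 1])   # network preserves M0's order
reordered = v0_seen_by_m1([1, 0])  # network delivers done0 before v0
print(in_order, reordered)
```

With in-order delivery M1 reads the value M0 wrote; with the two updates swapped, M1 leaves its loop while v0 is still unset.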

Will the naive distributed memory work for Example 1?

Problem B

M2 sees M1’s writes before M0’s writes, i.e. M2 and M1 disagree on the order of M0's and M1's writes.

How to fix?

Naive distributed memory is fast

But it has unexpected behavior

Maybe it is not “correct”?

Or maybe we should never have expected Example 1 to work.

So?

How can we write correct distributed programs with shared storage?

◦ The memory system promises to behave according to certain rules.

◦ We write programs assuming those rules.

◦ These rules are a “consistency model”.

◦ This is the contract between the memory system and the programmer.

What makes a good consistency model?

There is no “right” or “wrong” consistency model.

A model may be harder to program against but efficient.

A model may be easier to program against but perform poorly.

Some consistency models may produce astonishing results.

Applications may use different kinds of models, for example for Web pages or for shared memory, according to the type of application.

Strict Consistency

How do we define strict consistency?

◦ Suppose we can tag each operation with a timestamp (global time).

◦ Suppose each operation completes instantaneously.

Thus:

◦ A read returns the most recently written value.

◦ This is what uniprocessors support.

Strict Consistency

This follows strict consistency:

◦ a=1; a=2; print a; always prints the latest value of a (2)

Is this strict consistency? (Time runs left to right; both reads are issued after the write.)

◦ P0: w(x)1

◦ P1: r(x)0 r(x)1

Strict consistency is a very intuitive consistency model.

So, would strict consistency avoid Problems A and B?

Implementation of Strict Consistency Model

How is R@2 aware of W@1?

How does W@4 know to pause until R@3 has finished? How long to wait?

This is too hard to implement.

Sequential Consistency

Sequential consistency (serializability): the result is the same as if the operations of all processors were executed in some single interleaved order, with the operations of each individual processor appearing in the order specified by its program.

Example of a sequentially consistent execution (not strictly consistent, because it violates physical time order; both reads are issued after the write):

P1: W(x)1

P2: R(x)0 R(x)1

Sequential consistency is inefficient: we want to weaken the model further

What does sequential consistency imply?

Sequential consistency defines a total order of operations:

◦ Each machine's operations (instructions) appear in the total order in the order defined by its program. The results are determined by the total order.

◦ All machines see results consistent with the total order (all machines agree on the order in which operations are applied to the shared memory). Every read sees the most recent write in the total order. All machines see the same total order.

Sequential consistency performs better than strict consistency:

◦ The system has some freedom in how to interleave operations from different machines.

◦ It is not forced to order operations by issue time (as in the strict consistency model) and can delay a read or write while it finds the current value.
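The definition can be turned into a brute-force check: a history is sequentially consistent if some interleaving that keeps each machine's program order makes every read return the latest write. The sketch below is illustrative only. The function name, the tuple encoding of operations, and the assumption that every variable starts at 0 are choices made for this example, and the search is exponential, so it only suits tiny histories.

```python
def is_sequentially_consistent(programs, initial=0):
    """programs: one list per machine of ("W", var, value) or ("R", var, value_read)."""

    def search(positions, memory):
        # All machines have finished: a valid total order exists.
        if all(pos == len(prog) for pos, prog in zip(positions, programs)):
            return True
        for p, prog in enumerate(programs):
            pos = positions[p]
            if pos == len(prog):
                continue
            kind, var, value = prog[pos]
            # A read may be placed next only if it returns the current value.
            if kind == "R" and memory.get(var, initial) != value:
                continue
            next_memory = dict(memory)
            if kind == "W":
                next_memory[var] = value
            next_positions = positions[:p] + (pos + 1,) + positions[p + 1:]
            if search(next_positions, next_memory):
                return True
        return False

    return search(tuple(0 for _ in programs), {})


# P1: W(x)1      P2: R(x)0 R(x)1
slide_example = [[("W", "x", 1)], [("R", "x", 0), ("R", "x", 1)]]
# Same write, but P2 reads the new value and then the old one.
backwards = [[("W", "x", 1)], [("R", "x", 1), ("R", "x", 0)]]
# Problem A: M1 sees done0=1 but v0 still unset (0 here).
problem_a = [[("W", "v0", 1), ("W", "done0", 1)],
             [("R", "done0", 1), ("R", "v0", 0)]]

print(is_sequentially_consistent(slide_example))
print(is_sequentially_consistent(backwards))
print(is_sequentially_consistent(problem_a))
```

The first history is accepted because the order R(x)0, W(x)1, R(x)1 explains it; the other two have no such order.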

Problems A and B in sequential consistency

Problem A

◦ M0's execution order was v0= done0=

◦ M1 saw done0= v0=

Each machine's operations must appear in execution order, so this cannot happen with sequential consistency.

Problem B

◦ M1 saw v0= done0= done1=

◦ M2 saw done1= v0=

This cannot occur given a single total order, so this cannot happen with sequential consistency.

The performance bottleneck

Once a machine’s write completes, other machines’ reads must see the new data.

Thus communication cannot be omitted or delayed for long.

Thus either reads or writes (or both) will be expensive.

The implementation of sequential consistency

Use a single server. Each machine sends its read/write operations to the server, where they are queued. (Each machine must send its operations in program order, and they must be queued in that order.)

The server picks an order among the waiting operations.

The server executes them one by one, sending back the replies.
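A minimal sketch of the single-server design, assuming a hypothetical `MemoryServer` class with one FIFO queue per machine and memory that starts at 0. The caller of `step` plays the role of the server's scheduling decision; whatever schedule is chosen, each machine's operations are executed in the order it submitted them.

```python
from collections import deque


class MemoryServer:
    """Hypothetical single memory server: one FIFO per machine, one op at a time."""

    def __init__(self):
        self.memory = {}
        self.queues = {}  # machine id -> FIFO of pending operations
        self.log = []     # the total order the server ended up choosing

    def submit(self, machine, op):
        self.queues.setdefault(machine, deque()).append(op)

    def step(self, machine):
        """Execute the oldest pending operation of `machine` and return its reply."""
        kind, var, value = self.queues[machine].popleft()
        if kind == "W":
            self.memory[var] = value
            reply = None
        else:
            reply = self.memory.get(var, 0)  # unwritten locations read as 0
        self.log.append((machine, kind, var))
        return reply


server = MemoryServer()
server.submit("M0", ("W", "v0", 7))
server.submit("M0", ("W", "done0", 1))
server.submit("M1", ("R", "done0", None))
server.submit("M1", ("R", "v0", None))

# One possible schedule picked by the server.
schedule = ["M1", "M0", "M0", "M1"]
replies = [server.step(machine) for machine in schedule]
print(replies)
```

In this schedule M1's first read is served before M0's writes and its second read after them, which is a legal interleaving.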

Performance problem of the simple implementation

A single server will soon get overloaded.

No local cache! Every operation waits for a reply from the server. (This is a severe performance killer for multicore processors.)

So:

◦ Partition memory across multiple servers to eliminate the single-server bottleneck.

◦ Can serve many machines in parallel if they don’t use the same memory.

Lamport's 1979 paper shows that a system is sequentially consistent if:

◦ 1. each machine executes one operation at a time, waiting for it to complete.

◦ 2. each memory location executes one operation at a time, i.e. you can have lots of independent machines and memory systems.

Distributed shared memory

If a memory location is not being written, it can be replicated, i.e. cached on each machine so that reads are fast.

But we have to ensure that reads and writes are ordered:

◦ Once a write modifies the location, no read should return the old value.

◦ Must revoke cached copies before writing.

◦ This delays writes to improve read performance.

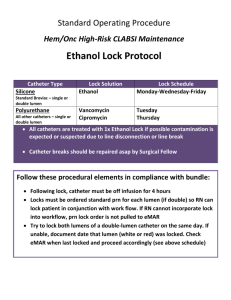

IVY: memory coherence in shared virtual memory systems (Kai Li and Paul Hudak)

IVY: Distributed Shared Memory

IVY: connects multiple desktops / servers through a LAN and provides the illusion of a single, more powerful machine.

A single powerful machine: one machine with shared memory in which all CPUs are visible to applications.

Applications can use multi-threaded programming concepts and harness the power of many machines!

Applications do not need to communicate explicitly (unlike MPI).

Page operations

IVY operates on pages of memory, stored in each machine's DRAM (there is no memory server, unlike the single-server implementation).

Uses VM (virtual memory) hardware to intercept reads/writes.

Let’s build the IVY system step by step.

Simplified IVY

Only one copy of a page at a time (on only one machine)

All other copies marked invalid in VM tables

If M0 faults on PageX (either read or write):

◦ Find the one copy, e.g. on M1

◦ Invalidate PageX on M1

◦ Move PageX to M0

◦ M0 marks the page R/W in VM tables

Provides sequential consistency: the order of reads/writes can be set by the order in which the page moves.

Slow: if an application performs many reads without any writes, this mechanism still requires many faults and page moves.

Multiple reads in IVY

IVY allows multiple reader copies between writes.

No need to force an order for reads that occur between two writes.

IVY puts a copy of the page at each reader, so the reads can be performed concurrently.

IVY core strategy

Either:

◦ multiple read-only copies and no writeable copies,

◦ or one writeable copy, no other copies

Before a write, invalidate all other copies.

Must track the one writer (owner) and the copies (copy_set)
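The core strategy amounts to an invariant on every page: at most one writable copy, and never a writable copy together with read-only copies. The sketch below checks that invariant after each fault. It is an illustration only: `PageState` and its method names are hypothetical, and networking, locking, and the manager are left out.

```python
class PageState:
    """Hypothetical bookkeeping for a single page."""

    def __init__(self, owner):
        self.owner = owner
        self.copy_set = set()       # machines holding read-only copies
        self.access = {owner: "W"}  # machine -> "R", "W", or None

    def read_fault(self, machine):
        self.access[self.owner] = "R"  # owner downgrades to read-only
        self.copy_set.add(machine)
        self.access[machine] = "R"
        self.check()

    def write_fault(self, machine):
        # Invalidate every other copy before granting write access.
        for holder in self.copy_set | {self.owner}:
            if holder != machine:
                self.access[holder] = None
        self.copy_set = set()
        self.owner = machine
        self.access[machine] = "W"
        self.check()

    def check(self):
        writers = [m for m, a in self.access.items() if a == "W"]
        readers = [m for m, a in self.access.items() if a == "R"]
        assert len(writers) <= 1, "more than one writable copy"
        assert not (writers and readers), "writable copy coexists with readers"


page = PageState(owner="M0")
page.read_fault("M1")   # M0 and M1 both hold read-only copies
page.write_fault("M2")  # both are invalidated, M2 becomes the single writer
print(page.owner, page.access)
```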

Why is it crucial to invalidate all copies before a write?

Once a write completes, all subsequent reads *must* see the new data.

Otherwise different machines might see different orders.

If one could read stale data, this could occur (operations listed in time order):

M0: wv=0, wv=99, wdone=1

M1: rv=0, rdone=1, rv=0 (the last read returns stale data)

But we know that can't happen with sequential consistency.

IVY Implementation

Manager: the process that manages the mapping between each page and its owner. The manager acts like a map that helps a process find the page it needs. In IVY, the manager can be either fixed or dynamic.

Owner: the owner of a page has the write privilege; all other processes have read-only privilege.

copy_set: stores information about the copies of a specific page. If the page is read-only, the copy_set lists the copies of the page together with their locations. If the page is writable, the copy_set contains only one entry, i.e. the owner.

IVY Messages

RQ (read query, reader to MGR)

RF (read forward, MGR to owner)

RD (read data, owner to reader)

RC (read confirm, reader to MGR)

WQ (write query, writer to MGR)

IV (invalidate, MGR to copy_set)

IC (invalidate confirm, copy_set to MGR)

WF (write forward, MGR to owner)

WD (write data, owner to writer)

WC (write confirm, writer to MGR)

Scenarios

scenario 1: M0 has writeable copy, M1 wants to read

◦ 0. page fault on M1, since page must have been marked invalid

◦ 1. M1 sends RQ to MGR

◦ 2. MGR sends RF to M0, MGR adds M1 to copy_set

◦ 3. M0 marks page as access=read, sends RD to M1

◦ 4. M1 marks access=read, sends RC to MGR

scenario 2: now M2 wants to write

◦ 0. page fault on M2

◦ 1. M2 sends WQ to MGR

◦ 2. MGR sends IV to copy_set (i.e. M1)

◦ 3. M1 sends IC msg to MGR

◦ 4. MGR sends WF to M0, sets owner=M2, copy_set={}

◦ 5. M0 sends WD to M2, access=none

◦ 6. M2 marks r/w, sends WC to MGR
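The two scenarios can be replayed as a message trace. This is a single-threaded sketch under stated assumptions: the `Ivy` class and its fields are hypothetical, messages are only recorded rather than sent, the manager is modeled as a separate party named "MGR", and the per-page locks are omitted because nothing runs concurrently here.

```python
class Ivy:
    """Hypothetical single-page model that records the protocol messages."""

    def __init__(self, machines, owner, manager="MGR"):
        self.manager = manager
        self.access = {m: None for m in machines}  # per-CPU access: "R", "W", None
        self.access[owner] = "W"
        self.owner = owner      # manager's record of the owner
        self.copy_set = set()   # manager's record of read-only copies
        self.trace = []

    def send(self, msg, src, dst):
        self.trace.append(f"{msg} {src}->{dst}")

    def read(self, reader):
        self.send("RQ", reader, self.manager)
        self.send("RF", self.manager, self.owner)
        self.copy_set.add(reader)
        self.access[self.owner] = "R"
        self.send("RD", self.owner, reader)
        self.access[reader] = "R"
        self.send("RC", reader, self.manager)

    def write(self, writer):
        self.send("WQ", writer, self.manager)
        for holder in sorted(self.copy_set):
            if holder == writer:
                continue
            self.send("IV", self.manager, holder)
            self.access[holder] = None
            self.send("IC", holder, self.manager)
        old_owner = self.owner
        self.send("WF", self.manager, old_owner)
        self.owner, self.copy_set = writer, set()
        self.access[old_owner] = None
        self.send("WD", old_owner, writer)
        self.access[writer] = "W"
        self.send("WC", writer, self.manager)


ivy = Ivy(machines=["M0", "M1", "M2"], owner="M0")
ivy.read("M1")   # scenario 1
ivy.write("M2")  # scenario 2
for line in ivy.trace:
    print(line)
```

The printed trace follows the numbered steps above: RQ, RF, RD, RC for the read, then WQ, IV, IC, WF, WD, WC for the write.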

Per-page state kept by the machines (CPU0, CPU1, CPU2 / MGR):

ptable (all CPUs)

◦ lock

◦ access: R, W, or nil

◦ owner?: T or F

info (MGR only)

◦ lock

◦ copy_set: list of CPUs with read-only copies

◦ owner: CPU that can write page

[Figure sequence: the ptable and info state on CPU0, CPU1, and CPU2 / MGR after each message of the two scenarios: RQ, RF, RD, RC for the read by CPU1, then WQ, IV, IC, WF, WD, WC for the write, ending with CPU2 as owner with access W and copy_set = {}.]

What if Two CPUs Want to Write to the Same Page at the Same Time?

A write has several steps and modifies multiple tables.

Invariants for tables:

◦ MGR must agree with CPUs about single owner

◦ MGR must agree with CPUs about copy_set

◦ copy_set != {} must agree with read-only for owner

The write operation should thus be atomic!

What enforces atomicity?

What if there were no RC message?

What if MGR unlocked after sending RF?

◦ Could the RF be overtaken by a subsequent WF?

◦ Or does IV/IC + ptable[p].lock hold up any subsequent RF? But then the invalidate can't acquire the ptable lock: deadlock?

No IC?

◦ i.e. MGR didn't wait for holders of copies to ack?

No WC?

◦ e.g. MGR unlocked after sending WF to M0? MGR would then send subsequent RF, WF to M2 (the new owner). What if such a WF/RF arrived at M2 before WD? No problem! M2 keeps ptable[p].lock locked until it gets WD. RC + info[p].lock prevents RF from being overtaken by a WF, so it's not clear why WC is needed! But I am not confident in this conclusion.

Does IVY provide strict consistency?

No: MGR might process two Ws in the order opposite to their issue times.

No: a W may take a long time to revoke read access on other machines,

◦ so Rs may get old data long after the W is issued.

Performance

In what situations will IVY perform well?

1. Page read by many machines, written by none

2. Page written by just one machine at a time, not used at all by others

Cool that IVY moves pages around in response to changing use patterns

What about the page size?

Will a page size of e.g. 4096 bytes be good or bad?

Good if there is spatial locality, i.e. the program looks at large blocks of data

Bad if the program writes just a few bytes in a page

◦ subsequent readers copy the whole page just to get a few new bytes

Bad if there is false sharing

◦ i.e. two unrelated variables are on the same page and at least one is frequently written; the page will bounce between different machines

◦ even read-only users of a non-changing variable will get invalidations

◦ even though those computers never use the same location
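The cost of false sharing can be estimated by counting how often page ownership has to move. The sketch below is a simplified model, not IVY itself: `count_page_moves`, the addresses, and the alternating write pattern are assumptions, and only writes are modeled.

```python
PAGE_SIZE = 4096


def count_page_moves(writes):
    """writes: list of (machine, address). Count ownership transfers between machines."""
    owner = {}  # page number -> machine that currently owns the page
    moves = 0
    for machine, address in writes:
        page = address // PAGE_SIZE
        if page in owner and owner[page] != machine:
            moves += 1  # page must be invalidated and shipped to the new writer
        owner[page] = machine
    return moves


# Two unrelated variables, written alternately by M0 and M1.
same_page = [("M0", 0), ("M1", 8)] * 100               # both on page 0
separate_pages = [("M0", 0), ("M1", PAGE_SIZE)] * 100  # one variable per page

print(count_page_moves(same_page))
print(count_page_moves(separate_pages))
```

When the two variables share a page, every write after the first forces a transfer; when each has its own page, no transfers occur.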

Discussions

What about IVY's performance?

◦ after all, the point was speedup via parallelism

What's the best we could hope for in terms of performance?

◦ Nx faster on N machines

What might prevent us from getting Nx speedup?

◦ Network traffic (moving lots of pages)

◦ Locks

◦ Many machines writing the same page

◦ The application is inherently non-scalable

Summary

There must exist a total order of operations such that:

◦ All CPUs see results consistent with that total order (i.e., loads (LDs) see the most recent store (ST) in the total order)

◦ Each CPU’s instructions appear in program order in the total order

Two rules are sufficient to implement sequential consistency [Lamport, 1979]:

◦ Each CPU must execute reads and writes in program order, one at a time

◦ Each memory location must execute reads and writes in arrival order, one at a time

Thank you! Any Questions?