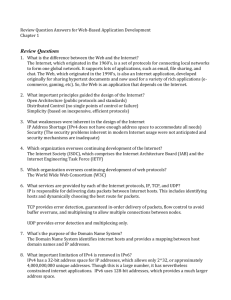

ourmon - Portland State University


Intro to Ourmon
Jim Binkley, jrb@cs.pdx.edu
Portland State University, Computer Science

Outline
- intro to ourmon, a network monitoring system
- network control and anomaly detection theory
- pictures at an exhibition of attacks
- port signatures
- Gigabit Ethernet - flow measurement
  - what happens when you receive 1,488,000 64-byte packets a second?
  - answer: not enough
- more information

the network security problem
- you used IE and downloaded a file and executed it, perhaps automagically
- you used Outlook and got email and executed it: "just click here to solve the password problem"
- you were infected by a worm/virus thingee trolling for holes, which tries to spread itself with a known set of network-based attacks
- result: your box is now controlled by a 16-year-old boy in Romania, along with 1000 others
- and may be a porn server, or is simply running an IRC client or server, or has illegal music or “warez” on it
- and your box was just used to attack Microsoft or the White House

how do the worm/virus thingees behave on the network?
1. case the joint: try to find other points to infect
   - via TCP SYN scanning of remote IPs and remote ports
   - this is just L4, TCP SYNs, no data at L7
   - ports 135-139, 445, 80, 443, 1433, 1434 are popular
   - ports like 9898 are also popular
     - and represent worms looking for worms (backdoors)
2. once they find you they can launch known and unknown attacks at you
   - now we have L7 data
3. this is a random process, although targeting of certain institutions/networks is certainly common
4. a tool like agobot can be aimed at dest X, and then dest X can be “DOSSED/scanned” with a set of attacks

motivation: what the heck is going on out there?
- Vern Paxson said: the Internet is not something you can simulate
- Comer defined the term Martian: a packet on your network that is unexpected
- the notion of well-known ports, so that you can state that port 80 is used *only* for web traffic, is defunct
  - media vendors “may” have had something to do with this
- John Gilmore said: The Internet routes around censorship
- we have more and more P2P apps where
  1. you don’t know the ports used,
  2. things are less server-centric, and
  3. crypto may be in use

a lot of this work was motivated by this problem
- gee, where’s the “anomaly”?
- now tell me what caused it … and what caused the drops too

ourmon introduction
- ourmon is a network monitoring system with some similarities/differences to traditional SNMP RMON II
  - name is a take-off on this (ourmon is not rmon)
  - Linux ntop is somewhat similar, as is Cisco netflow
- we deployed it in the PSU DMZ a number of years ago (2001)
- first emphasis on network stats
  - how many packets, how much TCP vs UDP, etc.
  - what the heck is going on out there?
- recent emphasis on detection of network anomalies

ourmon architectural overview
- a simple 2-system distributed architecture
  - front-end probe computer: gathers stats
  - back-end graphics/report processor
- front-end depends on Ethernet switch port mirroring
  - like Snort
- does NOT use ASN.1/SNMP
- summarizes/condenses data for back-end
  - data is ASCII
  - summary file is copied via an out-of-band technique: micro_httpd/wget, or scp, or rsync, or whatever

ourmon 之道 (the way of ourmon)
- aggregate the right stuff - network engineers don’t have time (aka too much info, not enuf data)
- try to do something with the data; don’t just pile it up and expect someone to “look for the pony in all of the poo”
- minimize the data in support of aggregation - as opposed to: 1 sample out of 2k
- try to not lose stuff (again, not 1 sample out of 2k)
- it’s ok to make new FLOW TUPLES
- it’s ok to look at layer 7
- it’s ok to try and make the probe smarter; it’s not a router (separate those functions)

老子曰 (Laozi said)
- 道可道非長道 (the way that can be told is not the constant way): the network will change in unexpected ways
- 名可名非長名 (the name that can be named is not the constant name): most of your signatures will be useless
- jrb says: anomaly detection is easy if you pay attention to your outputs every day

PSU network
- Gigabit Ethernet backbone including GE connection to Inet1 and Inet2
  - PREN(L3)/NWAX(L2) exchange in Pittock
  - Lambda Rail/PNW/NERO etc.
- 300+ Ethernet switches at PSU
- 10000 live ports, 5-6k hosts
- 4 logical networks: resnet, OIT, CECS, 802.11
- 10+ Cisco routers in DMZ
- 2 ourmon probes, 60k pps at peaks, 2G packets per day

PSU network DMZ: simplified overview
diagram labels:
  skaldi: Pittock
  PSU router at NWAX exchange
  DMZ net
  gig E link
  instrumentation including ourmon front-end
  local DMZ switch
  MCECS
  PUBNET
  OIT
  all (often redundant) L3 routers
  RESNET
- Firewall ACLs exist for the most part on DMZ interior routers
- ourmon.cat.pdx.edu/ourmon is within MCECS network

ourmon current deployment in PSU DMZ
diagram labels:
  Inet
  A. gigabit connection to exchange router (Inet)
  B. to Engineering
  C. to dorms, OIT, etc.
  Cisco gE switch
  gE
  ourmon graphics display box
  port-mirror of A, B, C
  2 ourmon probe boxes (P4)

ourmon architectural breakdown
diagram labels:
  pkts from NIC/kernel BPF buffer
  probe box/FreeBSD
    ourmon.conf config file
    runtime:
      1. N BPF expressions
      2. + topn (hash table) of flows and other things (lists)
      3. some hardwired C filters (scalars of interest)
    mon.lite report file
    tcpworm.txt
  graphics box/BSD or linux
    outputs:
      1. RRDTOOL strip charts
      2. histogram graphs
      3. various ASCII reports, hourly summaries
  automated tcpdump outputs (blacklist, triggers)

the front-end probe
- written in C
- input file: ourmon.conf (filter spec)
  - 1-6 BPF expressions may be grouped in a named graph, and count either packets or bytes
  - some hardwired filters written in C
  - topn filters (generate lists: #1, #2, ... #N)
  - kinds of topn tuples: flow, TCP syn, icmp/udp error
- output files: mon.lite, tcpworm.txt, etc.
  - summarization of stats by named filter
  - ASCII, but usually small (currently 5k)

the front-end probe
- typically uses a 7-8 megabyte kernel BPF buffer
- we may look at the traditional 68-byte snap size
  - a la tcpdump
  - meaning L2-L4 HEADERS only, not data
  - if you want irc, the snap size defaults to 256 bytes at this point
- due to hash tuning we rarely drop packets
  - barring massive syn attacks
- front-end is basically 2-stage
  - gather packets and count according to filter type
  - write report at 30-second alarm period

running the probe
    # ourmon.sh start
or more like:
    # ourmon -c conffile -i em0 -a 30 -D directory (optional bpf expression)
- so the name of the probe is ourmon, and it is found in /home/mrourmon/bin/ourmon or somewhere … depending on the config process
- conffile -> mrourmon/etc/ourmon.conf (supplied)

ourmon.conf filter types
1. hardwired filters are specified as:
       fixed_ipproto # tcp/udp/icmp/other pkts
   - packet capture filter cannot be removed
2. 1 user-mode bpf filter (configurable):
       bpf "ports" "ssh" "tcp port 22"
       bpf-next "p2p" "port 1214 or port 6881 or ..."
       bpf-next "web" "tcp port 80 or tcp port 443"
       bpf-next "ftp" "tcp port 20 or tcp port 21"
3. topN filter (ip flows) is just:
       topn_ip 60
   meaning top 60 flows

mon.lite output file
roughly like this:
    pkts: caught:670744 : drops:0:
    fixed_ipproto: tcp:363040008 : udp:18202658 : icmp:191109 : xtra:1230670:
    bpf:ports:0:5:ssh:6063805:p2p:75721940:web:102989812:ftp:7948:email:1175965:xtra:0
    topn_ip : 55216 : 131.252.117.82.3112>193.189.190.96.1540(tcp): 10338270 : etc
- useful for debug, but not intended for human use

back-end does graphics
- written in perl: one main program, but there are now a lot of helper programs
- uses Tobias Oetiker’s RRDTOOL for some graphs (hardwired and BPF): integers
  - as used in cricket/mrtg and other apps popular with network engineers
  - easy to baseline 1 year of data
  - logs (rrd database) have a fixed size at creation
- top N uses a histogram (our program), plus perl reports for topn data and other things
- hourly/daily summarization of interesting things

backend run from crontab
- every 30 seconds
  - runs omupdate.sh script
  - runs various perl scripts, makes rrd databases and graphics (NOT html files, which are static)
  - except it does make 2nd-level html files for top N
- hourly run for ASCII reports
  - top N daily aggregation
- midnight run for rollover of last daily/hourly report into previous day
  - thus some reports have one week of backups: today, yesterday, etc.

hardwired-filter #1: bpf counts/drops
- this happens to be yet another SQL slammer attack.
- the front-end was stressed: it lost packets due to the attack.

2. bpf filter output example
- note: xtra means any remainder and is turned off in this graph.
- note: 5 bpf filters mapped to one graph

3. OLD topN example (histogram)

config process
- after unpack, run configure.pl (and hope for the best)
- front-end in src/ourmon (may need to hack makefile)
- front-end depends on libpcap and libpcre (may need to get from web and build on own)
- back-end depends on RRDTOOL perl (get from web and install on own possibly)
  - RRDTOOL depends on libpng (sourceforge)

dependency overview
- front-end needs
  - libpcap
  - libpcre
- back-end needs
  - rrdtool (libpng)
  - and a web server (apache)
- and C plus perl, of course

reasonable install strategy
- run configure.pl
- if the ourmon front-end fails, go to src/ourmon and build with the Makefile until you get it right
  - but first install libpcap and libpcre if needed
- run the front-end; you should get output files (mon.lite, etc.) with something in them (assuming you have net traffic!!!)
- now run the back-end and see if you get .png pictures
  - if not, debug RRDTOOL by hand - it has some sample perl scripts for making pics
  - if RRDTOOL can make pics, so can ourmon

2 ways to run ourmon
1. probe/back-end on same box
   - web server access
   - 2 interfaces, one for control and one for sniffing
2. probe/back-end on different boxes
   - use scp, or wget with a small web server on the front-end probe box, so the back-end can get files
- method #1 is supported by default
- method #2 can be easily hacked into the omupdate.sh shell script (back-end shell script driver), say by using wget to get the files

probe output files
- mon.lite - nearly all RRDTOOL, top N, etc.
- tcpworm.txt - tcp port report (syn tuple filter)
- syndump.txt - local home IP version of tcp port report
- p2pdump.txt - p2p apps (needs work)
- emaildump.txt - port 25 users from tcp syn tuple
- irc - irc tuple file
- various automated tcpdump output files that do not go to the backend (blacklist, trigger files)

ourmon has taught us a few hard facts about the PSU net
- P2P never sleeps (although it does go up in the evening)
- Internet2 wanted “new” apps. It got bittorrent.
- PSU traffic is mostly TCP traffic (ditto worms)
- web and P2P are the top apps
  - bittorrent/gnutella
- PSU’s security officer spends a great deal of time chasing multimedia violations ...
  - Sorry students: Disney doesn’t like it when you serve up Shrek
  - It’s interesting that you have 1000 episodes of Friends on a server, but …

current PSU DMZ ourmon probe
- has about 60 BPF expressions grouped in 16 graphs
  - many are subnet specific (e.g., watch the dorms)
  - some are not (watch tcp control expressions)
- about 7 hardwired graphs
  - including a count of flows IP/TCP/UDP/ICMP, and a worm counter …
- topn graphs include: TCP syn’ners, IP flows (TCP/UDP/ICMP), top ports, ICMP error generators, UDP weighted errors
  - topn scans: ip src to ip dst, ip src to L4 ports
- important reports:
  - IRC tuples
  - TCP port report

ourmon and intrusion detection
- obviously it can be an anomaly detector
- McHugh/Gates paraphrase: Locality is a paradigm for thinking about normal behavior and “Outsider” threat
  - or insider threat if you are at a university with dorms
- thesis: anomaly detection focused on
  1. network control packets; e.g., TCP syns/fins/rsts
  2. errors such as ICMP packets (UDP)
  3. meta-data such as flow counts, # of hash inserts
- this is useful for scanner/worm finding

inspired by noticing this ...
- mon.lite file (reconstructed), Oct 1, 2003; note the 30-second flow counts (how many tuples):
      topn_ip: 163000: topn_tcp: 50000 topn_udp: 13000 topn_icmp: 100000
- oops ... normal icmp flow count: 1000/30 seconds
- We should have been graphing the meta-data (the flow counts).
- Widespread Nachi/Welchia worm infection in PSU dorms

actions taken as a result:
- we use BPF/RRDTOOL to graph:
  1. network “errors”: TCP resets and ICMP errors
  2. TCP syns/resets/fins
  3. ICMP unreachables (admin prohibit, host unreachable, etc.)
- we have RRDTOOL graphs for flow meta-data:
  - topN flow counts
  - tcp SYN tuple weighted scanner numbers
- topn syn graph now shows more info
  - based on ip src, sorts by a work weight, shows SYNS/FINS/RESETS
- and the port report … based on SYNs/2 functions

more new anomaly detectors
- the TCP syn tuple is a rich vein of information
  - top ip src syn’ners + front-end generates a 2nd output file, tcpworm.txt (which is processed by the backend)
  - port signature report, top wormy ports, stats
  - work metric is a measure of TCP efficiency
  - worm metric is a set of IP srcs deemed to have too many syns
- topN ICMP/UDP error
  - weighted metric for catching UDP scanners

daily topn reports are useful
- top N syn reports show us the cumulative synners over time (so far today, and days/week)
  - if many syns, few fins, few resets
    - almost certainly a scanner/worm (or trinity?)
  - many syns, same amount of fins: may be a P2P app
- ICMP error stats
  - show up both top TCP and UDP scanning hosts
  - especially in cumulative report logs
- both of the above reports show MANY infected systems (and a few that are not)

front-end syn tuple
- TCP Syn Tuple produced by the front-end:
  (ip src, syns sent, fins received, resets received, icmp errors received, total tcp pkts sent, total tcp pkts received, set of sampled ports)
- There are three underlying concepts in this tuple:
  1. 2-way info exchange
  2. control data plane
  3. attempt to sample TCP dst ports, with counts

and these metrics used in particular places
- TCP work weight (0..100%): IP src: (S(s) + F(s) + R(r)) / total pkts
- TCP worm weight (low-pass filter): IP src: S(s) - F(r) > N
- TCP error weight: IP src: (S - F) * (R + ICMP_errors)
- UDP work metric: IP src: (U(s) - U(r)) * (ICMP_errors)

EWORM flag system
- TCP syn tuple only:
  - E, if error weight > 100000
  - W, if the work weight is greater than 90%
  - O (ouch), if there are few FINS returned and some SYNS; no FINS, more or less
  - R (reset), if there are RESETS
  - M (keeping mum flag), if no packets are sent back, therefore not 2-way
- what does that spell? (often it spells WOM)

6:00 am TCP attack - BPF net errors

topn RRD flow count graph

bpf TCP control

6 am TCP top syn (this filter is useful...)

topn syn syslog sort
    start log time : instances: DNS/ip : syns/fins/resets total counts end log time
    ---------------------------------------------------------------------------------------
    Wed Mar 3 00:01:04 2004: 777: host-78-50.dhcp.pdx.edu:401550:2131:2983 Wed Mar 3 07:32:36 2004
    Wed Mar 3 00:01:04 2004: 890: host-206-144.resnet.pdx.edu:378865:1356:4755 Wed Mar 3 08:01:03 2004
    Wed Mar 3 00:01:04 2004: 876: host-245-190.resnet.pdx.edu:376983:1919:8041 Wed Mar 3 08:01:03 2004
    Wed Mar 3 00:01:04 2004: 674: host-244-157.resnet.pdx.edu:348895:8468:29627 Wed Mar 3 08:01:03 2004

1st graph you see in the morning:

BPF: in or out?

BPF ICMP unreachables

hmm... size is 100.500 bytes

flow picture: UDP counts up

bpf subnet graph: OK, it came from the dorms (this is rare ..., it takes a strong signal)

top ICMP shows the culprit’s IP
- 8 out of 9 slots taken by ICMP errors back to 1 host

the worm graph (worm metric)

port signatures in tcpworm.txt
port signature report simplified:
    ip src: flags work: port-tuples
    131.252.208.59 () 39: (25,93)(2703,2) ...
    131.252.243.162 () 22: (6881,32)(6882,34)(6883,5)...
    211.73.30.187 (WOM) 100: (80,11)(135,11)(139,11)(445,11)(1025,11)(2745,11)(3127,11)(5000,11)
    211.73.30.200 (WOM) 100: port sig same as above
    212.16.220.10 (WOM) 100: (135,100)
    219.150.64.11 (WOM) 100: (5554,81)(9898,18)

tcpworm.txt stats show:
- on May 20, 2004, the top 20 ports for the “W” metric are:
    445, 135, 5554, 9898, 80, 2745, 6667, 1025, 139, 6129, 3127, 44444, 2125, 6666, 7000, 9900, 5000, 1433
- www.incidents.org reports at this time: port 135 traffic increase due to bobax.c
  - ports mentioned are 135, 445, 5000

Gigabit Ethernet speed testing
- test questions: what happens when we hit ourmon and its various kinds of filters
  1. with max MTU packets at gig E rate?
     - can we do a reasonable amount of work?
     - can we capture all the packets?
  2. with min-sized packets (64 bytes)?
     - same questions
  3. is any filter kind better/worse than any other?
     - topn in particular (answer: it is worse)
     - and by the way, roll the IP addresses (insert-only)

Gigabit Ethernet - Baseline
- acc. to TR-645-2, Princeton University, Karlin, Peterson, “Maximum Packet Rates for Full-Duplex Ethernet”:
- 3 numbers of interest for gE
  - min-packet theoretical rate: 1488 Kpps (64 bytes)
  - max-packet theoretical rate: 81.3 Kpps (1518 bytes)
  - min-packet end-end time: 672 ns
- note: the min-pkt inter-frame gap for gigabit is 96 ns (not a lot of time between packets ...)
- an IXIA 1600 packet generator can basically send min/max at those rates

test setup for ourmon/bpf measurement
diagram labels:
  ixia 1600 gE packet generator
  ixia: 1. min-sized pkts, 2. max-sized pkts
  ixia sends/recvs packets
  Packet Engines line-speed gigabit ethernet switch
  port-mirror of IXIA send port
  UNIX pc: 1.7 ghz AMD 2000, UNIX/FreeBSD, 64-bit PCI/syskonnect + ourmon/bpf

test notes
- keep in mind that the ourmon snap length is 68 bytes (includes L4 headers, not data)
  - the kernel BPF is not capturing all of a max-sized packet
- an IDS like snort must capture all of the pkt
  - it must run an arbitrary set of signatures over an individual packet
  - reconstruct flows
  - undo fragmentation
  - shove it in a database? (may I say… GAAAA)

maximum packets results
- with work including 32 bpf expressions, top N, and hardwired filters:
  - we can always capture all the packets
  - but we need an N megabyte BPF buffer in the kernel
  - add bpfs, add kernel buffer size
    - 8 megabytes (true for Linux too)
- this was about the level of real work we were doing at the time in the real world

minimum packet results
- using only the basic count/drop filter
  - NO OTHER WORK!
  - using any size of kernel buffer (didn’t matter)
- we start dropping packets at around 80 mbit speed (10% of the line rate with overhead)
  - this is only with the drop/count filter!
  - if you want to do real work, 30-50 mbits is more like it
- challenged by an opponent that has a 100 mbit NIC card ... (or distributed zombie)

why is min performance so poor?
- Two points of view that are complementary:
  1. there is not enough time to do any real work (you have 500 ns or so)
  2. the bottom half of the OS is at HW priority; interrupts prevent the top half from running (enough) to avoid drops
     - note that growing the kernel buffer doesn’t help
- research question: what is to be done?
- btw: this is why switch/router vendors usually publish performance stats on min-sized pkts.

also top N has a problem
- random inserts mean bucket lookup always fails
  - followed by memory allocation/cache churn
  - random IP src and/or random IP dst
- how to deal with this?
- one obvious answer: make sure the hash algorithm is optimized as much as possible
  - improved lookup certainly does help
  - insert is logically: lookup to find correct bucket + insert (node allocation/setup/chaining)
  - our hash bucket size was way too small ...

this explains my long-standing question
- why does ourmon sometimes drop packets?
  - you see it in the drop/count graph
  - but you couldn’t find any reason for it
- reason: we were looking at BIG things like flows, not small things like TCP syn attacks
  - which do not add up to anything in the way of a megabyte flow
  - and may be distributed
- small packets are evil...

ourmon gigabit test conclusions
- min packets are a problem
  - no filters and it still overflows
- topn needed optimization (now bpf needs optimization)
  - does the topn problem apply to route caching in routers?
- a different, parallel architecture is needed for min pkts
- consequences for the snort IDS are terrible
  - easy to construct a DOS attack that can sneak packets by snort
  - 80 mbits of 64-byte packets will likely clog it
  - to say nothing of a concerted zombie attack
  - less could always do the trick, depending on the exact circumstances and the amount of work done in the monitor

google 06/SOC result
- experiment
  - 4 hw thread (dual dual-core AMD) box with Intel NICs
  - FBSD/Linux recent versions
- threaded ourmon probe: -T threads
- threading means:
  - rfork - BSD
  - clone - linux
- per-thread BPF buffer when it makes sense (BSD)
- x86 architecture spinlocks, although low granularity

BSD results - gbits on Ge
    pkt size     fbsd   fbsdt   max
    min/64       415    415     1500
    work/64      150    255     1500
    min/750      162    162     162
    work/750     130    162     162
    min/1500     82     82      82
    work/1500    82     82      82
- work means ourmon has load (a reasonable amount of work to do)
- BSD is not polled, has 8k BPF buffer
- 415 at min/64 is due to the Intel NIC losing packets

Linux results
    pkt size     linux(def)   mmap   linux/t   max
    min/64       230          315    270       1500
    work/64      90           160    140       1500
    min/750      162          162    162       162
    work/750     70           120    140       162
    min/1500     82           82     82        82
    work/1500    70           82     82        82

current work
- event list - interesting anomaly info is put here in one place (events come from the front-end in the mon.lite file or from the backend, and are all put in the event file)
  - e.g., hey stupid, wake up and look at IRC channel N
  - or irc channel Y has 50000 hosts in it!!!
  - host X was talking to known evil host Y
- DNS top N work
- thread-based front-end (done but needs integration)
- IRC-mesh collection sans Layer 7 payloads

more ourmon information
- ourmon.cat.pdx.edu/ourmon - PSU stats and download page
- ourmon.cat.pdx.edu/ourmon/info.html - how it works (not totally out of date)
- some papers on http://www.cs.pdx.edu/~jrb
- Botnet book (www.syngress.com): 4 chapters
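
The three baseline numbers quoted for gigabit Ethernet (1488 Kpps at 64 bytes, 81.3 Kpps at 1518 bytes, 672 ns per min-sized packet) and the 96 ns inter-frame gap can be checked with a few lines of arithmetic. This is a minimal sketch, not part of ourmon. It assumes standard Ethernet framing, which the slides do not spell out: every frame also costs 8 bytes of preamble/SFD and 12 bytes of inter-frame gap on the wire. The names `wire_bits`, `max_pps`, and `frame_time_ns` are made up for illustration.

```python
# Sketch: theoretical packet rates on gigabit Ethernet.
# Assumption (standard framing, not stated on the slides): each frame is
# preceded by an 8-byte preamble/SFD and followed by a 12-byte inter-frame gap.

LINK_BPS = 1_000_000_000   # gigabit Ethernet line rate in bits per second
PREAMBLE_BYTES = 8         # preamble + start-of-frame delimiter
IFG_BYTES = 12             # minimum inter-frame gap, expressed in byte times


def wire_bits(frame_bytes: int) -> int:
    """Bits of wire time used by one frame, including preamble and gap."""
    return (frame_bytes + PREAMBLE_BYTES + IFG_BYTES) * 8


def max_pps(frame_bytes: int) -> float:
    """Theoretical maximum frames per second at line rate."""
    return LINK_BPS / wire_bits(frame_bytes)


def frame_time_ns(frame_bytes: int) -> float:
    """Nanoseconds from the start of one frame to the start of the next."""
    return wire_bits(frame_bytes) * 1e9 / LINK_BPS


if __name__ == "__main__":
    for size in (64, 1518):
        print(f"{size:5d}-byte frames: {max_pps(size) / 1000:7.1f} Kpps, "
              f"{frame_time_ns(size):7.0f} ns per frame")
    print(f"inter-frame gap: {IFG_BYTES * 8 * 1e9 / LINK_BPS:.0f} ns")
```

Under these assumptions a 64-byte frame occupies 672 bit times, so the probe has at most 672 ns per min-sized packet at line rate, which is the budget behind the "not enough time to do any real work" point.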