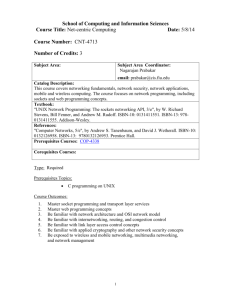

Presentation - Center for Software Engineering


Test & Evaluation/Science & Technology
Net-Centric Systems Test (NST) Focus Area Overview
NAVAIR Pt. Mugu, Executing Agent
Gil Torres

T&E/S&T Program Overview
• The T&E/S&T program:
– Exploits new technologies and processes to meet important T&E requirements
– Expedites the transition of new technologies from the laboratory environment to the T&E community
– Leverages commercial equipment, modeling and simulation, and networking innovations to support T&E
• Examines emerging requirements derived from joint service initiatives
• Identifies needed technology areas and develops a long-range roadmap for technology insertion
• Leverages research efforts from the highly developed technology base in the DoD labs, test centers, other government agencies, industry, and academia

TRMC Organization
(Organization chart. Elements shown: OSD Test Resource Management Center; Director; Deputy Director, Budget & Resources; Policy; Deputy Director, Joint Investment, Policy, & Planning; S&T Program; Net-Centric Systems Test Focus Area; CTEIP Program; InterTEC; Deputy Director, Strategic Planning; JMETC; Netcentric Test Components; Net-Centric Technology Feed.)

Net-Centric Systems Test (NST) Focus Area Overview
(Diagram: the Netcentric Test Environment, spanning GIG Services & Data and Tactical Data, with technology gaps grouped into three areas.)
• Build (Recreate Net-Centric Test Battlespace): Characterize & replicate JNO; Emulate Networks & Services; Construct NST Infrastructure; Sim/Stim Battlespace Components
• Measure (Measure & Analyze Net-Centric Test Environment): Measure & Verify NR-KPP compliance; Assess Joint Mission Effectiveness; Automate analysis and visualization
• Manage (Manage Net-Centric Test Environment): Dynamically manage & monitor Test Env.; Automated test planning & reporting; Access & interface with the Netcentric Env.
NST Vision
Accelerate the delivery of Mission Ready Net-Centric capabilities to the warfighter
(Diagram: Today's Netcentric Environment leads, through the NST Portfolio of NST T&E Advanced Technologies/Capabilities (Build, Measure, Manage), to Tomorrow's Plug & Test NetCentric Environment Solutions for the Warfighter. Inputs shown: NST T&E Needs, Technology Gaps, Broad Agency Announcement, NST Reference Architecture, SME COI, Technology Lab, Joint Architectures, Netcentric Standards, Net-Centric Policy. Net-Centricity elements marked as gaps: Decentralized Control; Enterprise Services; Shared Data; Autonomous Agent; Enterprise Architecture; Web 2.0.)

NST T&E Needs (1 of 2)
• Recreate the Net-Centric Test Battlespace
– Emulate tactical edges
– Test mission threads with limited participants
– Integration/Responsiveness of Virtual Component with Live Component
– Integration of Cyber/Information Operations with Kinetic Simulations
– Representation of the Network Infrastructure and Network Environment
– Representation of Cyber threat to NSUT
– Simulate/Stimulate Irregular Warfare for urban environment
– Verification & Validation (V&V) of the Synthetic Battle Environment (SBE)

NST T&E Needs (2 of 2)
• Measure & Analyze Net-Centric Test Environment
– Monitor/Analyze Critical Chaining Issues
– Improve Near Real Time Analysis
– Improve Instrumentation & Analysis of Cyber/IO
• Manage Net-Centric Test Battlespace
– Quality of Service to Critical Nodes
– Synchronize Synthetic Environments
– Manage Test Environment for Net Based Systems
– Manage Tactically Dynamically Configured Networks

NST Challenges: “Technologies to...”
• Recreate Net-Centric Test Battlespace
– Flexible, Scalable LVC Environment for JMe Testing
– GIG & SOA Sim/Stim Capabilities
– Integrate & Validate Net-Centric Simulations & SoS
– Emulate Red Cyber Warfare Capabilities
– Automate C2 Decision Process
• Measure & Analyze Net-Centric Test Environment
– Analyze Joint Mission Threads Near Real-Time
– Evaluate Net-Centric Data & Services
– Automated NR-KPP Analysis
– Measure Joint Mission Effectiveness (JMe)
– Analyze & Visualize Information Assurance & Operations
• Manage Net-Centric Test Battlespace
– Dynamically Manage Test Infrastructure
– Automated Planning and Scenario Development
– Cross Domain Solution Over Distributed Test Infrastructure

NST Roadmap – Recreate the Net-Centric Test Battlespace (timeline 06–20)
• T&E/S&T Investments: NE2S (Network Effects Emulator System); TRCE (TENA in a Resource Constrained Environment)
• Roadmap items: Emulate Tactical Edges; Test Mission Thread w/Limited Participants; Technologies to V&V NST Env.; Integration/Responsiveness of VC with Theatre/Live Component; Sim/Stim Irregular Warfare; Representation of Cyber Threat to NSUT; Representation of Network Infrastructure and Env.; Integrate Internet into Family of Tested Nets; Integrate IO with Kinetic Sims
• Challenges: Flexible, Scalable LVC Environment for JMe Testing; Emulate Red Cyber Warfare Capabilities; Integrate & Validate Netcentric Simulations & SoS; GIG & SOA Sim/Stim Capabilities; Automate C2 Decision Process
• T&E Capability: Recreate the Net-Centric Test Battlespace

NST Roadmap – Measure and Analyze the Net-Centric Test Environment (timeline 06–20)
• T&E/S&T Investments: NECM (NST Evaluation Capability Module); NEIV (Net-Centric Environment Instrumentation & Visualization); NSAT (NR-KPP Solution Architecture Toolset)
• Roadmap items: Monitor/Analyze Critical Chaining Issues; Improving Near Real Time Analysis; Analyze impact of Cyber and IO
• Challenges: Analyze Joint Mission Threads Near Real-Time; Evaluate Netcentric Data & Services; Automated NR-KPP Analysis; Measure Joint Mission Effectiveness (JMe); Analyze & Visualize Information Assurance & Operations
• T&E Capability: Measure and Analyze the Net-Centric Test Env.

NST Roadmap – Manage the Net-Centric Test Battlespace (timeline 06–20)
• T&E/S&T Investments: MENSA (Middleware Enhancements for Net-Centric Simulation Architecture); ANSC (Analyzer for NST Confederations); MLSCLS (Multi-Level Security Cross Layer Scheme); PAM (Policy Based Adaptive Network Management); RRM (Rapid Reconfiguration Module)
• Roadmap items: Synch Synthetic Environments; Manage Test Env. for Net-based systems; Manage Tactically Dynamic Configured Networks; QoS to Critical Nodes
• Challenges: Dynamically Manage Test Infrastructure; Cross Domain Solution Over Distributed Test Infrastructure; Automated Planning and Scenario Development
• T&E Capability: Manage the Net-Centric Test Battlespace

T&E/S&T Project Critical Factors
• T&E Need (Gaps)
– Address a known T&E technological gap
– Understanding of the current T&E capabilities and techniques
– Understanding of capabilities and techniques for the future of T&E
• S&T Challenge
– What needs to be done to effectively address the T&E Need
– Efficiencies and test capacity improvements resulting from the technology developed or matured
– Results of the project will significantly advance technology and thereby significantly enhance current test capabilities
• High Risk-High Payoff
– The project improves testing rigor and capabilities relevant to NetCentric technology improvements
– Reduces the cost of government operations
– Provides an improved capability to test operators

T&E/S&T Project Critical Factors (cont.)
• Wide Application
– The technology can be applied at multiple sites, on multiple systems, in multiple organizations simultaneously
– The technology effectively supports a distributed LVC Net-Centric test event
• Transition Strategy
– Identify potential partners and future consumers of the technology (government lab, agency, test activity, industry, etc.)
– Identify the intended use of the technology and a plan to obtain buy-in to the technology from potential transition partners
– Demonstrate a concise and sensible plan that raises the probability of a successful transition to the identified transition partners

Project Execution Plan (PEP)
• PEP required contents:
– Purpose and Work Scope
– T&E Needs
– S&T Challenges
– Technology Readiness Levels
– Budget
– Project Schedule
– Project Specifications
– Phase Exit Criteria
– Project Transition
– List of Deliverables

Project Specifications
| Specifications Parameter | Current Performance Level | Current Target | Ultimate Goal | Achieved |
|---|---|---|---|---|
| End-to-end Jitter Performance Improvement | Up to 200% (7 hops, 70% load, across continental U.S.) | Less than 20% (high priority video traffic) | Less than 5% | |
| End-to-end Packet Drop Performance Improvement | Up to 100% (7 hops, 70% load, across continental U.S.) | Less than 5% (high priority video traffic) | Less than 1% | |
| End-to-end Delay Performance Improvement | 200–500 msec (7 hops, 70% load, across continental U.S.) | <100 msec (high priority traffic) | ~50 msec | |
| Network Fault Recovery Time | Days/Hours/Minutes | 30 sec | 15 sec | |
| Test Exercises Disruption Caused by Network Problems | Minutes/Hours/Days | 30 sec | 1 sec | |

Netcentric Systems Test Technology Investments & Transitions (1 of 7)
• Dynamic Utility for Collaborative Architecture-Centric T&E (DUCAT), Georgia Tech Research Institute
– T&E Gap/Description: Inability to visualize and analyze, in real time, C2 processes, integrated architectures, and dynamic, adaptive test architectures. Enable representation of test artifacts and test architectures using XML; use data mining to capture C2 interactions and visualize mission threads as they are being executed.
– Current Status/Transition: Complete. Developed and demonstrated advanced data mining and visualization techniques that work in near-real time with agile C2 in heterogeneous networks. Prototype evaluation completed in September 09. Transitioned to NSAT project (1QFY10).
• Network Ready Architecture Evaluator (NetRAE), Raytheon
– T&E Gap/Description: Inability to perform T&E of Net-Enabled Weapon Systems operating in a Service Oriented Architecture environment. Provide a persistent and extensible infrastructure with the means of testing against the Net Ready KPP.
– Current Status/Transition: Complete. Delivered prototype toolset to extract critical data from systems architecture. Toolset for assessing service-oriented architectures provided by weapon systems for establishing Net Ready KPP compliance (1QFY10).

Netcentric Systems Test Technology Investments & Transitions (2 of 7)
• Flexible Analysis Services (FAS), JITC/Northrop Grumman
– T&E Gap/Description: Inability to provide a flexible analysis service that adapts to changes in tactical, C2, and emerging net-centric protocols and systems that dynamically optimize info exchange. Research services for collecting, parsing, transforming, analyzing, and visualizing information from diverse protocols.
– Current Status/Transition: Complete. Visualization and Reporting (FY09): conduct research, expand Phase 2 prototypes, and develop visualization and reporting prototypes. Prototype flexible, user-definable message parsing, data transformation, and analysis services (1QFY10).
• Analyzer for Netcentric Systems Test Confederations (ANSC), SRI
– T&E Gap/Description: Inability to automate complex pre-event test planning and remove human errors in developing an LVC-based test environment. Develop and demonstrate prototype analyzer software that will streamline pre-event specification and integration of systems or components into a test confederation.
– Current Status/Transition: Phase 3. Iterate Phase 2 tasks with the InterTEC Spiral 3 test event; install Analyzer and KBs in TIE Lab; SMEs to add resource descriptions to selected KBs. Prototype demo for InterTEC and DISA FDCE (3QFY10).

Netcentric Systems Test Technology Investments & Transitions (3 of 7)
• Network Effects Emulation System (NE2S)
– T&E Gap/Description: Inability to simulate and analyze network effects within a joint context to create, instrument, and analyze the impact of effects on shared situational awareness in a Netcentric environment. Develop technologies to provide an enterprise tool capable of simulating a wide range of network and host based effects that can be centrally managed and controlled.
– 3 Supported Architectures: software installed on end systems under test; hardware in the loop behind the physical network; hardware in the loop spliced into the physical network.
– (Architecture diagram. Elements shown: user host-based effects generator (software) producing CPU, memory, and network effects; HTTPS web-based access to the Control Station; SSH with public key authentication; Web Server (Apache) [User Interface]; Application Server (JBoss) [Business Logic] with Comm. Plugin Framework; Database Server (MySQL) [Persistence]; Master Station; in-line effects generator (Linux router) on the distributed network; Effects Appliance/Dedicated Hardware (e.g. SIPRNET); C2PC clients, Exchange Server, and Chat Server behind access switches; JFCOM, Suffolk, VA; GCCS.)
– Current Status/Transition: Phase 2. Research types of effects and methods to be emulated; research capability to control emulated effects such as memory exhaustion and high CPU load. Prototype demo of Federated Joint Live-Virtual-Constructive Effects Capability (3QFY11).

Netcentric Systems Test Technology Investments & Transitions (4 of 7)
• Middleware Enhancements for Netcentric Simulation Architecture (MENSA), JPL
– T&E Gap/Description: Inability to dynamically optimize information delivery, minimize network congestive failure, and overcome unreliable network environments. Improving network efficiency; improving reliability via the use of redundancy; improving utilization through dynamic coding.
– Current Status/Transition: Phase 1. Development environment covering TENA platforms; development of compression enhancements and QoS hooks. Demo during InterTEC Spiral 3 and improve Network Quality of Service for iNET (2QFY09).
• NST Evaluation Capability Module (NECM), Visense
– T&E Gap/Description: Lack of an automated tool that provides an architecture-driven JMe capability across an LVC distributed environment and works with the U.S. Joint Forces Command (USJFCOM) JACAE tool suite and operational methodology. Automating joint mission thread and net-enabled UJT analysis and event planning.
– Current Status/Transition: Phase 1. JACAE tool and schema technical exchange with NECM team, October 2009. NECM Software Client and Data Host (3QFY10).

Netcentric Systems Test Technology Investments & Transitions (5 of 7)
• Multi-Level Security Cross Layer Scheme (MLSCLS), Johns Hopkins/Applied Physics Laboratory
– T&E Gap/Description: Inability to support efficient and flexible Cross Domain/Multilevel Security (MLS) in distributed test infrastructures. Provide guaranteed access to the wireless medium while using a single channel without fixed infrastructure, and with physical layer solutions, to provide a cross layer security solution.
– Current Status/Transition: Phase 2. Research, feasibility, characterization, and selection of alternatives of flexible & efficient architectures. Fills a long range iNET requirement for test scenarios that need MLS, ad hoc & mobile protocols (2QFY2011).
• TENA in a Resource Constrained Environment (TRCE), SAIC
– T&E Gap/Description: Inability to support efficient and flexible Cross Domain/Multilevel Security (MLS) in distributed test infrastructures. Provide guaranteed access to the wireless medium while using a single channel without fixed infrastructure, and with physical layer solutions, to provide a cross layer security solution.
– (Architecture diagram. Components shown: TENA Middleware API; Callback Scheduler; TENA Objects; Diagnostics; Interests; Distributed Interest-Based Message Exchange (DIME) Object Framework; Object Queue; Rules Engine; QoS Support; The ACE ORB (TAO); Pluggable Protocols; Adaptive Communication Environment. Annotations: an object queuing system provides effective data continuity on either side of a variable quality or degraded network link; subscribers specify interests such as “Send me all ground vehicles moving less than 10 mph AND within 10 miles of my current location”; the Rules Engine handles the logic of when, where, and how to package and deliver data in a timely fashion; Quality of Service logic and algorithms deal with variable quality or degraded networks; pluggable IP based protocols support wireless and variable quality networks.)
– Current Status/Transition: Phase 2. Analyze technologies for effective network utilization; develop key technology software (SW) component proof-of-concepts. Prototype technologies evaluated in “Alpha” release of middleware (4QFY2011).

Netcentric Systems Test Technology Investments & Transitions (6 of 7)
• NR-KPP Solutions Architecture Toolkit (NSAT), GBL Systems Corp.
– T&E Gap/Description: Inability to automate testing of the Net Ready-Key Performance Parameters (NR-KPP) solutions architecture element. Provide automated compliance and conformance testing; plan mission threads for Netcentric System Test; and visualize & analyze mission thread execution through architecture views and tactical message exchanges.
– Current Status/Transition: Phase 1. Define NSAT vocabulary/ontology and associated project capabilities and interfaces; create SOA service for solutions architecture; create standardized NST inference services. Provides supporting DoDAF solutions architecture assessments and supports joint interoperability certifications within the emerging netcentric warfare (NCW) environment (4QFY10).

Netcentric Systems Test Technology Investments & Transitions (7 of 7)
• Policy-based Adaptive Network & Security Management Technology for NST (PAM), JPL
– T&E Gap/Description: Inability to automate network infrastructure configuration and recovery based on dynamic policies and cross domain data sharing. Provide “Mission_Plan-to-Network_Operations” automation and enable policy-based dynamic information sharing with Intellectual Protocol protection.
– Current Status/Transition: Phase 2. R&D on reasoning front-end software with Policy-Based Network Management software & emulated network environment; demo planned to improve InterTEC event execution. Prototype demo of Policy-Based Adaptive Network and Security Management software system (4QFY11).

Questions?
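The TRCE entry describes subscriber-specified interests, for example “Send me all ground vehicles moving less than 10 mph AND within 10 miles of my current location,” so that only requested data crosses a variable quality or degraded link. The sketch below is a minimal illustration of evaluating such an interest, not the TENA or DIME API: the `Track` and `Interest` types and the `select_for_subscriber` helper are hypothetical, and positions are assumed to lie on a flat local grid measured in miles.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    """Hypothetical published object (not a TENA/DIME type)."""
    track_id: str
    category: str       # e.g. "ground_vehicle"
    speed_mph: float
    x_miles: float      # position on an assumed flat local grid, in miles
    y_miles: float


@dataclass
class Interest:
    """Hypothetical subscriber interest: category AND speed bound AND radius."""
    category: str
    max_speed_mph: float
    center_x_miles: float   # subscriber's current location
    center_y_miles: float
    radius_miles: float

    def matches(self, t: Track) -> bool:
        distance = math.hypot(t.x_miles - self.center_x_miles,
                              t.y_miles - self.center_y_miles)
        return (t.category == self.category
                and t.speed_mph < self.max_speed_mph   # "moving less than 10 mph"
                and distance <= self.radius_miles)     # "within 10 miles"


def select_for_subscriber(tracks: list[Track], interest: Interest) -> list[Track]:
    """Keep only the tracks the subscriber asked for, before they are sent."""
    return [t for t in tracks if interest.matches(t)]


if __name__ == "__main__":
    interest = Interest("ground_vehicle", 10.0, 0.0, 0.0, 10.0)
    tracks = [
        Track("truck-1", "ground_vehicle", 5.0, 3.0, 4.0),   # 5 miles away, slow
        Track("truck-2", "ground_vehicle", 25.0, 1.0, 1.0),  # too fast
        Track("uav-1", "aircraft", 5.0, 0.0, 0.0),           # wrong category
        Track("truck-3", "ground_vehicle", 2.0, 12.0, 0.0),  # too far away
    ]
    print([t.track_id for t in select_for_subscriber(tracks, interest)])  # prints ['truck-1']
```

Filtering before transmission means non-matching tracks never consume bandwidth on the constrained link, which fits TRCE's focus on effective network utilization; a fielded version would also need geodetic distance and handling for interests that change as the subscriber moves.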
T-E Web Monthly Reports
• Monthly reporting timeliness is critical
– Timely report submission is important to effectively communicate project status
– Submission to the Deputy EA is required by the 5th of each month for the prior month
• Content:
– Executive Summary of the project
– Funding O&E
– Activities for the month just completed
– Significant accomplishments
– Planned efforts for the next month
– Problems/Issues
– Brief explanation of the use of funds

FAM Monthly Reports
• Focus Area Monthly (FAM) reports
– EA is required to submit to PMO on the last Friday of each month
– Highlight significant (positive and negative) events or issues in projects
– Not a substitute for more timely notification to PMO of highly significant issues or events, such as issues requiring immediate action or events that could result in “bad press”
• FAM reports are now auto-generated from T-E Web, so it is very important to report significant accomplishments and issues each month
Keep the Focus Area Monthly Reports simple: no lengthy description of normal, scheduled activities, just good news and problems.

Back-Up Slides

Weekly Activity Reports
• Due to EA every Wednesday by noon Pacific Time
• Sample Content:
– Netcentric Systems Test (NST) NST Evaluation Capability Module (NECM): The NECM project is developing an automated object-relationship graph algorithm . . .
– This technology will automate the arrangement of operational activities, control logic, sequence flows, and . . .
– This algorithm enables improved user interaction to create a . . .
– During the week of 11 January 2010, the NECM team implemented an initial Joint Personnel Recovery (JPR) joint mission thread (JMT) . . .
– The future efforts will include . . .
• Status/Assessment: Green
– Early engagement of transition partners to define an appropriate use case to verify useful test technologies; project is operating on schedule and on budget.
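The reporting cadence in these slides (T-E Web reports by the 5th for the prior month, FAM reports on the last Friday of the month, weekly reports every Wednesday by noon Pacific Time) reduces to simple calendar arithmetic. A minimal sketch; the function names are hypothetical and not part of T-E Web, and `weekly_due` assumes its input is a naive datetime already expressed in Pacific local time, to avoid depending on a time-zone database.

```python
import calendar
from datetime import date, datetime, timedelta


def te_web_monthly_due(year: int, month: int) -> date:
    """T-E Web report covering (year, month) is due by the 5th of the following month."""
    if month == 12:
        return date(year + 1, 1, 5)
    return date(year, month + 1, 5)


def fam_due(year: int, month: int) -> date:
    """FAM report is due on the last Friday of the month."""
    last = date(year, month, calendar.monthrange(year, month)[1])
    # weekday(): Monday == 0 ... Friday == 4; step back to the most recent Friday
    return last - timedelta(days=(last.weekday() - calendar.FRIDAY) % 7)


def weekly_due(now_pacific: datetime) -> datetime:
    """Next Wednesday-noon deadline; `now_pacific` is assumed naive Pacific local time."""
    days_ahead = (calendar.WEDNESDAY - now_pacific.weekday()) % 7
    due = (now_pacific + timedelta(days=days_ahead)).replace(
        hour=12, minute=0, second=0, microsecond=0)
    if due < now_pacific:  # already past this Wednesday's noon deadline
        due += timedelta(days=7)
    return due


print(fam_due(2010, 1))              # 2010-01-29
print(te_web_monthly_due(2009, 12))  # 2010-01-05
```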
T&E/S&T Program Roles and Responsibilities
• T&E/S&T Program Manager
– Provides guidance to focus areas to ensure a disciplined project selection and funding process
– Provides program funds for administering and executing the T&E/S&T Program
– Reviews technical execution of focus areas and projects to ensure T&E/S&T Program objectives are being met
– Reviews financial execution of focus areas and projects to ensure best value and fiscal responsibility
– Provides TESTWeb and the T&E/S&T Acquisition Community Connection (ACC) Special Interest Area to support exchange of financial, technical, and programmatic information throughout the T&E/S&T Program
• T&E/S&T Program Office Staff
– Supports the T&E/S&T Program Manager
– Reviews focus areas and projects to ensure technical objectives are being met
– Reviews focus areas and projects to ensure financial objectives are being met
– Provides recommendations to the T&E/S&T Program Manager on Program-related matters
– Interfaces with EAs, Deputies, WG members, and PIs on behalf of the T&E/S&T Program Manager as required

T&E/S&T Program Roles and Responsibilities (cont.)
• Focus Area Executing Agents
– Primary point of contact for the T&E/S&T Program Office within the focus area
– Maintain awareness of DoD T&E needs within the focus area and technology developments related to the focus area
– Actively seek out high payoff T&E/S&T projects that address critical DoD T&E needs and mitigate identified T&E risks
– Issue Requests for Information (RFIs) and Broad Agency Announcements (BAAs) through contractual channels
– Responsible for technical and financial execution of projects approved by the T&E/S&T Program Manager
– Chair the focus area working group (WG) and are responsible for maintaining Tri-Service WG T&E and S&T representation
– Coordinate replacements with the T&E/S&T Program Manager
– Responsible for maintaining current project and focus area information in TESTWeb and the T&E/S&T ACC Special Interest Area

T&E/S&T Program Roles and Responsibilities (cont.)
• Focus Area Working Group Members
– Act as government leads on projects where there is no intrinsic government lead
– Provide coordination of T&E/S&T projects with Service-related efforts
– Identify and prioritize T&E needs
– Support the EA on source selection efforts
– Review ongoing focus area projects
– Identify transition opportunities for projects
• Project Principal Investigators
– Execute projects approved by the T&E/S&T Program Manager
– Conduct research efforts
– Provide technical progress reports to the EA
– Identify and actively pursue transition opportunities for T&E/S&T projects
– Responsible for maintaining accurate financial information in TESTWeb

T&E/S&T Program Roles and Responsibilities (cont.)
• Government Lead
– Attend major focus area reviews (when able)
– Provide guidance to the project to ensure a disciplined engineering approach
– Review technical execution of the project to ensure NST Program objectives are being met and gaps are being satisfied
– Provide a quick review of monthly reports to ensure accuracy
– Interface with EAs, Deputies, WG members, and Principal Investigators as required
– Maintain awareness of identified T&E needs within the NST focus area and technology developments related to the focus area
– Support the EA on source selection efforts
– Support the EA in identifying transition opportunities for the project

Publications
• Professional publications are encouraged by the TRMC and PMO
– Provide opportunities for transition of technology
– Raise awareness of the T&E/S&T Program in new communities
• Public Release of Technical Information
– EAs adhere to local procedures for release of technical information in the EA's focus area
– Copies of all technical information to be released are to be sent to the T&E/S&T Principal Scientist prior to release
– Use the ACC Special Interest Area https://acc.dau.mil/CommunityBrowser.aspx
– Include acknowledgement of the T&E/S&T Program in all publications
– Example: “The authors would like to thank the Test Resource Management Center (TRMC) Test and Evaluation/Science and Technology (T&E/S&T) Program for their support. This work is funded (or is partially funded) by the T&E/S&T Program through the (Put your organization’s name here) contract number xxxxxx.”

Release of Programmatic Information
• The PMO must review and approve release of programmatic information prior to transmission outside the program
– Applies to detailed project status, funding levels and execution, schedule, problem areas, and potential management issues
– Also applies to Solicitations (BAAs, PRDAs, RFIs)
– Not intended to restrict information exchange between EAs or core focus area working groups
• This applies to written and verbal release of information
– For publications, PMO requires a minimum of 10 days before the EA's next suspense on the publication
– For verbal or email Requests for Information, call or email PMO for approval before information is released
– Alternatively, refer the requestor to PMO for the programmatic information

Technology Readiness Levels (TRL)
1. Basic principles observed and reported: Lowest level of technology readiness. Scientific research begins to be translated into applied research and development. Examples might include paper studies of a technology's basic properties.
2. Technology concept and/or application formulated: Invention begins. Once basic principles are observed, practical applications can be invented. The application is speculative, and there is no proof or detailed analysis to support the assumption. Examples are still limited to paper studies.
3. Analytical and experimental critical function and/or characteristic proof of concept: Active research and development is initiated. This includes analytical studies and laboratory studies to physically validate analytical predictions of separate elements of the technology. Examples include components that are not yet integrated or representative.
4. Component and/or breadboard validation in laboratory environment: Basic technological components are integrated to establish that the pieces will work together. This is relatively "low fidelity" compared to the eventual system. Examples include integration of 'ad hoc' hardware in a laboratory.
5. Component and/or breadboard validation in relevant environment: Fidelity of breadboard technology increases significantly. The basic technological components are integrated with reasonably realistic supporting elements so that the technology can be tested in a simulated environment. Examples include 'high fidelity' laboratory integration of components.
6. System/subsystem model or prototype demonstration in a relevant environment: A representative model or prototype system, which is well beyond the breadboard tested for TRL 5, is tested in a relevant environment. Represents a major step up in a technology's demonstrated readiness. Examples include testing a prototype in a high fidelity laboratory environment or in a simulated operational environment.
7. System prototype demonstration in an operational environment: Prototype near or at planned operational system. Represents a major step up from TRL 6, requiring the demonstration of an actual system prototype in an operational environment, such as in an aircraft, vehicle, or space. Examples include testing the prototype in a test bed aircraft.
8. Actual system completed and 'flight qualified' through test and demonstration: Technology has been proven to work in its final form and under expected conditions. In almost all cases, this TRL represents the end of true system development. Examples include developmental test and evaluation of the system in its intended weapon system to determine if it meets design specifications.
9. Actual system 'flight proven' through successful mission operations: Actual application of the technology in its final form and under mission conditions, such as those encountered in operational test and evaluation. In almost all cases, this is the end of the last "bug fixing" aspects of true system development. Examples include using the system under operational mission conditions.

Definitions
• Breadboard: A breadboard is an experimental model composed of representative system components used to prove the functional feasibility (in the lab) of a device, circuit, system, or principle without regard to the final configuration or packaging of the parts. Breadboard performance may not be representative of the final desired system performance, but provides an indication that desired performance can be achieved.
• Brassboard: A brassboard is a refined experimental model that provides system level performance in a relevant environment. The brassboard should be designed to meet (at a minimum) the threshold performance measures for the final system.
• Prototype: A prototype is a demonstration system that has been built to perform in an operational environment. A prototype device meets or exceeds all performance measures including size, weight, and environmental requirements. This device is suitable for complete evaluation of electrical and mechanical sub-system form, fit, and function design and characterization of the full system performance.
Accounting Definitions
• Obligations – The amount of an order placed, contract awarded, service rendered, or other transaction that legally reserves a specified amount of an appropriation or fund for expenditure
• Accruals – The costs incurred during a given period representing liabilities incurred for goods and services received, other assets acquired, and performance accepted, prior to payment being made
• Disbursements – The charges against available funds representing actual payment and evidenced by vouchers, claims, or other documents approved by competent authority
• Expenditures – The total of disbursements plus accruals
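These definitions build on one another: obligations are tracked on their own, while expenditures are defined as disbursements plus accruals. A minimal sketch that makes the relationship explicit, using Python's `decimal` module to avoid floating-point rounding on currency amounts; the `FundsStatus` record and the sample figures are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class FundsStatus:
    """Funding figures for one project and period (hypothetical record layout)."""
    obligations: Decimal    # legally reserved against the appropriation
    accruals: Decimal       # costs incurred but not yet paid
    disbursements: Decimal  # actual payments made

    def __post_init__(self) -> None:
        for name in ("obligations", "accruals", "disbursements"):
            if getattr(self, name) < 0:
                raise ValueError(f"{name} must be non-negative")

    @property
    def expenditures(self) -> Decimal:
        """Expenditures = disbursements + accruals."""
        return self.disbursements + self.accruals


status = FundsStatus(obligations=Decimal("250000.00"),
                     accruals=Decimal("18500.00"),
                     disbursements=Decimal("96500.00"))
print(status.expenditures)  # 115000.00
```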