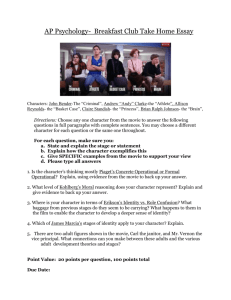

Blind (spot, alert)

advertisement

From Sentiment to Persuasion Analysis: A Look at Idea Generation Tools

Jason Kessler

Data Scientist, CDK Global

@jasonkessler

www.jasonkessler.com

Outline

• Idea generation tools
– Use large corpora to generate hypotheses about questions like:
  – How do you make a persuasive ad?
  – How can presidential candidates improve their rhetoric?
  – How do ethnicity and gender correlate with language use in online dating profiles?
  – How do movie reviews predict box-office success?
• Technical content:
– Ways of extracting category-associated words and phrases from corpora
– UX around displaying associated words and phrases and providing context for them

Customer-Written Product Reviews

Good Ad Content

Naïve Approach: Indicators of Positive Sentiment

"If you ask a Subaru owner what they think of their car, more times than not they'll tell you they love it."

- Alan Bethke, director of marketing communications for Subaru of America (via Adweek)

Positive sentiment.

Engaging language.

Finding Engaging Content

Example review appearing on a third-party automotive site

Rating: 4.375/5 stars
Car reviewed: Chevy Cruze
Text: …I was very skeptical giving up my truck and buying an "Economy Car." I'm 6' 215lbs, but my new career has me driving a personal vehicle to make sales calls. I am overly impressed with my Cruze…

Number of users who read the review: 20
Number who went on to visit a Chevy dealer's website: 15
Review engagement rate: 15/20 = 75%
Median review engagement rate: 22%
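The engagement rate is a simple ratio. A minimal Python sketch of how reviews might then be labeled "high engagement" for a classifier, assuming (our assumption, not stated in the talk) that "high" means above the median rate; `engagement_rate` and `label_high_engagement` are hypothetical helper names:

```python
from statistics import median


def engagement_rate(readers, dealer_visitors):
    """Fraction of a review's readers who went on to visit a dealer's website."""
    return dealer_visitors / readers if readers else 0.0


def label_high_engagement(reviews):
    """reviews: list of (readers, dealer_visitors) pairs.

    Returns True for each review whose rate is above the median rate.
    The above-median cutoff is an illustrative assumption.
    """
    rates = [engagement_rate(r, v) for r, v in reviews]
    cutoff = median(rates)
    return [rate > cutoff for rate in rates]


print(f"{engagement_rate(20, 15):.0%}")  # 75%
```

Using a relative cutoff like the median keeps the labels balanced regardless of how engaging the site's reviews are overall.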

Sentiment vs. Persuasiveness: SUV-Specific

Positive Sentiment

High Engagement

Love

Comfortable

Comfortable

Front [Seats]

Features

Acceleration

Solid

Free [Car Wash, Oil Change]

Amazing

Quiet

Negative Sentiment

Low Engagement

Transmission

Money [spend my, save]

Problem

Features

Issue

Dealership

Dealership

Amazing

Times

Build Quality [typically positive]

Algorithm for finding word lists

• We'll discuss algorithms later in the talk
• Basically, we rank words and phrases by the feature weights a classifier assigns to them
• Techniques and technologies used
– Unigram and bigram features (bigrams must pass a simple keyphrase test)
– Ridge classifier

High Sentiment Terms

Love

Awesome

Fantastic

Handled

Perfect

Engagement Terms

Blind (spot, alert)

Contexts from high-engagement reviews
- "The techno safety features (blind spot, lane alert, etc) are reason for buying car..."
- "Side blind Zone Alert is truly wonderful…"
- …

Can better science improve messaging?

Engagement Terms

Blind

White (paint, diamond)

Contexts
- "White with cornsilk interior."
- "My wife fell in love with the Equinox in White Diamond"
- "The white diamond paint is to die for"

Can better science improve messaging?

Engagement Terms

Blind

White

Climate (geography, a/c)

Contexts
- "Love the front wheel drive in this northern Minn. Climate"
- "We do live in a cold climate (Ontario)"
- …climate control…

Recently, VW has produced very similar commercials.

Process

• Corpus collection
• Identify documents of interest.
• Label documents with class of interest
– For CDK's usage:
  • Persuasive: high engagement rate
  • Positive: high star rating
• Find linguistic elements that are associated with the class
– Complicated! Will be a major focus of this talk
• Explain why linguistic elements are associated.
– Show representative contexts.
– Human-generated explanation.
– Statistics supporting the association
– Ideation
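Showing representative contexts can be as simple as a keyword-in-context (KWIC) listing. A sketch, with a hypothetical `keyword_in_context` helper that uses whitespace tokenization and a fixed word window (both our assumptions):

```python
import re


def keyword_in_context(docs, phrase, window=5, limit=3):
    """Return up to `limit` snippets showing `phrase` with `window` words on each side."""
    # Word boundaries stop "white" from matching inside e.g. "whitewall";
    # matching is case-insensitive, but the snippet keeps the original casing.
    pattern = re.compile(r"\b" + re.escape(phrase) + r"\b", re.IGNORECASE)
    snippets = []
    for doc in docs:
        for match in pattern.finditer(doc):
            left_words = doc[:match.start()].split()
            right_words = doc[match.end():].split()
            left = left_words[-window:] if window > 0 else []
            right = right_words[:window]
            snippets.append(" ".join(left + [match.group(0)] + right))
            if len(snippets) >= limit:
                return snippets
    return snippets


reviews = [
    "The white diamond paint is to die for",
    "My wife fell in love with the Equinox in White Diamond",
]
for snippet in keyword_in_context(reviews, "white diamond", window=2):
    print(snippet)
```

In practice the snippets would be drawn only from documents in the class of interest (e.g., high-engagement reviews), as in the "Contexts" lists above.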

Case Study 1: Language of Politics

NYT: 2012 Political Convention Word Use by Party

Mike Bostock et al., http://www.nytimes.com/interactive/2012/09/06/us/politics/convention-word-counts.html

2012 Political Convention Word Use by Party

Source: http://www.nytimes.com/interactive/2012/09/06/us/politics/convention-word-counts.html

Corpus has a class size imbalance:
- Democrats: 79k words across 123 speeches
- Republicans: 60k words across 66 speeches

"Number of mentions by spoken words"
- Normalizes the imbalance (282 vs. 182)
- More understandable than P(jobs|democrat) vs. P(jobs|republican), which are both extremely low numbers (0.36% vs. 0.30%)
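The percentages follow from dividing the mention counts by the corpus sizes. A quick check, assuming 282 and 182 are the raw "jobs" counts and using the rounded 79k/60k word totals (so the results are approximate); the per-10,000-word rate and the `per_10k_words` name are our illustrative choices:

```python
def per_10k_words(mentions, total_words):
    """Mentions per 10,000 words, so corpora of different sizes are comparable."""
    return 10_000 * mentions / total_words


dem = per_10k_words(282, 79_000)  # Democrats: ~79k words
rep = per_10k_words(182, 60_000)  # Republicans: ~60k words
# Dividing a per-10k rate by 100 gives the percentage P(word|party).
print(f"P(jobs|democrat) ~ {dem / 100:.2f}%, P(jobs|republican) ~ {rep / 100:.2f}%")
print(f"{dem:.1f} vs. {rep:.1f} mentions per 10,000 words")
```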


Summary: NYT 2012 Conventions

• Corpus: Political Convention Speeches

• Class labels: Political Party of Speaker

• Linguistic elements:

– Words and phrases

– Manually chosen

• Explanation:

– Cool bubble diagram

– Selective topic narration

– View topic contexts organized by speaker and party

• We’ll get back to this in a minute

Case Study 2: Language of Self-Representation

OKCupid: How do gender and ethnicity affect self-presentation on online dating profiles?

Which words and phrases statistically distinguish ethnic groups and genders?

hobos

almond butter

100 Years of Solitude

Bikram yoga

Christian Rudder: http://blog.okcupid.com/index.php/page/7/

OKCupid: How do ethnicities’ self-presentation differ on a dating site?

Words and phrases that distinguish white men.

Source: http://blog.okcupid.com/index.php/page/7/ (Rudder 2010)

OKCupid: How do ethnicities’ self-presentation differ on a dating site?

Words and phrases that distinguish Latino men.

Explanation

Source: http://blog.okcupid.com/index.php/page/7/ (Rudder 2010)

The explanation suggests that topic modeling may help identify the latent themes driving the distinctiveness of these words and phrases.


What can we do with this?

• Genre of insurance or investment ads

– Montage of important events in the life of a person.

• With these phrase sets, the ads practically write themselves:

• What if you wanted to target Latino men?

– Grows up boxing

– Meets girlfriend salsa dancing

– Becomes a Marine

– Tells a joke at his wedding

– Etc…

OKCupid: How do ethnicities’ self-presentation differ on a dating site?

The linguistic elements were found "statistically."

The exact method is unclear, but Rudder (2014) describes a novel method for identifying statistically associated terms.

Let's see how the algorithm works and how it performs on the political convention data set.

[Figure: schematic of Rudder's method. Each term is placed by its ranking with Democrats (top to bottom) against its ranking with Republicans (top to bottom). Plotted terms include public, worker, auto, people, stand, election, wealthy, bin laden, profit, bipartisan, grandfather, regulation, regulatory, pelosi, ann, olympics, rancher, and giraffe. Not drawn to scale.]

Association between Democrats and "worker" is the Euclidean distance between the word and the top-left corner.

Association between Republicans and "regulation" is the Euclidean distance between the word and the bottom-right corner.

Source: Christian Rudder. Dataclysm. 2014.
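One way to compute this score, sketched under stated assumptions: a term's "ranking" is taken to be the percentile rank of its raw frequency within each class, and its score is the distance to the corner where it is most frequent in the target class and least frequent in the other. The exact normalization Rudder used is not given here, and `rudder_scores` is a hypothetical name. Smaller scores mean stronger association with the target class.

```python
import numpy as np
from scipy.stats import rankdata


def rudder_scores(target_counts, other_counts):
    """target_counts / other_counts: dicts mapping term -> frequency in each class.

    Returns (term, distance) pairs sorted so the most target-associated term comes first.
    """
    terms = sorted(set(target_counts) | set(other_counts))
    t = np.array([target_counts.get(w, 0) for w in terms], dtype=float)
    o = np.array([other_counts.get(w, 0) for w in terms], dtype=float)
    # Percentile rank within each class: 1.0 = most frequent term; ties share a rank.
    t_pct = rankdata(t, method="average") / len(terms)
    o_pct = rankdata(o, method="average") / len(terms)
    # Euclidean distance to the ideal corner (1, 0):
    # top-ranked in the target class, bottom-ranked in the other class.
    dist = np.sqrt((1.0 - t_pct) ** 2 + o_pct ** 2)
    return sorted(zip(terms, dist), key=lambda tw: tw[1])


democrats = {"worker": 30, "auto": 25, "people": 40, "regulation": 1}
republicans = {"auto": 1, "people": 38, "regulation": 20}
for term, score in rudder_scores(democrats, republicans):
    print(f"{term:12s} {score:.3f}")
```

Because the score uses ranks rather than raw rates, it is insensitive to the corpus-size imbalance discussed earlier, but it also favors terms at the extremes, which is why broadly shared issues can drop out.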

Another look at the 2012 political convention data

- The conventions let political parties reach a broad audience, both energizing their bases and arguing their case to undecided voters.

- How well do these terms capture rhetorical differences between parties?


Another look at the 2012 political convention data

Applying the Rudder algorithm to the 2012 data reveals a number of terms associated with a party that weren't covered in the NYT viz.

These can uncover party talking points.

Republican Top Terms

| Term | Included in visualization? | Comment |
|---|---|---|
| olympics | no | Gov. Romney was CEO of the Organizing Committee for the 2002 Winter Olympics. |
| ann | no | Ann Romney |
| big government | no | |
| 16 [trillion] | no | Size of national debt |
| oklahoma | no | Speech by Mary Fallin, OK governor, mentioned the state numerous times. |
| elect mitt | yes | |
| next president | no | |
| the constitution | no | Mostly referring to allegedly unconstitutional actions by Pres. Obama |
| mitt 's | yes | |
| our founding | no | Founding fathers. Talk of restoring the values of the founding fathers. |
| jack | no | Republicans just seem to talk about people named Jack more. |
| 8 [percent] | no | 8% unemployment. The term "unemployment" was used in the visualization, but Democrats didn't mention the percentage. |
| they just | no | "Just don't get it" was a refrain of a Republican speaker. |
| patient | no | Discussions of the US being "patient," as well as how the ACA affects the doctor-patient relationship |
| pipeline | no | Keystone pipeline |

How well do these terms capture linguistic differences between parties?

[Figure panels: Before, After]


Another look at the 2012 political convention data

Now let’s look at the Democrats.

• The auto bailout is Pres. Obama’s 2012 Olympics.

• Government is seen as a collection of programs (Pell grants, Medicare vouchers, etc…) to help middle-class families, vs. "big government".

• Attacks on the wealthy

• No appeals to fundamental principles (“constitution,” “founding fathers”)

• Women explicitly mentioned, while Repubs. talk about Ms. Romney.

Democratic Top Terms

| Term | Included in visualization? |
|---|---|
| auto [industry] | yes |
| [move] america forward | yes* |
| insurance company | no |
| woman 's | yes |
| [the] wealthy | no |
| pell [grant] | no |
| last week | no |
| grandmother | no |
| access | no |
| millionaire | yes |
| platform | no |
| voucher | no |
| class family | yes |
| register | no |

Comments (in slide order):
- Provided in NYT. Pres. Obama was credited with the auto industry recovery.
- *Only "forward" was included in the visualization.
- Never used by Repubs.
- Never used by Repubs.
- Used to talk about the RNC, which happened the previous week.
- 6:1 ratio of Dem vs. Republican usage. Dovetails with discussion of women.
- Access to gov't services or health care
- Repubs never mentioned the party platform
- Accusing Republicans of turning Medicare into a "voucher."
- "Middle class" was included.
- Voter registration. Only used once by Repubs.

How can this aid in messaging?

• Democrats had an advantage in having their convention second

– They could refute Republican talking points

– The Republicans made Gov. Romney's role in the 2002 Olympics a

major selling point

– It went virtually unmentioned by the Democrats

• Republicans may be using numbers to their detriment:

– 8% unemployment

• Often “for 42 months” was added

– $16 trillion deficit

– These numbers are tough to interpret without a lot of context

• Romney’s “47%… …are dependent on the government, believe they are victims” comment may have been the death knell of his presidential bid

• Jeb Bush’s campaign point of “4% GDP growth” has been ineffective

Case Study 3: Movie reviews and revenue

Predicting Box-Office Revenue From Movie Reviews

- Data:

- 1,718 movie reviews from 2005-2009 across 7 different publications (e.g., Austin Chronicle, NY Times, etc.)

- Various movie metadata like rating and director

- Gross revenue

- Task:

- Predict revenue from text, couched as a regression problem

- Regressor used: Elastic Net

- l1 and l2 penalized linear regression

- 2009 reviews were held-out as test data

- Linguistic elements:

- Ngrams: unigrams, bigrams and trigrams

- Dependency relation triples: <dependent, relation, head>

- Versions of features labeled for each publication (i.e. domain)

- “Ent. Weekly: comedy_for”, “Variety: comedy_for”

- Essentially the same algorithm as Daumé III (2007)

- Performed better than naïve baseline, but worse than metadata

Joshi et al. Movie Reviews and Revenues: An Experiment in Text Regression. NAACL 2010

Daumé III. Frustratingly Easy Domain Adaptation. ACL 2007.

Predicting Box-Office Revenue From Movie Reviews

Manually labeled feature categories

The feature weight ("Weight ($M)") in the linear model indicates how much a feature is "worth" in millions of dollars.

The learned coefficients.


- 2015 follow-up work:
  - Using a convolutional neural network in place of Elastic Net

Bitvai and Cohn: Non-Linear Text Regression with a Deep Convolutional Neural Network. ACL 2015

Predicting Box-Office Revenue From Movie Reviews

- Word association for a convolutional neural network regressor
- Algorithm:
  - Compare the regressor's prediction with the phrase zeroed out in the input to the original output.
  - Impact is the difference in outputs.
  - Impact for “Hong Kong” involves running the regressor with “Hong Kong” zeroed out in the movie representation, while the unigrams “Hong” and “Kong” are unaffected.

Impact = predict({…, “Hong Kong”: 1, …}) - predict({…, “Hong Kong”: 0, …})
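The impact calculation is model-agnostic: any function mapping a feature dict to a prediction will do. A sketch, with a toy linear `toy_predict` standing in for the CNN regressor (an assumption for illustration; the weights are made up) and a hypothetical `phrase_impact` helper:

```python
from typing import Callable, Dict

Features = Dict[str, float]


def phrase_impact(predict: Callable[[Features], float], features: Features, phrase: str) -> float:
    """Prediction with the phrase present minus prediction with it zeroed out.

    Only the phrase's own feature is zeroed; overlapping features
    (e.g., the unigrams inside a bigram) keep their values.
    """
    ablated = dict(features)  # copy so the caller's features are untouched
    ablated[phrase] = 0.0
    return predict(features) - predict(ablated)


# Toy linear stand-in for the CNN regressor (illustrative weights, in $M).
weights = {"Hong Kong": 5.0, "Hong": 1.0, "Kong": 1.0}


def toy_predict(features: Features) -> float:
    return sum(weights.get(name, 0.0) * value for name, value in features.items())


review = {"Hong Kong": 1.0, "Hong": 1.0, "Kong": 1.0}
print(phrase_impact(toy_predict, review, "Hong Kong"))  # 5.0
```

For a linear model this recovers the coefficient; the point of the method is that it still works when the model is non-linear and coefficients are not directly interpretable.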


Univariate approach to predicting revenue from text

• The corpus used in Joshi et al. 2010 is freely available.

• Can we use the Rudder algorithm to find interesting associated terms? How does it compare?
– The Rudder algorithm requires two or more classes.
– We can partition the dataset into high- and low-revenue partitions.
• High: movies in the upper third of revenue; low: movies in the bottom third
– Find words that are associated with high vs. low revenue (throwing out the middle third), and vice versa
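A sketch of the partitioning step, assuming each observation is a `(name, revenue)` pair and that ties at the cut points can fall either way; `tercile_split` is a hypothetical helper:

```python
def tercile_split(movies):
    """Split (name, revenue) pairs into top-third and bottom-third revenue groups.

    The middle third is discarded. When the count is not divisible by 3,
    each outer group gets len // 3 items and the leftovers stay in the middle.
    """
    ranked = sorted(movies, key=lambda m: m[1])
    third = len(ranked) // 3
    low = ranked[:third]
    high = ranked[len(ranked) - third:]
    return high, low


movies = [(f"movie {i}", float(i)) for i in range(1, 10)]
high, low = tercile_split(movies)
print([name for name, _ in high])  # the three highest-grossing
print([name for name, _ in low])   # the three lowest-grossing
```

Dropping the middle third sharpens the contrast between classes at the cost of discarding data.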

Univariate approach to predicting revenue category from text

• Observation definition is really important!
– Recall that the same movie may have multiple reviews.
– We can treat an observation as
  • a single review
  • a single movie
– The response variable remains the same: movie revenue
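The choice matters because one heavily reviewed blockbuster can dominate review-level counts. A sketch contrasting the two definitions using document frequency (how many observations contain a term); whitespace tokenization, document frequency as the count, and the toy reviews are our assumptions, and the function names are hypothetical:

```python
from collections import Counter, defaultdict


def review_level_df(reviews):
    """Number of reviews containing each term (one observation per review)."""
    return Counter(term for _, text in reviews for term in set(text.lower().split()))


def movie_level_df(reviews):
    """Number of movies with at least one review containing each term."""
    terms_by_movie = defaultdict(set)
    for movie_id, text in reviews:
        terms_by_movie[movie_id].update(text.lower().split())
    return Counter(term for terms in terms_by_movie.values() for term in terms)


reviews = [
    ("dark knight", "Batman returns"),
    ("dark knight", "Batman is great"),
    ("dark knight", "more Batman"),
    ("iron man", "a superhero film"),
    ("hulk", "another superhero"),
]
print(review_level_df(reviews)["batman"], movie_level_df(reviews)["batman"])
print(review_level_df(reviews)["superhero"], movie_level_df(reviews)["superhero"])
```

At the review level "batman" outscores "superhero"; at the movie level the more general term wins, mirroring the shift from names like Batman to genre terms like Superhero.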

Top 5 high revenue terms (Rudder algorithm)

| Review-level observations | Movie-level observations |
|---|---|
| Batman | Computer generated |
| Borat | Superhero |
| Rodriguez | The franchise |
| Wahlberg | Comic book |
| Comic book | Popcorn |

Univariate approach to predicting revenue category from text

Top 5

Computer generated

Superhero

The franchise

Comic book

Popcorn

Bottom 5

exclusively

[Phone number]

Festival

Tribeca

With English

The algorithm failed to produce term associations around content ratings (e.g., PG-13, "strong language"), even though rating is strongly correlated with revenue.

Let’s look exclusively at PG-13 movies.

Only PG-13-rated movies

Selected Top Terms

Franchise

Computer generated

Installment

Top terms are very similar. Franchises and sequels are very successful.

Bottom terms are interesting!

The first two

The ultimate

Movies about friendship or family dynamics don't seem to perform well!

Selected Bottom Terms

[Theater-specific terms like phone numbers]

A friend

Her mother

Parent

One day

Siblings

Idea generation tools can also be idea rejection tools.
- Spiderman 15 >> PG-13 family melodrama.

Corpus selection is important in getting actionable, interpretable results!

Language use and age

Language use over time in Facebook statuses

- Best topic for each age group listed.
- LOESS regression line for prevalence by age group
- Nod to James Pennebaker

Schwartz HA, Eichstaedt JC, Kern ML, Dziurzynski L, Ramones SM, et al. (2013) Personality, Gender, and Age in the Language of Social Media: The Open Vocabulary Approach. PLoS ONE 8(9)

Word cloud pros and cons

Alternative to a word cloud: a list, ranked by phrase frequency or phrase precision.

Pro
• Word clouds force you to hunt for the most impactful terms
• You end up examining the long tail in the process
• Compactly represent a lot of phrases

Con
• Longer words are more prominent.
• "Mullet of the Internet"
• Hard to show phrase annotations.
• Ranking is unclear.
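A ranked list is straightforward to produce. A sketch ranking phrases by precision (the share of a phrase's occurrences that fall in the class of interest), with a `min_count` cutoff we added so one-off phrases don't score a perfect 1.0; `rank_by_precision` and the toy counts are hypothetical:

```python
def rank_by_precision(class_counts, total_counts, min_count=2):
    """Rank phrases by precision = (occurrences in class) / (occurrences overall).

    Phrases seen fewer than `min_count` times overall are dropped.
    Ties are broken by total frequency (descending), then alphabetically.
    """
    scored = [
        (phrase, class_counts.get(phrase, 0) / total, total)
        for phrase, total in total_counts.items()
        if total >= min_count
    ]
    return sorted(scored, key=lambda row: (-row[1], -row[2], row[0]))


class_counts = {"blind spot": 9, "dealership": 2, "love": 5}
total_counts = {"blind spot": 10, "dealership": 8, "love": 5, "rare": 1}
for phrase, precision, total in rank_by_precision(class_counts, total_counts):
    print(f"{phrase:12s} {precision:.2f} (n={total})")
```

Unlike a word cloud, the list makes the ranking and the supporting counts explicit, which leaves room for the phrase annotations that clouds struggle to show.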


CDK Global’s Language Visualization Tool

Informing dealer talk tracks.

• Suppose you are selling a car to a typical person. How would you describe the car's performance?
• Should you say
– "This car has 162 ft-lbs of torque."
– OR
– "This car makes passing on two-lane roads easy."
• Having an idea generation (and rejection) tool makes this choice very easy.

Recommendations

• Corpus and document selection are important

– Documents: movie-level instead of review-level

– Corpus: rating-specific

• Don’t always look at extreme terms
– Applied to the NYT convention data, the Rudder algorithm's top terms missed many important issues, like Medicare
• Use a variety of approaches
– Univariate and multivariate approaches can highlight different terms
• More phrase context is better than less
• When possible, present phrase lists with a speculative narrative; that is when they are most understandable.

Acknowledgements

• Thank you!

• We’re hiring

– talk to me (best) or, if you can’t, go to CDKJobs.com

• Special thanks to CDK Global BI and Data Science, including Joel Collymore (the concept of "idea generation tool"), Michael Mabale (thoughts on word clouds), Iris Laband, Peter Kahn, Michael Eggerling, Kyle Lo, Chris Mills, and Dengyao Mo

Questions? (Yes, we’re hiring!!)

Is this you?
• Data Scientist
• UI/UX Development & Design
• Software Engineer (all levels)
• Product Manager

Head to CDKJobs.com -or- talk to me
• Find “Jobs by Category”
• Click Technology
• Have your resume ready
• Click “Apply”!

@jasonkessler