Enhancing Feedback Using eGrids and Driving Tests

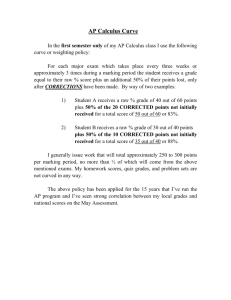

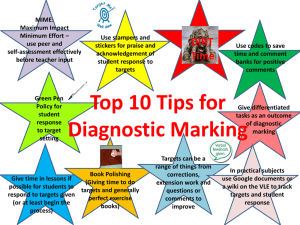

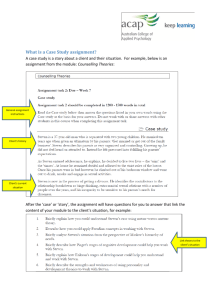


Matthew Dean
Faculty of Technology (Teaching and Learning)
mjdean@dmu.ac.uk
www.cse.dmu.ac.uk/~mjdean

Research questions
Can a combination of electronic marking grids (eGrids) and viva-based driving tests:
◦ improve the quality of student feedback?
◦ optimise the staff time spent on assessment?

"How will I cope?"
◦ Voluntary severance
◦ New duties
◦ Potentially excessive marking load

Action research approach
◦ Mock NSS results
  ◦ Entire year minus the module
  ◦ Module only
◦ Access logs
◦ Student and staff focus groups
◦ Observation and reflection

eGrids used on two second-year modules
◦ Multimedia and Internet Technology
  ◦ Existing module modified for eGrid assessment
  ◦ 4 assessment points
◦ Internet Software Development
  ◦ New module written with eGrids in mind
  ◦ 3 assessment points

Both modules
◦ 100% coursework
◦ Extensive use of online videos
◦ One 2-hour lab and one lecture
◦ All TLA provided at the start

Driving tests
◦ Viva-based assessment
◦ Allows multiple attempts
◦ Peer learning
◦ Limiting factors
  ◦ One test a week
  ◦ Three "time outs"
  ◦ Sliding scale of marks
  ◦ Only assessed in taught sessions
◦ Used so far only on "small" assessments

eGrids
◦ Confidentiality: G6.77 account
◦ Server creates the grids
◦ Read-only access for students: students may model their grades
◦ Read-write access for staff: staff update the grid with grades and feedback
◦ Split into "credit categories"
◦ Grades may be 0, 25, 50, 70 or 100%
◦ Grade is time-sensitive

Claims for credit
◦ Self and peer assessment
◦ May be limited to a single credit category
◦ Formative claims (25% claims)
  ◦ Review weeks
  ◦ Time built into taught sessions for assessment
◦ Summative claims
  ◦ After the assignment deadline (time-sensitive grade)
◦ Continuous claims
  ◦ Theoretical aspects (time-sensitive grade)

Mock NSS results (60 students over 2 programmes involving 3 staff)
Key: A = Definitely agree, B = Mostly agree, C = Neither agree nor disagree, D = Disagree, E = Definitely disagree. All figures are percentages of respondents.

| Assessment and feedback | Module only (A / B / C / D / E) | Rest of year, minus module (A / B / C / D / E) |
|---|---|---|
| The criteria used in marking have been clear in advance | 56 / 32 / 10 / 3 / 0 | 34 / 48 / 16 / 2 / 0 |
| Assessment arrangements and marking have been fair | 51 / 30 / 16 / 3 / 0 | 31 / 52 / 11 / 5 / 0 |
| Feedback on my work has been prompt | 49 / 37 / 6 / 8 / 0 | 23 / 41 / 28 / 7 / 2 |
| I have received detailed comments on my work | 33 / 40 / 22 / 2 / 3 | 23 / 30 / 36 / 7 / 5 |
| Feedback on my work has helped me clarify things I did not understand | 42 / 34 / 18 / 6 / 0 | 23 / 38 / 25 / 7 / 8 |

Staff perspective
◦ Marking is a lonely and boring activity; this approach is anything but
◦ Significant reduction in marking outside class
◦ Quite an intense process
◦ Staff get to know their students
◦ Sensitive to staff absence
◦ It can be quite hard to tell a student face to face that their work is not up to scratch
◦ Feedback must be constructive and positive
◦ Possibly demanding for staff new to teaching or new to the module content

Teaching and learning
◦ The mechanism is initially alien to both staff and students, causing some confusion, conflict and anxiety
◦ Positive impact on plagiarism: students take ownership of their work
◦ Staff and students develop a consensus on quality
◦ Moderation of work is possibly an issue

Management
◦ Time to plan prior to delivery (not a luxury I had this time)
◦ Timing of assessments: we need to give students time to reflect and engage; less may well be more!
◦ The number and nature of credit categories need to be thought through in advance
◦ Changing grids once teaching has started is a problem
◦ Collating grades needs addressing
◦ Update of staff data entry

Student perspective
◦ Students become active participants in assessment
◦ A loud cheer of "yes" when asked if they like this approach
◦ Reduced impact of lost work
◦ Not all students engage with the process (true of whatever we do!)

Next steps
◦ Carry out the remaining research activities (focus groups etc.)
◦ Obtain the views of non-engaging students

Advice
◦ Seek the advice of others
◦ Action research
◦ Obtain copies of successful applications
◦ Focus on one topic
◦ Research something you were going to do anyway
◦ Make it sexy
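The eGrid grading rules can be made concrete with a short model of how a student might "model their grades" from a read-only grid. This is a minimal sketch under stated assumptions, not the system used on these modules. Grades per credit category are restricted to 0, 25, 50, 70 or 100%, as in the original grading rules. The category names, the per-category weights and the weighted-average rule used to combine them are all hypothetical, because the slides do not say how category grades are combined. The time-sensitive aspect of grades is not modelled.

```python
from dataclasses import dataclass

# Grade levels permitted in an eGrid cell (0, 25, 50, 70 or 100%).
ALLOWED_GRADES = (0, 25, 50, 70, 100)


@dataclass
class CreditCategory:
    name: str       # hypothetical category label
    weight: float   # ASSUMPTION: relative weight; not given in the slides
    grade: int = 0  # grade recorded by staff; 0 until a claim succeeds

    def award(self, grade: int) -> None:
        """Record a grade, rejecting any value the grid does not allow."""
        if grade not in ALLOWED_GRADES:
            raise ValueError(f"grade must be one of {ALLOWED_GRADES}, got {grade}")
        self.grade = grade


def module_mark(categories: list[CreditCategory]) -> float:
    """Combine category grades into one mark.

    ASSUMPTION: a simple weighted mean; the real combination rule is not stated.
    """
    total_weight = sum(c.weight for c in categories)
    if total_weight <= 0:
        raise ValueError("total weight must be positive")
    return sum(c.grade * c.weight for c in categories) / total_weight


if __name__ == "__main__":
    # A student "what if" calculation over three hypothetical categories.
    grid = [
        CreditCategory("Page layout", 1),
        CreditCategory("Scripting", 1),
        CreditCategory("Database", 2),
    ]
    grid[0].award(70)
    grid[1].award(50)
    grid[2].award(100)
    print(module_mark(grid))  # (70 + 50 + 2 * 100) / 4 = 80.0
```

Restricting grades to a few fixed levels keeps each staff decision in a taught session quick, and a student can see exactly which category claim would raise their overall mark.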