Language-Based Security A Brief Overview

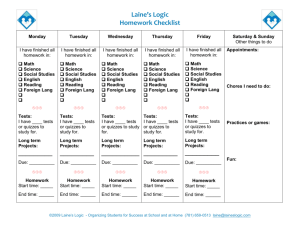

advertisement

Language-Based Security

Reference Monitors

Greg Morrisett

Cornell University

Papers for this lecture

F.B.Schneider, “Enforceable Security Policies.” In ACM Trans. on

Information and System Security, 2(4), March 2000.

R.Wahbe et al. “Efficient Software-Based Fault Isolation.” In

Proceedings of the 14th ACM Symp. on Operating Systems

Principles, pages 203--216, December 1993.

U.Erlingsson and F.B.Schneider. “SASI Enforcement of Security

Policies: A Retrospective.” Cornell Technical Report TR991758.

U.Erlingsson and F.B.Schneider. IRM Enforcement of Java Stack

Inspection. Cornell Technical Report TR2000-1786, January

2000.

D.Evans and A.Twynman. “Flexible Policy-Directed Code Safety.”

In 1999 IEEE Symposium on Security and Privacy, Oakland,

CA, May 1999. [sp99.ps]

June 2001

Lang. Based Security

2

A Reference Monitor

Observes the execution of a program and halts

the program if it’s going to violate the security

policy.

Common Examples:

– operating system (hardware-based)

– interpreters (software-based)

– firewalls

Claim: majority of today’s enforcement

mechanisms are instances of reference

monitors.

June 2001

Lang. Based Security

3

Reference Monitors Outline

• Analysis of power and limitations [Schneider]

– What is a security policy?

– What policies can reference monitors enforce?

• Traditional Operating Systems.

– Policies and practical issues

– Hardware-enforcement of OS policies.

• Software-enforcement of OS policies.

– Why?

– Software-Based Fault Isolation [Wahbe et al.]

– Inlined Reference Monitors [Urlingsson & Schneider]

June 2001

Lang. Based Security

4

Requirements for a Monitor

• Must have (reliable) access to information about what

the program is about to do.

– e.g., what instruction is it about to execute?

• Must have the ability to “stop” the program

– can’t stop a program running on another machine that you

don’t own.

– really, stopping isn’t necessary, but transition to a “good”

state.

• Must protect the monitor’s state and code from

tampering.

– key reason why a kernel’s data structures and code aren’t

accessible by user code.

• In practice, must have low overhead.

June 2001

Lang. Based Security

5

What Policies?

We’ll see that under quite liberal assumptions:

– there’s a nice class of policies that reference

monitors can enforce.

– there are desirable policies that no reference

monitor can enforce precisely.

• rejects a program if and only if it violates the policy

Assumptions:

– monitor can have access to entire state of

computation.

– monitor can have infinite state.

– but monitor can’t guess the future – the predicate

it uses to determine whether to halt a program

must be computable.

June 2001

Lang. Based Security

6

Some Definitions

Model programs as labeled transition systems:

– pP: set of possible program states

– P0 P: set of possible initial states

– : set of labels

– p1 p2 P x x P: transition relation

– : infinite sequence of states and labels

(p0,0),(p1,1),(p2,2),... such that

p00p11p22... and p0P0

– [..i]: the first i pairs in the sequence

– : set of all sequences

– p: set of all sequences starting with p

June 2001

Lang. Based Security

7

Example:

A Unix Process on an x86 machine:

– p: memory of the machine, contents of the disk,

program counter, registers, etc.

– : read(addr), write(addr), jump(addr),

syscall(args), other.

– transition relation would need to faithfully model

the x86 instructions by transforming the state and

emitting the appropriate label.

• if pc = 50, Mem[50] = “movl ebx,[eax+4]”, eax=12, and

ebx=3, then we’d transition to a state where Mem[16] = 3

and pc = 54 and the label was “write(16)”.

June 2001

Lang. Based Security

8

Security Policies

Schneider defines a policy P to be a

predicate on sets of sequences.

P : 2 bool

A program p satisfies a policy P iff

P(p) is true.

June 2001

Lang. Based Security

9

Policies

P = false

P = true

P = false

June 2001

Lang. Based Security

10

Why Sets of Sequences?

There are some conceivable policies (e.g.,

confidentiality in an information theoretic setting) that

involve statistical properties of sets of execution

sequences.

let Secret = 42 in Out := rand()

...

...

out = 0

out = 1

out = 2

out = 41 out = 42

out = 43

We don’t want to reveal anything about the secret.

• P({out:=42}) should be false

• P() should be true (i.e., admit all executions)

June 2001

Lang. Based Security

11

Back to Reference Monitors

A reference monitor only sees one execution

sequence of a program.

So we can only enforce policies P s.t.:

(1) P(S) = S.P ()

where P is a predicate on individual

sequences.

A set of execution sequences S is a property if

membership is determined solely by the

sequence and not the other members in the

set.

June 2001

Lang. Based Security

12

Properties

So, reference monitors can’t enforce security

policies that aren’t properties.

And we’ve already seen one policy regarding

information leakage that can’t be described in

terms of single execution sequences.

So reference monitors can’t enforce every

conceivable security policy. Oh well...

June 2001

Lang. Based Security

13

More Constraints on Monitors

Shouldn’t be able to “see” the future.

– Assumption: must make decisions in finite time.

P

P

– Suppose

() is true but

([..i]) is false for

some prefix [..i] of . When the monitor sees

[..i] it can’t tell whether or not the execution will

yield or some other sequence, so the best it can

do is rule out all sequences involving [..i]

including .

So in some sense, P must be continuous:

(2) .P () (i.P([..i]))

June 2001

Lang. Based Security

14

Safety Properties

A predicate P on sets of sequences s.t.

(1) P(S) = S.P ()

(2) .P () (i.P([..i]))

is a safety property: “no bad thing will happen.”

Conclusion: a reference monitor can’t enforce a

policy P unless it’s a safety property. In fact,

Schneider shows that reference monitors can

(in theory) implement any safety property.

June 2001

Lang. Based Security

15

Safety vs. Security

Safety is what we can implement, but is it what

we want?

– already saw “lack of info. flow” isn’t a property.

Safety ensures something bad won’t happen,

but it doesn’t ensure something good will

eventually happen:

– program will terminate

– program will eventually release the lock

– user will eventually make payment

These are examples of liveness properties.

– policies involving availability aren’t safety prop.

– so a ref. monitor can’t handle denial-of-service?

June 2001

Lang. Based Security

16

Safety Is Nice

Safety does have its benefits:

– They compose: if P and Q are safety

properties, then P & Q is a safety property

(just the intersection of allowed traces.)

– Safety properties can approximate liveness

by setting limits. e.g., we can determine

that a program terminates within k steps.

– We can also approximate many other

security policies (e.g., info. flow) by simply

choosing a stronger safety property.

June 2001

Lang. Based Security

17

Practical Issues

In theory, a monitor could:

– examine the entire history and the entire machine state to

decide whether or not to allow a transition.

– perform an arbitrary computation to decide whether or not to

allow a transition.

In practice, most systems:

–

–

–

–

keep a small piece of state to track history

only look at labels on the transitions

have small labels

perform simple tests

Otherwise, the overheads would be overwhelming.

– so policies are practically limited by the vocabulary of labels,

the complexity of the tests, and the state maintained by the

monitor.

June 2001

Lang. Based Security

18

Reference Monitors Outline

Analysis of the power and limitations.

What is a security policy?

What policies can reference monitors enforce?

• Traditional Operating Systems.

– Policies and practical issues

– Hardware-enforcement of OS policies.

• Software-enforcement of OS policies.

– Why?

– Software-Based Fault Isolation

– Inlined Reference Monitors

June 2001

Lang. Based Security

19

Operating Systems circa ‘75

Simple Model: system is a collection of running

processes and files.

– processes perform actions on behalf of a user.

• open, read, write files

• read, write, execute memory, etc.

– files have access control lists dictating which

users can read/write/execute/etc. the file.

(Some) High-Level Policy Goals:

– Integrity: one user’s processes shouldn’t be able

to corrupt the code, data, or files of another user.

– Availability: processes should eventually gain

access to resources such as the CPU or disk.

– Secrecy? Confidentiality? Access control?

June 2001

Lang. Based Security

20

What Can go Wrong?

– read/write/execute or change ACL of a file for

which process doesn’t have proper access.

• check file access against ACL

– process writes into memory of another process

• isolate memory of each process (& the OS!)

– process pretends it is the OS and execute its code

• maintain process ID and keep certain operations

privileged --- need some way to transition.

– process never gives up the CPU

• force process to yield in some finite time

– process uses up all the memory or disk

• enforce quotas

– OS or hardware is buggy...

June 2001

Lang. Based Security

21

Key Mechanisms in Hardware

– Translation Lookaside Buffer (TLB)

• provides an inexpensive check for each memory access.

• maps virtual address to physical address

– small, fully associative cache (8-10 entries)

– cache miss triggers a trap (see below)

– granularity of map is a page (4-8KB)

– Distinct user and supervisor modes

• certain operations (e.g., reload TLB, device access)

require supervisor bit is set.

– Invalid operations cause a trap

• set supervisor bit and transfer control to OS routine.

– Timer triggers a trap for preemption.

June 2001

Lang. Based Security

22

Steps in a System Call

Time

User Process

Kernel

calls f=fopen(“foo”)

library executes “break”

trap

calls fread(f,n,&buf)

library executes “break”

saves context, flushes TLB, etc.

checks UID against ACL, sets up IO

buffers & file context, pushes ptr to

context on user’s stack, etc.

restores context, clears supervisor bit

saves context, flushes TLB, etc.

checks f is a valid file context, does

disk access into local buffer, copies

results into user’s buffer, etc.

restores context, clears supervisor bit

June 2001

Lang. Based Security

23

Hardware Trends

The functionality provided by the hardware hasn’t

changed much over the years. Clearly, the raw

performance in terms of throughput has.

Certain trends are clear:

–

–

–

–

–

small => large # of registers: 8 16-bit =>128 64-bit

small => large pages: 4 KB => 16 KB

flushing TLB, caches is increasingly expensive

computed jumps are increasingly expensive

copying data to/from memory is increasingly expensive

So a trap into a kernel is costing more over time.

June 2001

Lang. Based Security

24

OS Trends

In the 1980’s, a big push for microkernels:

– Mach, Spring, etc.

– Only put the bare minimum into the kernel.

• context switching code, TLB management

• trap and interrupt handling

• device access

– Run everything else as a process.

• file system(s)

• networking protocols

• page replacement algorithm

– Sub-systems communicate via remote procedure

call (RPC)

– Reasons: Increase Flexibility, Minimize the TCB

June 2001

Lang. Based Security

25

A System Call in Mach

Time

User Process

Kernel

Unix Server

f=fopen(“foo”)

“break”

saves context

checks capabilities,

copies arguments

switches to Unix

server context

checks ACL, sets up

buffers, etc.

“returns” to user.

saves context

checks capabilities,

copies results

restores user’s

context

June 2001

Lang. Based Security

26

Microkernels

Claim was that flexibility and increased assurance

would win out.

– But performance overheads were non-trivial

– Many PhD’s on minimizing overheads of communication

– Even highly optimized implementations of RPC cost 2-3

orders of magnitude more than a procedure call.

Result: a backlash against the approach.

– Windows, Linux, Solaris continue the monolithic tradition.

• and continue to grow for performance reasons (e.g., GUI) and

for functionality gains (e.g., specialized file systems.)

– Mac OS X, some embedded or specialized kernels (e.g.,

Exokernel) are exceptions. VMware achieves multiple

personalities but has monolithic personalities sitting on top.

June 2001

Lang. Based Security

27

Performance Matters

The hit of crossing the kernel boundary:

– Original Apache forked a process to run each CGI:

• could attenuate file access for sub-process

• protected memory/data of server from rogue script

• i.e., closer to least privilege

– Too expensive for a small script: fork, exec, copy

data to/from the server, etc.

– So current push is to run the scripts in the server.

• i.e., throw out least privilege

Similar situation with databases, web browsers,

file systems, etc.

June 2001

Lang. Based Security

28

The Big Question?

From a least privilege perspective, many

systems should be decomposed into

separate processes. But if the

overheads of communication (i.e., traps,

copying, flushing TLB) are too great,

programmers won’t do it.

Can we achieve isolation and cheap

communication?

June 2001

Lang. Based Security

29

Reference Monitors Outline

Analysis of the power and limitations.

What is a security policy?

What policies can reference monitors enforce?

Traditional Operating Systems.

Policies and practical issues

Hardware-enforcement of OS policies.

• Software-enforcement of OS policies.

Why?

– Software-Based Fault Isolation

– Inlined Reference Monitors

June 2001

Lang. Based Security

30

Software Fault Isolation (SFI)

• Wahbe et al. (SOSP’93)

• Keep software components in same hardware-based

address space.

• Use a software-based reference monitor to isolate

components into logical address spaces.

– conceptually: check each read, write, & jump to make sure

it’s within the component’s logical address space.

– hope: communication as cheap as procedure call.

– worry: overheads of checking will swamp the benefits of

communication.

• Note: doesn’t deal with other policy issues

– e.g., availability of CPU

June 2001

Lang. Based Security

31

One Way to SFI

void interp(int pc, reg[], mem[], code[], memsz, codesz) {

while (true) {

if (pc >= codesz) exit(1);

int inst = code[pc], rd = RD(inst), rs1 = RS1(inst),

rs2 = RS2(inst), immed = IMMED(inst);

switch (opcode(inst)) {

case ADD: reg[rd] = reg[rs1] + reg[rs2]; break;

case LD: int addr = reg[rs1] + immed;

if (addr >= memsz) exit(1);

reg[rd] = mem[addr];

break;

case JMP: pc = reg[rd]; continue;

0: add r1,r2,r3

1: ld r4,r3(12)

...

}

pc++;

2: jmp r4

}}

June 2001

Lang. Based Security

32

Pros & Cons of Interpreter

Pros:

– easy to implement (small TCB.)

– works with binaries (high-level languageindependent.)

– easy to enforce other aspects of OS policy

Cons:

– terribly execution overhead (x25? x70?)

but it’s a start.

June 2001

Lang. Based Security

33

Partial Evaluation (PE)

A technique for speeding up interpreters.

– we know what the code is.

– specialize the interpreter to the code.

• unroll the loop – one copy for each instruction

• specialize the switch to the instruction

• compile the resulting code

PE is a technology that was primarily

developed here in Copenhagen.

June 2001

Lang. Based Security

34

Example PE

Original Binary:

0: add r1,r2,r3

1: ld r4,r3(12)

2: jmp r4

...

Interpreter

while (true) {

if (pc >= codesz) exit(1);

int inst = code[pc];

...

}

Specialized interpreter:

Resulting Compiled Code

reg[1] = reg[2] + reg[3];

addr = reg[3] + 12;

if (addr >= memsz) exit(1);

reg[4] = mem[addr];

pc = reg[4]

0: add r1,r2,r3

1: addi r5,r3,12

2: subi r6,r5,memsz

3: jab _exit

4: ld r4,r5(0)

...

June 2001

Lang. Based Security

35

SFI in Practice

Used a hand-written specializer or rewriter.

– Code and data for a domain in one contiguous segment.

• upper bits are all the same and form a segment id.

• separate code space to ensure code is not modified.

– Inserts code to ensure stores [optionally loads] are in the

logical address space.

• force the upper bits in the address to be the segment id

• no branch penalty – just mask the address

• may have to re-allocate registers and adjust PC-relative offsets

in code.

• simple analysis used to eliminate unnecessary masks

– Inserts code to ensure jump is to a valid target

• must be in the code segment for the domain

• must be the beginning of the translation of a source instruction

• in practice, limited to instructions with labels.

June 2001

Lang. Based Security

36

More on Jumps

• PC-relative jumps are easy:

– just adjust to the new instruction’s offset.

• Computed jumps are not:

– must ensure code doesn’t jump into or around a

check or else that it’s safe for code to do the jump.

– for this paper, they ensured the latter:

• a dedicated register is used to hold the address that’s

going to be written – so all writes are done using this

register.

• only inserted code changes this value, and it’s always

changed (atomically) with a value that’s in the data

segment.

• so at all times, the address is “valid” for writing.

• works with little overhead for almost all computed jumps.

June 2001

Lang. Based Security

37

More SFI Details

Protection vs. Sandboxing:

–

Protection covers loads as well as stores:

•

•

•

–

stronger security guarantees (e.g., reads)

required 5 dedicated registers, 4 instruction sequence

20% overhead on 1993 RISC machines

Sandboxing covers only stores

•

•

requires only 2 registers, 2 instruction sequence

5% overhead

Remote Procedure Call:

–

–

10x cost of a procedure call

10x faster than a really good OS RPC

Sequoia DB benchmarks: 2-7% overhead for

SFI compared to 18-40% overhead for OS.

June 2001

Lang. Based Security

38

Questions

• What happens on the x86?

– small # of registers

– variable-length instruction encoding

• What happens with discontiguous hunks of

memory?

• What would happen if we really didn’t trust

the extension?

– i.e., check the arguments to an RPC?

– timeouts on upcalls?

• Does this really scale to secure systems?

June 2001

Lang. Based Security

39

Inlined Reference Monitors

Schneider & Erlingsson

Generalize the idea of SFI:

– write specification of desired policy as a security

automaton.

• (Possibly infinite number of) states used to track

history/context of computation.

• Transitions correspond to labels on computation’s

sequence.

• No transition in automaton means the sequence should

be rejected.

• In principle, can enforce any safety property.

– general re-writing tool enforces the policy by

inserting appropriate state and checks.

June 2001

Lang. Based Security

40

Example Policy

“Applet can’t send a message after reading a file.”

read

has

read

start

not(read)

June 2001

Lang. Based Security

not(send)

41

SFI Policy

(retOp() && validRetPt(topOfStack)) ||

(callOp() && validCallPt()) ||

(jumpOp() && validJumpPt()) ||

(readOp() && canRead(sourceAddr)) ||

(writeOp() && canWrite(targetAddr))

June 2001

Lang. Based Security

42

1st Prototype

• SASI:

– Security Automata-based Software fault Isolation.

– One version for x86 native code, another for JVM

bytecodes.

• x86: labels correspond to opcode & operands of an

instruction, sets of addresses that can be

read/written/jumped to.

• JVM: class name, method name, method type signature,

JVML opcode, instruction operands, JVM state in which

operation will be executed.

– SAL: Security Automata specification Language

• finite list of states, list of (deterministic) transitions

• simple predicate language for transitions

June 2001

Lang. Based Security

43

Example SAL

/* Macros */

MathodCall(name) ::= op == “invokevirtual” && param[1]

== name;

FileRead() ::=

MethodCall(“java/io/FileInputStream/read()I”);

Send() :=

MethodCall(“java/net/SocketOutputStream/write(I)V”);

/* Security Automaton */

start ::=

!FileRead() -> start

FileRead() -> hasRead;

hasRead ::=

!Send() -> hasRead;

June 2001

Lang. Based Security

44

x86 Prototype

• Leveraged GCC

– code had to come from gcc

• assumes programs don’t care about nop’s.

• assumes branch targets restricted to the set of labels

identified by GCC during compilation.

• Relative to original code (std. dev)

Benchmark

MiSFIT

2.378 (0.3%)

SASI

3.643 (2.6%)

logical logstructured disk

1.576 (0.3%)

1.654 (0.5%)

MD5 message

digest

1.331 (1.4%)

1.363 (0.1%)

page evicition

hostlist

June 2001

Lang. Based Security

45

Java Prototype

• Implemented JDK 1.1 Security Manager

– more on this later in the course

– essentially: check that certain methods are

called in the right “stack” context

• Same cost as Sun’s implementation

– primarily because of partial evaluation

• Easier to see the policy

– for Sun, it’s baked into the code

June 2001

Lang. Based Security

46

Some Pros and Cons:

• Policy is separate from implementation

– easier to reason about and compose application-specific

policies and get it right because things are automated

– e.g., SFI is a half-page policy and there were bugs in MiSFIT

• Analysis and optimization can get rid of overheads

– but this increases the size of the TCB

• Stopping a “bad” program might not be “good”

– suppose it holds locks...

• Vocabulary is limited by what can be observed.

– what’s a “procedure call”? what’s a “file descriptor”?

– more structure on the code (e.g., types) leads to a bigger

vocabulary (Java vs. x86)

– but then it’s not working on native code

June 2001

Lang. Based Security

47

2nd Prototype: PoET/PSLang

• PoET: Policy Enforcement Toolkit

– similar to SASI but specific to Java and the JVM

– organized around JVM “events”

• e.g., method calls, instructions, returns, etc.

• similar to ATOM and other toolkits for monitoring

performance

• also similar to “aspect-oriented” programming.

• PSLang: Policy Specification Language

– has full power for Java for defining events and

transitions

– spec is at the Java level, but rewriting is done at

the JVM level.

June 2001

Lang. Based Security

48

Ex: “at most 10 windows”

IMPORT LIBRARY Lock;

ADD SECURITY STATE {

int openWindows = 0;

Object lock = Lock.create();

}

ON EVENT begin method

WHEN Event.fullMethodNameIs(“void java.awt.Window.show()”)

PERFORM SECURITY UPDATE {

Lock.acquire(lock);

if (openWindows = 10) {

HALT[ “Too many open GUI windows” ];

}

openWindows = openWindows + 1;

Lock.release(lock);

}

ON EVENT begin method

WHEN Event.fullMethodNameIs(“void java.awt.Window.dispose()”)

PERFORM SECURITY UPDATE {

Lock.acquire(lock);

openWindows := openWindows – 1;

Lock.release(lock);

}

June 2001

Lang. Based Security

49

Java 2 Security Model

• Each hunk of code is associated with a protection

domain.

• e.g., applet vs. trusted local-host code

• Each protection domain can only perform certain

operations.

• e.g., applets can only access /tmp while trusted local-host code

can access any file.

• Both direct and indirect access control:

• applet can’t directly call load(“/etc/passwd”)

• nor can it call a trusted piece of code that calls

load(“etc/passwd”).

• avoids accidentally leaking access through a method.

• But sometimes, we need indirect access

• this is achieved through amplification (similar idea to setuid)

June 2001

Lang. Based Security

50

Example Security Domains

Untrusted Applet: /home/*

GUI Library: /fonts/*

display:

...

load(“thesis.txt”)

use plain font

show on screen

...

use plain font:

...

doPrivileged {

load(“Courier”);

}

...

File Library: *

load(file F):

...

checkPermission(F,read)

...

June 2001

Lang. Based Security

51

Java 2 Stack Inspection

A richer notion of “context” than just the domain of the

executing code.

–

on whose behalf is the code executing?

doPrivileged{S}

–

switches from the caller’s protection domain to the

method’s protection domain while executing the statement

S.

checkPermission(P)

–

–

–

June 2001

examines the “stack” from most recent to least recent.

if it encounters a frame from a protection domain that does

not have permission P, throws a security exception.

stops when it reaches the end of the stack or a

doPrivileged command.

Lang. Based Security

52

3 Implementations

• Sun’s implementation

– no overhead on method call, doPrivileged

– crawls the stack on checkPermission

– hard to see what the policy is

• Naive PSLang

–

–

–

–

use an external stack object

push the domain upon each method call, pop upon return.

push a different domain upon doPrivileged.

crawl the stack object on checkPermission.

• Optimized PSLang

– takes advantage of reflection facilities to crawl the stack in a

fashion similar to Sun’s

June 2001

Lang. Based Security

53

Performance Overhead

JVM

IRM(naive)

IRM

Jigsaw

javac

6.2%

2.9%

20.1%

46.2%

6.4%

2.0%

tar

10.1%

3.0%

5.4%

MPEG

0.9%

72.5%

0.4%

Naive approach is very expensive because there are a

lot of method calls relative to domain crossings.

IRM approach can beat JVM because of static (local)

optimization.

June 2001

Lang. Based Security

54

Summary

• Reference monitors

– implement safety policies

• OS policies

– designed in a different era

– leverage hardware for efficient checking

– but overheads discourage “least privilege”

• Software Fault Isolation

– small overheads, but limited protection

– not clear it scales in many directions

• Inlined Reference Monitors (SASI/PoET)

– competitive with hand-rolled systems

– more flexible, easier to reason about policies

– limited by “vocabulary” and structure of the code

June 2001

Lang. Based Security

55