now - Learner Analytics Summit

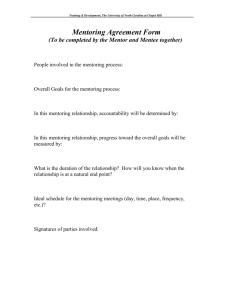

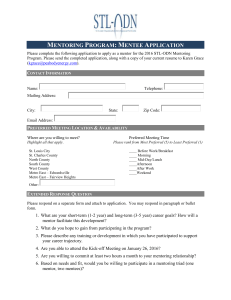

APPROACHES TO PREDICTING COLLEGE STUDENT SUCCESS: IMPLICATIONS
J. B. Arbaugh, University of Wisconsin Oshkosh
UMUC Learner Analytics Summit
July 22, 2014

RESEARCH ON ANALYTICS IN HIGHER ED BEGINNING TO APPEAR
- Conference presentations galore
- Special issue of JALN (2012)
- Several articles in EDUCAUSE Review
- Journal of Learning Analytics debuted in 2014
- Ethical questions regarding use of analytics in higher ed already being considered (Slade & Prinsloo, 2013)

SOME OBSERVATIONS FROM THE LITERATURE ON COMMUNITY COLLEGES AND ANALYTICS
- Increasing attention given to analytics by entities such as AACC (Norris & Baer, 2013)
- Analytics adoption at CCs seen as 2-3 years out (along with “next generation” LMSs), whereas flipped classrooms and social media seen as more pressing adoptions (Johnson et al., 2013)
- Phillips & Horowitz (NDCC, 2013):
  - Data entry approaches are critical – consistency of entry, between new and old systems
  - Opportunities for trend analysis – either of a cohort of students or year-by-year comparisons in a program or college
  - Make data accessible and useful to ALL staff

WHAT/WHO GETS LITTLE ATTENTION IN THIS RESEARCH?
Instructors!!!
- One article found written from the instructors’ perspective (Dietz-Uhler & Hurn, 2013)
- Instructors aren’t even considered as part of the learning analytics ethical equation (Slade & Prinsloo, 2013)
- Even when instructors are considered, it is almost always from a post hoc perspective

BUT DON’T FEEL TOO BAD ABOUT THIS…
Instructors in virtual settings have a long history of being understudied:
- Distance Education (Dillon & Walsh, 1992; Thompson, 2007)
- Online Delivery in General (Dziuban, Shea, & Arbaugh, 2005; Shea, 2007; Shea, Pickett, & Li, 2005)
- Online Delivery in Business Schools (Arbaugh, DeArmond, & Rau, 2013; Shea, Hayes, & Vickers, 2010)

HOW TO MAKE INSTRUCTORS LOVE DATA SCIENTISTS (FORNACIARI & ARBAUGH, 2014)
Use predictive analytics to create a priori tools:
- Class section composites
  - Collective demographics
  - Collective GPA
  - Prior online course experience
  - Prior online/blended course content access behavior
  - Prior online course discussion behavior

INTERVENTIONS: WHAT TO DO WITH RESULTS OF ANALYTICS? THE CASE OF “MENTORING”
Peter Shea, Associate Provost for Online Learning & Associate Professor, School of Education
University at Albany, SUNY

QUICK PSA
- Editor for Journal of Asynchronous Learning Networks (JALN) (soon to be Online Learning)
- You are invited! http://jaln.sloanconsortium.org/
- Register to be considered as author, reviewer, etc.: http://jaln.sloanconsortium.org/index.php/index/user/register

MAJOR CHALLENGE
- Getting insight analytics to “front lines”
- What should practitioners “do” with results?
- Some typical answers: notify instructors or staff to better advise, support, tutor, mentor, instruct, etc. students at risk
- But what should these interventions look like?
- Beyond analytics, what models and research inform the design of interventions? Especially for online students?

[Diagram: Pre-entry Attributes, Goals/Commitments, Institutional Experience, Integration, Goals/Commitments, Outcome]
- Assumes these as “given”
- Why uni-variate outcome?
- Assumes these as “given”
- Where is institutional response?
- Where are “interventions”?

NON-TRADITIONAL STUDENTS
“We label none of the thirteen propositions of Tinto’s theory as reliable knowledge [about] commuter colleges” (Braxton & Lee, 2005)

BEAN & METZNER’S (1985) MODEL FOR NON-TRADITIONAL STUDENTS
[Diagram: academic variables, learner characteristics, environmental variables, academic outcomes, psychological outcomes, decision to drop out]
- Assumes these as “given”
- Where is institutional response?
- Where are “interventions”?

NEWER MODELS
- Newer models improve on past work
- Multivariate outcomes, for example
- Still lacking attention to proactive intervention
- Still assumes these as “given”?

INTERVENTIONS: FOR RETENTION OR PERSISTENCE?
(Falcone, 2012)
- Multivariate outcomes
- Still assumes these as “given”?
- Where is institutional response?
- Where are “interventions”?

EMPIRICAL V CONCEPTUAL MODELS
- Previous models have been largely based on conceptual work
- Opportunities exist to test these empirically
- Analytics clearly one way
- Other ways too, for example:

NATIONAL EMPIRICAL RESULTS
Interventions targeted toward:
- preventing delayed entry to college
- increasing part-timers’ level of enrollment
- boosting financial aid in community colleges
- reducing students’ work hours

have the greatest numerical potential for improving completion rates.

Caveat: National results don’t tell me about me and perhaps too general to be useful…

THE ISSUE WITH THAT ADVICE…
“…thinking you can tell every student to switch from part-time to full-time is insane, trying to reduce every students work hours is nuts – just not going to happen.” (Milliron, 2014)

EXAMPLE OF PROBLEM
Online learning at the community college level – what are the problems and what would an intervention look like and why?

ONLINE LEARNING IMPEDES CC PROGRESS?
- Data: 24k students in 23 institutions in Virginia Community College System; 51k in Washington
- Online learning outcomes at community college worse than classroom
- Smith Jaggars & Xu, 2010; Xu & Smith Jaggars, 2011:
  - More failing/withdrawing from online courses
  - Online students less likely to return in following semesters
  - Online students less likely to attain a credential/transfer to 4-year institution

CONFLICTING RESULTS
Shea & Bidjerano (2014). A national study of online community college students. Computers and Education, 75 (2), 103-111.
- Net of 40 other factors, DE/online learners were 1.25 times as likely to attain any credential
- When credential goal was certificate (rather than BA), DE/online learners were 3.22 times as likely to succeed

STILL MORE CONFLICTING RESULTS: CALIFORNIA COMMUNITY COLLEGES
- These mixed results recently extended
- Nation’s largest two-year system enrolling nearly 1 million OL students
- Methods employed and results similar to Washington and Virginia studies
- Community college online learners less likely to complete online course and less likely to get passing grade
- BUT… more likely to graduate and transfer to 4-year college…

(Johnson, H. & Cuellar Mejia, M., 2014. Online Learning and Student Outcomes in Community Colleges. Public Policy Institute of California)

CONCLUSION
- Something funny is going on…
- Online students fail courses at higher rates but graduate and transfer at higher rates?
- More research is needed…
- Clear consensus that online course-level outcomes are uniformly worse is very troubling; how to address?

ASSUMPTIONS ARE ONE DIRECTION
[Diagram: Pre-entry attributes, Goals and Commitments, Institutional Experiences, Integration, Outcome]

TWO WAY MODEL IS POSSIBLE
[Diagram: Pre-entry attributes, Goals and Commitments, Institutional Interventions, Integration, Outcome]
Proposed new “transactional” model (Shea & Bidjerano, 2014)

ONE EXAMPLE: MENTORING
- Analytics indicate that certain students are at risk in online environments
- How do we develop an institutional response?
- One example: Mentoring
- But what is it? Not as easy as it seems…

PROBLEM
“…there is a false sense of consensus, because at a superficial level everyone “knows” what mentoring is. But closer examination indicates wide variation in operational definitions, leading to conclusions that are limited to the use of particular procedures.” (Wrightsman, 1981, pp. 3–4, in Jacobi, 1991, p. 508)

NOT WELL DEFINED
- More than 50 definitions of mentoring used in the research literature (Crisp & Cruz, 2009).
- The result is that the concept is devalued, because everyone is using it loosely, without precision, and it may become a short-term fad. (Wrightsman, 1981, pp. 3–4, in Jacobi, 1991, p. 508)

BENEFIT
- This framework provides a detailed yet concise method of communicating exactly what we mean when using the word mentoring
- May also act as a useful set of prompts for educators designing new mentoring interventions.

INTERVENTIONS
- Designing a specific intervention based on identification of at-risk students through analytics is a complex process
- Intervention needs to be highly specified to demonstrate effectiveness and be replicable

COMPONENTS OF A MENTORING MODEL
1. Objectives: the aims or intentions of the mentoring model
2. Roles: a statement of who is involved and their function
3. Cardinality: numbers involved in mentoring relationship (one-to-one, one-to-many)
4. Tie strength: the intended closeness of the mentoring relationship (intensity)
5. Relative seniority: comparative expertise, or status of participants (peers, staff, faculty)
6. Time: length of a mentoring relationship, regularity of contact, and quantity of contact (dosage)
7. Selection: how mentors and mentees are chosen
8. Matching: how mentoring relationships are composed
9. Activities: actions that mentors and mentees can perform during their relationship
10. Resources and tools: technological or other artifacts available to assist mentors and mentees
11. Role of technology: the relative importance of technology to the relationship (F2F, Online, Blended)
12. Training: how necessary understandings and skills for mentoring will be developed in participants
13. Rewards: what participants will receive to compensate for their efforts
14. Policy: rules and guidelines on issues such as privacy or the use of technology
15. Monitoring: what oversight will be performed, what actions will be taken under what circumstances, and by whom
16. Termination: how relationships are ended

DEVELOPING A MENTORING INTERVENTION
- Would include analysis of all 16 elements
- Development can’t happen in a vacuum
- Must be a researcher-practitioner collaboration (2yr, 4yr, University, etc.)
- Sustained process addressing specific problem

[Diagram: inputs, activities, outputs, outcomes, impact]

PARTIAL EXAMPLE
Development of a replicable, community college, online-faculty, mentor model to address academic and social disengagement through group mentored instruction in planning, time management, & self-regulation, with additional peer-mentored social media networks to support at-risk student engagement and persistence to increase graduation and transfer.

QUESTIONS
J. B. Arbaugh, University of Wisconsin Oshkosh, arbaugh@uwosh.edu
Peter Shea, University at Albany, SUNY, pshea@albany.edu
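
SKETCH: THE 16 COMPONENTS AS A CHECKLIST
The deck argues that an intervention must be "highly specified" to be replicable, and that developing one would include analysis of all 16 elements. One way to make that concrete is to treat the 16 components of a mentoring model as fields of a record and report which ones a draft design has not yet addressed. The following is a minimal sketch only, not something the presenters provide. The class name `MentoringModelSpec`, the helper `missing_components`, and the field values (one reading of the partial example above) are all hypothetical.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass
class MentoringModelSpec:
    """One optional text field per component of the 16-part framework.

    Field names are hypothetical identifiers derived from the component names.
    """
    objectives: Optional[str] = None
    roles: Optional[str] = None
    cardinality: Optional[str] = None
    tie_strength: Optional[str] = None
    relative_seniority: Optional[str] = None
    time: Optional[str] = None
    selection: Optional[str] = None
    matching: Optional[str] = None
    activities: Optional[str] = None
    resources_and_tools: Optional[str] = None
    role_of_technology: Optional[str] = None
    training: Optional[str] = None
    rewards: Optional[str] = None
    policy: Optional[str] = None
    monitoring: Optional[str] = None
    termination: Optional[str] = None


def missing_components(spec: MentoringModelSpec) -> List[str]:
    """Return the names of components that are still None or blank."""
    return [
        f.name
        for f in fields(spec)
        if not (getattr(spec, f.name) or "").strip()
    ]


# Assumed values: an illustrative reading of the "partial example" slide,
# not a design endorsed by the presenters.
partial = MentoringModelSpec(
    objectives="Support at-risk student engagement and persistence",
    roles="Online faculty mentors, peer mentors, at-risk online students",
    cardinality="one-to-many (group mentoring)",
    activities="Instruction in planning, time management, self-regulation",
    role_of_technology="Online, with peer-mentored social media networks",
)

todo = missing_components(partial)
print(f"{len(todo)} of {len(fields(partial))} components still unspecified:")
for name in todo:
    print(" -", name)
```

Read this way, the partial example fills in only a few components (objectives, roles, cardinality, activities, role of technology) and leaves items such as selection, matching, training, monitoring, and termination open, which is consistent with the slide calling it partial.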