Introduction Slides

advertisement

Hands-on course in deep

neural networks for vision

Instructors

Michal Irani, Ronen Basri

Teaching Assistants

Ita Lifshitz, Ethan Fetaya, Amir Rosenfeld

Course website

http://www.wisdom.weizmann.ac.il/~vision/courses/2016_1/DNN/index.html

Schedule (fall semester)

4/11/2015

18/11/2015

9/12/2015

Introduction

Tutorial on Caffe, exercise 1

Q&A on exercise 1

16/12/2015

6/1/2016

27/1/2016

Submission of exercise 1 (no class)

Student presentations of recent work

Project selection

Nerve cells in a microscope

1836

1838

1862

1873

1888

1891

1897

1906

1921

First microscopic image of a nerve cell (Valentin)

First visualization of axons (Remak)

First description of the neuromuscular junction (Kühne)

Introduction of silver-chromate technique as staining

procedure (Golgi)

Birth of the neuron doctrine: the nervous system is made up of

independent cells (Cajal)

The term “neuron” is coined (Waldeyer).

Concept of synapse (Sherrington)

Nobel Prize: Cajal and Golgi.

Nobel Prize: Sherrington

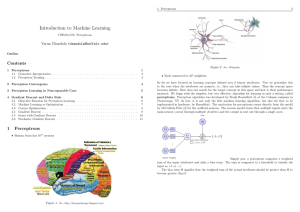

Nerve cell

Synapse

Action potential

• The human brain contains ~86 billion nerve cells

• The DNA cannot encode a different function for each cell

• Therefore,

• Each cell must perform a simple computation

• The overall computations are achieved by ensembles of neurons

(“connectionism”)

• These computations can be changed dynamically by learning (“plasticity”)

A Logical Calculus of the Ideas Immanent in Nervous Activity

Warren S. Mcculloch and Walter Pitts

BULLETIN OF MATHEMATICAL BIOPHYSICS VOLUME 5, 1943

“We shall make the following physical assumptions for our calculus.

1. The activity of the neuron is an "all-or-none" process.

2. A certain fixed number of synapses must be excited within the period of

latent addition in order to excite a neuron at any time, and this number

is independent of previous activity and position on the neuron.

3. The only significant delay within the nervous system is synaptic delay.

4. The activity of any inhibitory synapse absolutely prevents excitation of

the neuron at that time. 5. The structure of the net does not change with

time.”

The Perceptron: A Probabilistic Model for Information Storage

and Organization in The Brain

Frank Rosenblatt

Psychological Review Vol. 65, No. 6, 1958

A simplified view of neural computation

• A nerve cell accumulates electric potential at its dendrites

• This accumulation is due to the flux of neurotransmitors from

neighboring (input) neurons

• The strength of this potential depends on the efficacy of the synapse

and can be positive (“excitatory”) or negative (“inhibitory”)

• Once sufficient electric potential is accumulated (exceeding a certain

threshold) one or more action potentials (spikes) are produced. They

then travel through the nerve axon and affect nearby (output)

neurons

The perceptron

• Input 𝑥 = 𝑥1 , … , 𝑥𝑑

• Weights 𝑤 = 𝑤1 , … , 𝑤𝑑

• Output 𝑓 𝑥 = 1

0

𝑤𝑇𝑥

+𝑏 >0

otherwise

• This is a linear classifier

• Implemented with one layer of weights

+ threshold (Heaviside step activation)

𝑥1

𝑥2

𝑤1

𝑤2

𝑤𝑛

𝑥𝑑

?

> −𝑏

Multi-layer Perceptron

• Linear classifiers are very limited,

e.g., XOR configurations cannot be classified

Multi-layer Perceptron

• Linear classifiers are very limited,

e.g., XOR configurations cannot be classified

Solution: multilayer, feed-forward perceptron

Input

Hidden 1

Hidden 2

𝑥1

ℎ1

ℎ′1

𝑥2

ℎ2

ℎ′2

𝑥𝑑

ℎ𝑚

ℎ′𝑚′

Output

𝑦1

𝑦2

𝑦𝑘

ℎ = 𝜎 𝑊𝑥

ℎ′ = 𝜎 𝑊 ′ ℎ

⋮

𝑦 = 𝜎(… 𝜎 𝑊𝑥 … )

The non-linear 𝜎 is called

“activation function”

Activation functions

• Heaviside (threshold)

• Sigmoid (tanh(𝑧) or logistic

1

)

−𝑧

1+𝑒

• Rectified linear unit (Relu: max(x,0))

• Max pooling (max(ℎ1 , ℎ2 ))

Multi-layer Perceptron

• A perceptron with one hidden layer can approximate any

smooth function to an arbitrary accuracy

Supervised learning

• Objective: given labeled training examples 𝑥𝑖 , 𝑦𝑖

learn a map from input to output, y = 𝑓(𝑥)

• Type of problems:

𝑁

1,

• Classification – each input is mapped to one of a discrete set, e.g.,

{person, bike, car}

• Regression – each input is mapped to a number, e.g., viewing angle

• How? Given a network architecture, find a set of weights that

minimize a loss function on the training data,

e.g., -log likelihood of class given input

• Generalization vs. overfit

Generalization vs. overfit

• We want to minimize loss on the test data, but the test data is not

available in training

• Use a validation set to make sure you don’t overfit

𝑦

𝑥

Generalization vs. overfit

• We want to minimize loss on the test data, but the test data is not

available in training

• Use a validation set to make sure you don’t overfit

Loss

Loss on validation

Loss on training

Iteration

Training DNN: back propagation

• A network is a function 𝑦 = 𝑓(𝑥; 𝑤)

• Objective: modify 𝑤 to improve the prediction of 𝑓 on the training

(and validation) data

• Quality of 𝑓 is measured via the loss function ℒ(𝑥, 𝑦, 𝑤)

• Back propagation: minimize ℒ by gradient descent

𝑤 ← 𝑤 − 𝜂𝛻𝑤 ℒ

• Gradient is computed by a backward pass by applying the chain rule

Calculating the gradient

• Loss: ℒ 𝑦 =

1

2

𝑦−𝑦

2,

where 𝑦 = 𝑓(𝑥; 𝑤)

1

1+𝑒 −𝑧

• Activation: logistic φ 𝑧 =

(note: φ′(𝑧) = φ(𝑧)(1 − φ(𝑧)))

𝜕ℒ

𝜕ℒ 𝜕𝑥𝑗′

= ′

=

𝜕𝑤𝑖𝑗 𝜕𝑥𝑗 𝜕𝑤𝑖𝑗

𝜕ℒ ′

= ′ 𝑥𝑗 (1 − 𝑥𝑗′ )𝑥𝑖

𝜕𝑥𝑗

(Recall that 𝑥𝑗′ = φ 𝑤1𝑗 𝑥1 + ⋯ + 𝑤𝑑𝑗 𝑥𝑑 )

𝑦1

𝑤′11

𝑥′1

𝑤11

𝑥1

𝑦𝑘

𝑤′𝑑′ 𝑘

𝑥′𝑑′

𝑤𝑑𝑑′

𝑥𝑑

Calculating the gradient

𝜕ℒ

=

𝜕𝑥𝑖

𝑑′

=

𝑘=1

𝑑′

𝑘=1

𝜕ℒ 𝜕𝑥𝑘′

=

𝜕𝑥𝑘′ 𝜕𝑥𝑖

𝑦1

𝑤′11

𝑦𝑘

𝑤′𝑑′ 𝑘

𝜕ℒ ′

′

′ 𝑥𝑘 (1 − 𝑥𝑘 )𝑤𝑖𝑘

𝜕𝑥𝑘

• Computed recursively starting with

𝜕ℒ

= 𝑦𝑖 − 𝑦𝑖

𝜕𝑦𝑖

𝑥′1

𝑤11

𝑥1

𝑥′𝑑′

𝑤𝑑𝑑′

𝑥𝑑

Training algorithm

• Initialize with random weights

• Forward pass: given a training input vector apply the network to and

store all intermediate results

• Backward pass: starting from top, recursively use the chain rule to

𝜕ℒ

calculate derivatives

for all nodes and use those derivatives to

calculate

𝜕ℒ

𝜕𝑤𝑖𝑗

𝜕𝑥𝑖

for all edges

• Repeat for all training vectors, the gradient 𝛻𝑤 ℒ is composed of the

𝜕ℒ

sum of all

over all edges

𝜕𝑤𝑖𝑗

• Weight update: 𝑤 ← 𝑤 − 𝜂𝛻𝑤 ℒ

Stochastic gradient descent

• Gradient descent requires computing the gradient of the (full) loss

over all of the training data at every step

With large training this is expensive

• Approach: compute the gradient over a sample (“mini-batch”),

usually by re-shuffling the training set

Going once through the entire training data is called an epoch

• If learning rate decreases appropriately and under mild assumptions

this converges almost surely to a local minimum

• Momentum: pass the gradient from one iteration to the next (with

(𝑡−1)

𝑡

decay), i.e., 𝑤 ← 𝑤 − 𝜂𝛻𝑤 ℒ − 𝜂′𝛻𝑤

ℒ

Regularization: dropout

• At training we randomly eliminate half of the nodes in the network

• At test we use the full network, but each weight is halved

• This spreads the representation of the data over multiple nodes

• Informally, it is equivalent to training with many different networks

and using their average response

Invariance

• Invariance to translation, rotation and scale as well as for some

transformations of intensity is often desired

• Can be achieved by perturbing the training set (data augmentation) –

useful for small transformations (expensive)

• Translation invariance is further achieved with max pooling and/or

convolution

Invariance: convolutional neural nets

• Translation invariance can be achieved by conv-nets

• A conv-net learns a linear filter at the first level, non linear ones higher up

• SIFT is an example for a useful non-linear filter, but a learned filter may be more

suitable

• Inspired by the local receptive fields in the V1 visual cortex area

• Conv-nets were applied successfully to digit recognition in the 90’s (LeCunn et al.

1998), but at the time did not scale well to other kinds of images

𝑥1

𝑥′1

𝑥′2

𝑥′3

𝑥2

𝑥3

𝑥4

𝑥′𝑑′

𝑥5

Weight-sharing: same color ⇒ same weight

𝑥𝑑

Alex-net

Krizhevsky, Sutzkever, Hinton 2012

• Trained and tested on Imagenet: 1.2M training images, 50K validation,

150K test. 1000 categories

• Loss: softmax – top layer has 𝐶 nodes, 𝑧1 , … , 𝑧𝐶 (here 𝐶 = 1000

categories). The softmax function renormalizes them by

𝑒 𝑧𝑗

𝜎𝑗 𝒛 = 𝐶

𝑧𝑘

𝑒

𝑘=1

Network maximizes the multinomial logistic regression objective, that

is ℒ 𝒙 = − 𝑁

𝑖=1 𝜎𝑦𝑖 (𝒙𝑖 ) over the training images 𝒙𝑖 of class 𝑦𝑖

Alex-net

• Activation: Relu

• Data augmentation

• Translation

• Horizontal reflection

• gray level shifts by principal components)

• Dropout

• Momentum

tanh

Relu

Alex-net

Alex-net: results

• ILSVRC-2010

• ILSVRC-2012

Team name

Error

(5 guesses)

SuperVision

0.15315

Using extra training from ImageNet 2011

SuperVision

0.16422

Using only supplied training data

ISI

0.26172

Weighted sum of scores SIFT+FV, LBP+FV, GIST+FV, and CSIFT+FV

OXFORD_VGG

0.26979

Mixed selection from High-Level SVM and Baseline Scores

XRCE/INRIA

0.27058

University of Amsterdam

0.29576

Description

Baseline: SVM trained on Fisher Vectors over Dense SIFT and Color Statistics

• More recent networks reduced error to ~7%

Alex-net: results

(Krizhevsky et al. 2012)

Applications

•

•

•

•

•

Image classification

Face recognition

Object detection

Object tracking

Low-level vision

•

•

•

•

Optical flow, stereo

Super-resolution, de-bluring

Edge detection

Image segmentation

• Attention and saliency

• Image and video captioning

•…

Face recognition

• Google’s conv-net is trained on

260M face images

• Achieved 99.63% accuracy on LFW

(face comparison database), and some

of its mistakes turned out to be

labeling mistakes)

• Available in Google Photos

(Schroff et al. 2015)

Object detection

• Latest methods achieve average precision of about 60% on PASCAL

VOC 2007 and 44% on Imagenet ILSVRC 2014

(He et al. 2015)

Unsupervised learning

• Find structure in data

• Type of problems:

• Clustering

• Density estimation

• Dimensionality reduction

Auto-encoders

• Produce a compact representation

of the training

• Analogous to PCA

• Note that identity transformation

may be a valid (but undesired)

solution

• Initialize by training a Restricted

Bolzmann Machine

Input

𝑥1

𝑥2

𝑥𝑑

Hidden 1

ℎ1

ℎ2

ℎ𝑚

“Output = Input”

𝑦1

𝑦2

𝑦𝑑

Recurrent networks: Hopfield net

John Hopfield, 1982

• Fully connected network

• Time dynamic: starting from an input,

apply network repeatedly to convergence

• Update: 𝑠𝑖 = sign( 𝑗≠𝑖 𝑤𝑖𝑗 𝑠𝑗 + 𝜃𝑗 ),

minimizes an Ising model energy

• Weights are set to store preferred states

• “Associative memory” (content address):

denoising and completion

𝑥1

𝑥𝑑

𝑥2

𝑥3

𝑥4

Recurrent networks

• Used, e.g., for image annotation, i.e., produce a descriptive sentence

of an input image

Next word

Image

Recurrent networks (unrolled)

• Used, e.g., for image annotation, i.e., produce a descriptive sentence

of an input image

First word

Second word

Image

(Kiros et al. 2014)

Neural network development packages

• Caffe: http://caffe.berkeleyvision.org/

• Matconvnet: http://www.vlfeat.org/matconvnet/

• Torch: https://github.com/torch/torch7/wiki/Cheatsheet