Content Distribution


March 6, 2012

2: Application Layer

Contents

P2P architecture and benefits

P2P content distribution

Content distribution network (CDN)

Pure P2P architecture

no always-on server

arbitrary end systems directly communicate peer-peer

peers are intermittently connected and change IP addresses

Three topics:

File distribution

Searching for information

Case Study: Skype

File Distribution: Server-Client vs P2P

Question: How much time does it take to distribute a file from one server to N peers?

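$u_s$: server upload bandwidth

$u_i$: peer i upload bandwidth

$d_i$: peer i download bandwidth

[Figure: a server holding a file of size F, with upload bandwidth $u_s$, connected through a network with abundant bandwidth to N peers with upload rates $u_1, \ldots, u_N$ and download rates $d_1, \ldots, d_N$.]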

File distribution time: server-client

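server sequentially sends N copies: $NF/u_s$ time

client i takes $F/d_i$ time to download

[Figure: server with upload rate $u_s$ sending file F through a network with abundant bandwidth to N clients, each with upload rate $u_i$ and download rate $d_i$.]

Time to distribute F to N clients using the client/server approach:

$$d_{cs} = \max\left\{ \frac{NF}{u_s},\ \frac{F}{\min_i d_i} \right\}$$

This increases linearly with N (for large N).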
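A minimal sketch of the client/server formula, assuming all sizes and rates use consistent units (for example bits and bits per second). The function name `d_cs` and the sample numbers are illustrative only.

```python
from typing import Sequence


def d_cs(n: int, f: float, u_s: float, d: Sequence[float]) -> float:
    """Client/server distribution time: max(NF/u_s, F/min_i d_i)."""
    server_time = n * f / u_s  # server uploads N full copies one after another
    slowest_download = f / min(d)  # the slowest client needs F/d_min to download
    return max(server_time, slowest_download)


# Hypothetical numbers: F = 1 unit, server uploads 10 units/h, clients download 5 units/h.
for n in (10, 20, 40):
    print(n, d_cs(n, 1.0, 10.0, [5.0] * n))
```

With these numbers the server term dominates, so the times are 1.0, 2.0 and 4.0 hours: doubling N doubles the distribution time.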

File distribution time: P2P

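server must send one copy: $F/u_s$ time

client i takes $F/d_i$ time to download

NF bits must be downloaded (aggregate)

fastest possible upload rate: $u_s + \sum_i u_i$

[Figure: server with upload rate $u_s$ and N peers, each uploading at $u_i$ and downloading at $d_i$, connected by a network with abundant bandwidth.]

$$d_{P2P} = \max\left\{ \frac{F}{u_s},\ \frac{F}{\min_i d_i},\ \frac{NF}{u_s + \sum_i u_i} \right\}$$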
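The P2P bound can be computed the same way. This sketch assumes N is the number of entries in the upload list `u` (with one matching download rate per peer in `d`); the name `d_p2p` is illustrative.

```python
from typing import Sequence


def d_p2p(f: float, u_s: float, u: Sequence[float], d: Sequence[float]) -> float:
    """P2P distribution time: max(F/u_s, F/min_i d_i, NF/(u_s + sum_i u_i))."""
    n = len(u)  # number of peers
    server_once = f / u_s  # the server must upload at least one full copy
    slowest_download = f / min(d)  # the slowest peer needs F/d_min to download
    aggregate = n * f / (u_s + sum(u))  # NF bits at the best possible total upload rate
    return max(server_once, slowest_download, aggregate)


# Hypothetical: F = 1, u_s = 10, thirty peers each uploading 1 and downloading 10.
print(d_p2p(1.0, 10.0, [1.0] * 30, [10.0] * 30))  # 0.75
```

Because every new peer adds upload capacity to the denominator, the aggregate term grows far more slowly than the client/server term $NF/u_s$.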

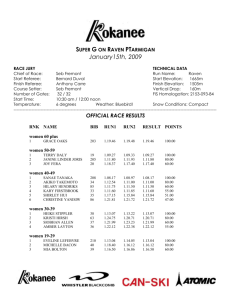

Server-client vs. P2P: example

Client upload rate = $u$, $F/u = 1$ hour, $u_s = 10u$, $d_{min} \ge u_s$

[Figure: Minimum Distribution Time (hours, 0 to 3.5) vs. N (0 to 35), with one curve for P2P and one for Client-Server.]

Client-server $\sim NF/u_s$ vs. P2P $\sim NF/(u_s + \sum_i u_i)$
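The example's parameters can be plugged into both formulas. In this sketch the units are chosen so that $u = 1$ and $F = 1$ (making $F/u$ = 1 hour), and $d_{min}$ is set equal to $u_s$, the smallest value the slide allows. The function names `cs_time` and `p2p_time` are illustrative.

```python
def cs_time(n: int, f: float, u_s: float, d_min: float) -> float:
    """Client/server: max(NF/u_s, F/d_min)."""
    return max(n * f / u_s, f / d_min)


def p2p_time(n: int, f: float, u_s: float, u: float, d_min: float) -> float:
    """P2P with N identical peers: max(F/u_s, F/d_min, NF/(u_s + N*u))."""
    return max(f / u_s, f / d_min, n * f / (u_s + n * u))


u = 1.0       # client upload rate (units chosen so that F/u = 1 hour)
f = 1.0       # file size
u_s = 10 * u  # server upload rate
d_min = u_s   # the slide assumes d_min >= u_s; equality is used here

for n in range(5, 36, 5):
    print(f"N={n:2d}  client-server={cs_time(n, f, u_s, d_min):.2f} h"
          f"  P2P={p2p_time(n, f, u_s, u, d_min):.2f} h")
```

At N = 30 this prints 3.00 h for client-server and 0.75 h for P2P. For N ≥ 2 the P2P time equals N/(10 + N) hours, which stays below 1 hour, while the client-server time is N/10 hours.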

Contents

P2P architecture and benefits

P2P content distribution

Content distribution network (CDN)

P2P content distribution issues

Issues

Group management and data search

Reliable and efficient file exchange

Security/privacy/anonymity/trust

Approaches for group management and data search (i.e., who has what?)

Centralized (e.g., BitTorrent tracker)

Unstructured (e.g., Gnutella)

Structured (Distributed Hash Tables [DHT])

Centralized model (Napster)

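original “Napster” design

1) when a peer connects, it informs the central server of its IP address and content

2) Alice queries for “Hey Jude”; the server replies that Bob has the file.

3) Alice requests the file from Bob

[Figure: centralized directory server and peers; each peer registers with the server (1), Alice's query Q: “Hey Jude” is answered with A: Bob has it (2), and Alice fetches the file directly from Bob (3).]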
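The three steps can be sketched as an in-memory directory. This is a hypothetical model of the idea, not Napster's actual protocol: the class name `Directory`, its methods, and the IP address used for Bob are all illustrative.

```python
from collections import defaultdict


class Directory:
    """Hypothetical sketch of a Napster-style centralized directory server."""

    def __init__(self):
        self.index = defaultdict(set)  # content title -> IP addresses of peers holding it

    def register(self, peer_ip, titles):
        """Step 1: a connecting peer reports its IP address and its content."""
        for title in titles:
            self.index[title].add(peer_ip)

    def unregister(self, peer_ip):
        """Remove a departing peer from every entry."""
        for holders in self.index.values():
            holders.discard(peer_ip)

    def query(self, title):
        """Step 2: return the peers holding the title (sorted for stable output)."""
        return sorted(self.index.get(title, ()))


directory = Directory()
directory.register("10.0.0.2", ["Hey Jude"])  # Bob (hypothetical address)
print(directory.query("Hey Jude"))  # ['10.0.0.2']; Alice then contacts Bob directly (step 3)
```

Only the lookup goes through the server; the file itself never does, which is why transfer is decentralized while locating content is centralized.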

Centralized model

file transfer is decentralized, but locating content is highly centralized

[Figure: peers Alice, Bob, Judy, and Jane.]

Centralized model

Benefits:

Low per-node state

Limited bandwidth usage

Short search time

High success rate

Fault tolerant

Drawbacks:

Single point of failure

Limited scale

Possibly unbalanced load

copyright infringement (?)

File distribution: BitTorrent

P2P file distribution

tracker: tracks peers participating in torrent

torrent: group of peers exchanging chunks of a file

[Figure: a joining peer obtains a list of peers from the tracker, then trades chunks with peers in the torrent.]

BitTorrent (1)

file divided into 256 KB chunks

peer joining torrent:

has no chunks, but will accumulate them over time

registers with tracker to get list of peers, connects to subset of peers (“neighbors”)

while downloading, peer uploads chunks to other peers

peers may come and go

once peer has entire file, it may (selfishly) leave or (altruistically) remain
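A small sketch of the chunk bookkeeping described above. It assumes "256 KB" means 256 × 1024 bytes, and the names `split_into_chunks` and `Peer` are hypothetical; real BitTorrent clients track chunks in a similar spirit but with much more state.

```python
from typing import List

CHUNK_SIZE = 256 * 1024  # 256 KB, taken here as 256 * 1024 bytes


def split_into_chunks(data: bytes) -> List[bytes]:
    """Cut a file into fixed-size chunks; the last chunk may be shorter."""
    return [data[i:i + CHUNK_SIZE] for i in range(0, len(data), CHUNK_SIZE)]


class Peer:
    """Hypothetical peer state: which chunk indices it currently holds."""

    def __init__(self, num_chunks: int):
        self.num_chunks = num_chunks
        self.have = set()  # a newly joined peer has no chunks

    def receive(self, index: int) -> None:
        self.have.add(index)

    def is_complete(self) -> bool:
        return len(self.have) == self.num_chunks


chunks = split_into_chunks(bytes(1024 * 1024 + 1))  # a 1 MiB + 1 byte file
peer = Peer(len(chunks))
for i in range(len(chunks)):
    peer.receive(i)
print(len(chunks), peer.is_complete())  # 5 True
```

Once `is_complete()` is true, the peer may leave or stay on and keep uploading.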

BitTorrent (2)

Pulling Chunks

at any given time, different peers have different subsets of file chunks

periodically, a peer (Alice) asks each neighbor for a list of chunks that it has

Alice sends requests for her missing chunks, rarest first
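Rarest-first selection can be sketched by counting how many neighbors advertise each chunk. The function name and the lowest-index tie-break are assumptions made for this illustration.

```python
from collections import Counter
from typing import Dict, Optional, Set


def rarest_first(have: Set[int], neighbor_chunks: Dict[str, Set[int]]) -> Optional[int]:
    """Return the missing chunk advertised by the fewest neighbors, or None."""
    counts = Counter()
    for chunks in neighbor_chunks.values():
        counts.update(chunks)  # +1 for every neighbor that lists the chunk
    missing = [c for c in counts if c not in have]
    if not missing:
        return None  # no neighbor can supply anything new
    # Rarest first; ties broken by lowest chunk index (an arbitrary choice here).
    return min(missing, key=lambda c: (counts[c], c))


neighbors = {"bob": {0, 1, 2}, "carol": {1, 2}, "dave": {2}}
print(rarest_first({0}, neighbors))  # 1
```

Fetching rare chunks first makes them less likely to disappear when the few peers holding them leave.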

Sending Chunks: tit-for-tat

Alice sends chunks to the four neighbors currently sending her chunks at the highest rate

re-evaluate top 4 every 10 secs

every 30 secs: randomly select another peer, start sending it chunks

newly chosen peer may join top 4

“optimistically unchoke”
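The two sending rules can be sketched as two small functions. The caller is assumed to run `top_uploaders` every 10 s and `optimistic_unchoke` every 30 s. The names, the sample rates, and the use of a seeded `random.Random` are illustrative, not part of the BitTorrent specification.

```python
import random
from typing import Dict, List, Optional


def top_uploaders(rates: Dict[str, float], k: int = 4) -> List[str]:
    """Neighbors currently sending to us at the highest rate (re-run every 10 s)."""
    return sorted(rates, key=lambda peer: rates[peer], reverse=True)[:k]


def optimistic_unchoke(neighbors: List[str], unchoked: List[str],
                       rng: random.Random) -> Optional[str]:
    """Randomly pick one extra neighbor that is not already unchoked (every 30 s)."""
    candidates = [p for p in neighbors if p not in unchoked]
    return rng.choice(candidates) if candidates else None


# Hypothetical download rates that Alice observes from each neighbor.
rates = {"bob": 50, "carol": 40, "dave": 30, "erin": 20, "frank": 10, "grace": 0}
unchoked = top_uploaders(rates)
extra = optimistic_unchoke(list(rates), unchoked, random.Random(1))
print(unchoked, extra)
```

The random extra peer gives newcomers with no chunks a chance to start trading, and if it reciprocates fast enough it can enter the top four.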

BitTorrent: Tit-for-tat

(1) Alice “optimistically unchokes” Bob

(2) Alice becomes one of Bob’s top-four providers; Bob reciprocates

(3) Bob becomes one of Alice’s top-four providers

With a higher upload rate, a peer can find better trading partners & get the file faster!

P2P Case study: Skype

inherently P2P: pairs of users communicate

proprietary application-layer protocol (inferred via reverse engineering)

hierarchical overlay with super nodes (SNs)

Index maps usernames to IP addresses; distributed over SNs

[Figure: Skype clients (SC) attached to supernodes (SN), plus a Skype login server.]

Peers as relays

Problem when both Alice and Bob are behind “NATs”:

NAT prevents an outside peer from initiating a call to an inside peer

Solution:

Using Alice’s and Bob’s SNs, a relay is chosen

Each peer initiates a session with the relay

Peers can now communicate through NATs via the relay

Contents

P2P architecture and benefits

P2P content distribution

Content distribution network (CDN)

Why Content Networks?

More hops between client and Web server: more congestion!

Same data flowing repeatedly over links between clients and Web server

[Figure: clients C1 to C4 reaching Web server S through a network of IP routers.]

Slides from http://www.cis.udel.edu/~iyengar/courses/Overlays.ppt

Why Content Networks?

Origin server is a bottleneck as the number of users grows

Flash Crowds (for instance, Sept. 11)

The Content Distribution Problem: arrange a rendezvous between a content source at the origin server (www.cnn.com) and a content sink (us, as users)

Slides from http://www.cis.udel.edu/~iyengar/courses/Overlays.ppt

Example: Web Server Farm

Simple solution to the content distribution problem: deploy a large group of servers

[Figure: requests from grad.umd.edu and ren.cis.udel.edu pass through an L4-L7 switch, which spreads them across three copies of www.cnn.com (Copy 1, Copy 2, Copy 3).]

Arbitrate client requests to servers using an “intelligent” L4-L7 switch

Pretty widely used today
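The slide does not say what makes the switch "intelligent". As one hypothetical policy, this sketch sends each request to the server copy with the fewest open requests. The class name `Switch` and the server names are illustrative.

```python
class Switch:
    """Hypothetical L4-L7 switch using a least-open-requests policy."""

    def __init__(self, servers):
        self.active = {s: 0 for s in servers}  # open requests per server copy

    def route(self) -> str:
        # Fewest open requests wins; ties go to the alphabetically first name.
        server = min(self.active, key=lambda s: (self.active[s], s))
        self.active[server] += 1
        return server

    def done(self, server: str) -> None:
        """Called when a server copy finishes a request."""
        self.active[server] -= 1


switch = Switch(["copy1", "copy2", "copy3"])  # three copies of www.cnn.com
print([switch.route() for _ in range(4)])  # ['copy1', 'copy2', 'copy3', 'copy1']
```

Other policies, such as hashing the client address to keep a client on one copy, fit the same interface.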

Example: Caching Proxy

Mainly motivated by ISP business interests: reducing the ISP's bandwidth consumption from the Internet

Reduced network traffic

Reduced user-perceived latency

[Figure: clients ren.cis.udel.edu and merlot.cis.udel.edu inside an ISP; an interceptor sends TCP port 80 traffic to the proxy and other traffic directly to the Internet, where www.cnn.com resides.]
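The core of a caching proxy is a lookup table consulted before going to the origin. This sketch assumes a fixed freshness lifetime (`ttl`) per entry, which is an illustrative policy. It uses a fake clock and a fake origin so the behavior is reproducible. All names are hypothetical.

```python
import time
from typing import Callable


class CachingProxy:
    """Hypothetical ISP caching proxy; entries stay fresh for `ttl` seconds."""

    def __init__(self, fetch: Callable[[str], bytes], ttl: float,
                 clock: Callable[[], float] = time.monotonic):
        self.fetch = fetch  # retrieves a URL from the origin server
        self.ttl = ttl
        self.clock = clock
        self.cache = {}  # url -> (body, time stored)
        self.hits = 0

    def get(self, url: str) -> bytes:
        entry = self.cache.get(url)
        if entry is not None and self.clock() - entry[1] < self.ttl:
            self.hits += 1
            return entry[0]  # hit: served inside the ISP, no Internet traffic
        body = self.fetch(url)  # miss or expired: forward to the origin server
        self.cache[url] = (body, self.clock())
        return body


# Simulated origin and clock (hypothetical values).
url = "http://www.cnn.com/"
origin = {url: b"old content"}
now = [0.0]
proxy = CachingProxy(lambda u: origin[u], ttl=600, clock=lambda: now[0])
proxy.get(url)  # miss: cached at t = 0
origin[url] = b"WTC News!"  # origin updates its page
now[0] = 60.0
print(proxy.get(url))  # b'old content': entry is still within its TTL
```

The last line shows the weakness the next slide illustrates. A cache that answers from stored copies can keep serving old content after the origin changes.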

But on Sept. 11, 2001

[Figure: www.cnn.com publishes new content (WTC News!) while 1,000,000 other hosts send requests, creating a congestion bottleneck; the ISP's caching proxy answers the request from the user at mslab.kaist.ac.kr with old content.]

Problems with discussed approaches:

Server farms and caching proxies

Server farms do nothing about problems due to network congestion

Caching proxies serve only their clients, not all users on the Internet

Content providers (say, Web servers) cannot rely on the existence and correct implementation of caching proxies

Accounting issues with caching proxies: for instance, www.cnn.com needs to know the number of hits to the webpage for the advertisements displayed on it

Again on Sept. 11, 2001 with CDN

[Figure: www.cnn.com publishes new content (WTC News!); a distribution infrastructure replicates it to surrogates in WA, CA, MI, IL, MA, FL, NY, and DE, which serve the new content to 1,000,000 other users and to the user at mslab.kaist.ac.kr.]

Web replication - CDNs

Overlay network to distribute content from origin servers to users

Avoids large amounts of the same data repeatedly traversing potentially congested links on the Internet

Reduces Web server load

Reduces user-perceived latency

Tries to route around congested networks

CDN vs. Caching Proxies

Caches are used by ISPs to reduce bandwidth consumption; CDNs are used by content providers to improve quality of service to end users

Caches are reactive, CDNs are proactive

Caching proxies cater to their users (web clients), not to content providers (web servers); CDNs cater to both the content providers (web servers) and the clients

CDNs give control over the content to the content providers; caching proxies do not

CDN Architecture

[Figure: the origin server feeds the CDN's Distribution & Accounting Infrastructure, which places content on surrogates; the CDN's Request Routing Infrastructure directs each client to a surrogate.]

CDN Components

Distribution Infrastructure: moving or replicating content from the content source (origin server, content provider) to surrogates

Request Routing Infrastructure: steering or directing a content request from a client to a suitable surrogate

Content Delivery Infrastructure: delivering content to clients from surrogates

Accounting Infrastructure: logging and reporting of distribution and delivery activities

Server Interaction with CDN

1. The origin server (www.cnn.com) pushes new content to the CDN, OR the CDN pulls content from the origin server

2. The origin server requests logs and other accounting info from the CDN, OR the CDN provides logs and other accounting info to the origin server

[Figure: origin server linked to the CDN's Distribution Infrastructure (interaction 1) and Accounting Infrastructure (interaction 2).]
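Both interaction styles can be sketched from a single surrogate's point of view. This is a hypothetical model: the class `Surrogate`, its methods, and the `(client, path)` log format are illustrative, not any CDN's real interface.

```python
from typing import Callable, List, Tuple


class Surrogate:
    """Hypothetical CDN surrogate supporting push, pull, and log reporting."""

    def __init__(self, pull_from_origin: Callable[[str], bytes]):
        self.pull_from_origin = pull_from_origin  # how this surrogate fetches from the origin
        self.store = {}  # path -> content held by this surrogate
        self.log = []  # (client, path) records for accounting

    def push(self, path: str, content: bytes) -> None:
        """Interaction 1, push: the origin server places new content on the CDN."""
        self.store[path] = content

    def serve(self, client: str, path: str) -> bytes:
        if path not in self.store:
            # Interaction 1, pull: fetch from the origin on the first request.
            self.store[path] = self.pull_from_origin(path)
        self.log.append((client, path))
        return self.store[path]

    def report(self) -> List[Tuple[str, str]]:
        """Interaction 2: hand accumulated logs to the origin and start a new period."""
        records, self.log = self.log, []
        return records


origin = {"/sept11": b"WTC News!"}
surrogate = Surrogate(lambda path: origin[path])
surrogate.push("/index.html", b"front page")  # pushed ahead of any request
print(surrogate.serve("client-1", "/sept11"))  # pulled on demand: b'WTC News!'
print(surrogate.report())  # [('client-1', '/sept11')]
```

The accounting log is what lets the origin count hits, which caching proxies could not offer.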

Client Interaction with CDN

1. Client to CDN Request Routing Infrastructure: Hi! I need www.cnn.com/sept11

2. CDN to client: Go to surrogate newyork.cnn.akamai.com

3. Client to newyork.cnn.akamai.com: Hi! I need content /sept11

[Figure: client, CDN Request Routing Infrastructure, and two surrogates: Surrogate (CA) california.cnn.akamai.com and Surrogate (NY) newyork.cnn.akamai.com.]

Q: How did the CDN choose the New York surrogate over the California surrogate?

Request Routing Techniques

Request routing techniques use a set of metrics to direct users to the “best” surrogate

Proprietary, but the underlying techniques are known:

DNS based request routing

Content modification (URL rewriting)

Anycast based (how common is anycast?)

URL based request routing

Transport layer request routing

Combination of multiple mechanisms

DNS based Request-Routing

Common due to the ubiquity of DNS as a directory service

Specialized DNS server inserted in the DNS resolution process

DNS server is capable of returning a different set of A, NS or CNAME records based on policies/metrics

DNS based Request-Routing

Q: How does the Akamai DNS know which surrogate is closest?

[Figure: the Akamai CDN serves www.cnn.com from surrogates newyork.cnn.akamai.com (145.155.10.15) and california.cnn.akamai.com (58.15.100.152).]

1) DNS query for www.cnn.com from test.nyu.edu (128.4.30.15), sent via its local DNS server (dns.nyu.edu, 128.4.4.12) to the Akamai DNS

DNS response: A 145.155.10.15 (newyork.cnn.akamai.com)
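Akamai's actual policy is proprietary. The sketch below only illustrates the general mechanism, which is to pick the A record from the address of the DNS server that sends the query. The addresses come from the example above. The policy table, its /16 prefix, and the California default are hypothetical.

```python
import ipaddress

# Hypothetical policy: name -> list of (querying-resolver prefix, surrogate address).
POLICY = {
    "www.cnn.com": [
        (ipaddress.ip_network("128.4.0.0/16"), "145.155.10.15"),  # newyork.cnn.akamai.com
    ],
}
DEFAULT = {"www.cnn.com": "58.15.100.152"}  # california.cnn.akamai.com


def resolve(qname: str, resolver_ip: str) -> str:
    """Answer an A query for qname based on the address of the querying DNS server."""
    addr = ipaddress.ip_address(resolver_ip)
    for network, surrogate in POLICY.get(qname, []):
        if addr in network:
            return surrogate
    return DEFAULT[qname]


# The query arrives from dns.nyu.edu (128.4.4.12), not from test.nyu.edu itself.
print(resolve("www.cnn.com", "128.4.4.12"))  # 145.155.10.15
```

The Akamai DNS never sees the client's own address (128.4.30.15), only its local DNS server's address. That limitation is the root of the problems discussed a few slides later.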

DNS based Request-Routing

[Figure: DNS query for www.cnn.com from test.nyu.edu (128.4.30.15) through its local DNS server (dns.nyu.edu, 128.4.4.12); the Akamai DNS and two Akamai CDN surrogates are shown.]

DNS based Request-Routing

[Figure: the Akamai DNS compares surrogates 145.155.10.15 and 58.15.100.152 as seen from the requesting DNS server 76.43.32.4 (the client DNS; the client is 76.43.35.53). One path has Available Bandwidth = 10 kbps and RTT = 10 ms; the other has Available Bandwidth = 5 kbps and RTT = 100 ms.]

DNS response: www.cnn.com A 145.155.10.15, TTL = 10s
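A metric-based choice can be sketched as follows. Two things here are assumptions. The first is which surrogate owns which measured path: this sketch assigns the 10 kbps / 10 ms path to 145.155.10.15, which is consistent with the response shown. The second is the ranking rule: highest available bandwidth, with ties broken by lower RTT.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PathMetrics:
    surrogate: str  # surrogate IP address
    bandwidth_kbps: float  # available bandwidth toward the requesting DNS server
    rtt_ms: float  # round-trip time toward the requesting DNS server


def choose_surrogate(paths: List[PathMetrics]) -> str:
    """Hypothetical policy: highest available bandwidth, ties broken by lower RTT."""
    best = max(paths, key=lambda p: (p.bandwidth_kbps, -p.rtt_ms))
    return best.surrogate


def answer(qname: str, paths: List[PathMetrics], ttl_s: int = 10) -> str:
    """Format the reply as on the slide."""
    return f"{qname} A {choose_surrogate(paths)} TTL = {ttl_s}s"


measured = [  # measurements as seen from requesting DNS 76.43.32.4
    PathMetrics("145.155.10.15", bandwidth_kbps=10, rtt_ms=10),
    PathMetrics("58.15.100.152", bandwidth_kbps=5, rtt_ms=100),
]
print(answer("www.cnn.com", measured))  # www.cnn.com A 145.155.10.15 TTL = 10s
```

A short TTL such as 10 s makes resolvers ask again soon, so the CDN can redirect new lookups when measurements change.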

DNS based Request Routing: Discussion

Originator Problem: the client may be far removed from the client DNS

Client DNS Masking Problem: virtually all DNS servers, except for root DNS servers, honor requests for recursion

Q: Which DNS server resolves a request for test.nyu.edu?

Q: Which DNS server performs the last recursion of the DNS request?

Hidden Load Factor: a single DNS resolution may result in drastically different load on the selected surrogate (one cached answer may be used by many clients behind the same DNS server), which complicates load balancing requests and predicting load on surrogates

Summary

P2P architecture and its benefits

P2P content distribution

BitTorrent, Skype

Content distribution network (CDN)

DNS-based request routing

Distributed Hash Table (DHT)

DHT = distributed P2P database

Database has (key, value) pairs;

key: social security number; value: person's name

key: content type; value: IP address

Peers query DB with key

DB returns values that match the key

Peers can also insert (key, value) pairs

DHT Identifiers

Assign an integer identifier to each peer in the range $[0, 2^n - 1]$.

Each identifier can be represented by n bits.

Require each key to be an integer in the same range.

To get integer keys, hash the original key.

e.g., key = h(“Led Zeppelin IV”)

This is why they call it a distributed “hash” table
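Hashing a key into the identifier space can be sketched in a few lines. SHA-1 is used here only for illustration; the slide does not fix a hash function, and the name `key_id` is hypothetical.

```python
import hashlib


def key_id(key: str, n: int) -> int:
    """Map an arbitrary key to an integer identifier in [0, 2**n - 1]."""
    digest = hashlib.sha1(key.encode("utf-8")).digest()  # hash choice is illustrative
    return int.from_bytes(digest, "big") % (2 ** n)


print(key_id("Led Zeppelin IV", 4))  # some identifier between 0 and 15
```

Every peer that hashes the same key gets the same identifier, so any peer can work out where a (key, value) pair should live.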

How to assign keys to peers?

Central issue:

Assigning (key, value) pairs to peers.

Rule: assign each key to the peer that has the closest ID.

Convention in lecture: closest is the immediate successor of the key.

Ex: n = 4; peers: 1, 3, 4, 5, 8, 10, 12, 14;

key = 13, then successor peer = 14

key = 15, then successor peer = 1
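The successor rule can be sketched with a binary search over the sorted peer IDs. The function name `successor` is illustrative.

```python
import bisect
from typing import List


def successor(key: int, peers: List[int]) -> int:
    """Return the peer responsible for key: the first peer ID >= key, wrapping around."""
    ring = sorted(peers)
    i = bisect.bisect_left(ring, key)  # index of the first peer with ID >= key
    return ring[i] if i < len(ring) else ring[0]  # past the largest ID: wrap to the smallest


peers = [1, 3, 4, 5, 8, 10, 12, 14]
print(successor(13, peers))  # 14
print(successor(15, peers))  # 1
```

The wrap-around in the last line of the function is what makes the identifier space a circle.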

Chord (a circular DHT) (1)

[Figure: peers 1, 3, 4, 5, 8, 10, 12, and 15 arranged in a circle.]

Each peer is only aware of its immediate successor and predecessor.

“Overlay network”

Chord (a circular DHT) (2)

O(N) messages on average to resolve a query, when there are N peers

Define closest as closest successor

[Figure: ring of peers 0001, 0011, 0100, 0101, 1000, 1010, 1100, 1111; the query “Who’s resp for key 1110?” is passed from peer to peer around the ring until peer 1111 answers “I am”.]
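Successor-only forwarding can be simulated using just the knowledge each peer has: its predecessor and successor. A peer p is responsible for key k when k lies in the circular interval (predecessor(p), p]. The sketch assumes the query starts at peer 0011, which is how the figure appears to read. All names are illustrative.

```python
from typing import List, Tuple


def in_interval(x: int, a: int, b: int, m: int) -> bool:
    """True if x lies in the circular interval (a, b] on a ring of size m."""
    if a == b:
        return True  # a single peer covers the whole ring
    return 0 < (x - a) % m <= (b - a) % m


def lookup(start: int, key: int, peers: List[int], n: int) -> Tuple[int, int]:
    """Forward a query around the ring using only successor pointers.
    Returns (responsible peer, number of messages sent)."""
    m = 2 ** n
    ring = sorted(peers)
    pos = ring.index(start)
    messages = 0
    while True:
        pred = ring[pos - 1]  # index -1 wraps to the largest ID
        if in_interval(key, pred, ring[pos], m):
            return ring[pos], messages  # this peer is the closest successor of key
        pos = (pos + 1) % len(ring)  # forward to the immediate successor
        messages += 1


ring = [0b0001, 0b0011, 0b0100, 0b0101, 0b1000, 0b1010, 0b1100, 0b1111]
print(lookup(0b0011, 0b1110, ring, 4))  # (15, 6): peer 1111 answers after 6 messages
```

In the worst case the query visits every peer, which is where the O(N) cost comes from.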

Chord (a circular DHT) with Shortcuts

[Figure: ring of peers 1, 3, 4, 5, 8, 10, 12, 15 with shortcut links; query: Who’s resp for key 1110?]

Each peer keeps track of the IP addresses of its predecessor, its successor, and its shortcuts.

Reduced from 6 to 2 messages.

Possible to design shortcuts so that each peer has O(log N) neighbors and a query takes O(log N) messages
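One well-known way to build such shortcuts is Chord's finger table, where peer p points to successor((p + 2^i) mod 2^n) for i = 0, ..., n-1. The sketch below is simplified and is not the full Chord protocol: it reads peer IDs from a global list instead of exchanging messages. Each hop jumps to the farthest shortcut that does not pass the key. Starting (by assumption) at peer 3 for key 14, it finishes in 2 messages, matching the slide.

```python
from typing import List, Tuple


def in_interval(x: int, a: int, b: int, m: int) -> bool:
    """True if x lies in the circular interval (a, b] on a ring of size m."""
    if a == b:
        return True
    return 0 < (x - a) % m <= (b - a) % m


def successor_of(ident: int, ring: List[int]) -> int:
    """First peer ID >= ident on the sorted ring, wrapping to the smallest ID."""
    for peer in ring:
        if peer >= ident:
            return peer
    return ring[0]


def fingers(peer: int, ring: List[int], n: int) -> List[int]:
    """Shortcuts: successor((peer + 2**i) mod 2**n) for i = 0..n-1."""
    m = 2 ** n
    return [successor_of((peer + 2 ** i) % m, ring) for i in range(n)]


def lookup_with_fingers(start: int, key: int, peers: List[int], n: int) -> Tuple[int, int]:
    """Returns (responsible peer, number of messages sent)."""
    m = 2 ** n
    ring = sorted(peers)
    current, messages = start, 0
    while True:
        pos = ring.index(current)
        pred, succ = ring[pos - 1], ring[(pos + 1) % len(ring)]
        if in_interval(key, pred, current, m):
            return current, messages  # current peer is responsible
        if in_interval(key, current, succ, m):
            nxt = succ  # key falls just after current: its successor is responsible
        else:
            # Farthest shortcut that still lies strictly before the key.
            candidates = [f for f in fingers(current, ring, n)
                          if 0 < (f - current) % m < (key - current) % m]
            nxt = max(candidates, key=lambda f: (f - current) % m)
        current, messages = nxt, messages + 1


ring = [1, 3, 4, 5, 8, 10, 12, 15]
print(fingers(3, ring, 4))  # [4, 5, 8, 12]
print(lookup_with_fingers(3, 14, ring, 4))  # (15, 2): 3 -> 12 -> 15
```

Each hop at least halves the remaining clockwise distance to the key, so a query takes O(log N) messages with n = O(log N) shortcuts per peer.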

Peer Churn

• To handle peer churn, require each peer to know the IP address of its two successors.

• Each peer periodically pings its two successors to see if they are still alive.

[Figure: ring of peers 1, 3, 4, 5, 8, 10, 12, 15.]

Peer 5 abruptly leaves

Peer 4 detects; makes 8 its immediate successor; asks 8 who its immediate successor is; makes 8’s immediate successor its second successor.

What if peer 13 wants to join?
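The repair step can be simulated with a table of each peer's two successors. In this sketch, "asking 8" is modeled by reading 8's entry from the table. The second branch, which fixes a dead second successor, is an assumption that follows the same idea. The function name `repair` is illustrative.

```python
from typing import Dict, List, Set


def repair(peer: int, successors: Dict[int, List[int]], alive: Set[int]) -> None:
    """Run after peer's periodic pings detect that a successor no longer responds."""
    first, second = successors[peer]
    if first not in alive:
        # Promote the second successor, then ask it for its immediate successor.
        successors[peer] = [second, successors[second][0]]
    elif second not in alive:
        # Assumed variant: ask the (alive) first successor for its immediate successor.
        successors[peer] = [first, successors[first][0]]


ring = [1, 3, 4, 5, 8, 10, 12, 15]
successors = {p: [ring[(i + 1) % len(ring)], ring[(i + 2) % len(ring)]]
              for i, p in enumerate(ring)}
alive = set(ring) - {5}  # peer 5 abruptly leaves
repair(4, successors, alive)  # peer 4 now points to 8 and then 10
repair(3, successors, alive)  # peer 3 had 5 as its second successor
print(successors[4], successors[3])  # [8, 10] [4, 8]
```

Order matters in this simulation: peer 3 gets the correct answer because peer 4 has already repaired its own entry.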
