10. Prediction in the Classical Regression Model


Econometrics I

Professor William Greene

Stern School of Business

Department of Economics


Part 10: Prediction


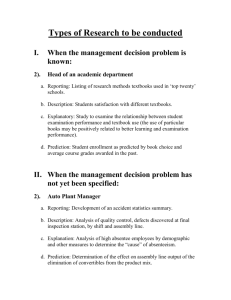

Forecasting

Objective: Forecast

Distinction: Ex post vs. ex ante forecasting

Ex post: RHS data are observed

Ex ante: RHS data must be forecasted

Prediction vs. model validation

Within-sample prediction

“Hold out sample”


Prediction Intervals

Given $x^0$, predict $y^0$.

Two cases:

Estimate $E[y|x^0] = \beta'x^0$;

Predict $y^0 = \beta'x^0 + \varepsilon^0$

Obvious predictor: $b'x^0$ + estimate of $\varepsilon^0$. Forecast $\varepsilon^0$ as 0, but allow for variance.

Alternative: When we predict $y^0$ with $b'x^0$, what is the 'forecast error?'

Est. $y^0 - y^0 = b'x^0 - \beta'x^0 - \varepsilon^0$, so the variance of the forecast error is

$$x^{0\prime}\,\mathrm{Var}[b - \beta]\,x^0 + \sigma^2$$

How do we estimate this? Form a confidence interval. Two cases:

If $x^0$ is a vector of constants, the variance of $b'x^0$ is just $x^{0\prime}\,\mathrm{Var}[b]\,x^0$. Form the confidence interval as usual.

If $x^0$ had to be estimated, then $b'x^0$ is a product of two random variables. What is the variance of the product? (Ouch!) One possibility: Use bootstrapping.


Forecast Variance

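Variance of the forecast error is

$$\sigma^2 + x^{0\prime}\,\mathrm{Var}[b]\,x^0 = \sigma^2 + \sigma^2\left[x^{0\prime}(X'X)^{-1}x^0\right]$$

If the model contains a constant term, this is

$$\mathrm{Var}[e^0] = \sigma^2\left[1 + \frac{1}{N} + \sum_{j=1}^{K-1}\sum_{k=1}^{K-1}(x_j^0 - \bar{x}_j)(x_k^0 - \bar{x}_k)(Z'M^0Z)^{jk}\right]$$

in terms of squares and cross products of deviations from means. Here $Z$ holds the $K-1$ nonconstant columns of $X$, $M^0$ is the matrix that transforms data to deviations from means, and $(Z'M^0Z)^{jk}$ is the $jk$-th element of $(Z'M^0Z)^{-1}$.

Interpretation: Forecast variance is smallest in the middle of our “experience” and increases as we move outside it.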
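The equivalence of the matrix form and the deviations-from-means form can be checked numerically. The following is a minimal sketch for a simple regression with a constant term; the data and the function names are made up for illustration and are not part of the lecture. It computes the factor multiplying $\sigma^2$ both ways and shows that it grows as $x^0$ moves away from the sample mean.

```python
# Forecast-error variance factor (the multiplier of sigma^2) in a simple
# regression with a constant term. Data are hypothetical.

def factor_matrix_form(x, x0):
    """Return 1 + x0'(X'X)^{-1} x0 for X = [1, x], using the 2x2 inverse."""
    n = len(x)
    sx = sum(x)
    sxx = sum(v * v for v in x)
    det = n * sxx - sx * sx  # determinant of X'X
    # (X'X)^{-1} = (1/det) * [[sxx, -sx], [-sx, n]]; quadratic form in (1, x0)
    quad = (sxx - 2.0 * sx * x0 + n * x0 * x0) / det
    return 1.0 + quad

def factor_deviation_form(x, x0):
    """Return 1 + 1/N + (x0 - xbar)^2 / sum_i (x_i - xbar)^2."""
    n = len(x)
    xbar = sum(x) / n
    ssd = sum((v - xbar) ** 2 for v in x)
    return 1.0 + 1.0 / n + (x0 - xbar) ** 2 / ssd

x = [1.0, 2.0, 3.0, 4.0, 5.0]      # sample mean is 3
for x0 in (3.0, 5.0, 8.0):         # at the mean, at the edge, outside the sample
    print(x0, factor_matrix_form(x, x0), factor_deviation_form(x, x0))
```

The two columns agree, and the factor is smallest at the sample mean, which is the sense in which the forecast variance is smallest in the middle of our "experience."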


Butterfly Effect


Internet Buzz Data


A Prediction Interval

Prediction includes a range of uncertainty

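Point estimate: $\hat{y} = a + bx^*$

The range of uncertainty around the prediction:

$$a + bx^* \pm 1.96\sqrt{S_e^2\left[1 + \frac{1}{N} + \frac{(x^* - \bar{x})^2}{\sum_{i=1}^{N}(x_i - \bar{x})^2}\right]}$$

1.96: the usual 95%

The 1 inside the brackets: due to ε

The remaining two terms: due to estimating α and β with a and b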


Slightly Simpler Formula for Prediction

Prediction includes a range of uncertainty

Point estimate: $\hat{y} = a + bx^*$

The range of uncertainty around the prediction:

$$a + bx^* \pm 1.96\sqrt{S_e^2\left(1 + \frac{1}{N}\right) + (x^* - \bar{x})^2\,SE(b)^2}$$


Prediction from Internet Buzz Regression

$\overline{\text{Buzz}} = 0.48242$

Max(Buzz)= 0.79


Prediction Interval for Buzz = .8

Predict Box Office for Buzz = .8

a+bx = -14.36 + 72.72(.8) = 43.82

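$$\sqrt{s_e^2\left(1 + \frac{1}{N}\right) + (.8 - \overline{\text{Buzz}})^2\,SE(b)^2} = \sqrt{13.3863^2\left(1 + \frac{1}{62}\right) + (.8 - .48242)^2\,10.94^2} = 13.93$$

Interval = 43.82 ± 1.96(13.93)

= 16.52 to 71.12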
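The arithmetic on this slide can be reproduced directly. The sketch below uses only the numbers shown above (a, b, the regression standard error, SE(b), N, and the mean of Buzz); the variable names are chosen here for illustration. Because the slide rounds the intermediate values before forming the interval, the unrounded endpoints differ from the slide's in the second decimal place.

```python
import math

# Values copied from the slide.
a, b = -14.36, 72.72     # intercept and slope
s_e = 13.3863            # standard error of the regression
se_b = 10.94             # standard error of the slope
n = 62                   # number of observations
buzz_mean = 0.48242      # sample mean of Buzz
x_star = 0.8             # value of Buzz at which to predict

point = a + b * x_star
forecast_se = math.sqrt(s_e**2 * (1 + 1 / n) + (x_star - buzz_mean) ** 2 * se_b**2)
lower = point - 1.96 * forecast_se
upper = point + 1.96 * forecast_se

print(round(point, 2), round(forecast_se, 2))
print(round(lower, 2), round(upper, 2))
```

Note that Buzz = .8 lies just above the largest value in the sample (0.79), so this is a prediction slightly outside the range of experience.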


Dummy Variable for One Observation

A dummy variable that isolates a single observation. What does this do?

Define d to be the dummy variable in question: d equals 1 for observation j and 0 for every other observation. Z = all other regressors. X = [Z,d]

Multiple regression of y on X. We know that X'e = 0, where e = the column vector of residuals. That means d'e = 0. Since d'e picks out the single element ej, this says that ej = 0 for that particular residual. The observation will be predicted perfectly.

This is a fairly important result to know.
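A small numerical check makes the result concrete. This is a minimal sketch with made-up data and a hand-written least squares routine (the helper `ols` is hypothetical, written here to avoid any external library). It fits y on [constant, z, d], where d isolates observation j, and confirms that the residual for observation j is zero. It also shows the companion fact that the remaining coefficients equal those obtained by simply dropping observation j.

```python
def ols(X, y):
    """Least squares coefficients from the normal equations X'X b = X'y."""
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [[sum(row[i] * row[c] for row in X) for c in range(k)]
         + [sum(row[i] * yi for row, yi in zip(X, y))]
         for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a_r - factor * a_c for a_r, a_c in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]

# Hypothetical data; observation j = 3 is an outlier.
z = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [2.1, 3.9, 6.2, 20.0, 9.8, 12.1]
j = 3

# X = [constant, z, d], where d = 1 only for observation j.
X = [[1.0, zi, 1.0 if i == j else 0.0] for i, zi in enumerate(z)]
b = ols(X, y)
residuals = [yi - sum(bi * xi for bi, xi in zip(b, row)) for row, yi in zip(X, y)]

# Same regression without the dummy, with observation j removed.
Z_drop = [[1.0, zi] for i, zi in enumerate(z) if i != j]
y_drop = [yi for i, yi in enumerate(y) if i != j]
b_drop = ols(Z_drop, y_drop)

print("residual for observation j:", residuals[j])
print("with dummy:", b[:2], "dropping j:", b_drop)
```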


Oaxaca Decomposition

Two groups, two regression models: (Two time periods,

men vs. women, two countries, etc.)

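$y_1 = X_1\beta_1 + \varepsilon_1$ and $y_2 = X_2\beta_2 + \varepsilon_2$

Consider mean values,

$y_1^* = E[y_1|\text{mean } x_1] = x_1^{*\prime}\beta_1$

$y_2^* = E[y_2|\text{mean } x_2] = x_2^{*\prime}\beta_2$

Now, explain why $y_1^*$ is different from $y_2^*$. (I.e., departing from $y_2$, why is $y_1$ different?) (Could reverse the roles of 1 and 2.)

$$y_1^* - y_2^* = x_1^{*\prime}\beta_1 - x_2^{*\prime}\beta_2 = \underbrace{x_1^{*\prime}(\beta_1 - \beta_2)}_{\text{change in model}} + \underbrace{(x_1^* - x_2^*)'\beta_2}_{\text{change in conditions}}$$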


The Oaxaca Decomposition

Two groups (e.g., men=1, women=2)

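Regression predictions:

$\hat{y}_1 = \bar{x}_1'b_1$, $\hat{y}_2 = \bar{x}_2'b_2$ (e.g., wage equations)

Explain $\hat{y}_1 - \hat{y}_2$.

$$\hat{y}_1 - \hat{y}_2 = \underbrace{\bar{x}_1'(b_1 - b_2)}_{\text{discrimination}} + \underbrace{(\bar{x}_1 - \bar{x}_2)'b_2}_{\text{qualifications}}$$

$$\mathrm{Var}[\bar{x}_1'(b_1 - b_2)] = \bar{x}_1'\left\{\sigma_1^2(X_1'X_1)^{-1} + \sigma_2^2(X_2'X_2)^{-1}\right\}\bar{x}_1$$

$$\text{Wald: } W = \frac{\left(\bar{x}_1'(b_1 - b_2)\right)^2}{\bar{x}_1'\left\{\sigma_1^2(X_1'X_1)^{-1} + \sigma_2^2(X_2'X_2)^{-1}\right\}\bar{x}_1}$$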

What is the hypothesis?
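The decomposition and its Wald statistic can be sketched in a few lines. Everything numeric below is hypothetical (group means, coefficients, and diagonal covariance matrices invented for illustration), and the means are treated as fixed constants, as in the variance formula above. The statistic tests the null hypothesis that the coefficient ("discrimination") component is zero.

```python
def dot(u, v):
    """Inner product of two equal-length lists."""
    return sum(ui * vi for ui, vi in zip(u, v))

def quad_form(x, V):
    """Quadratic form x'Vx for a square matrix V given as a list of rows."""
    return dot(x, [dot(row, x) for row in V])

# Hypothetical group means (constant, education, age) and coefficient estimates.
xbar1 = [1.0, 12.0, 40.0]
xbar2 = [1.0, 11.0, 38.0]
b1 = [0.50, 0.08, 0.010]
b2 = [0.40, 0.07, 0.012]

# Hypothetical estimated covariance matrices of b1 and b2.
V1 = [[1e-2, 0.0, 0.0], [0.0, 1e-4, 0.0], [0.0, 0.0, 1e-6]]
V2 = [[1e-2, 0.0, 0.0], [0.0, 1e-4, 0.0], [0.0, 0.0, 1e-6]]

yhat1 = dot(xbar1, b1)
yhat2 = dot(xbar2, b2)

# xbar1'(b1 - b2): difference due to the coefficients.
coef_part = dot(xbar1, [p - q for p, q in zip(b1, b2)])
# (xbar1 - xbar2)'b2: difference due to the characteristics.
char_part = dot([p - q for p, q in zip(xbar1, xbar2)], b2)

# Variance of the coefficient component and its Wald statistic.
V_sum = [[p + q for p, q in zip(r1, r2)] for r1, r2 in zip(V1, V2)]
wald = coef_part**2 / quad_form(xbar1, V_sum)

print("total difference:", yhat1 - yhat2)
print("components:", coef_part, char_part)
print("Wald statistic:", wald)
```

Under the null, the Wald statistic is compared with a chi-squared distribution with one degree of freedom.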


Application - Income

German Health Care Usage Data, 7,293 Individuals, Varying Numbers of Periods

Data downloaded from Journal of Applied Econometrics Archive. This is an unbalanced panel with 7,293 individuals. The data can be used for regression, count models, binary choice, ordered choice, and bivariate binary choice. This is a large data set. There are altogether 27,326 observations. The number of observations per individual ranges from 1 to 7. (Frequencies are: 1=1525, 2=2158, 3=825, 4=926, 5=1051, 6=1000, 7=987).

Variables in the file include

HHNINC = household nominal monthly net income in German marks / 10000. (4 observations with income=0 were dropped)

HHKIDS = children under age 16 in the household = 1; otherwise = 0

EDUC = years of schooling

AGE = age in years

MARRIED = 1 if married, 0 if not

FEMALE = 1 if female, 0 if male


Regression: Female=0 (Men)


Regression Female=1 (Women)


Pooled Regression


Application

namelist ; X = one,age,educ,married,hhkids$

? Get results for females
? Subsample females
include ; new ; female=1$
? Regression
regr ; lhs=hhninc;rhs=x$
? Coefficients, variance, mean X
matrix ; bf=b ; vf = varb ; xbarf = mean(x) $
? Mean prediction for females
calc ; meanincf = bf'xbarf $

? Get results for males
? Subsample males
include ; new ; female=0$
? Regression
regr ; lhs=hhninc;rhs=x$
? Coefficients, variance, mean X
matrix ; bm=b ; vm = varb ; xbarm = mean(x) $
? Mean prediction for males
calc ; meanincm = bm'xbarm $

? Examine difference in mean predicted income
? Display means and the difference in means
calc ; list ; meanincm ; meanincf
     ; diff = xbarm'bm - xbarf'bf $
? Variance of difference
matrix ; vdiff = xbarm'[vm]xbarm + xbarf'[vf]xbarf $
? Wald test of difference = 0
calc ; list ; diffwald = diff^2 / vdiff $

? "Discrimination" component of difference
? Difference in coefficients, discrimination, and variance of discrimination
matrix ; db = bm-bf ; discrim = xbarm'db
       ; vdb = vm+vf ; vdiscrim = xbarm'[vdb]xbarm $
? Wald test that D = 0
calc ; list ; discrim ; dwald = discrim^2 / vdiscrim $

? "Difference due to difference in X"
? Difference in characteristics
matrix ; dx = xbarm - xbarf $
? Contribution to total difference
matrix ; qual = dx'bf ; vqual = dx'[vf]dx $
? Wald test
calc ; list ; qual ; qualwald = qual^2/vqual $


Results

+------------------------------------+
| Listed Calculator Results          |
+------------------------------------+
MEANINCM =       .359054
MEANINCF =       .344495
DIFF     =       .014559
DIFFWALD =     52.006502
DISCRIM  =      -.005693
DWALD    =      7.268757
QUAL     =       .020252
QUALWALD =   1071.053640
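A quick consistency check on these results: the two components should add up to the total difference, and each Wald statistic can be compared with the 95% critical value of a chi-squared distribution with one degree of freedom (about 3.84). The sketch below uses only the listed numbers; the variable names mirror the calculator names.

```python
# Values copied from the listed calculator results.
diff, diffwald = 0.014559, 52.006502
discrim, dwald = -0.005693, 7.268757
qual, qualwald = 0.020252, 1071.053640

# The "discrimination" and "qualifications" parts should sum to the difference.
gap = diff - (discrim + qual)

CHI2_1_95 = 3.84  # 95% critical value, chi-squared with 1 degree of freedom
rejects = {name: w > CHI2_1_95
           for name, w in [("diff", diffwald), ("discrim", dwald), ("qual", qualwald)]}

print("diff - (discrim + qual) =", gap)
print("reject H0 at 5%:", rejects)
```

All three hypotheses are rejected at the 5% level. The coefficient component is negative, so the positive difference in mean predicted income comes from the difference in characteristics.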


Decompositions
