Miguel Lim

UNIKE Policy Brief

Impact metrics in higher education: the need for caution in the use of quantitative metrics and more research on research policy.

Author: Miguel Lim, EU Marie Curie Fellow, Aarhus University

Intended policy audience: European and Private/NGO Research Funding Agencies, European-level and National Research Councils

Introduction and Policy Context

There is general skepticism about the role of metrics in research management and in the measurement of impact. Metrics are seen as second best, but as the least-worst option when large numbers of research outputs need to be audited and managed.

Even supporters of metrics caution that they need to be used carefully. Their design and application need to be better understood, and their data sources should be transparent.

A variety of factors have contributed to the growth in the number and importance of metrics in higher education and research. These include:

Increased need for audit

Demand for evaluation and accountability of public spending on higher education and research

Need for strategic intelligence on research quality and impact, both for policy makers and for internal research strategies and management

Increasing competition for various resources: prestige, students, staff, external funding, donations

Availability of ‘big data’

Impact is a contested term

There are various definitions of impact. This variety is useful for understanding the relatively broad missions of higher education and research institutions. One definition of impact is provided by the LSE Public Policy Group: “Research has an external impact when an auditable or recorded influence is achieved upon a nonacademic organization or actor in a sector outside the university sector itself ... external impacts need to be demonstrated rather than assumed.” 1

In the UK Research Excellence Framework 2014 (REF2014), impact was defined as an “effect on, change or benefit to the economy, society, culture, public policy or services, health, the environment or quality of life, beyond academia”. The definition included, but was not limited to, “an effect on, change or benefit to: the activity, attitude, awareness, behaviour, capacity, opportunity, performance, policy, practice, process or understanding of an audience, beneficiary, community, constituency, organisation or individuals in any geographic location whether locally, regionally, nationally or internationally.” 2

1 LSE Public Policy Group (2011).

These definitions are broad and encompass the many ways in which research can be used in society, reflecting the various missions of a wide range of higher education institutions. Because such broad definitions capture a large range of activities, impact can be measured in a variety of ways.

Various measures of impact

Evidence of external impacts can take a number of forms. These include:

Bibliometric data: citations in academic publications

Alternative metrics (altmetrics): citations in practitioner or commercial documents, in the media, or in meetings, conferences, seminars or working groups

Measures of funding success

Involvement of academics in policy-making and decision-making processes in government and other societal groups

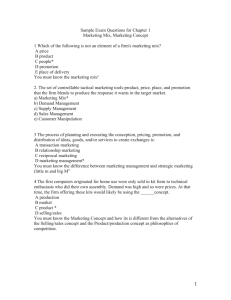

These measures of impact are roughly associated to the various pathways identified by the Research

Councils UK to achieve (1) academic and (2) economic and societal impacts. These pathways are outlined in the diagram below.

2 REF (2014).

(Source: Research Councils UK)

The use of quantitative metrics could affect the behaviour of researchers. Current work on research impact metrics tends to focus on publication and citation data (especially for journal articles) and on patent data. Introducing metrics, or relying on them exclusively, might lead researchers to focus only on publication and patenting activity as proxies for impact.

In REF2014, 6,975 impact case studies and 1,911 impact templates were submitted. The sub-panels rated a significant proportion of research impact as 4-star (44%) or 3-star (40%). The cost of preparing the impact case studies and templates across all submissions has been estimated at £55 million. 3 Using quantitative indicators or metrics might reduce the cost of impact assessment, because detailed case studies are more expensive to produce in terms of time and resources.

However, an analysis by King’s College London and Digital Science concluded that present indicators are insufficient. Better research on impact metrics is needed.

“The quantitative evidence supporting claims for impact was diverse and inconsistent, suggesting that the development of robust impact metrics is unlikely. There was a large amount of numerical data (ie, c.170,000 items, or c.70,000 with dates removed) that was inconsistent in its use and expression and could not be synthesized. In order for impact metrics to be developed, such information would need to be expressed in a consistent way, using standard units. However, as noted above, the strength of the impact case studies is that they allow authors to select the appropriate data to evidence their impact. Given this, and based on our analysis of the impact case studies, we would reiterate…impact indicators are not sufficiently developed and tested to be used to make funding decisions.” 4

3 Manville, C., Morgan Jones, M. et al. (2015).

Quantitative indicators tend to be highly specific and better suited to particular types of research impact.

Patent numbers and citation data are relevant for some kinds of impact; patent data, for instance, capture the impact of research commercialization fairly well. However, such indicators are much less suited to measuring impact on government policy or on societal behaviour.

Recommendations

1. Use metrics carefully when measuring impact. Qualitative assessment of impact cases is still needed. Introducing quantitative metrics to measure impact, or relying on them exclusively, could result in skewed behaviour and gaming.

2. More studies of impact case studies are needed. Studying the submissions to REF2014 could provide a basis for better understanding any quantitative indicators that might be introduced in later research assessments.

3. More research on research and innovation policy is necessary. We also need a better understanding of the consequences of using certain metrics rather than others as proxies for impact.

Conclusion

Caution is needed before using quantitative metrics exclusively to measure impact.

More research on research is needed. At present there is little ‘science’ in ‘science policy’, because the pathways to impact are not yet well understood. Our use of proxies needs to be refined.

Several disciplines and methodological approaches can contribute to the understanding of impact assessment. Researchers from computer science, statistics, the social sciences and economics, among other fields, can work together to study the relationship between research and impact.

4 King’s College London and Digital Science (2015).