Mary_Hill_CSDMS_2015_annual_meeting

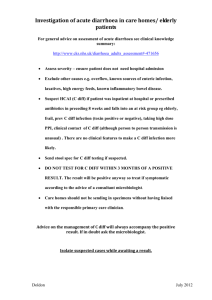


Testing modeling frameworks or… how can we improve predictive skill from our data?

Mary C. Hill, University of Kansas; Emeritus, U.S. Geological Survey
Laura Foglia, Technical University of Darmstadt (soon at UC Davis)
Ming Ye, Florida State University
Lu Dan, Oak Ridge National Laboratory

Frameworks for Model Analysis
• Look for surprises
• Surprises can indicate
  – New understanding about reality, or
  – Model limitations or problems
• Fast methods are convenient: they enable routine evaluation. Computationally frugal, parallelizable methods.

What do I mean by a modeling framework?
• Methods used to
  – Parameterize system characteristics
  – Compare to data
  – Adjust for data (calibration, tuning)
  – Sensitivity analysis
  – Uncertainty quantification

Parameterize system characteristics
• Define zones or simple interpolations and estimate relatively few parameter values
• Define many interpolation points. Estimate a parameter value at each point.
[Figure: Quaternary geology map and conductivity map]

Compare to data
• Graphical
• Statistics like sum of squared residuals

Sensitivity analysis
[Figure: Observation importance using leverage and Cook’s D, by head identifier, with head in meters (Foglia et al., in preparation)]

Uncertainty quantification
[Figure: Results for the true system and the alternative models HO, 3Z, and INT, by name of alternative model; model runs: 100, 1,600, 480,000 (Lu et al. 2012 WRR)]
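As a concrete instance of the "compare to data" statistics named above, a sum of squared weighted residuals might be computed along the lines of the sketch below. The function name, the head values, and the weights are all hypothetical and chosen only for illustration; weighting each residual by the inverse variance of its observation error is a common convention assumed here, not something the slides specify.

```python
def sum_squared_weighted_residuals(observed, simulated, weights):
    """Return sum_i w_i * (observed_i - simulated_i)**2."""
    if not (len(observed) == len(simulated) == len(weights)):
        raise ValueError("observed, simulated, and weights must have equal length")
    return sum(w * (o - s) ** 2 for o, s, w in zip(observed, simulated, weights))


# Hypothetical heads, in meters (not data from the talk).
observed = [300.0, 310.0, 320.0]
simulated = [301.0, 308.0, 320.5]
# Assumed weights: 1 / variance of each observation error.
weights = [1.0, 0.25, 4.0]

sswr = sum_squared_weighted_residuals(observed, simulated, weights)
print(sswr)
```

A smaller value indicates a closer fit, but, as the rest of the talk argues, a close fit alone does not guarantee good predictions.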
Overview of framework methods
Problems:
– Many methods: Tower of Babel?
– Colossal computational demands: necessary?
(Hill + 2015, Groundwater)

Testing modeling frameworks
Test using cross-validation

Maggia Valley, Switzerland
Goal: Integrated hydrologic model to help manage the ecology of this altered hydrologic system.
Use component surface-water (SW) and groundwater (GW) models to test methods for the integrated model.
1954: Dams closed upstream

Maggia Valley, southern Switzerland
Series of studies to identify and test a computationally frugal protocol for model development
1. Test frugal sensitivity analysis (SA) with cross-validation – MODFLOW model with stream (dry) (Foglia + 2007 GW)
2. Demonstrate frugal optimal calibration method – TOPKAPI rainfall-runoff model (Foglia + 2009 WRR)
3. Here: SA and calibration methods enable test of computationally frugal model selection criteria – MODFLOW GW model (wet) (Foglia + 2013 WRR)
4. Integrated hydrologic model – SW and GW
Software: UCODE (Poeter + 2005, 2014); MMA (MultiModel Analysis) (Poeter and Hill 2007)

Model outline and 35 obs locations
[Figure: Model outline with locations of flow data (use gains/losses) and head data]

Model structures
• Streamflow Routing (SFR2) vs. River (RIV)
• Recharge – Zero, constant, TOPKAPI
• Bedrock boundary
  – Old & New: before and after gravimetric survey
  – Depth in south x 2
• K
  – Based on map of surficial Quaternary geology
  – 5 combinations, from 1 parameter to 6
64 alternative models
[Figure: Quaternary geology map and conductivity map (range: 5e-4 to 1e-3)]

Cross-validation experiment
• Flow data (use gains/losses): 3 gains/losses omitted for cross-validation experiment
• Head data: 8 heads omitted for cross-validation experiment

Use SA measure leverage for models calibrated with all 35 obs. High leverage = important obs.
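The leverage statistic mentioned above can be sketched as the diagonal of the weighted hat matrix, h_ii = w_i x_i^T (X^T W X)^-1 x_i, where X holds the sensitivities of each simulated value to each parameter. The code below is a minimal pure-Python sketch under that standard linear-regression definition; it is not the UCODE implementation, and the function names, the sensitivity matrix, and the weights are hypothetical.

```python
def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular matrix: parameters cannot be estimated uniquely")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = s / m[i][i]
    return x


def leverage(sens, weights):
    """Return h_ii = w_i * x_i^T (X^T W X)^-1 x_i for every observation i."""
    n, p = len(sens), len(sens[0])
    xtwx = [[sum(weights[i] * sens[i][a] * sens[i][b] for i in range(n))
             for b in range(p)] for a in range(p)]
    result = []
    for i in range(n):
        z = solve(xtwx, sens[i])  # z = (X^T W X)^-1 x_i
        result.append(weights[i] * sum(sens[i][a] * z[a] for a in range(p)))
    return result


# Hypothetical sensitivities: a straight-line model (intercept, slope)
# observed at x = 0, 1, 2, and one isolated location at x = 10.
sens = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0], [1.0, 10.0]]
weights = [1.0, 1.0, 1.0, 1.0]
h = leverage(sens, weights)
print(h)
```

In this toy case the isolated observation has by far the largest leverage, and the leverages sum to the number of parameters, which is a general property of the hat matrix.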
How important are the omitted observations in the alternative models?
[Figure: Leverage (0.0 to 1.0) versus head observation number (1 to 28) and flow gain/loss observation name (V1_diff, R1_diff, S1_diff, S2_diff, S3_diff, L1_diff, A1_diff). Each symbol represents a different model: R4new_2, R2new_3, R4new_3, R2new_2, R2old_2, R4new_4, S1old_5 (KIC 1), S4old_5 (KIC 2), S2old_5 (KIC 3), S1old_4 (KIC 4), S4new_5 (KIC 5).]
• Quickly evaluate using SA measure leverage. Identifies observations important and unimportant to parameters.
• Omitted obs range from very important to not important.
• Omitting these obs should provide a good test of methods of analyzing alternative models.

Tests conducted
• Test model selection criteria AIC, BIC, and KIC
  – Found problems, especially for KIC
• Compared results with a basic premise related to model complexity
  – Led to some specific suggestions about model development
  – Present this part of the analysis here

Our results vs basic premise?
[Figure, basic premise: Discrepancy between model and observations (observation misfit, o) or prediction error (x) versus number of parameters (1 to 1000, log scale). Model fit keeps improving as parameters are added, while prediction error has a minimum. Annotation: "We want this: the best predictions".]
[Figure, our results: Model fit (SSWR) and prediction error (SSWpred) versus number of estimated parameters (4 to 10). Solid: SFR. Hollow: RIV. Lines connect models that are the same except for K.]
• Lack of improvement with added K parameters indicates the K parameterization has little relation to the processes affecting the data.
• Sensitivity analysis indicated the models with the most parameters were poorly posed. This suggests we can use these methods to detect poor models.

What do poor predictions look like?
[Figure (a): Observed minus predicted value, in m, by number of head observation, for models S4old_2, S4old_3, R1old_2, R2new_3, R4new_3, S2old_3, R4new_4, S4old_5, R4old_2, S4new_2, R4new_2, R4old_3, S2new_2, R2old_2, R2new_2, S2new_3, S2old_5, R2old_5, S4new_5, S1old_4.]

Identify important parameters

Results from the integrated hydrologic model
Groundwater model + distributed hydrologic model + new observations for low flows

| | Original R-R model | New integrated model |
|---|---|---|
| Observations | 120 streamflows | 618 streamflows; 28 GW head observations; 7 gain/loss observations |
| Parameters included in calibration | 4 SW parameter types | 6 SW parameter types (36 pars); 3 GW parameter types (6 pars) |
| Processes | Rainfall-runoff model | Rainfall-runoff model; groundwater model |
| Results | More frequent sampling for low flows is needed (Foglia + 2009 WRR) | Low flows are still as important as high flows for many of the parameters. Observations related to the groundwater model are important also for the rainfall-runoff model. |

Identify important parameters
[Figure: Composite Scaled Sensitivity (0 to 14) for each parameter, split by observation type: GW heads obs, GW flow obs, SW low-medium flows, SW high flows.
TOPKAPI parameters: B_ETP1; soil depth (depth2, depth3, depth4, depth5, depth8, depth11); saturated K (KS2, KS3, KS4, KS5, KS8, KS11); porosity (thetas2, thetas3, thetas4, thetas5, thetas8, thetas11); overland Manning's (MAN_ovr1 to MAN_ovr12); rivers (MAN_RIV1 to MAN_RIV5).
MODFLOW parameters: KRiv (KRIVER1, KRIVER2); K (K2, K57, K6); Rech (RCH_1).]
• GW observations are quite important to some of the SW parameters
• SW obs are interpreted as GW flow (gain/loss) obs, which are important

Conclusions from this test
• The surficial geologic mapping did not provide a useful way to parameterize the K
• Sensitivity analysis can be used to determine when the model detail exceeds what can be supported by data.
• Models unsupported by data are likely to have poor predictive ability
• 5 suggestions for model development

Testing modeling frameworks
• Enabled by computationally frugal methods, which are enabled by computationally robust models
• This is where you, the model developers, come in!

Do your models have numerical daemons???
Kavetski Kuczera 2007 WRR; Hill + 2015 GW; test with DELSA Rakovec 2014 WRR
Need important nonlinearities. Keep them!
Get rid of unrealistic, difficult numerics.

Perspective
• In 100 years, we may very well be thought of as being in the Model T stage of model development
• A prediction: In the future, simply getting a close model fit will not be enough. It will also be necessary to show what obs, pars, and processes are important to the good model fit.
• This increased model transparency is like the safety equipment in automobile development.

I am looking for a test case
• Test a way to detect and diagnose numerical daemons.
• High profile model applications preferred.

AGU: New Technical Committee on Hydrologic Uncertainty
SIAM: New SIAM-UQ section and journal

Quantifying Uncertainty
• Lu Ye Hill 2012 WRR
• Investigate methods that take 10s to 100,000s of model runs (Linear to MCMC)
• New UCODE_2014 with MCMC supports these ways of calculating uncertainty intervals

Test Case
• MODFLOW
• 3.8 km x 6 km x 80 m
• Top: free-surface
• No-flow sides, bottom
• Obs: 54 heads, 2 flows, lake level, lake budget
• Parameters:
  – 5 for recharge, KRB, confining unit KV, net lake recharge, vertical anisotropy
  – K: 1 (HO), 3 (3Z), or 21 (INT)
• Data on K at yellow dots
[Figure: Panels (a) to (d) showing K fields; labels include True, 3Z, and Int]

Calibrated model, Predictions, Confidence Intervals
[Figure: Predictions and confidence intervals for True, HO, 3Z, and INT, by name of alternative model; model runs: 100, 1,600, 480,000]

Statistics to use when the truth is unknown

| | HO | 3Z | Int |
|---|---|---|---|
| Standard error of regression | 1.49 (1.25-1.84) | 1.27 (1.06-1.57) | 1.00 (0.88-1.30) |
| Intrinsic nonlinearity (R_N^2) | 0.02 | 0.54 | 0.26 |

Lesson about uncertainty
It may be more important to consider the uncertainty from many models than to use a computationally demanding way to calculate uncertainty for one model.
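The standard error of regression reported above is conventionally the square root of the sum of squared weighted residuals divided by the degrees of freedom (observations minus estimated parameters). The sketch below assumes that standard definition. The observation and parameter counts follow the test case (54 heads + 2 flows + lake level + lake budget = 58 observations; 5 + 1 = 6 parameters for HO), but the SSWR value is hypothetical and is not a result from the talk.

```python
import math


def standard_error_of_regression(sswr, n_obs, n_par):
    """Return sqrt(SSWR / (n_obs - n_par)), the standard error of regression."""
    if n_obs <= n_par:
        raise ValueError("need more observations than estimated parameters")
    if sswr < 0:
        raise ValueError("SSWR cannot be negative")
    return math.sqrt(sswr / (n_obs - n_par))


n_obs = 54 + 2 + 1 + 1   # heads, flows, lake level, lake budget
n_par = 5 + 1            # five shared parameters plus one K value (HO)
sswr = 104.0             # hypothetical value, for illustration only

s = standard_error_of_regression(sswr, n_obs, n_par)
print(round(s, 3))
```

With weights equal to the inverse of the observation error variances, a value near 1.0 suggests the fit is consistent with the assumed observation errors, and larger values suggest unexplained misfit.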