
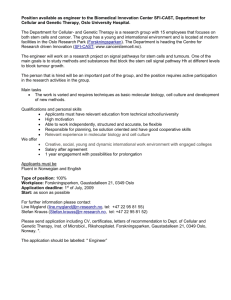


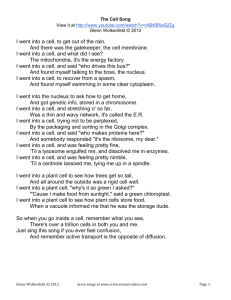

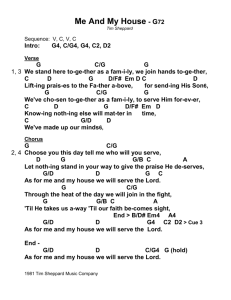

INF5063: Programming Heterogeneous Multi-Core Processors

Network Processors: A generation of multi-core processors

Carsten Griwodz & Pål Halvorsen, University of Oslo, March 11, 2016

Agere Payload Plus APP550

[Figure: APP550 block diagram. Packets arrive from ingress, leave to egress, and can be passed to and from a coprocessor. Attached memories: classifier buffer, scheduler buffer, stream editor memory, classifier memory, scheduler memory, statistics memory. The host connects over a PCI bus.]

- Packet (protocol data unit) assembler
  - collects all blocks of a frame
  - not programmable
- Stream Editor (SED)
  - two parallel engines
  - modifies outgoing packets (e.g., checksum, TTL, …)
  - configurable, but not programmable
- Pattern Processing Engine
  - patterns are specified by the programmer
  - programmable using a special high-level language
  - only pattern-matching instructions
  - parallelism in hardware, using multiple copies and several sets of variables
  - access to different memories
- Reorder Buffer Manager
  - transfers data between the classifier and the traffic manager
  - ensures packet order, which parallelism and variable processing times in pattern processing would otherwise break
- State Engine
  - gathers information (statistics) for scheduling
  - verifies that flows stay within bounds
  - provides an interface to the host
  - configures and controls the other functional units
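The Reorder Buffer Manager's job can be illustrated in software. Packets leave the parallel pattern-processing engines out of order, and a buffer keyed by arrival sequence number releases them strictly in order. This is a minimal sketch. It assumes each packet was tagged with a sequence number at ingress. The class `ReorderBuffer` and its methods are hypothetical and are not the APP550's programming interface.

```python
class ReorderBuffer:
    """Release packets in arrival order even if processing finishes out of order.

    Hypothetical sketch: each packet carries a sequence number assigned at
    ingress; this is not the APP550 programming interface.
    """

    def __init__(self, first_seq=0):
        self.next_seq = first_seq  # sequence number that must be emitted next
        self.pending = {}          # seq -> packet that finished but cannot leave yet

    def complete(self, seq, packet):
        """Record that processing of `seq` finished; return packets now in order."""
        self.pending[seq] = packet
        released = []
        # Drain the buffer as long as the next expected packet is present.
        while self.next_seq in self.pending:
            released.append(self.pending.pop(self.next_seq))
            self.next_seq += 1
        return released


# Engines finish packets 1, 2 and 0 in that order (variable processing time).
rob = ReorderBuffer()
print(rob.complete(1, "pkt1"))  # [] : still waiting for pkt0
print(rob.complete(2, "pkt2"))  # []
print(rob.complete(0, "pkt0"))  # ['pkt0', 'pkt1', 'pkt2']
```

A slow packet holds back every later packet. This is the price of preserving order when processing times vary.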
PowerNP

[Figure: PowerNP block diagram. Network side: 4 interfaces in from the net and 4 interfaces out to the net. Host side: 2 interfaces in from the host and 2 interfaces out to the host. Inside: ingress queue and ingress data store, hardware classifier, dispatch unit, 8 embedded processors with instruction memory, embedded PowerPC core, control store, internal and external memory, coprocessors, egress queue and egress data store.]

- Embedded processors
  - 8 embedded processors
  - 4 kbytes local memory each
  - 2 cores/processor
  - 2 threads/core
- Embedded PowerPC (GPU)
  - no OS on the NPF
- Link layer
  - framing outside the processor

IXP1200 Architecture

- RISC processor
  - StrongARM running Linux
  - control, higher-layer protocols and exceptions
  - 232 MHz
- Access units
  - coordinate access to external units
- Scratchpad
  - on-chip memory
  - used for IPC and synchronization
- Microengines
  - low-level devices with a limited instruction set
  - transfers between memory devices
  - packet processing
  - 232 MHz

IXP2400 Architecture

[Figure: IXP2400 block diagram. An embedded RISC CPU (XScale), microengines 1 to 8, scratch memory and a coprocessor are connected by multiple independent internal buses to SRAM, DRAM (SDRAM access), the PCI bus, the slowport (to FLASH) and the Media Switch Fabric (MSF) with its receive and transmit buses.]

- RISC processor: StrongARM at 233 MHz (IXP1200) vs. XScale at 600 MHz (IXP2xxx)
- Microengines: 6 at 233 MHz (IXP1200) vs. 8 at 600 MHz (IXP2xxx)
- Receive/transmit buses: shared bus (IXP1200) vs. separate buses (IXP2xxx)
- Coprocessors
  - hash unit
  - 4 timers
  - general-purpose I/O pins
  - external JTAG connections (in-circuit tests)
  - several bulk ciphers (IXP2850 only)
  - checksum (IXP2850 only)
  - …
- Slowport
  - shared interface to external units
  - used for FlashROM during bootstrap
- Media Switch Fabric
  - forms the fast path for transfers
  - interconnect for several IXP2xxx

Example: SpliceTCP

TCP Splicing

[Figure sequence: a TCP splicing exchange between some client and the servers across the Internet, stepping through SYN, SYNACK, ACK, HTTP-GET and DATA.]

TCP Splicing: three implementations

- Application layer: accept, connect, then while(1) { read; write }
- Linux Netfilter (network layer)
  - establish upstream connection
  - receive entire packet
  - rewrite headers
  - forward packet
- IXP 2400
  - establish upstream connection
  - parse packet headers
  - rewrite headers
  - forward packet

[Figure: protocol stacks (physical, data link, network, transport and application layers) showing the layer at which each variant operates.]

Throughput vs. Request File Size

[Figure: throughput (Mbps, 0 to 800) of the Linux-based and the NP-based splicer for request file sizes from 1 KB to 1024 KB.]

- Major performance gain at all request sizes

Graph from the presentation of the paper "SpliceNP: A TCP Splicer using a Network Processor", ANCS 2005, Princeton, NJ, Oct 27-28, 2005, by Li Zhao, Yan Luo, Laxmi Bhuyan (Univ. Calif. Riverside) and Ravi Iyer (Intel).

Example: Transparent protocol translation and load balancing in a media streaming scenario

Slides from an ACM MM 2007 presentation by Espeland, Lunde, Stensland, Griwodz and Halvorsen

Load Balancer

[Figure: mplayer clients reach several RTSP/RTP video servers through an IXP 2400. On the IXP 2400, ingress traffic passes an RTSP/RTP parser and then the balancer (RTSP and RTP/UDP) before egress. A network monitor tracks the historic and current loads of the different servers.]

The balancer's steps:
1. identify the connection
2. if the connection exists, send to the right server (select the port to use); otherwise create a new session (select one server); then send the packet

Transport Protocol Translator

[Figure: the same setup, but the mplayer clients now send HTTP as well as RTSP requests to the IXP 2400.]

- HTTP streaming is frequently used today!

[Figure: a protocol translator is added on the IXP 2400. It converts between HTTP towards the clients and RTSP/RTP (RTP/UDP) towards the video servers, alongside the RTSP/RTP parser and the balancer.]
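The balancer's per-packet decision can be sketched as follows. It identifies the connection and reuses the server of an existing session. Otherwise it creates a session on one server. This is a hypothetical sketch, not the prototype's code. It makes several assumptions. Connections are keyed by the (source IP, source port, destination IP, destination port) tuple. "Select one server" means the least-loaded server. The `loads` values stand in for the monitor's load reports and simply count sessions. Port selection is omitted. The names `LoadBalancer` and `route` are illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class LoadBalancer:
    """Hypothetical sketch of the balancer's per-packet decision."""
    loads: dict                                   # server -> load estimate (assumed: session count)
    sessions: dict = field(default_factory=dict)  # connection key -> server

    def route(self, src_ip, src_port, dst_ip, dst_port):
        """Return the server that should receive this packet."""
        key = (src_ip, src_port, dst_ip, dst_port)  # 1. identify the connection
        if key in self.sessions:                    # 2. existing session:
            return self.sessions[key]               #    keep using the same server
        # New session: pick the least-loaded server and remember the choice.
        server = min(self.loads, key=self.loads.get)
        self.sessions[key] = server
        self.loads[server] += 1                     # count sessions as load (assumption)
        return server


lb = LoadBalancer(loads={"server1": 0, "server2": 0})
first = lb.route("10.0.0.5", 40000, "192.0.2.1", 554)
second = lb.route("10.0.0.6", 40001, "192.0.2.1", 554)
again = lb.route("10.0.0.5", 40000, "192.0.2.1", 554)
print(first, second, again)  # server1 server2 server1
```

On a network processor, the session table would typically live in fast shared memory (for example, SRAM) so that every packet of a connection finds its server with a single lookup. That placement is an assumption here, not a detail from the slides.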
Results

- The prototype works: it both load balances and translates between HTTP/TCP and RTP/UDP
- The protocol translation gives a much more stable bandwidth than using HTTP/TCP all the way from the server

[Figure: bandwidth with protocol translation compared with HTTP all the way from the server.]

Example: Booster Boxes

Slide content and structure mainly from the NetGames 2002 presentation by Bauer, Rooney and Scotton

Client-Server and Peer-to-peer

[Figures: communication among several local distribution networks connected by a backbone network, first client-server, then peer-to-peer.]

Booster boxes

- Middleboxes
  - attached directly to ISPs' access routers
  - less generic than, e.g., firewalls or NAT
- Assist distributed event-driven applications
  - improve scalability of client-server and peer-to-peer applications
- Application-specific code: "boosters"
  - caching on behalf of a server
  - aggregation of events
  - intelligent filtering
  - application-level routing

[Figures: the same network topology with booster boxes deployed.]

Booster boxes: application-specific code

- Caching on behalf of a server
  - non-real-time information is cached
  - booster boxes answer on behalf of servers
- Aggregation of events
  - information from two or more clients within a time window is aggregated into one packet
- Intelligent filtering
  - outdated or redundant information is dropped
- Application-level routing
  - packets are forwarded based on packet content, application state and destination address

Architecture

- Data Layer
  - behaves like a layer-2 switch for the bulk of the traffic
  - copies or diverts selected traffic
  - IBM's booster boxes use the packet capture library ("pcap") filter specification to select traffic

Data Aggregation Example: Floating Car Data

- Main booster task: complex message aggregation
  - statistical computations
  - context information
- Very low real-time requirements

[Figure: cars transmit position, speed, driven distance, …; the booster performs statistics gathering, compression, filtering, …; the results serve traffic monitoring/predictions, pay-as-you-drive insurance, car maintenance, car taxes, …]

Interactive TV Game Show

- Main booster task: simple message aggregation
- Limited real-time requirements
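The booster mechanisms "aggregation of events" and "intelligent filtering" can be combined in a small sketch. Events that arrive within a time window are merged into one outgoing packet. Within a window, only the newest event per client is kept, so outdated information is dropped. This is a hypothetical illustration, not IBM's booster code. It assumes a fixed window length in seconds and a window that closes when the first event beyond it arrives. The names `Event` and `EventAggregator` are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Event:
    client: str
    timestamp: float  # seconds
    payload: str


class EventAggregator:
    """Hypothetical booster sketch: merge events from a time window into one packet."""

    def __init__(self, window):
        self.window = window      # window length in seconds (assumed parameter)
        self.window_start = None  # timestamp of the first event in the current window
        self.latest = {}          # client -> newest Event in the current window

    def add(self, event):
        """Add an event; return an aggregated packet if a window was closed, else None."""
        packet = None
        if self.window_start is None:
            self.window_start = event.timestamp
        elif event.timestamp - self.window_start >= self.window:
            packet = self.flush()                # window is over: emit one packet
            self.window_start = event.timestamp  # and open a new window
        current = self.latest.get(event.client)
        # Filtering: an older event never replaces a newer one from the same client.
        if current is None or event.timestamp >= current.timestamp:
            self.latest[event.client] = event
        return packet

    def flush(self):
        """Emit the current window as one packet (payloads sorted by client)."""
        packet = [self.latest[c].payload for c in sorted(self.latest)]
        self.latest = {}
        self.window_start = None
        return packet


agg = EventAggregator(window=0.5)
agg.add(Event("alice", 0.0, "A1"))
agg.add(Event("bob", 0.1, "B1"))
agg.add(Event("alice", 0.3, "A2"))          # replaces A1 within the window
print(agg.add(Event("bob", 0.6, "B2")))     # ['A2', 'B1']
print(agg.flush())                          # ['B2']
```

Three client messages become one packet, which is the effect the game-show booster relies on. The window length trades latency for reduction, so it must respect the application's real-time requirements.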
[Figure: interactive TV game show booster flow: 1. packet generation, 2. packet interception, 3. packet aggregation, 4. packet forwarding.]

Game with large virtual space

- Main booster task: dynamic server selection
  - based on the current in-game location
  - requires application-specific processing
- High real-time requirements

[Figure: the virtual space is divided into a region handled by server 1 and a region handled by server 2.]

Summary

- Scalability
  - by application-specific knowledge
  - by network awareness
- Main mechanisms
  - caching on behalf of a server
  - aggregation of events
  - attenuation
  - intelligent filtering
  - application-level routing
- Application of a mechanism depends on
  - workload
  - real-time requirements

Multimedia Examples: Multicast Video-Quality Adjustment

[Figures: the multicast video-quality adjustment scenario, and a host with CPU, memory, memory hub and IO hub.]

Multicast Video-Quality Adjustment

- Several ways to do video-quality adjustments
  - frame dropping
  - re-quantization
  - scalable video codec
  - …
- Yamada et al. 2002: use a low-pass filter to eliminate high-frequency components of the MPEG-2 video signal and thus reduce the data rate
  - determine a low-pass parameter for each GOP
  - use the low-pass parameter to calculate how many DCT coefficients to remove from each macroblock in a picture
  - eliminating the specified number of DCT coefficients reduces the video data rate
  - the low-pass filter was implemented on an IXP1200

Low-pass filter on IXP1200

- parallel execution on the 200 MHz StrongARM and the microengines
- 24 MB DRAM devoted to the StrongARM only
- 8 MB DRAM and 8 MB SRAM shared
- a test-filtering program on a regular PC determined the work distribution
  - 75% of the data comes from the block layer
  - 56% of the processing overhead is due to the DCT
- five-step algorithm:
  1. the StrongARM receives a packet and copies it to a shared memory area
  2. the StrongARM processes the headers and generates macroblocks (in shared memory)
  3. the microengines read data and information from shared memory and perform quality adjustments on each block
  4. the StrongARM checks whether the last macroblock is processed (if not, go to 2)
  5. the StrongARM rebuilds the packet

Segmentation of MPEG-2 data

- slice = a 16-pixel-high stripe
- macroblock = a 16 x 16 pixel square
  - four 8 x 8 luminance blocks
  - two 8 x 8 chrominance blocks
- DCT transformed, with coefficients sorted in ascending order of frequency

Data packetization for video filtering

- 720 x 576 pixel frames at 30 fps
- 36 slices with 45 macroblocks each per frame
- each slice = one packet
- 8 Mbps stream: ~7 Kb per packet

Evaluation: three scenarios tested

- StrongARM only: 550 kbps
- StrongARM + 1 microengine: 350 kbps
- StrongARM + all microengines: 1350 kbps
- the achieved real-time transcoding rate is not enough for practical purposes, but the distribution of the workload is nice

Parallelism, Pipelining & Workload Partitioning

Divide and …

Divide a problem into parts, but how?
- Pipelining
- Parallelism
- Hybrid

[Figures: the three ways of partitioning the work.]

Key Considerations

- System topology
  - processor capacities: different processors have different capabilities
  - memory attachments:
    - different memory types have different rates and access times
    - different memory banks have different access times
  - interconnections: different interconnects/buses have different capabilities
- Requirements of the workload?
  - dependencies
- Parameters?
  - width of the pipeline (level of parallelism)
  - depth of the pipeline (number of stages)
  - number of jobs sharing buses

Network Processor Example

Pipelining vs. Multiprocessor, by Ning Weng & Tilman Wolf
- a network processor example
- pipelining, parallelism and hybrids are all possible
- packet processing scenario
- what is the performance of the different schemes, taking into account …
  - … processing dependencies
  - … processing demands
  - … contention on memory interfaces
  - … pipelining and parallelism effects (experimenting with the width and the depth of the pipeline)

Simulations

- Several application examples in the paper give different DAGs, e.g., flow classification: classify flows according to IP addresses and transport protocols
- System throughput is measured while varying all the parameters
  - # processors in parallel (width)
  - # stages in the pipeline (depth)
  - # memory interfaces (buses) between each stage in the pipeline
  - memory access times

Results (# memory interfaces per stage M = 1, memory service time S = 10)

- Throughput increases with the pipeline depth D
  - good scalability: proportional to the # processors
- Throughput increases with the width W initially, but tails off for large W
  - poor scalability due to contention on the memory channel
- Efficiency per processing engine …?
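The qualitative trend in these results can be reproduced with a deliberately simple bottleneck model. This is not Weng & Wolf's analytic model. It is a hypothetical sketch with several assumptions. The per-packet work (compute time and number of memory accesses) is split evenly over D identical stages. Each stage has W engines sharing M memory interfaces. Every access occupies an interface for S time units. Only M = 1 and S = 10 come from the slide. The workload numbers and the function name `pipeline_throughput` are made up for illustration.

```python
def pipeline_throughput(width, depth, mem_ifaces, service_time,
                        compute_per_packet, accesses_per_packet):
    """Packets per time unit for a depth x width grid of processing engines.

    Hypothetical bottleneck model (not the Weng & Wolf model):
    - the per-packet work is split evenly over `depth` identical pipeline stages;
    - each stage has `width` engines and `mem_ifaces` memory interfaces;
    - every memory access occupies one interface for `service_time` units.
    """
    compute_per_stage = compute_per_packet / depth
    accesses_per_stage = accesses_per_packet / depth
    # Compute bound: each engine finishes one packet every compute_per_stage units.
    compute_rate = width / compute_per_stage
    # Memory bound: the interfaces serve mem_ifaces / service_time accesses per unit.
    memory_rate = mem_ifaces / (accesses_per_stage * service_time)
    # All stages are identical, so the pipeline runs at the slower of the two bounds.
    return min(compute_rate, memory_rate)


# M = 1 and S = 10 as on the slide; the workload numbers are made up.
params = dict(mem_ifaces=1, service_time=10,
              compute_per_packet=200, accesses_per_packet=10)
for depth in (1, 2, 4):
    print("D =", depth, [pipeline_throughput(w, depth, **params) for w in (1, 2, 4, 8)])
```

With these made-up numbers, throughput doubles with every doubling of D. Widening a stage beyond W = 2 adds nothing, because its single memory interface is already saturated. In a real system, queuing at the memory interface makes the curve tail off gradually instead of flattening abruptly as this min() model does.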
Lessons learned …

- Memory contention can become a severe system bottleneck
  - the memory interface saturates with about two processing elements per interface
  - off-chip memory accesses cause a significant reduction in throughput and a drastic increase in queuing delay
  - performance increases with more memory channels and lower access times
- Most NP applications are sequential in nature, which leads to highly pipelined NP topologies
- Processing tasks must be balanced to avoid slow pipeline stages
- Communication and synchronization are the main contributors to the pipeline stage time, next to the memory access delay
- "Topology" has a significant impact on performance

Some References

1. Tatsuya Yamada, Naoki Wakamiya, Masayuki Murata, Hideo Miyahara: "Implementation and Evaluation of Video-Quality Adjustment for Heterogeneous Video Multicast", 8th Asia-Pacific Conference on Communications, Bandung, September 2002, pp. 454-457
2. Daniel Bauer, Sean Rooney, Paolo Scotton: "Network Infrastructure for Massively Distributed Games", NetGames, Braunschweig, Germany, April 2002
3. J.R. Allen, Jr., et al.: "IBM PowerNP network processor: hardware, software, and applications", IBM Journal of Research and Development, 47(2/3), pp. 177-193, March/May 2003
4. Ning Weng, Tilman Wolf: "Profiling and mapping of parallel workloads on network processors", ACM Symposium on Applied Computing (SAC 2005), pp. 890-896
5. Ning Weng, Tilman Wolf: "Analytic modeling of network processors for parallel workload mapping", ACM Transactions on Embedded Computing Systems, 8(3), 2009
6. Li Zhao, Yan Luo, Laxmi Bhuyan, Ravi Iyer: "SpliceNP: A TCP Splicer using a Network Processor", ANCS 2005, 2005
7. Håvard Espeland, Carl Henrik Lunde, Håkon Stensland, Carsten Griwodz, Pål Halvorsen: "Transparent Protocol Translation for Streaming", ACM Multimedia 2007