Recommended Standards - Alliance of Information and Referral

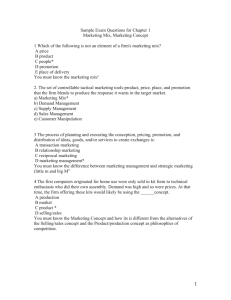

Metrics for 211 Centers and Systems

Policy Dialogue with Maribel Marin, Executive Director, 211 LA County – CAIRS President
29th I&R Annual Training and Education Conference, May 7, 2007

Policy Objectives

- Identify a specific set of metrics that all 211 centers can measure and track.
- Provide a clear definition of each metric so that centers measure “apples to apples.”
- Communicate a clear scope for 211 service to the public and to funders.
- Enable regional, statewide, and national calibration, evaluation, and assessment of 211 services.

What is a metric and what is its value?

A metric is a measure of activity or performance that enables the assessment of outcomes. Metrics can help to answer key questions about operational effectiveness:

- Are long-term goals and objectives being achieved?
- What does success look like?
- How satisfied are callers with services?
- How important is the service to the community?
- How effective are managers and specialists?

What are the Benefits?

- Enhanced decision making: goals can be set for desired results, results can be measured, and outcomes can be clearly articulated.
- Improved internal accountability: more delegation and less “micro-management” when individuals are clear about responsibilities and expectations.
- Goals and strategic objectives are meaningful: tracking progress enables the evaluation of planning efforts and can aid in determining whether a plan is good or not.

Source: Wayne Parker, Strategic Planning 101 – Why Measure Performance? Workstar Library 2003

Why Metrics Matter for 211?

- Most 211s collect, or have the ability to collect, voluminous amounts of data through their Call Management Systems (CMS), Automated Call Distribution systems (ACDs), and/or their I&R software.
- Much data is collected for purposes of complying with AIRS standards, particularly for agency accreditation or reporting to boards, funders, and/or contracts.
- We have yet to realize the full value of this data for benchmarking (comparing performance against goals/mission and/or industry peers) and for its potential for aggregation across regional, state, and national levels.
- Through benchmarking and aggregation of data across the field, a story can be told about the value of 211 service as a social safety net for the entire nation, on a daily basis and during times of crisis and disaster.
- The national 211 business plan clearly calls out the need for creating a unified system through the development of industry standards in order to avoid misuse of 211 (too broadly or too narrowly defined) and create “sustainability for the total system.”
- Funding, funding, funding: aggregate data and demonstrable performance outcomes enable pursuit of system-wide funding strategies.

AIRS Data Requirements

Reports and Measures

- Service Requests
- Referrals Provided
- Service Gaps
- Demographic Data
  - Zip Code
  - City
  - Age
  - Gender
  - Language
  - Target Population
  - First Time/Repeat Caller
- Follow up

AIRS Standards National Reporting

- Total Calls Answered/Handled
- Services Requested

Commercial Call Center Metrics

Call center trade journals* consistently identify the following Key Performance Indicators (KPIs) as leading performance metrics or benchmarks:

- Service Level (% of calls responded to within a specified timeframe, such as 80% in under 60 seconds)
- Speed of Answer (time caller waits in queue before live answer)
- First-Call Resolution (% of callers helped with one call, not requiring a repeat call on the same issue)
- Adherence to Schedule (actual and scheduled work by time of day and type of work: handling calls, attending meetings, coaching, breaks, etc.)
- Forecasting Accuracy (measured on 2 levels: actual vs. forecasted call volume for hiring/recruiting and for existing staff schedules)
- Handle Time (includes talk time, hold time, and after-call work)
- Customer Satisfaction
- Cost per Call
- Abandonment Rate (calls in queue disconnected by caller)

*ICMI Call Center Magazine, Contact Professional, Benchmark Portal, Customer Operations Performance Center Inc. (COPC Inc.), incoming.com

Govt/Non-Profit

| Metric | Avg | Best |
| --- | --- | --- |
| Service level (80% of calls answered) | 62.8 sec | 21.3 sec |
| Avg Speed of Answer | 59.4 sec | 18.6 sec |
| Avg Call Length | 7.04 min | 4.44 min |
| First Call Resolution | 49.1% | 65.3% |
| Abandonment Rate | 9.18% | 5.46% |
| Schedule Adherence | 70.1% | 73.3% |
| Cost per Call | $6.31 | $3.52 |

Gold Standard

| Metric | Avg | Gold |
| --- | --- | --- |
| Speed of Answer | 40 sec | 31 sec |
| Avg Handle Time | 7.1 min | 6.9 min |
| First Call Resolution | 52% | 75% |
| % Very Satisfied | | |

Sources: Benchmark Portal (2003), Govt & NonProfit Industry Benchmark Report; Benchmark Portal (2005), Govt & NonProfit industry benchmark report: “Best-in-class call center performance”

Best Practices Study on Customer Service – City of Los Angeles

Common Call Center Performance Measures

| Dimension | Interpretation | Best Practice |
| --- | --- | --- |
| Average Speed of Answer | Measures the percent of calls answered within a prescribed period of time. | 80 percent within 20 seconds |
| Queue | Measures the length of time callers wait for live assistance, when requested. | 43 seconds |
| Electronically Handled Calls | Measures how many callers complete their calls using only the self-help technologies (IVR automated attendant). | 30 percent |
| Abandonment Rate | Measures how many callers hang up while waiting for live assistance. | 5 percent |
| First Contact Resolution | Measures how many calls are satisfactorily completed, or not escalated, after receiving live assistance. | 88 percent |
| Performance Tracking | Generate reports on call center performance that can serve as input into the budgeting process. | Weekly |

Source: Gartner Group and PwC subject matter experts

Common 211 Metrics

Quantity of Service and Quality of Service:

- Number of Calls Handled/Answered
- Referrals Provided to Callers
- Speed of Answer/Service Level
- Abandonment Rate/Dropped Calls
- Call Length
- Follow up Rate
- Service Gaps
- Caller Satisfaction

211 Recommended Best Practices

- Agency Accreditation (AIRS Standards compliance)
- CIRS/CRS certification
- 24/7 service
- Universal Access: cell, TTY, cable, web
- Service provided by a live, trained I&R Specialist without being required to leave a message or hang up and dial a separate number
- Multilingual service
- Call monitoring

211 LA County Metrics

| Metric | Target | Actual (1st Qtr 2007) |
| --- | --- | --- |
| Service Level/Speed of Answer | 80% in 60 sec | 92% |
| Abandonment Rate | < 10% | 3.5% |
| Satisfied with Services | 95% | 93% |
| Follow-up Rate (non-crisis 211) | 3 calls/CRA per month | 3+/CRA/mo |
| Average calls monitored | 2 calls/CRA per week | 2/CRA/wk |
| # of new programs/services added to database each year (for FY 06-07) | 10% increase per year | 3.6% |
| Annual Survey Response Rate, 1st Mailing (figures for June-July 06-07 fiscal year; 1 qtr remaining, in process) | 60% | 49.96% |
| Annual Survey Response Rate, 2nd Mailing | 20% | 18.44% |
| Annual Survey Response Rate, Phone Contact | 20% | 9% |
| # of agency site visits per year | 50 | 20 |
| % of eligible CRAs AIRS certified | 100% | 81% |
| Employee turnover rate | < 10% | 7.7% |

Standardizing 211 Metrics

Attempts to generate national reports on performance and service outcomes to support funding requests have been challenged by the lack of common definitions for basic measurements. Many 211 systems are attempting to bridge the differences through data standardization processes. Just a few are referenced here:

- Texas I&R Network: Dr. Sherry Bame, Texas A&M University
- United Ways of Ontario, Canada: Michael Milhoff, consultant
- WIN 211 (Washington and Oregon): Karen Fisher, Associate Professor, University of Washington Information School (211 Outcomes Registry project)
- IN 211 (Indiana): through its 211 operations manual development process
- AIRS Accreditation Committee: through the 2007 revised standards, led by Faed Hendry, chair and Manager of Training and Outreach at Findhelp Information Services of Toronto

Challenges to Standardization

- Limited data collection/reporting capability
- Lack of common terms and definitions
- Lack of standard call types/needs lists
- Inconsistent measurement/reporting frequencies
- Too much variation in data collection fields among I&R software systems
- Varying data sources: I&R software vs. CMS/ACD
- Too much data collection, not enough data analysis

Key Consistency Questions

- How is a call defined?
- Are demographics taken on caller or client?
- Are multiple clients on one call counted as a single transaction? A single call?
- Is age data collected by number of years, age range/group, or birth date?
- Is location data related to caller or client? Is it location of call or location of residence?
- Is data reported daily, monthly, quarterly, or annually?

Why Metrics Matter

1. What you don’t measure doesn’t count.
2. What gets measured gets done.
3. If you don’t measure results, you can’t tell success from failure.
4. If you can’t see success, you can’t reward it.
5. If you can’t reward success, you’re probably rewarding failure.
6. If you can’t see success, you can’t learn from it.
7. If you can’t recognize failure, you can’t learn from it.
8. If you can demonstrate results, you can win public support.

Source: Aiming to Improve, Audit Commission; Reinventing Government, Osborne and Gaebler

Next Steps

- Join the 211 North America Metrics Project: http://211metrics.updatelog.com
- Share findings of your region’s work
- Weigh in on which metrics should be collected by 211s
- Voice your position on how the scope of 211 service should be defined
- Participate in discussions on:
  - Common definitions and terms
  - Standard call types/needs lists
  - Measurement/reporting frequencies
  - Demographic data best practices

Sources and References

Websites

- www.callcentermagazine.com
- www.contactprofessional.com
- www.incoming.com (Queue Tips)
- www.copc.com - Customer Operations Performance Center Inc. (COPC Inc.) is a leading authority on operations management and performance improvement for buyers and providers of customer contact center services.
- www.iawg.gov/performance/ - The Interagency Working Group (IAWG) on U.S. Government-Sponsored International Exchanges and Training, created in 1997 for improving the coordination, efficiency, and effectiveness of United States Government-sponsored international exchanges and training.
- www.211California.org - Section on 211 Standards

Reports

- National 211 Benchmark Survey – conducted by 211 San Diego (www.cairs.org/211.htm in 211 standards section)
- Devising, Implementing, and Evaluating Customer Service Initiatives (1/30/2007) – Office of Citizen Services & Communications: US GSA
- Agency Experiences with Outcomes Measurement – United Way of America (2000)
- WIN 2-1-1 Performance Evaluation and Cost-Benefit Analysis of 2-1-1 I&R Systems – University of Washington, Information School – Karen Fisher/Matt Saxton (2005)
- National 211 Business Plan – AIRS/UWA (2002)
- 211 Data Entry & Database Coding for TIRN – Texas A&M University – Dr. Sherry I. Bame
- 211 Across California by 2010 – Business Plan: 211 California Partnership with Sadlon & Associates, Inc.
- AIRS Standards for Professional Information and Referral, Version 5.1 (2006)
- Operations Manual – Indiana 211 Partnership, Inc. - First Approved 7/09/02; last revision 5/9/06
- COPC-2000 CSP Gold Standard Release 4.1 (January 2007)

Contact Information

Maribel Marin
Executive Director
211 LA County
(626) 350-1841
mmarin@211LA.org

Real People. Real Answers. Real Help.
www.211LACounty.org

INFORMATION AND REFERRAL FEDERATION OF LOS ANGELES COUNTY
Serving Los Angeles County since 1981
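
Worked example: computing call metrics from call records

The KPI definitions in this presentation (service level, abandonment rate, speed of answer, handle time) can be turned into arithmetic on call records. The Python sketch below is only an illustration under stated assumptions, not a method used by any 211 center or CMS/ACD product. The `Call` record, its field names, and the `summarize` function are hypothetical. The sketch also assumes that a "call" means every call that entered the queue, that service level is measured against all such calls, and that "within 60 seconds" includes exactly 60 seconds. These are the kinds of choices the Key Consistency Questions ask centers to agree on before comparing numbers.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Call:
    """One inbound call. Field names are hypothetical, not a real CMS/ACD export."""
    wait_sec: float              # time in queue before live answer or hang-up
    answered: bool               # False: caller disconnected while in queue
    talk_sec: float = 0.0
    hold_sec: float = 0.0
    after_call_sec: float = 0.0  # after-call work


def summarize(calls, threshold_sec=60):
    """Return the four metrics as a dict (assumed definitions, see lead-in)."""
    offered = len(calls)
    answered = [c for c in calls if c.answered]
    if not answered:
        raise ValueError("need at least one answered call")
    # Assumption: answered calls with wait <= threshold count toward service level.
    within = sum(1 for c in answered if c.wait_sec <= threshold_sec)
    return {
        # Share of all offered calls answered within the threshold.
        "service_level_pct": 100 * within / offered,
        # Share of offered calls disconnected by the caller while in queue.
        "abandonment_rate_pct": 100 * (offered - len(answered)) / offered,
        # Average queue wait of answered calls.
        "avg_speed_of_answer_sec": mean(c.wait_sec for c in answered),
        # Handle time = talk time + hold time + after-call work.
        "avg_handle_time_sec": mean(
            c.talk_sec + c.hold_sec + c.after_call_sec for c in answered
        ),
    }


# Made-up sample data: three answered calls and one abandoned call.
calls = [
    Call(wait_sec=10, answered=True, talk_sec=200, hold_sec=20, after_call_sec=30),
    Call(wait_sec=45, answered=True, talk_sec=300, after_call_sec=60),
    Call(wait_sec=90, answered=True, talk_sec=120),
    Call(wait_sec=30, answered=False),
]
report = summarize(calls)
print(report)
```

Changing the denominator (for example, counting only answered calls) or the threshold changes the reported service level for the same raw data, which is why common definitions matter for "apples to apples" comparison.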