GG313 Lecture 12

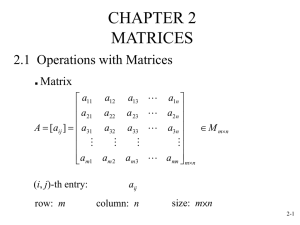

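Matrix Operations
Sept 29, 2005

Matrix Addition

Requirement: same order
Characteristic: element-by-element addition
Notation: A + B = C

$$
A = \begin{bmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \\ a_{31} & a_{32} \end{bmatrix}, \quad
B = \begin{bmatrix} b_{11} & b_{12} \\ b_{21} & b_{22} \\ b_{31} & b_{32} \end{bmatrix}, \quad
C = A + B = \begin{bmatrix} a_{11}+b_{11} & a_{12}+b_{12} \\ a_{21}+b_{21} & a_{22}+b_{22} \\ a_{31}+b_{31} & a_{32}+b_{32} \end{bmatrix}
$$

For example:

$$
A = \begin{bmatrix} 1 & 1 \\ 1 & 1 \\ 1 & 1 \end{bmatrix}, \quad
B = \begin{bmatrix} 2 & 1 \\ -1 & 3 \\ 0 & -2 \end{bmatrix}, \quad
C = A + B = \begin{bmatrix} 3 & 2 \\ 0 & 4 \\ 1 & -1 \end{bmatrix}
\qquad \text{(Eqn 3.20)}
$$

Commutative: A + B = B + A
Associative: (A + B) + C = A + (B + C)

You can add a scalar to a matrix; the scalar is added to every element:

$$
A = \begin{bmatrix} 1 & 1 \\ 1 & 1 \\ 1 & 1 \end{bmatrix}, \quad
B = A + 4 = \begin{bmatrix} 5 & 5 \\ 5 & 5 \\ 5 & 5 \end{bmatrix}
$$

You can also multiply a matrix by a scalar; every element is multiplied by the scalar:

$$
A = \begin{bmatrix} 2 & 4 \\ 4 & 6 \\ 1 & 3 \end{bmatrix}, \quad
B = A * 2 = \begin{bmatrix} 4 & 8 \\ 8 & 12 \\ 2 & 6 \end{bmatrix}
$$

Dot Product

Also known as: scalar product or inner product
Requirements: a row vector and a column vector of the same order (dimensions)
Characteristics: the result is a scalar
Uses: widely used in physics. For example, the dot product of a force and a velocity gives the power delivered to a body.
Notation: a • b = d

$$
a = \begin{bmatrix} a_1 & a_2 & a_3 \end{bmatrix}, \quad
b = \begin{bmatrix} b_1 \\ b_2 \\ b_3 \end{bmatrix}, \quad
a \cdot b = d = a_1 b_1 + a_2 b_2 + a_3 b_3
\qquad \text{(Eqn. 3.25)}
$$

In physics, we multiply the projection of one vector onto the other vector by the length of the second vector. With $a = (u_a, v_a, w_a)$ and $b = (u_b, v_b, w_b)$:

$$
a \cdot b = u_a u_b + v_a v_b + w_a w_b = |a|\,|b| \cos\theta
$$

where $\theta$ is the angle between a and b, and

$$
|a| = \sqrt{u_a^2 + v_a^2 + w_a^2} = \text{length of } a
$$

If a and b are orthogonal to each other, then $\cos\theta = 0$ and $a \cdot b = 0$.
If a and b are parallel to each other, then $\cos\theta = 1$ and $a \cdot b = |a|\,|b|$, the product of the lengths of the vectors.

Squaring a vector is simply taking the dot product of the vector and its transpose:
For a row vector: $a^2 = a \cdot a^T$
For a column vector: $a^2 = a^T \cdot a$

Matrix Multiplication

We can extend the idea of the dot product to matrices.
Requirement: the first matrix must have as many columns as the second matrix has rows.
Characteristics: the resulting matrix has the same number of rows as the first matrix and the same number of columns as the second.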
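Notation: C(m,n) = A(m,p) • B(p,n)

The elements of C are:

$$
c_{ij} = \sum_{k=1}^{p} a_{ik} b_{kj}
$$

In Matlab, matrix multiplication is: C = A*B

For example, with a 3×5 matrix A and a 5×3 matrix B:

$$
\begin{bmatrix} c_{11} & c_{12} & c_{13} \\ c_{21} & c_{22} & c_{23} \\ c_{31} & c_{32} & c_{33} \end{bmatrix}
=
\begin{bmatrix} a_{11} & a_{12} & a_{13} & a_{14} & a_{15} \\ a_{21} & a_{22} & a_{23} & a_{24} & a_{25} \\ a_{31} & a_{32} & a_{33} & a_{34} & a_{35} \end{bmatrix}
\begin{bmatrix} b_{11} & b_{12} & b_{13} \\ b_{21} & b_{22} & b_{23} \\ b_{31} & b_{32} & b_{33} \\ b_{41} & b_{42} & b_{43} \\ b_{51} & b_{52} & b_{53} \end{bmatrix}
$$

$$
c_{32} = a_{31} b_{12} + a_{32} b_{22} + a_{33} b_{32} + a_{34} b_{42} + a_{35} b_{52}
$$

[Figure: block diagram of C(m,n) = A(m,p) • B(p,n) with m = 5, p = 3, n = 4. A 5×3 matrix A times a 3×4 matrix B gives a 5×4 matrix C, whose elements are $c_{ij} = \sum_{k=1}^{p} a_{ik} b_{kj}$.]

The order of multiplication is important:
NOT commutative: A • B ≠ B • A
Associative: (A • B) • C = A • (B • C)

In the diagram above, A is of order (5,3) and B is of order (3,4), so B • A is not even possible. If the matrices are square and of the same order, or if $A^T$ has the same order as B (equivalently, $B^T$ the same order as A), then both products, A • B and B • A, can be formed.

The transpose of a matrix product is equal to the product of the transposes of the matrices in reverse order:

$$
(A \cdot B \cdot C)^T = C^T \cdot B^T \cdot A^T
$$

Multiplication by the identity matrix (Matlab: eye(p,p)) leaves the original matrix unchanged, just as multiplying any regular number by "1" leaves that number unchanged. The identity must have the order that makes the product possible:

$$
A(n,p) \cdot I(p,p) = I(n,n) \cdot A(n,p) = A
$$

For example:

$$
\begin{bmatrix} 1 & 2 & 3 & 4 & 5 \\ 6 & 7 & 8 & 9 & 10 \\ 11 & 12 & 13 & 14 & 15 \end{bmatrix}
=
\begin{bmatrix} 1 & 2 & 3 & 4 & 5 \\ 6 & 7 & 8 & 9 & 10 \\ 11 & 12 & 13 & 14 & 15 \end{bmatrix}
\begin{bmatrix} 1 & 0 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 & 0 \\ 0 & 0 & 1 & 0 & 0 \\ 0 & 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 0 & 1 \end{bmatrix}
$$

Scaling: We can scale a coordinate system by multiplying by a diagonal matrix of scale weights:

$$
C = D \cdot A =
\begin{bmatrix} d_{11} & 0 & 0 \\ 0 & d_{22} & 0 \\ 0 & 0 & d_{33} \end{bmatrix}
\begin{bmatrix} a_{11} & a_{12} & a_{13} & a_{14} & a_{15} \\ a_{21} & a_{22} & a_{23} & a_{24} & a_{25} \\ a_{31} & a_{32} & a_{33} & a_{34} & a_{35} \end{bmatrix}
=
\begin{bmatrix} a_{11}d_{11} & a_{12}d_{11} & a_{13}d_{11} & a_{14}d_{11} & a_{15}d_{11} \\ a_{21}d_{22} & a_{22}d_{22} & a_{23}d_{22} & a_{24}d_{22} & a_{25}d_{22} \\ a_{31}d_{33} & a_{32}d_{33} & a_{33}d_{33} & a_{34}d_{33} & a_{35}d_{33} \end{bmatrix}
$$

Here we pre-multiplied by the scaling matrix, so every element of a given row is multiplied by the same scaling factor.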
If we post-multiply instead, then every element of a given column is multiplied by the same scaling factor:

$$
C = A \cdot D =
\begin{bmatrix} a_{11} & a_{12} & a_{13} & a_{14} & a_{15} \\ a_{21} & a_{22} & a_{23} & a_{24} & a_{25} \\ a_{31} & a_{32} & a_{33} & a_{34} & a_{35} \end{bmatrix}
\begin{bmatrix} d_{11} & 0 & 0 & 0 & 0 \\ 0 & d_{22} & 0 & 0 & 0 \\ 0 & 0 & d_{33} & 0 & 0 \\ 0 & 0 & 0 & d_{44} & 0 \\ 0 & 0 & 0 & 0 & d_{55} \end{bmatrix}
=
\begin{bmatrix} a_{11}d_{11} & a_{12}d_{22} & a_{13}d_{33} & a_{14}d_{44} & a_{15}d_{55} \\ a_{21}d_{11} & a_{22}d_{22} & a_{23}d_{33} & a_{24}d_{44} & a_{25}d_{55} \\ a_{31}d_{11} & a_{32}d_{22} & a_{33}d_{33} & a_{34}d_{44} & a_{35}d_{55} \end{bmatrix}
$$

Note that this time we had to use a diagonal matrix of order 5×5.

Efficiency considerations: When dealing with large matrices, it is possible to save a considerable amount of calculation by being careful about how the multiplications are arranged. While the result is mathematically the same, reducing the number of calculations reduces execution time and can improve precision.

Note that while (A•B)•C = A•(B•C), these two expressions can require very different numbers of multiplications.

D(m,n) = A(m,p) • B(p,n) has m*n*p multiplications
E(m,q) = D(m,n) • C(n,q) has m*n*q multiplications
So E = (A•B)•C requires m*n*(p+q) multiplications.

Similarly,
F(p,q) = B(p,n) • C(n,q) has n*p*q multiplications
E(m,q) = A(m,p) • F(p,q) has m*p*q multiplications
So E = A•(B•C) requires p*q*(m+n) multiplications.

If p*q >> m*n, then A•(B•C) will require far more multiplications than (A•B)•C. For example, say m=5, n=2, p=25, and q=100. Then A•(B•C) requires 25*100*(5+2) = 17,500 multiplications, while (A•B)•C requires 5*2*(25+100) = 1,250. Since execution time and "digital noise" (round-off error) both increase with every multiplication, it makes sense to choose the ordering with fewer multiplications.

In-class exercises

1) We have three vectors A, B, and C, each with one end at the origin and the other at (xi, yi, zi): A(x,y,z) = (22,7,100), B = (-32,1,48), and C = (3,-11,33). Using Matlab matrices, transform the vectors into another coordinate system where r = 3x, s = 5y, and t = 2.5z.
We set up a matrix with the x-values in the first row, y in the second, and z in the third, so that each column holds one vector:

    >> A=[22 -32 3;7 1 -11;100 48 33]
    A =
        22   -32     3
         7     1   -11
       100    48    33

Now set up a diagonal matrix containing the transformations:

    >> D=[3 0 0;0 5 0; 0 0 2.5]
    D =
        3.0      0      0
          0    5.0      0
          0      0    2.5

Now multiply:

    >> D*A
    ans =
        66.0   -96.0     9.0
        35.0     5.0   -55.0
       250.0   120.0    82.5

This is a simple transformation; others, like changing from rectangular to spherical coordinates, use more terms in the transformation matrix.

DETERMINANT

A property of a matrix that is very useful in the solution of simultaneous equations.
Requirement: square matrices only
Notation: det A, |A|, or ||A||
Calculation:

$$
|A| = \begin{vmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{vmatrix}
= a_{11}a_{22}a_{33} + a_{12}a_{23}a_{31} + a_{13}a_{32}a_{21} - a_{11}a_{32}a_{23} - a_{12}a_{21}a_{33} - a_{13}a_{22}a_{31}
$$

As the matrix size grows, Matlab comes in handy: det(A).

Determinant characteristics:
• Switching two rows or two columns changes the sign.
• Scalars can be factored out of rows and columns.
• Multiplication of a row or column by a scalar multiplies the determinant by that scalar.
• The determinant of a diagonal matrix equals the product of its diagonal elements.

Consider the determinant of a 3x3 matrix. It can be rewritten as an expansion along the first row:

$$
|A| = \begin{vmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{vmatrix}
= a_{11} \begin{vmatrix} a_{22} & a_{23} \\ a_{32} & a_{33} \end{vmatrix}
- a_{12} \begin{vmatrix} a_{21} & a_{23} \\ a_{31} & a_{33} \end{vmatrix}
+ a_{13} \begin{vmatrix} a_{21} & a_{22} \\ a_{31} & a_{32} \end{vmatrix}
$$

And the determinant can be written:

$$
|A| = a_{11}(a_{22}a_{33} - a_{23}a_{32}) - a_{12}(a_{21}a_{33} - a_{23}a_{31}) + a_{13}(a_{21}a_{32} - a_{22}a_{31})
$$

A singular matrix is a square matrix with determinant equal to 0. This happens when
1) any row or column is entirely zero, or
2) any row or column is a linear combination of the other rows or columns.

The degree of clustering about the principal diagonal is a property of a matrix that affects the determinant. If clustering is high, then the determinant tends to be larger.

The rank of a matrix is the number of linearly independent vectors (rows or columns) that it contains. The rank of a product of two matrices must be less than or equal to the smaller of the ranks of the two matrices being multiplied.
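As a minimal sketch, the determinant rules and the rank of a singular matrix described above can be checked numerically in Matlab. The matrices `A` and `S` below are made-up illustrations, not taken from the lecture; `det` and `rank` are standard Matlab functions.

```matlab
% Hypothetical 3x3 example matrix (an assumption, not from the lecture).
A = [2 0 1; 1 3 2; 1 1 4];

% Expansion along the first row, following the 3x3 formula in the text.
d_cof = A(1,1)*(A(2,2)*A(3,3) - A(2,3)*A(3,2)) ...
      - A(1,2)*(A(2,1)*A(3,3) - A(2,3)*A(3,1)) ...
      + A(1,3)*(A(2,1)*A(3,2) - A(2,2)*A(3,1));

% Built-in determinant, for comparison with the hand expansion.
d_builtin = det(A);

% Switching the first two rows should change the sign of the determinant.
d_swapped = det(A([2 1 3], :));

% Hypothetical singular matrix: the third row is the sum of the first two.
S = [1 2 3; 4 5 6; 5 7 9];
d_singular = det(S);     % zero up to round-off
r_singular = rank(S);    % only two linearly independent rows
```

Because `det` works in floating point, the determinant of a singular matrix may come out as a tiny non-zero number rather than exactly 0, so it is safer to compare against a small tolerance than to test for equality.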
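Linear independence here means that the two vectors being considered are NOT scalar multiples of each other.

Matrix division (Matrix inverse)

In normal math, we can easily solve the equation below for c:

$$
y = cx, \qquad c = y/x = y \cdot x^{-1}, \qquad \text{where } x \cdot x^{-1} = 1
$$

We can generate inverse matrices to do the same operation in matrix algebra. In matrix algebra the inverse matrix is $A^{-1}$, where $A A^{-1} = I$, the identity matrix. The inverse of a matrix exists only if its determinant is non-zero.

The inverse of a matrix is easy to calculate for 2x2 matrices, but anything larger is difficult to even explain. For a 2x2 matrix:

$$
A^{-1} = \frac{1}{|A|} \begin{bmatrix} a_{22} & -a_{12} \\ -a_{21} & a_{11} \end{bmatrix}
\qquad \text{(Eqn. 3.63)}
$$

For example:

$$
A = \begin{bmatrix} 4 & 5 \\ 3 & -2 \end{bmatrix}, \qquad |A| = 4 \cdot (-2) - 5 \cdot 3 = -23
$$

$$
A^{-1} = -\frac{1}{23} \begin{bmatrix} -2 & -5 \\ -3 & 4 \end{bmatrix}
= \begin{bmatrix} 2/23 & 5/23 \\ 3/23 & -4/23 \end{bmatrix}
$$

To check:

$$
A \cdot A^{-1} = \begin{bmatrix} 4 & 5 \\ 3 & -2 \end{bmatrix} \begin{bmatrix} 2/23 & 5/23 \\ 3/23 & -4/23 \end{bmatrix}
= \begin{bmatrix} 4 \cdot 2/23 + 5 \cdot 3/23 & 4 \cdot 5/23 + 5 \cdot (-4/23) \\ 3 \cdot 2/23 - 2 \cdot 3/23 & 3 \cdot 5/23 + 2 \cdot 4/23 \end{bmatrix}
= \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}
$$

Using matrices to calculate normal scores, as in Chapter 2

We can use matrix notation to calculate the mean and z-values of vectors. An important concept is the unit vector, which consists of ones in a row (unit row vector) or column (unit column vector). Consider the data matrix A consisting of three columns of data samples, and the unit row vector $j_3^T$:

$$
A = \begin{bmatrix} 1 & 2 & 3 \\ 4 & 5 & 6 \\ 7 & 8 & 9 \end{bmatrix}, \qquad
j_3^T = \begin{bmatrix} 1 & 1 & 1 \end{bmatrix}
$$

We can calculate the mean of each column in A simply by pre-multiplying A by $j_3^T$ and dividing by 3. In general, for a matrix A with n rows and m columns:

$$
\bar{x}_c = \frac{1}{n} j_n^T A \ \text{ for the column means, and } \ \bar{x}_r = \frac{1}{m} A j_m \ \text{ for the row means}
$$

In our example:

$$
\bar{x}_c = \frac{1}{3} \begin{bmatrix} 1 & 1 & 1 \end{bmatrix} \begin{bmatrix} 1 & 2 & 3 \\ 4 & 5 & 6 \\ 7 & 8 & 9 \end{bmatrix}
= \frac{1}{3} \begin{bmatrix} 12 & 15 & 18 \end{bmatrix} = \begin{bmatrix} 4 & 5 & 6 \end{bmatrix}
$$

and

$$
\bar{x}_r = \frac{1}{3} \begin{bmatrix} 1 & 2 & 3 \\ 4 & 5 & 6 \\ 7 & 8 & 9 \end{bmatrix} \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix}
= \frac{1}{3} \begin{bmatrix} 6 \\ 15 \\ 24 \end{bmatrix} = \begin{bmatrix} 2 \\ 5 \\ 8 \end{bmatrix}
$$

We now have the mean values, but we want the normal scores (z-values):

$$
z_{ij} = \frac{a_{ij} - \bar{a}_j}{s_j}
$$

We do the subtraction in the numerator by:

$$
D = A - \frac{1}{n} J A
$$

where J is the unit matrix (all entries = 1). For the product JA to be defined when A has n rows, J must be of order n×n.

The standard deviation is not linear, and needs to be calculated separately. Its reciprocals are then entered into a diagonal matrix with one entry per column of A:

$$
S = \begin{bmatrix} 1/s_1 & 0 & \cdots & 0 \\ 0 & 1/s_2 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & 1/s_m \end{bmatrix}
$$

The normal scores are then:

$$
Z = D S = \left( A - \frac{1}{n} J A \right) S = \left( I - \frac{1}{n} J \right) A S
$$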
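The normal-score recipe above can be sketched in Matlab using the lecture's 3x3 data matrix. The variable names (`jn`, `jm`, `xc`, `xr`, `s`) are illustrative choices, and the use of Matlab's `std` with its default normalization (dividing by n-1) is an assumption, since the slides do not say which form of the standard deviation is intended.

```matlab
A = [1 2 3; 4 5 6; 7 8 9];   % data matrix from the lecture example
[n, m] = size(A);            % n samples (rows), m data columns

jn = ones(n, 1);             % unit column vector of length n
jm = ones(m, 1);             % unit column vector of length m

xc = (jn' * A) / n;          % column means: [4 5 6]
xr = (A * jm) / m;           % row means: [2; 5; 8]

J = ones(n, n);              % n-by-n matrix of ones
D = A - (J * A) / n;         % subtract each column's mean from its entries

s = std(A);                  % assumption: sample std of each column (n-1)
S = diag(1 ./ s);            % diagonal matrix of reciprocal std deviations
Z = D * S;                   % normal scores: [-1 -1 -1; 0 0 0; 1 1 1]
```

Every column of this A has mean-centered values -3, 0, 3 and a sample standard deviation of 3, which is why all three columns of Z come out identical. If the population form is wanted instead, `std(A, 1)` divides by n.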