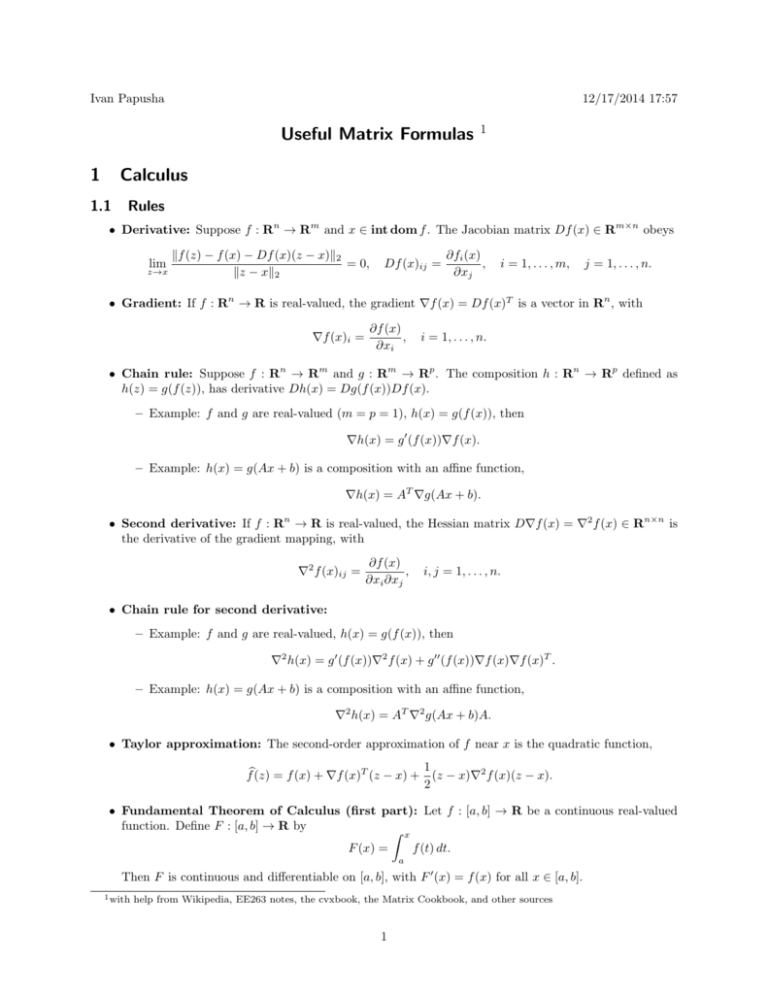


Ivan Papusha

12/17/2014 17:57

Useful Matrix Formulas¹

1 Calculus

1.1 Rules

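• Derivative: Suppose $f : \mathbb{R}^n \to \mathbb{R}^m$ and $x \in \operatorname{int} \operatorname{dom} f$. The Jacobian matrix $Df(x) \in \mathbb{R}^{m \times n}$ obeys

$$\lim_{z \to x} \frac{\|f(z) - f(x) - Df(x)(z - x)\|_2}{\|z - x\|_2} = 0, \qquad Df(x)_{ij} = \frac{\partial f_i(x)}{\partial x_j}, \quad i = 1, \dots, m, \quad j = 1, \dots, n.$$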

• Gradient: If $f : \mathbb{R}^n \to \mathbb{R}$ is real-valued, the gradient $\nabla f(x) = Df(x)^T$ is a vector in $\mathbb{R}^n$, with

$$\nabla f(x)_i = \frac{\partial f(x)}{\partial x_i}, \quad i = 1, \dots, n.$$

• Chain rule: Suppose $f : \mathbb{R}^n \to \mathbb{R}^m$ and $g : \mathbb{R}^m \to \mathbb{R}^p$. The composition $h : \mathbb{R}^n \to \mathbb{R}^p$ defined as $h(z) = g(f(z))$ has derivative $Dh(x) = Dg(f(x))\, Df(x)$.

– Example: $f$ and $g$ are real-valued ($m = p = 1$), $h(x) = g(f(x))$, then

$$\nabla h(x) = g'(f(x))\, \nabla f(x).$$

– Example: $h(x) = g(Ax + b)$ is a composition with an affine function,

$$\nabla h(x) = A^T \nabla g(Ax + b).$$

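• Second derivative: If $f : \mathbb{R}^n \to \mathbb{R}$ is real-valued, the Hessian matrix $D\nabla f(x) = \nabla^2 f(x) \in \mathbb{R}^{n \times n}$ is the derivative of the gradient mapping, with

$$\nabla^2 f(x)_{ij} = \frac{\partial^2 f(x)}{\partial x_i \, \partial x_j}, \quad i, j = 1, \dots, n.$$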

• Chain rule for second derivative:

– Example: $f$ and $g$ are real-valued, $h(x) = g(f(x))$, then

$$\nabla^2 h(x) = g'(f(x))\, \nabla^2 f(x) + g''(f(x))\, \nabla f(x) \nabla f(x)^T.$$

– Example: $h(x) = g(Ax + b)$ is a composition with an affine function,

$$\nabla^2 h(x) = A^T \nabla^2 g(Ax + b) A.$$

• Taylor approximation: The second-order approximation of $f$ near $x$ is the quadratic function

$$\hat f(z) = f(x) + \nabla f(x)^T (z - x) + \frac{1}{2} (z - x)^T \nabla^2 f(x) (z - x).$$

• Fundamental Theorem of Calculus (first part): Let $f : [a, b] \to \mathbb{R}$ be a continuous real-valued function. Define $F : [a, b] \to \mathbb{R}$ by

$$F(x) = \int_a^x f(t)\, dt.$$

Then $F$ is continuous on $[a, b]$ and differentiable on $(a, b)$, with $F'(x) = f(x)$ for all $x \in (a, b)$.

¹ With help from Wikipedia, EE263 notes, the cvxbook, the Matrix Cookbook, and other sources.

• Fundamental Theorem of Calculus (second part): Let $f$ and $g$ be functions such that $f(x) = g'(x)$ for all $x \in [a, b]$. If $f$ is Riemann integrable on $[a, b]$, then

$$\int_a^b f(x)\, dx = g(b) - g(a).$$

• Differentiation under the integral sign: Suppose we have

$$F(x) = \int_{a(x)}^{b(x)} f(x, t)\, dt;$$

then the derivative is

$$\frac{d}{dx} F(x) = f(x, b(x))\, b'(x) - f(x, a(x))\, a'(x) + \int_{a(x)}^{b(x)} \frac{\partial}{\partial x} f(x, t)\, dt.$$

1.2 Derivatives

• Linear functions:

$$\frac{\partial x^T a}{\partial x} = \frac{\partial a^T x}{\partial x} = a$$

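• Quadratic functions:

$$\frac{\partial}{\partial x} (Ax + b)^T W (Cx + d) = A^T W (Cx + d) + C^T W^T (Ax + b)$$

$$\frac{\partial}{\partial x} (Ax + b)^T W (Ax + b) = \frac{\partial}{\partial x} \|Ax + b\|_W^2 = A^T (W + W^T)(Ax + b)$$

$$\frac{\partial}{\partial x} \|Ax + b\|_2^2 = 2A^T (Ax + b)$$

$$\frac{\partial}{\partial x} x^T A x = (A + A^T) x$$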

• Norms:

$$\frac{\partial}{\partial x} \|x + b\|_2 = \frac{x + b}{\|x + b\|_2} \qquad (x + b \neq 0)$$
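The gradient formulas above, together with the affine chain rule $\nabla h(x) = A^T \nabla g(Ax + b)$ from 1.1, are easy to sanity-check numerically. The following is a minimal sketch assuming NumPy; the random test matrices, the helper `numerical_gradient`, and the choice $g(u) = \sum_i e^{u_i}$ are illustrative assumptions, not part of the original notes. It compares each closed-form gradient with a central-difference approximation.

```python
import numpy as np


def numerical_gradient(func, x, eps=1e-6):
    """Central-difference approximation of the gradient of a scalar function."""
    grad = np.zeros_like(x)
    for i in range(x.size):
        e = np.zeros_like(x)
        e[i] = eps
        grad[i] = (func(x + e) - func(x - e)) / (2 * eps)
    return grad


rng = np.random.default_rng(0)
n, m = 3, 4
A, C = rng.standard_normal((m, n)), rng.standard_normal((m, n))
W = rng.standard_normal((m, m))   # deliberately non-symmetric
Q = rng.standard_normal((n, n))   # plays the role of A in x^T A x
b, d = rng.standard_normal(m), rng.standard_normal(m)
c = rng.standard_normal(n)        # plays the role of b in ||x + b||_2
x = rng.standard_normal(n)

# name -> (function of z, claimed gradient evaluated at x)
checks = {
    "bilinear": (lambda z: (A @ z + b) @ W @ (C @ z + d),
                 A.T @ W @ (C @ x + d) + C.T @ W.T @ (A @ x + b)),
    "weighted norm": (lambda z: (A @ z + b) @ W @ (A @ z + b),
                      A.T @ (W + W.T) @ (A @ x + b)),
    "squared 2-norm": (lambda z: np.sum((A @ z + b) ** 2),
                       2 * A.T @ (A @ x + b)),
    "quadratic form": (lambda z: z @ Q @ z, (Q + Q.T) @ x),
    "2-norm": (lambda z: np.linalg.norm(z + c),
               (x + c) / np.linalg.norm(x + c)),
    # Affine chain rule with g(u) = sum(exp(u)), so grad g(u) = exp(u).
    "affine chain rule": (lambda z: np.sum(np.exp(A @ z + b)),
                          A.T @ np.exp(A @ x + b)),
}

max_errors = {name: np.max(np.abs(numerical_gradient(f, x) - grad))
              for name, (f, grad) in checks.items()}
print(max_errors)
```

Central differences are exact for quadratics up to rounding, so for the first four entries a discrepancy much larger than about $10^{-6}$ would point to a wrong formula (for example, dropping the $W^T$ term when $W$ is not symmetric).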

1.3 Inverses

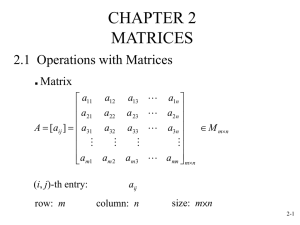

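• Inverse of a 2 × 2 matrix:

$$\begin{bmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{bmatrix}^{-1} = \frac{1}{\det(A)} \begin{bmatrix} a_{22} & -a_{12} \\ -a_{21} & a_{11} \end{bmatrix},$$

where $\det(A) = a_{11} a_{22} - a_{12} a_{21}$.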

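• Inverse of a 3 × 3 matrix:

$$\begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{bmatrix}^{-1} = \frac{1}{\det(A)} \begin{bmatrix} -a_{23} a_{32} + a_{22} a_{33} & a_{13} a_{32} - a_{12} a_{33} & -a_{13} a_{22} + a_{12} a_{23} \\ a_{23} a_{31} - a_{21} a_{33} & -a_{13} a_{31} + a_{11} a_{33} & a_{13} a_{21} - a_{11} a_{23} \\ -a_{22} a_{31} + a_{21} a_{32} & a_{12} a_{31} - a_{11} a_{32} & -a_{12} a_{21} + a_{11} a_{22} \end{bmatrix},$$

where $\det(A) = a_{13}(-a_{22} a_{31} + a_{21} a_{32}) + a_{12}(a_{23} a_{31} - a_{21} a_{33}) + a_{11}(-a_{23} a_{32} + a_{22} a_{33})$.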
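The 2 × 2 and 3 × 3 formulas can be checked against a general-purpose inverse. A minimal sketch, assuming NumPy; `inverse_2x2`, `inverse_3x3`, and the test matrices are hypothetical names chosen for illustration:

```python
import numpy as np


def inverse_2x2(A):
    """Explicit 2x2 inverse: swap the diagonal, negate the off-diagonal, divide by det."""
    (a11, a12), (a21, a22) = A
    det = a11 * a22 - a12 * a21
    return np.array([[a22, -a12], [-a21, a11]]) / det


def inverse_3x3(A):
    """Explicit 3x3 inverse: adjugate (transposed cofactor matrix) divided by det."""
    (a11, a12, a13), (a21, a22, a23), (a31, a32, a33) = A
    adj = np.array([
        [-a23 * a32 + a22 * a33, a13 * a32 - a12 * a33, -a13 * a22 + a12 * a23],
        [a23 * a31 - a21 * a33, -a13 * a31 + a11 * a33, a13 * a21 - a11 * a23],
        [-a22 * a31 + a21 * a32, a12 * a31 - a11 * a32, -a12 * a21 + a11 * a22],
    ])
    det = (a13 * (-a22 * a31 + a21 * a32) + a12 * (a23 * a31 - a21 * a33)
           + a11 * (-a23 * a32 + a22 * a33))
    return adj / det


A2 = np.array([[4.0, 7.0], [2.0, 6.0]])
A3 = np.array([[2.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 4.0]])
print(inverse_2x2(A2))        # det = 10
print(inverse_3x3(A3) @ A3)   # should be (numerically) the identity
```

Beyond tiny fixed sizes, an LU-based routine such as `np.linalg.solve` is generally preferable to these cofactor formulas, which are mostly useful for symbolic work or hand-unrolled small kernels.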

2 Least squares and SVD

2.1 Singular value decomposition

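• Mappings: Every matrix $A \in \mathbb{R}^{m \times n}$ maps the unit ball in $\mathbb{R}^n$ to an ellipsoid in $\mathbb{R}^m$, i.e.,

$$S = \{x \in \mathbb{R}^n : \|x\| \le 1\} \mapsto AS = \{Ax : x \in S\}.$$

An ellipsoid induced by the matrix $B \in \mathbb{S}^n_{++}$ is given by the set $\{x \in \mathbb{R}^n : x^T B^{-1} x \le 1\}$, where the semi-axis lengths are the square roots $\sqrt{\lambda_i}$ of the eigenvalues of $B$ and the semi-axis directions are the unit eigenvectors $v_i$ of $B$.

(Figure: an ellipsoid in $\mathbb{R}^n$ with semi-axes $\sqrt{\lambda_1}\, v_1$ and $\sqrt{\lambda_2}\, v_2$.)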

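• SVD: $A = U \Sigma V^T = \sum_{i=1}^{r} \sigma_i u_i v_i^T$, where $r = \operatorname{Rank}(A)$. Equivalently, $A v_i = \sigma_i u_i$, where the columns $u_i$ of $U$ are the left singular vectors, the columns $v_i$ of $V$ are the right singular vectors, and $\sigma_1 \ge \sigma_2 \ge \cdots \ge 0$ are the singular values of $A$ (exactly $r$ of them are nonzero).

(Figure: the map $x \mapsto Ax$ sends the right singular vectors $v_1, v_2 \in \mathbb{R}^n$ to $\sigma_1 u_1, \sigma_2 u_2 \in \mathbb{R}^m$.)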

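• Computing the SVD:

$$\sigma_i = \sqrt{\lambda_i(A^T A)} \quad \text{or} \quad \sqrt{\lambda_i(A A^T)}, \qquad u_i = \text{eigenvectors of } A A^T, \qquad v_i = \text{eigenvectors of } A^T A.$$

The signs must be matched: for $\sigma_i > 0$, take $u_i = A v_i / \sigma_i$ rather than an independently computed eigenvector of $A A^T$.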

• Range and null space: If $r = \operatorname{Rank}(A)$, then

$\{u_1, \dots, u_r\}$ is an orthonormal basis for $\mathcal{R}(A)$.

$\{v_{r+1}, \dots, v_n\}$ is an orthonormal basis for $\mathcal{N}(A)$.

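• Testing for membership in span: $y_{\text{des}} \in \mathcal{R}(A)$ if and only if $\operatorname{Rank}[\,y_{\text{des}} \;\; A\,] = \operatorname{Rank}(A)$. Also, if $A = U \Sigma V^T$ is the SVD, then the component of $y_{\text{des}}$ in $\mathcal{R}(A)$ is $\sum_{i=1}^{r} u_i u_i^T y_{\text{des}}$, meaning

$$y_{\text{des}} \in \mathcal{R}(A) \iff z = y_{\text{des}} - \left( \sum_{i=1}^{r} u_i u_i^T \right) y_{\text{des}} = (I - \hat U \hat U^T) y_{\text{des}} = 0,$$

where $\hat U = [u_1 \; \cdots \; u_r]$.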
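The SVD facts in this section can be exercised on a small rank-deficient matrix. A minimal sketch assuming NumPy; the matrix, the tolerances, and the helpers `in_range` and `in_range_by_rank` are illustrative assumptions. It compares the singular values with $\sqrt{\lambda_i(A^T A)}$, extracts the range and null-space bases, and runs both membership tests:

```python
import numpy as np

rng = np.random.default_rng(1)
# A 4x3 matrix of rank 2, built as a product of 4x2 and 2x3 factors.
A = rng.standard_normal((4, 2)) @ rng.standard_normal((2, 3))

U, s, Vt = np.linalg.svd(A)          # full SVD: U is 4x4, Vt is 3x3
r = int(np.sum(s > 1e-10 * s[0]))    # numerical rank

# sigma_i = sqrt(lambda_i(A^T A)); eigvalsh returns ascending order, so reverse.
eigvals = np.linalg.eigvalsh(A.T @ A)[::-1]
sigma_from_eig = np.sqrt(np.clip(eigvals, 0.0, None))

U_hat = U[:, :r]                     # {u_1, ..., u_r}: orthonormal basis for R(A)
null_basis = Vt[r:].T                # {v_{r+1}, ..., v_n}: orthonormal basis for N(A)


def in_range(y, tol=1e-10):
    """Projection test: y is in R(A) iff z = (I - U_hat U_hat^T) y is zero."""
    z = y - U_hat @ (U_hat.T @ y)
    return bool(np.linalg.norm(z) <= tol * max(1.0, np.linalg.norm(y)))


def in_range_by_rank(y, tol=1e-8):
    """Rank test: y is in R(A) iff Rank([y A]) == Rank(A)."""
    def rank(M):
        return np.linalg.matrix_rank(M, tol=tol)
    return bool(rank(np.column_stack([y, A])) == rank(A))


y_in = A @ np.array([1.0, -1.0, 2.0])   # in R(A) by construction
y_out = rng.standard_normal(4)          # almost surely outside the 2-D subspace R(A)
print(r, in_range(y_in), in_range(y_out))
```

Both membership tests need a tolerance in floating point. The rank test depends on the threshold passed to `matrix_rank`, which is one reason the projection residual $\|(I - \hat U \hat U^T) y_{\text{des}}\|$ is often the more convenient quantity to inspect.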

2.2 Linear problems

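• Pseudoinverse: Suppose $A \in \mathbb{R}^{m \times n}$ has (thin) singular value decomposition $A = \hat U \hat \Sigma \hat V^T$, where $\hat \Sigma$ contains only the $r$ nonzero singular values. The Moore-Penrose inverse $A^\dagger$ is given by $A^\dagger = \hat V \hat \Sigma^{-1} \hat U^T$. Furthermore, if $A$ is full rank,

$$A^\dagger = \lim_{\mu \to 0} (A^T A + \mu I)^{-1} A^T = \lim_{\epsilon \to 0} A^T (A A^T + \epsilon I)^{-1} = \begin{cases} (A^T A)^{-1} A^T & \text{if } A \text{ is skinny } (A^\dagger \text{ is a left inverse}), \\ A^T (A A^T)^{-1} & \text{if } A \text{ is fat } (A^\dagger \text{ is a right inverse}). \end{cases}$$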

• Least squares (estimation): $A$ is skinny and full rank ($m > n$, more equations than unknowns, $\mathcal{N}(A) = \{0\}$),

$$x_{\text{opt}} = \operatorname*{arg\,min}_{x \in \mathbb{R}^n} \|Ax - y\| = A^\dagger y = (A^T A)^{-1} A^T y.$$

• Minimum norm (control): $A$ is fat and full rank ($m < n$, more control inputs than outputs, $\mathcal{R}(A) = \mathbb{R}^m$),

$$x_{\text{opt}} = \operatorname*{arg\,min}_{x \in \mathbb{R}^n,\; Ax = y} \|x\| = A^\dagger y = A^T (A A^T)^{-1} y.$$

• Canonical multiobjective least squares: Wish to minimize competing objectives $J = J_1 + \mu J_2$, where

$$J_1 = \|Ax - y\|^2, \qquad J_2 = \|x\|^2.$$

Solution given by $x_{\text{opt}} = (A^T A + \mu I)^{-1} A^T y$.

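• General multiobjective least squares: Wish to minimize competing objectives $J = J_1 + \mu J_2$, where

$$J_1 = \|Ax - y\|^2, \qquad J_2 = \|Fx - g\|^2,$$

$$J_1 + \mu J_2 = \|Ax - y\|^2 + \mu \|Fx - g\|^2 = \left\| \begin{bmatrix} A \\ \sqrt{\mu} F \end{bmatrix} x - \begin{bmatrix} y \\ \sqrt{\mu} g \end{bmatrix} \right\|^2.$$

Solution given by $x_{\text{opt}} = (A^T A + \mu F^T F)^{-1} (A^T y + \mu F^T g)$.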

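• Positive semidefinite cone: a symmetric matrix is positive semidefinite if and only if all its principal minors (determinants of principal submatrices, i.e., those keeping the same set of row and column indices) are nonnegative, e.g.,

$$\begin{bmatrix} x_1 & x_2 & x_3 \\ x_2 & x_4 & x_5 \\ x_3 & x_5 & x_6 \end{bmatrix} \succeq 0 \iff \begin{cases} x_1 \ge 0, \quad x_4 \ge 0, \quad x_6 \ge 0, \\ x_1 x_4 - x_2^2 \ge 0, \quad x_4 x_6 - x_5^2 \ge 0, \quad x_1 x_6 - x_3^2 \ge 0, \\ x_1 x_4 x_6 + 2 x_2 x_3 x_5 - x_1 x_5^2 - x_6 x_2^2 - x_4 x_3^2 \ge 0. \end{cases}$$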
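The formulas of this section can be cross-checked against each other on random data. A minimal sketch assuming NumPy; the dimensions, `mu`, the random matrices, and the helpers `pinv_svd` and `psd_by_principal_minors` are illustrative assumptions. It builds $A^\dagger$ from the thin SVD, compares least-squares, minimum-norm, and multiobjective solutions with their closed forms, and applies the principal-minor test:

```python
import itertools

import numpy as np


def pinv_svd(A, tol=1e-12):
    """Moore-Penrose inverse V_hat Sigma_hat^{-1} U_hat^T from the thin SVD,
    keeping only the nonzero singular values."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    r = int(np.sum(s > tol * s[0]))
    return Vt[:r].T @ np.diag(1.0 / s[:r]) @ U[:, :r].T


def psd_by_principal_minors(X, tol=1e-12):
    """X >= 0 iff every principal minor (all index subsets, not only leading ones) is >= 0."""
    n = X.shape[0]
    return all(np.linalg.det(X[np.ix_(idx, idx)]) >= -tol
               for k in range(1, n + 1)
               for idx in itertools.combinations(range(n), k))


rng = np.random.default_rng(2)
A_skinny = rng.standard_normal((6, 3))   # full column rank (almost surely)
A_fat = rng.standard_normal((2, 5))      # full row rank (almost surely)
y6, y2 = rng.standard_normal(6), rng.standard_normal(2)

# Least squares and minimum norm, solved with linear solves instead of explicit inverses.
x_ls = np.linalg.solve(A_skinny.T @ A_skinny, A_skinny.T @ y6)   # (A^T A)^{-1} A^T y
x_mn = A_fat.T @ np.linalg.solve(A_fat @ A_fat.T, y2)             # A^T (A A^T)^{-1} y
x_reg = np.linalg.solve(A_skinny.T @ A_skinny + 1e-10 * np.eye(3), A_skinny.T @ y6)

# General multiobjective least squares: closed form vs. one stacked problem.
F, g, mu = rng.standard_normal((2, 3)), rng.standard_normal(2), 0.5
x_closed = np.linalg.solve(A_skinny.T @ A_skinny + mu * F.T @ F,
                           A_skinny.T @ y6 + mu * F.T @ g)
x_stacked = np.linalg.lstsq(np.vstack([A_skinny, np.sqrt(mu) * F]),
                            np.concatenate([y6, np.sqrt(mu) * g]), rcond=None)[0]

# Leading minors of X_bad are 0, 0, 0, but the principal minor x4 = -1 is negative.
X_bad = np.diag([0.0, -1.0, 1.0])
X_good = np.array([[2.0, 1.0, 0.0], [1.0, 2.0, 1.0], [0.0, 1.0, 2.0]])
print(psd_by_principal_minors(X_bad), psd_by_principal_minors(X_good))
```

`X_bad` shows why all principal minors are needed: checking only the leading ones (Sylvester's criterion, which characterizes positive definiteness) would wrongly accept it. Note also that forming $A^T A$ squares the condition number of $A$; the SVD-based `np.linalg.lstsq` is the more robust choice for ill-conditioned problems.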

3 Matrix gymnastics

3.1 Block formulas

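• Completion of squares: For scalars $a, b, d, x, y \in \mathbb{R}$ with $a \neq 0$,

$$a x^2 + 2bxy + d y^2 = a \left( x + \frac{b}{a} y \right)^2 + \left( d - \frac{b^2}{a} \right) y^2.$$

For $A \in \mathbb{S}^n_{++}$, $D \in \mathbb{S}^m$, $B \in \mathbb{R}^{n \times m}$, $x \in \mathbb{R}^n$, $y \in \mathbb{R}^m$,

$$\begin{bmatrix} x \\ y \end{bmatrix}^T \begin{bmatrix} A & B \\ B^T & D \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} = x^T A x + 2 y^T B^T x + y^T D y = (x + A^{-1} B y)^T A (x + A^{-1} B y) + y^T (D - B^T A^{-1} B) y,$$

which gives a general formula for the minimization problem:

$$\min_x \begin{bmatrix} x \\ y \end{bmatrix}^T \begin{bmatrix} A & B \\ B^T & D \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} = y^T (D - B^T A^{-1} B) y, \qquad x_{\text{opt}} = \operatorname*{arg\,min}_x (\cdot) = -A^{-1} B y.$$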

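• Block LDU decomposition: since the above holds for all $x, y$,

$$\begin{bmatrix} A & B \\ B^T & D \end{bmatrix} = \begin{bmatrix} I & 0 \\ B^T A^{-1} & I \end{bmatrix} \begin{bmatrix} A & 0 \\ 0 & D - B^T A^{-1} B \end{bmatrix} \begin{bmatrix} I & A^{-1} B \\ 0 & I \end{bmatrix}.$$

• Asymmetric block LDU decomposition:

$$\begin{bmatrix} A & B \\ C & D \end{bmatrix} = \begin{bmatrix} I & 0 \\ C A^{-1} & I \end{bmatrix} \begin{bmatrix} A & 0 \\ 0 & D - C A^{-1} B \end{bmatrix} \begin{bmatrix} I & A^{-1} B \\ 0 & I \end{bmatrix}.$$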

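• Block matrix inverse: invert using the LDU decomposition (or complete the square to minimize over $y$):

$$\begin{bmatrix} A & B \\ C & D \end{bmatrix}^{-1} = \begin{bmatrix} A^{-1} + A^{-1} B S^{-1} C A^{-1} & -A^{-1} B S^{-1} \\ -S^{-1} C A^{-1} & S^{-1} \end{bmatrix} = \begin{bmatrix} T^{-1} & -T^{-1} B D^{-1} \\ -D^{-1} C T^{-1} & D^{-1} + D^{-1} C T^{-1} B D^{-1} \end{bmatrix},$$

where $S = D - C A^{-1} B$ (Schur complement) and $T = A - B D^{-1} C$.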

• Schur complement: If the Schur complement of a block matrix is $S = D - C A^{-1} B$, then

$$\det \begin{bmatrix} A & B \\ C & D \end{bmatrix} = \det A \, \det S.$$

• Matrix inversion lemma: equating block inverses,

$$(A - B D^{-1} C)^{-1} = A^{-1} + A^{-1} B (D - C A^{-1} B)^{-1} C A^{-1}.$$

• Rank-one update: special case of the matrix inversion lemma with $B = u$, $D = -1$, $C = v^T$,

$$(A + u v^T)^{-1} = A^{-1} - \frac{A^{-1} u v^T A^{-1}}{1 + v^T A^{-1} u}.$$

• Matrix determinant lemma: special case with a matrix whose Schur complement is $1 + v^T A^{-1} u$,

$$\det(A + u v^T) = (1 + v^T A^{-1} u) \det(A).$$

• More gymnastics:

$$A (I + A)^{-1} = I - (I + A)^{-1}$$

$$(I + AB)^{-1} = I - A (I + BA)^{-1} B$$

$$A (I + BA)^{-1} = (I + AB)^{-1} A \quad \text{(push-through)}$$
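All of the block identities above can be verified numerically in a few lines. A minimal sketch assuming NumPy: the dimensions, the random blocks (shifted by multiples of the identity so the needed inverses exist), and the names `quad`, `P`, `R`, `Sq` are illustrative assumptions, with `P` and `R` standing in for the rectangular $A$ and $B$ of the push-through identities.

```python
import numpy as np

rng = np.random.default_rng(3)
n, m = 3, 2
inv, det = np.linalg.inv, np.linalg.det
I_n, I_m, Z = np.eye(n), np.eye(m), np.zeros((n, m))

# Completion of squares: A in S^n_{++}, D in S^m, B in R^{n x m}.
G = rng.standard_normal((n, n))
A = G @ G.T + n * I_n
B = rng.standard_normal((n, m))
D = rng.standard_normal((m, m))
D = D + D.T
K = np.block([[A, B], [B.T, D]])


def quad(x, y):
    """Quadratic form [x; y]^T K [x; y]."""
    v = np.concatenate([x, y])
    return v @ K @ v


x, y = rng.standard_normal(n), rng.standard_normal(m)
S_sym = D - B.T @ inv(A) @ B          # D - B^T A^{-1} B
shift = x + inv(A) @ B @ y            # x + A^{-1} B y
x_opt = -inv(A) @ B @ y               # minimizer over x for fixed y

# Asymmetric LDU, block inverse, determinant, and inversion lemma (C != B^T).
C = rng.standard_normal((m, n))
D2 = rng.standard_normal((m, m)) + 3 * I_m
M = np.block([[A, B], [C, D2]])
S = D2 - C @ inv(A) @ B               # Schur complement of A
T = A - B @ inv(D2) @ C               # Schur complement of D2
L = np.block([[I_n, Z], [C @ inv(A), I_m]])
Dblk = np.block([[A, Z], [Z.T, S]])
U = np.block([[I_n, inv(A) @ B], [Z.T, I_m]])
M_inv_S = np.block([[inv(A) + inv(A) @ B @ inv(S) @ C @ inv(A), -inv(A) @ B @ inv(S)],
                    [-inv(S) @ C @ inv(A), inv(S)]])
M_inv_T = np.block([[inv(T), -inv(T) @ B @ inv(D2)],
                    [-inv(D2) @ C @ inv(T), inv(D2) + inv(D2) @ C @ inv(T) @ B @ inv(D2)]])

# Rank-one update (Sherman-Morrison) and matrix determinant lemma.
u, v = rng.standard_normal(n), rng.standard_normal(n)
denom = 1.0 + v @ inv(A) @ u
A_uv_inv = inv(A) - np.outer(inv(A) @ u, v @ inv(A)) / denom

# "More gymnastics": P is n x m, R is m x n, Sq is square (illustrative names).
P, R = 0.3 * rng.standard_normal((n, m)), 0.3 * rng.standard_normal((m, n))
Sq = 0.3 * rng.standard_normal((n, n))
```

The practical appeal of the rank-one update is cost: given $A^{-1}$, forming $(A + uv^T)^{-1}$ this way takes $O(n^2)$ operations instead of the $O(n^3)$ needed to invert from scratch.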

3.2 Schur complements

$X \in \mathbb{S}^n$ is partitioned as

$$X = \begin{bmatrix} A & B \\ B^T & C \end{bmatrix}, \qquad S = C - B^T A^{-1} B,$$

where $S$ is the Schur complement of $A$ in $X$.

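• $X \succ 0$ if and only if $A \succ 0$ and $S \succ 0$.

• If $A \succ 0$, then $X \succeq 0$ if and only if $S \succeq 0$.

In addition, since the condition $Bv \in \mathcal{R}(A)$ is the same as $(I - A A^\dagger) B v = 0$,

• $X \succeq 0$ if and only if $A \succeq 0$, $B^T (I - A A^\dagger) = 0$, and $C - B^T A^\dagger B \succeq 0$.

• $X \succeq 0$ if and only if $A \succeq 0$, $(I - A A^\dagger) B = 0$, and $C - B^T A^\dagger B \succeq 0$.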
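The pseudoinverse conditions matter when $A$ is singular, so that $S = C - B^T A^{-1} B$ is not even defined. A minimal sketch assuming NumPy; the 3 × 3 example and the helpers `is_psd` and `psd_via_schur` are illustrative assumptions. It checks the second pseudoinverse criterion against a direct eigenvalue test:

```python
import numpy as np


def is_psd(M, tol=1e-10):
    """Eigenvalue test for positive semidefiniteness of a symmetric matrix."""
    return bool(np.all(np.linalg.eigvalsh(M) >= -tol))


def psd_via_schur(A, B, C, tol=1e-10):
    """X = [[A, B], [B^T, C]] >= 0 iff A >= 0, (I - A A^+) B = 0, and C - B^T A^+ B >= 0."""
    A_pinv = np.linalg.pinv(A)
    range_ok = np.allclose((np.eye(A.shape[0]) - A @ A_pinv) @ B, 0.0, atol=tol)
    return is_psd(A, tol) and bool(range_ok) and is_psd(C - B.T @ A_pinv @ B, tol)


A = np.array([[1.0, 0.0], [0.0, 0.0]])   # PSD but singular, so A^{-1} does not exist
C = np.array([[2.0]])
B_in = np.array([[1.0], [0.0]])           # column lies in R(A)
B_out = np.array([[0.0], [1.0]])          # column is not in R(A)

for B in (B_in, B_out):
    X = np.block([[A, B], [B.T, C]])
    print(psd_via_schur(A, B, C), is_psd(X))
```

For `B_in` both tests report `True`; for `B_out` both report `False`, because the column of `B_out` is not in $\mathcal{R}(A)$ even though $C - B^T A^\dagger B = 2 \succeq 0$ on its own.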

3.3 S-procedure

Let $f_i(x) = x^T P_i x + 2 q_i^T x + r_i$ be quadratic functions of the variable $x \in \mathbb{R}^n$ with $P_i = P_i^T$ for all $i = 0, \dots, p$.

• Nonstrict inequalities: if there exist scalars $\tau_1 \ge 0, \dots, \tau_p \ge 0$ such that for all $x$,

$$f_0(x) - \sum_{i=1}^{p} \tau_i f_i(x) \ge 0,$$

then

$$f_0(x) \ge 0 \quad \text{for all } x \text{ such that } f_i(x) \ge 0, \quad i = 1, \dots, p.$$

In addition, the converse holds

– if the functions $f_i$ are affine (Farkas lemma), or

– if $p = 1$ and there is some $x_0$ such that $f_1(x_0) > 0$.

• Strict inequalities: if there exist scalars $\tau_1 \ge 0, \dots, \tau_p \ge 0$ such that

$$P_0 - \sum_{i=1}^{p} \tau_i P_i \succ 0,$$

then

$$x^T P_0 x > 0 \quad \text{for all } x \neq 0 \text{ such that } x^T P_i x \ge 0, \quad i = 1, \dots, p.$$

In addition, the converse holds

– if $p = 1$ and there is some $x_0$ such that $x_0^T P_1 x_0 > 0$.
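For $p = 1$, searching for a certificate $\tau$ reduces to a small matrix inequality, because a quadratic $x^T P x + 2 q^T x + r$ is nonnegative for all $x$ exactly when $\begin{bmatrix} P & q \\ q^T & r \end{bmatrix} \succeq 0$. The following minimal sketch assumes NumPy and replaces a proper semidefinite-programming solver with a crude grid search over $\tau$; the functions $f_0$, $f_1$ and the helpers `quad_matrix` and `is_psd` are illustrative assumptions.

```python
import numpy as np


def quad_matrix(P, q, r):
    """f(x) = x^T P x + 2 q^T x + r is >= 0 for all x iff [[P, q], [q^T, r]] is PSD."""
    return np.block([[P, q[:, None]], [q[None, :], np.array([[r]])]])


def is_psd(M, tol=1e-10):
    return bool(np.all(np.linalg.eigvalsh(M) >= -tol))


# f1(x) = 1 - ||x||^2 >= 0 is the unit disk; f0(x) = 3 - x_1^2 - 2 x_2^2.
P1, q1, r1 = -np.eye(2), np.zeros(2), 1.0
P0, q0, r0 = -np.diag([1.0, 2.0]), np.zeros(2), 3.0

# Grid search over tau >= 0 for a certificate: f0 - tau * f1 >= 0 for all x.
certificates = [tau for tau in np.linspace(0.0, 5.0, 501)
                if is_psd(quad_matrix(P0 - tau * P1, q0 - tau * q1, r0 - tau * r1))]
print(min(certificates), max(certificates))

# Sanity check of the conclusion on random points of the disk.
rng = np.random.default_rng(4)
pts = rng.uniform(-1.0, 1.0, size=(2000, 2))
pts = pts[np.sum(pts ** 2, axis=1) <= 1.0]          # keep points with f1(x) >= 0
f0_vals = 3.0 - pts[:, 0] ** 2 - 2.0 * pts[:, 1] ** 2
```

Here $f_0 - \tau f_1$ has matrix $\operatorname{diag}(\tau - 1, \tau - 2, 3 - \tau)$, so the certificates are exactly $\tau \in [2, 3]$, which is the interval the grid search recovers.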

4 Convex optimization

4.1 KKT conditions

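The primal problem is

$$\begin{array}{ll} \text{minimize} & f_0(x) \\ \text{subject to} & f_i(x) \le 0, \quad i = 1, \dots, m, \\ & h_i(x) = 0, \quad i = 1, \dots, p, \end{array}$$

where $f_i : \mathbb{R}^n \to \mathbb{R}$ are convex for all $i = 0, \dots, m$ and $h_i : \mathbb{R}^n \to \mathbb{R}$ are affine for all $i = 1, \dots, p$.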

• Stationarity: $\nabla f_0(x^\star) + \sum_{i=1}^{m} \lambda_i^\star \nabla f_i(x^\star) + \sum_{i=1}^{p} \nu_i^\star \nabla h_i(x^\star) = 0$

• Primal feasibility: $f_i(x^\star) \le 0$ for all $i = 1, \dots, m$, and $h_i(x^\star) = 0$ for all $i = 1, \dots, p$

• Dual feasibility: $\lambda_i^\star \ge 0$ for all $i = 1, \dots, m$

• Complementary slackness: $\lambda_i^\star f_i(x^\star) = 0$ for all $i = 1, \dots, m$
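The four KKT conditions can be checked on a problem with a known closed-form solution: projecting a point $c$ onto the halfspace $\{x : a^T x \le \beta\}$, that is, minimizing $f_0(x) = \|x - c\|^2$ subject to $f_1(x) = a^T x - \beta \le 0$. A minimal sketch assuming NumPy; the data and the helper `project_onto_halfspace` are illustrative assumptions.

```python
import numpy as np


def project_onto_halfspace(c, a, beta):
    """Solve  minimize ||x - c||^2  subject to  a^T x <= beta  in closed form.

    Returns the primal solution x and the multiplier lam of the single constraint."""
    violation = a @ c - beta
    if violation <= 0:
        return c.copy(), 0.0             # constraint inactive, so lam = 0
    t = violation / (a @ a)
    return c - t * a, 2.0 * t            # lam = 2t makes 2(x - c) + lam * a vanish


c = np.array([2.0, 1.0])
a = np.array([1.0, 1.0])
beta = 1.0
x, lam = project_onto_halfspace(c, a, beta)

f1 = a @ x - beta                        # inequality constraint value f1(x) = a^T x - beta
stationarity = 2.0 * (x - c) + lam * a   # grad f0(x) + lam * grad f1(x)
print(x, lam, stationarity, f1, lam * f1)
```

With $c = (2, 1)$, $a = (1, 1)$, $\beta = 1$ the constraint is active, giving $x^\star = (1, 0)$ and $\lambda^\star = 2$; stationarity, primal and dual feasibility, and complementary slackness all hold with zero residual.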

4.2 General vector composition rule

Define the composition

$$f(x) = h(g_1(x), g_2(x), \dots, g_k(x)),$$

where $h : \mathbb{R}^k \to \mathbb{R}$ is convex, and $g_i : \mathbb{R}^n \to \mathbb{R}$. Suppose that for each $i$, one of the following holds:

• $h$ is nondecreasing in the $i$th argument, and $g_i$ is convex

• $h$ is nonincreasing in the $i$th argument, and $g_i$ is concave

• $g_i$ is affine

Then the function $f$ is convex.

4.3 Duality

The domain of the primal problem is $\mathcal{D} = \bigcap_{i=0}^{m} \operatorname{dom} f_i \cap \bigcap_{i=1}^{p} \operatorname{dom} h_i$.

• Lagrangian: has $\operatorname{dom} L = \mathcal{D} \times \mathbb{R}^m \times \mathbb{R}^p$ and is given by

$$L(x, \lambda, \nu) = f_0(x) + \sum_{i=1}^{m} \lambda_i f_i(x) + \sum_{i=1}^{p} \nu_i h_i(x).$$

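• Dual function: $g(\lambda, \nu) = \inf_{x \in \mathcal{D}} L(x, \lambda, \nu)$. The dual optimization problem is

$$\begin{array}{ll} \text{maximize} & g(\lambda, \nu) \\ \text{subject to} & \lambda \succeq 0. \end{array}$$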

• Slater's condition: Suppose the primal problem is convex and the first $k$ inequality functions $f_1, \dots, f_k$ are affine. Then strong duality holds if there exists an $x \in \operatorname{relint} \mathcal{D}$ with

$$f_i(x) \le 0, \quad i = 1, \dots, k, \qquad f_i(x) < 0, \quad i = k + 1, \dots, m, \qquad h_i(x) = 0, \quad i = 1, \dots, p.$$

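Here, we define the relative interior and the affine hull of a set $C$ as

$$\operatorname{relint} C = \{x \in C \mid B(x, r) \cap \operatorname{aff} C \subseteq C \text{ for some } r > 0\},$$

$$\operatorname{aff} C = \{\theta_1 x_1 + \cdots + \theta_k x_k \mid x_1, \dots, x_k \in C,\ \theta_1 + \cdots + \theta_k = 1\},$$

where $B(x, r)$ is the ball of radius $r$ centered at $x$.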
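Weak and strong duality can be illustrated on a one-constraint problem, minimize $\|x - c\|^2$ subject to $a^T x \le \beta$, whose dual function has the closed form $g(\lambda) = \lambda (a^T c - \beta) - \lambda^2 \|a\|^2 / 4$. Slater's condition holds because the only constraint is affine and feasible, so the duality gap should be zero. A minimal sketch assuming NumPy, with a grid search over $\lambda \ge 0$ standing in for a real dual solver; the data and helper names are illustrative.

```python
import numpy as np

# Toy problem: minimize ||x - c||^2 subject to a^T x <= beta (one affine constraint).
c = np.array([2.0, 1.0])
a = np.array([1.0, 1.0])
beta = 1.0


def lagrangian(x, lam):
    return np.sum((x - c) ** 2) + lam * (a @ x - beta)


def dual_function(lam):
    # L(., lam) is a convex quadratic in x, minimized where 2(x - c) + lam * a = 0.
    return lagrangian(c - 0.5 * lam * a, lam)


lams = np.linspace(0.0, 5.0, 5001)            # lambda >= 0 (dual feasibility)
g_values = np.array([dual_function(lam) for lam in lams])
d_star, lam_star = g_values.max(), lams[g_values.argmax()]

# Primal optimum: the projection of c onto the halfspace is x* = (1, 0).
p_star = np.sum((np.array([1.0, 0.0]) - c) ** 2)
print(d_star, p_star, lam_star)
```

With $c = (2, 1)$, $a = (1, 1)$, $\beta = 1$ this gives $g(\lambda) = 2\lambda - \lambda^2 / 2$, maximized at $\lambda^\star = 2$ with $d^\star = 2 = p^\star$, while every other $\lambda \ge 0$ gives a lower bound below $p^\star$.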