Observations of an Accidental Computational Scientist


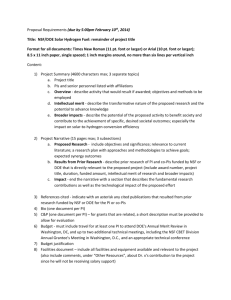

SIAM/NSF/DOE CSME Workshop, 25 March 2003
David Keyes
Department of Mathematics & Statistics, Old Dominion University
& Institute for Scientific Computing Research, Lawrence Livermore National Laboratory

Academic and lab backgrounds
- 74-78: B.S.E., Aerospace and Mechanical/Engineering Physics
- 78-84: M.S. & Ph.D., Applied Mathematics
- 84-85: Post-doc, Computer Science
- 86-93: Asst./Assoc. Prof., Mechanical Engineering
- 93-99: Assoc. Prof., Computer Science
- 86-02: ICASE, NASA Langley
- 99-03: Prof., Mathematics & Statistics
- 99- : ISCR, Lawrence Livermore
- 03- : Prof., Applied Physics & Applied Mathematics
- 03- : CDIC, Brookhaven

Computational Science & Engineering
A “multidiscipline” on the verge of full bloom
- Envisioned by von Neumann and others in the 1940s
- Undergirded by theory (numerical analysis) for the past fifty years
- Empowered by spectacular advances in computer architecture over the last twenty years
- Enabled by powerful programming paradigms in the last decade
- Adopted in industrial and government applications:
  - Boeing 777’s computational design, a renowned milestone
  - DOE NNSA’s “ASCI” (motivated by the CTBT)
  - DOE SC’s “SciDAC” (motivated by Kyoto, etc.)

Niche for computational science
- Has theoretical aspects (modeling)
- Has experimental aspects (simulation)
- Unifies theory and experiment by providing a common immersive environment for interacting with multiple data sets from different sources
- Provides “universal” tools, both hardware and software
  - Telescopes are for astronomers, microarray analyzers are for biologists, spectrometers are for chemists, and accelerators are for physicists, but computers are for everyone!
- Costs going down, capabilities going up every year

Simulation complements experimentation
(Diagram: scientific simulation at the center, serving where experiments are expensive, dangerous, difficult to instrument, or prohibited or impossible. The application areas are shown with personal examples Ex #1 to Ex #4.)
- Engineering: electromagnetics, aerodynamics (Ex #2)
- Physics: cosmology, radiation transport (Ex #3)
- Environment: global climate, wildland firespread (Ex #1)
- Energy: combustion, fusion (Ex #4)

Example #1: wildland firespread
Simulate fires at the wildland-urban interface, leading to strategies for planning preventative burns, fire control, and evacuation
- “It looks as if all of Colorado is burning” (Bill Owens, Governor)
- “About half of the U.S. is in altered fire regimes” (Ron Myers, Nature Conservancy)
Joint work between ODU, CMU, Rice, Sandia, and TRW

Example #1: wildland firespread, cont.
- Objective: Develop mathematical models for tracking the evolution of wildland fires, and the capability to fit the model to fires of different character (fuel density, moisture content, wind, topography, etc.)
- Accomplishment to date: Implemented firefront propagation with the level set method, using an empirical front advance function; working with firespread experts to “tune” the resulting model
- Significance: Wildland fires cost many lives and billions of dollars annually. Other fire models pursued at national labs are more detailed but too slow to be used in real time; one of our objectives is to offer practical tools to fire chiefs in the field

Example #2: aerodynamics
Simulate airflows over wings and streamlined bodies on highly resolved grids, leading to superior aerodynamic design
Joint work between ODU, Argonne, LLNL, and NASA-Langley

Example #2: aerodynamics, cont.
- Objective: Develop analysis and optimization capability for compressible and incompressible external aerodynamics
- Accomplishment to date: Developed highly parallel nonlinear implicit solvers (Newton-Krylov-Schwarz) for unstructured-grid CFD, implemented them in PETSc, and demonstrated them on a “workhorse” NASA code running on the ASCI machines (up to 6,144 processors)
- Significance: Wind tunnel tests of aerodynamic bodies are expensive and difficult to instrument; computational simulation and optimization (as for the Boeing 777) will greatly reduce the engineering risk of developing new fuel-efficient aircraft, cars, etc.

Example #3: radiation transport
Simulate “flux-limited diffusion” transport of radiative energy in inhomogeneous materials
Joint work between ODU, ICASE, and LLNL

Example #3: radiation transport, cont.
- Objective: Enhance the accuracy and reliability of analysis methods used in the simulation of radiation transport in real materials
- Accomplishment to date: Leveraged expertise and software (PETSc) developed for aerodynamics simulations in a related physical application domain, also governed by nonlinear PDEs discretized on unstructured grids, where such methods were less developed
- Significance: Under current stockpile stewardship policies, DOE must be able to reliably predict the performance of high-energy devices without full-scale physical experiments

Example #4: fusion energy
Simulate plasmas in tokamaks, leading to understanding of plasma instability and (ultimately) new energy sources
Joint work between ODU, Argonne, LLNL, and PPPL

Example #4: fusion energy, cont.
- Objective: Improve the efficiency, and therefore extend the predictive capabilities, of Princeton’s leading magnetic fusion energy code, M3D, to enable it to operate in regimes where practical sustained controlled fusion occurs
- Accomplishment to date: Augmented the implicit linear solver of the original code (taking up to 90% of execution time) with parallel algebraic multigrid. The new solvers are much faster and more robust, and should scale better to the finer mesh resolutions required for M3D
- Significance: An M3D-like code will be used in DOE’s Integrated Simulation and Optimization of Fusion Systems and in ITER collaborations, with the goal of delivering cheap, safe fusion energy devices by the early-to-mid 21st century

We lead the “TOPS” project
U.S. DOE has created the Terascale Optimal PDE Simulations (TOPS) project within the Scientific Discovery through Advanced Computing (SciDAC) initiative. TOPS is an “Integrated Software Infrastructure Center”: a 5-year, $17M project with nine partners.

Toolchain for PDE solvers in the TOPS* project
Design and implementation of “solvers”:
- Time integrators (with sensitivity analysis): $f(\dot{x}, x, t, p) = 0$
- Nonlinear solvers (with sensitivity analysis): $F(x, p) = 0$
- Constrained optimizers: $\min_u \phi(x, u)$ s.t. $F(x, u) = 0,\ u \ge 0$
- Linear solvers: $Ax = b$
- Eigensolvers: $Ax = \lambda Bx$
(Diagram: arrows indicating dependence link the optimizer, sensitivity analyzer, time integrator, nonlinear solver, eigensolver, and linear solver. Software integration and performance optimization span all of them.)
*Terascale Optimal PDE Simulations: www.tops-scidac.org

SciDAC apps and infrastructure
- 4 projects in high energy and nuclear physics
- 14 projects in biological and environmental research
- 17 projects in scientific software and network infrastructure
- 5 projects in fusion energy science
- 10 projects in basic energy sciences

Optimal solvers
- Convergence rate nearly independent of discretization parameters
  - Multilevel schemes for linear and nonlinear problems
  - Newton-like schemes for quadratic convergence of nonlinear problems
(Figures: time to solution (top) and iteration count (bottom) versus problem size. Problem size increases with the number of processors: 3, 12, 27, 48, 75. Curves are shown for ASM-GMRES, AMG-FGMRES, and AMG inner iterations, with a "scalable" reference line.)
AMG shows perfect iteration scaling, in contrast to ASM, but it still needs performance work to achieve temporal scaling on the CEMM fusion code, M3D. Even so, time is halved (or better) for large runs. All runs use 4K dofs per processor.

We have run on most ASCI platforms…
(Chart: ASCI platform roadmap for calendar years ’97 to ’06, with plan/develop/use phases at Sandia, Livermore, and Los Alamos. Capabilities rise from 1+ Tflop/s / 0.5 TB (Red) through 3+ Tflop/s / 1.5 TB (Blue), 10+ Tflop/s / 4 TB (White), 30+ Tflop/s / 10 TB, and 50+ Tflop/s / 25 TB to a 100+ Tflop/s / 30 TB Livermore capability machine.)
NNSA has a roadmap to reach 100 Tflop/s by 2006
www.llnl.gov/asci/platforms

…and now the SciDAC platforms
- Berkeley: IBM Power3+ SMP; 16 procs per node; 208 nodes; 24 Gflop/s per node; 5 Tflop/s (doubled in February to 10)
- Oak Ridge: IBM Power4 Regatta; 32 procs per node; 24 nodes; 166 Gflop/s per node; 4 Tflop/s (10 in 2003)

Computational Science at Old Dominion
- Launched in 1993 as “High Performance Computing”
- Keyes appointed ’93; Pothen early ’94
- Major projects:
  - NSF Grand, National, and Multidisciplinary Challenges (1995-1998) [w/ ANL, Boeing, Boulder, ND, NYU]
  - DoEd Graduate Assistantships in Areas of National Need (1995-2001)
  - DOE Accelerated Strategic Computing Initiative “Level 2” (1998-2001) [w/ ICASE]
  - DOE Scientific Discovery through Advanced Computing (2001-2006) [w/ ANL, Berkeley, Boulder, CMU, LBNL, LLNL, NYU, Tennessee]
  - NSF Information Technology Research (2001-2006) [w/ CMU, Rice, Sandia, TRW]

CS&E at ODU today
- Center for Computational Science at ODU established 8/2001
- New 80,000 sq ft building (for Math, CS, Aero, VMASC, CCS) opens 1/2004
- Finally getting local buy-in
- ODU’s small program has placed five PhDs at DOE labs in the past three years

Post-doctoral and student alumni
- Linda Stals, ANU
- Lois McInnes, ANL
- Satish Balay, ANL
- Dinesh Kaushik, ANL
- D. Karpeev, ANL
- David Hysom, LLNL
- Gary Kumfert, LLNL
- Florin Dobrian, ODU

<Begin> “pontification phase”

Five models that allow CS&E to prosper
- Laboratory institutes (hosted at a lab): ICASE, ISCR (more details to come)
- National institutes (hosted at a university): IMA, IPAM
- Interdisciplinary centers: ASCI Alliances, SciDAC ISICs, SCCM, TICAM, CAAM, …
- CS&E fellowship programs: CSGF, HPCF
- Multi-agency funding (cyclical to be sure, but sometimes collaborative): DOD, DOE, NASA, NIH, NSF, …

LLNL’s ISCR fosters collaborations with academe in computational science
- Serves as the lab’s point of contact for computational science interests
- Influences the external research community to pursue laboratory-related interests
- Manages LLNL’s ASCI Institute collaborations in computer science and computational mathematics
- Assists LLNL in technical workforce recruiting and training

ISCR’s philosophy: Science is borne by people
- Be “eyes and ears” for LLNL by staying abreast of advances in computer and computational science
- Be “hands and feet” for LLNL by carrying those advances into the laboratory
- Three principal means for packaging scientific ideas for transfer: papers, software, people
- People are the most effective!

ISCR brings visitors to LLNL through a variety of programs (FY 2002 data)
- Seminars & Visitors: 180 visits from 147 visitors; 66 ISCR seminars
- Summer Program: 43 grad students, 29 undergrads, 24 faculty
- Postdocs & Faculty: 9 postdoctoral researchers, 3 faculty-in-residence
- Workshops & Tutorials: 10 tutorial lectures, 6 technical workshops

ISCR is the largest of LLNL’s six institutes
- Founded in 1986
- Under current leadership since June 1999
- ISCR has grown with LLNL’s increasing reliance on simulation as a predictive science
(Chart: numbers of seminars, visitors, and students per year, FY 97 to FY 02, on a scale of 0 to 160.)

Our academic collaborators are drawn from all over
- University of California: Berkeley, Davis, Irvine, Los Angeles, San Diego, Santa Barbara, Santa Cruz
- ASCI ASAP-1 Centers: Caltech, Stanford University, University of Chicago, University of Illinois, University of Utah
- Major European Centers: University of Bonn, University of Heidelberg
- Other Universities: Carnegie Mellon, Florida State University, MIT, Ohio State University, Old Dominion University, RPI, Texas A&M University, University of Colorado, University of Kentucky, University of Minnesota, University of N. Carolina, University of Tennessee, University of Texas, University of Washington, Virginia Tech, and more!
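The “Optimal solvers” slide above claims that multilevel schemes give convergence rates nearly independent of discretization parameters. The Python below is a minimal sketch of that property. It is not TOPS, PETSc, or the M3D solver: it applies a textbook geometric multigrid V-cycle to the 1D model problem $-u'' = f$ with zero boundary values. The grid sizes, the weighted-Jacobi smoother with $\omega = 2/3$, full-weighting restriction, linear interpolation, and the random right-hand side are all illustrative assumptions. AMG builds the same kind of hierarchy from matrix entries alone, but this sketch uses fixed geometric grids.

```python
import math
import random


def apply_A(u, h):
    """Apply the finite-difference operator -u'' with zero Dirichlet boundaries."""
    n = len(u)
    out = [0.0] * n
    for i in range(n):
        left = u[i - 1] if i > 0 else 0.0
        right = u[i + 1] if i < n - 1 else 0.0
        out[i] = (2.0 * u[i] - left - right) / (h * h)
    return out


def residual(u, f, h):
    return [fi - ai for fi, ai in zip(f, apply_A(u, h))]


def smooth(u, f, h, sweeps, omega=2.0 / 3.0):
    """Weighted Jacobi sweeps; they damp the oscillatory part of the error."""
    for _ in range(sweeps):
        r = residual(u, f, h)
        # The diagonal of A is 2/h^2, so D^{-1} r = r h^2 / 2.
        u = [ui + omega * ri * h * h / 2.0 for ui, ri in zip(u, r)]
    return u


def restrict(r):
    """Full weighting: coarse point j sits on fine point 2j+1."""
    nc = (len(r) - 1) // 2
    return [0.25 * (r[2 * j] + 2.0 * r[2 * j + 1] + r[2 * j + 2]) for j in range(nc)]


def prolong(ec, n):
    """Linear interpolation of a coarse correction back to n fine points."""
    nc = len(ec)
    e = [0.0] * n
    for i in range(n):
        if i % 2 == 1:
            e[i] = ec[(i - 1) // 2]
        else:
            k = i // 2
            left = ec[k - 1] if k - 1 >= 0 else 0.0
            right = ec[k] if k < nc else 0.0
            e[i] = 0.5 * (left + right)
    return e


def v_cycle(u, f, h, nu1=2, nu2=2):
    n = len(u)
    if n == 1:
        # One interior point: solve (2/h^2) u = f exactly.
        return [f[0] * h * h / 2.0]
    u = smooth(u, f, h, nu1)                      # pre-smoothing
    rc = restrict(residual(u, f, h))              # move residual to coarse grid
    ec = v_cycle([0.0] * len(rc), rc, 2.0 * h, nu1, nu2)
    u = [ui + ei for ui, ei in zip(u, prolong(ec, n))]  # coarse-grid correction
    return smooth(u, f, h, nu2)                   # post-smoothing


def norm(v):
    return math.sqrt(sum(x * x for x in v))


def solve(n, tol=1e-8, max_cycles=50, seed=0):
    """Count V-cycles needed to cut the relative residual below tol (n = 2^k - 1)."""
    h = 1.0 / (n + 1)
    rng = random.Random(seed)
    f = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    u = [0.0] * n
    f_norm = norm(f)
    for cycle in range(1, max_cycles + 1):
        u = v_cycle(u, f, h)
        if norm(residual(u, f, h)) / f_norm < tol:
            return cycle
    return max_cycles


if __name__ == "__main__":
    for k in range(5, 11):
        n = 2 ** k - 1
        print(f"n = {n:5d}   V-cycles = {solve(n)}")
```

Each V-cycle costs work proportional to n. A cycle count that stays roughly flat as n grows from 31 to 1023 therefore means the total cost grows like O(n) for a fixed tolerance. This is the “scalable” behavior the slide contrasts with one-level ASM.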
Internships in Terascale Simulation Technology (ITST) tutorials
Students in residence hear from enthusiastic members of lab divisions besides their own mentor, including five authors* of recent computational science books, on a variety of computational science topics.
Lecturers: David Brown, Eric Cantu-Paz*, Alej Garcia*, Van Henson*, Chandrika Kamath, David Keyes, Alice Koniges*, Tanya Kostova, Gary Kumfert, John May*, Garry Rodrigue

ISCR pipelines people between the university and the laboratory
(Diagram: flows among Universities, the ISCR, and Lab programs.)
- Faculty visit the ISCR, bringing students
- Most faculty return to the university, with lab priorities
- Some students become lab employees
- Some students become faculty, with lab priorities
- A few faculty become lab employees

ISCR impact on DOE computational science hiring
- 178 ISCR summer students in the past five years (many repeaters)
- 51 have by now emerged from the academic pipeline
- 23 of these (~45%) are now working for the DOE: 15 at LLNL, 3 each at LANL and Sandia, 1 each at ANL and BNL
- 11 of these (~20%) are in their first academic appointment
  - In the US: Duke, Stanford, U California, U Minnesota, U Montana, U North Carolina, U Pennsylvania, U Utah, U Washington
  - Abroad: Swiss Federal Institute of Technology (ETH), University of Toronto

ISCR sponsors and conducts meetings on timely topics for lab missions
- Bay Area NA Day
- Common Component Architecture
- Copper Mountain Multigrid Conference
- DOE Computational Science Graduate Fellows
- Hybrid Particle-Mesh AMR Methods
- Mining Scientific Datasets
- Large-scale Nonlinear Problems
- Overset Grids & Solution Technology
- Programming ASCI White
- Sensitivity and Uncertainty Quantification

We hosted a “Power Programming” short course to prepare LLNL for ASCI White
- Steve White, IBM: ASCI White overview, POWER3 architecture, tuning for White
- Larry Carter, UCSD/NPACI: designing kernels and data structures for scientific applications, cache and TLB issues
- David Culler, UC Berkeley: understanding performance thresholds
- Clint Whaley, U Tennessee: coding for performance
- Bill Gropp, Argonne National Lab: MPI-1, parallel I/O, MPI/OpenMP tradeoffs
65 internal attendees over 3 days

We launched the Terascale Simulation Lecture Series to receptive audiences
- Fred Brooks, UNC
- Ingrid Daubechies, Princeton
- David Johnson, AT&T
- Peter Lax, NYU
- Michael Norman, UCSD
- Charlie Peskin, NYU
- Gil Strang, MIT
- Burton Smith, Cray
- Eugene Spafford, Purdue
- Andries Van Dam, Brown

<Continue> “pontification phase”

Concluding swipes
- A curricular challenge for CS&E programs
- Signs of the times for CS&E
  - “Red skies at morning” (“sailors take warning”)
  - “Red skies at night” (“sailors’ delight”)
- Opportunities in which CS&E will shine
- A word to the sponsors

A curricular challenge
- CS&E majors without a CS undergrad need to learn to compute!
- Prerequisite or co-requisite to becoming useful interns at a lab
- Suggest a “bootcamp” year-long course introducing:
  - C/C++ and object-oriented program design
  - Data structures for scientific computing
  - Message passing (e.g., MPI) and multithreaded (e.g., OpenMP) programming
  - Scripting (e.g., Python)
  - Linux clustering
  - Scientific and performance visualization tools
  - Profiling and debugging tools
- NYU’s sequence G22.1133/G22.1144 is an example for CS

“Red skies at morning”
- Difficult to get support for maintaining critical software infrastructure and “benchmarking” activities
- Difficult to get support for hardware that is designed with computational science and engineering in mind
- Difficult for pre-tenure faculty to find reward structures conducive to interdisciplinary efforts
- Unclear how stable the market for CS&E graduates is at the entrance to a 5-year pipeline
- The political necessity of creating new programs with each change of administration saps the time and energy of managers and the community

“Red skies at night”
- DOE’s SciDAC model being recognized and propagated
- NSF’s DMS budgets on a multi-year roll
- SIAM SIAG-CSE attracting members from outside of traditional SIAM departments
- CS&E programs beginning to exhibit “centripetal” potential in traditionally fragmented research universities, e.g., SCCM’s “Advice” program
- Computing at the large scale is weaning domain scientists from “Numerical Recipes” and MATLAB and creating a thirst for core enabling technologies (NA, CS, Viz, …)
- Cost effectiveness of computing, especially cluster computing, is putting a premium on graduate students who have CS&E skills

Opportunity: nanoscience modeling
- Jul 2002 report to DOE
- Proposes a $5M/year theory and modeling initiative to accompany the existing $50M/year experimental initiative in nanoscience
- Report lays out research in numerical algorithms and optimization methods on the critical path to progress in nanotechnology

Opportunity: integrated fusion modeling
- Dec 2002 report to DOE
- Currently DOE supports 52 codes in Fusion Energy Sciences
- US contribution to ITER will “major” in simulation
- Initiative proposes to use advanced computer science techniques and numerical algorithms to improve the US code base in magnetic fusion energy and allow codes to interoperate

A word to the sponsors
- Don’t cut off the current good stuff to start the new stuff
- The computational science & engineering workforce enters the pipeline from a variety of conventional inlets (disciplinary first, then interdisciplinary)
- Personal debts:
  - NSF HSSRP in Chemistry (SDSU)
  - NSF URP in Computer Science (Brandeis), precursor to today’s REU
  - NSF Graduate Fellowship in Applied Mathematics
  - NSF individual PI grants in George Lea’s computational engineering program. These really built community at NSF-sponsored PI meetings, long before there was any university support at all (Benninghof, Farhat, Ghattas, C. Mavriplis, Parsons, Powell, and many others active in CS&E at labs, agencies, and universities today)

Related URLs
- Personal homepage (papers, talks, etc.): http://www.math.odu.edu/~keyes
- ISCR (including annual report): http://www.llnl.gov/casc/iscr
- SciDAC initiative: http://www.science.doe.gov/scidac
- TOPS software project: http://www.math.odu.edu/~keyes/scidac

The power of optimal algorithms
Advances in algorithmic efficiency rival advances in hardware architecture.
Consider Poisson’s equation, $\nabla^2 u = f$, on a cube of size $N = n^3$ (figure: cube with $n = 64$ points per edge):

Year  Method       Reference                 Storage  Flops
1947  GE (banded)  von Neumann & Goldstine   $n^5$    $n^7$
1950  Optimal SOR  Young                     $n^3$    $n^4 \log n$
1971  CG           Reid                      $n^3$    $n^{3.5} \log n$
1984  Full MG      Brandt                    $n^3$    $n^3$

If $n = 64$, this implies an overall reduction in flops of ~16 million*
*On a 16 Mflop/s machine, six months is reduced to 1 s

Algorithms and Moore’s Law
This advance took place over a span of about 36 years, or 24 doubling times for Moore’s Law.
$2^{24} \approx 16$ million, the same as the factor from algorithms alone!
(Chart: relative speedup versus year.)

The power of optimal algorithms
- Since $O(N)$ is already optimal, there is nowhere further “upward” to go in efficiency, but one must extend optimality “outward”, to more general problems
- Hence, for instance, algebraic multigrid (AMG), obtaining $O(N)$ in anisotropic, inhomogeneous problems
(Diagram: AMG framework. Within $\mathbb{R}^n$, pointwise relaxation damps some of the error. The remaining algebraically smooth error is eliminated by choosing coarse grids, transfer operators, etc., based on numerical weights and heuristics.)
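The headline numbers in the Poisson table and the Moore’s Law comparison can be checked with a few lines of arithmetic. The Python sketch below keeps only the leading-order flop terms from the table for banded GE ($n^7$) and full multigrid ($n^3$), with constants dropped as on the slide. It assumes a Moore’s Law doubling time of 1.5 years, which is what 24 doublings in about 36 years implies. The function names `flop_ratio` and `moore_factor` are hypothetical.

```python
def flop_ratio(n: int) -> int:
    """Leading-order flop ratio of banded GE (n^7) to full multigrid (n^3)."""
    return n ** 7 // n ** 3


def moore_factor(years: float, doubling_time: float = 1.5) -> float:
    """Hardware speedup if performance doubles every `doubling_time` years (assumed 1.5)."""
    return 2.0 ** (years / doubling_time)


def main() -> None:
    n = 64
    N = n ** 3
    ratio = flop_ratio(n)
    print(f"N = n^3 = {N:,} unknowns")
    print(f"GE/MG flop ratio = n^4 = {ratio:,}")

    # Footnote check: if full MG takes 1 s at 16 Mflop/s, banded GE needs
    # `ratio` times as many flops, i.e. `ratio` seconds on the same machine.
    days = ratio / 86_400
    print(f"1 s of MG corresponds to {days:.0f} days (~{days / 30.44:.1f} months) of GE")

    # Moore's Law over ~36 years at an assumed 1.5-year doubling time: 24 doublings.
    print(f"Moore's Law factor over 36 years = {moore_factor(36):,.0f}")


if __name__ == "__main__":
    main()
```

The ratio is exactly $n^4 = 2^{24} = 16{,}777{,}216$ for $n = 64$. At one second per multigrid solve, banded GE would take about 194 days, which is the “six months” of the footnote. The same factor results from 24 hardware doublings.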