Altera Presentation - Critical Facilities Roundtable

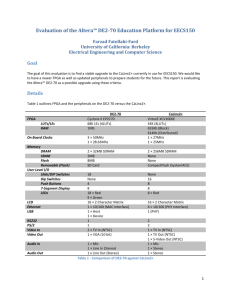

PG&E and Altera Data Center Energy Efficiency Project

PG&E and Altera: A History of Energy Efficiency
• After hours cooling project
• Chiller VFD retrofit
• CDA compressor replaced with VFD CDA compressor
• Data Center Efficiency Project

PG&E and Altera: A History of Energy Efficiency

VFD CDA Compressor Retrofit
• Project cost: $110k
• ROI: 3 years
• PG&E rebate: $15k
• Annual savings: $32k

After Hours Cooling Project
• Project cost: $78k
• ROI: 1.1 years
• PG&E rebate: $36k
• Annual savings: $39k

Chiller VFD Retrofit
• Project cost: $139k
• ROI: 3.8 years
• PG&E rebate: $31k
• Annual savings: $29k

Altera Data Center Energy Efficiency Project Objectives
• Keep servers between 68°F and 77°F (ASHRAE)
• Reduce energy use
• Accommodate server growth
• Increase server and data center reliability

Data Center Layout
(figure)

Data Center Before Improvements
(figure, labeled: portable AC unit)

Two Interests Meet
• Altera had a temporary cooling unit in place to serve a ‘hot spot’ and was looking at ways to handle planned load increases.
• In PG&E’s territory, improving data center energy efficiency by 15% would save 100 GWh of electricity, the equivalent of powering 15,000 homes for a year or taking almost 8,000 cars off the road.

Why is Airflow a Problem in Data Centers?
• Wasted energy
• Wasted money
• Less capacity
• Less reliability

Why Do These Problems Exist?
In a typical data center…
• Only 40% of AC air is used to cool servers (Robert 'Dr. Bob' Sullivan, Ph.D., Uptime Institute)
• HVAC systems are 2.6 times what is actually needed (Robert 'Dr. Bob' Sullivan, Ph.D., Uptime Institute)
• Not a big concern when power density was low, but it continues to grow (about 150 W/sq ft)
• The culprit: allowing hot and cold air to mix

First Step: Assess Current Situation
• Data loggers placed in the inlet and discharge air streams of each cooling unit.
• Four loggers placed in each cold aisle.
• Current transformers installed on the electrical distribution circuits feeding the two roof-top condensing units.
• Total rack kW load was recorded to establish baseline.

Findings
• Temperature difference across cooling units ranged from 12°F to 18°F.
• Temperature variance of up to 14°F from one server to another.
• Approximately 45 kW could be saved in theory if air flow was ideal.

Second Step: Implement Ideas from Meeting with PG&E
• ALL servers must be in hot/cold aisles (HACA)
• Blanking plates between servers
• Strip curtains at ends of aisles
• Remove perforated tiles from hot aisles
• Partitions above racks

Third Step: Altera Adopts Changes
• APC in-row coolers installed
• Temporary cooling unit removed
• Blanking plates added
• Strip curtains installed to separate the computing racks from the telecom area
• CRAC unit shut off

Addition of APC IRCs
(figure)

Simple Changes, Big Benefits
(figure)

Final Measurement and Review
• Even after Altera made all of these changes, excess cooling capacity still existed.
• PG&E recommended shutting down a second CRAC unit, thus putting all primary cooling on chilled water units.

Altera’s New and Improved Data Center
• Temporary mobile cooling unit gone
• Two CRAC units shut off
• Server temperature variance a mere 2°F
• Net electricity reduction of 44.9 kW
• Annual energy savings of 392.9 MWh
• Overall energy savings: 25%

Moral of the Story?
• Improving airflow is a safe and sensible strategy to simultaneously make data centers greener, more reliable, higher capacity, and more economical to operate.
• To achieve results such as Altera’s, it takes teamwork between IT, Facilities, HVAC experts, and PG&E.
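
The project figures quoted in the slides can be sanity-checked with a little arithmetic. The sketch below is not from the presentation. It assumes the "ROI" values are simple payback periods, (cost minus rebate) divided by annual savings, and that the 44.9 kW reduction applies around the clock for 8,760 hours a year. The slides state neither assumption, and the function names are illustrative. Under these assumptions the results land close to, but not exactly on, the stated values, which suggests the presenters rounded or used slightly different inputs.

```python
# Assumption: continuous operation over a non-leap year.
HOURS_PER_YEAR = 8760


def simple_payback_years(cost_k, rebate_k, annual_savings_k):
    """Years needed to recover the net cost (cost minus rebate) from annual savings."""
    return (cost_k - rebate_k) / annual_savings_k


def annual_energy_mwh(reduction_kw, hours=HOURS_PER_YEAR):
    """Annual energy saved, in MWh, for a constant power reduction in kW."""
    return reduction_kw * hours / 1000.0


# Values in thousands of dollars, copied from the slides:
# (project cost, PG&E rebate, annual savings, stated ROI in years)
projects = {
    "VFD CDA compressor retrofit": (110, 15, 32, 3.0),
    "After hours cooling project": (78, 36, 39, 1.1),
    "Chiller VFD retrofit": (139, 31, 29, 3.8),
}

for name, (cost, rebate, savings, stated) in projects.items():
    payback = simple_payback_years(cost, rebate, savings)
    print(f"{name}: computed {payback:.2f} years, slides state {stated} years")

energy = annual_energy_mwh(44.9)
print(f"44.9 kW for a full year: {energy:.1f} MWh, slides state 392.9 MWh")
```

The computed paybacks are about 2.97, 1.08, and 3.72 years, and the energy figure is about 393.3 MWh.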