Benchmarking 1 - learning


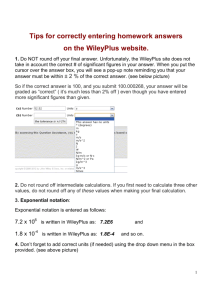

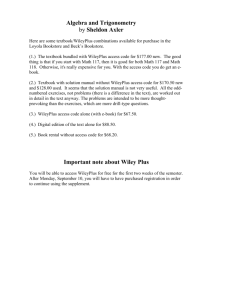

Benchmarking and Mastery: Integrating Assessment into Teaching and Learning
roy.williams@port.ac.uk
Dept. Mathematics, Univ. of Portsmouth
Paper presented at ICTMT, Portsmouth, July 2011

Some things to think about …
• What’s the difference between “doing mathematics” and “thinking like a mathematician”?
• What’s happening when students come back for an extra 5-7 hours of exams, after finishing the scheduled 7 hours of exams?
• Can we relegate assessment to the certification of students’ own benchmarking?
• What is the best resourcing model: how do we balance and manage:
  o Investment, Outsourcing, Crowd-sourcing, or:
  o Fixed, Contracted or Shared costs?

What’s the Question? A Digitally Enhanced Learning Ecology? (a new DELE?)
Not ... What does it mean to take technology into the classroom?
But rather ... What does it mean to situate teaching and learning within a digital learning ecology?

Benchmarking and Mastering What?
• Transformation
• Generalisation
Applications / Theory
Or …
• Applying algorithms
• Creating algorithms
• New proofs
• Alternative proofs (e.g. by reductio ad absurdum)
• More elegant proofs
• More elegant solutions
• New/more elegant descriptions/applications
• Informing new strategies (biology, finance, logistics)
• Facilitating new laws
• Creating new tools(?): Tau/Pi, i, infinity, fractals, statistical tendency, calculus

18 Months Large Classes “Live Pilot”: 1st Year Calculus and Algebra: Wileyplus
1. 2-week start-up (+ and -).
2. ASP (Application Service Provider):
• e-text book (text book), practice tests (algorithmic), compile exams, ppt, PRS questions, maths palette, hints/links/solutions.
3. F/S Assessment // Benchmarking
• Students can repeat individual questions (3 times, new values), not the whole assessment (~100 questions).
• 7 hours of exams (+ 7 hours more, optional).
4. Many hours practice (new and old values), anywhere, 24/7.
5. Many students return for up to 7 extra hours of exams, to repeat ones that they may already have passed, or scored well in.
They are:
• Accumulating competencies, not collecting grades.
• Shifting from external requirements for compliance >> self-organised mastery (strategic behaviour?).
6. Log books: for writing mathematics.

Figure 1: Basic
Figure 2: Wiley
Figure 3: Design Palette
Figure 4: Design # 1
(Key to Figures 3 and 4: R: resources; A: apps; N: networks; W: wikis; M/B: micro/blogging.)

Narrative

0. This is a basic outline of the presentation, which will be worked up into something more substantial, a paper, after the conference.

1. Introduction
This presentation raises some questions about assessment, about the use of technology in teaching and learning, and about how all of these may be integrated, within an approach that shifts away from traditional ‘assessment’ towards ‘benchmarking and mastery’.
It also provides a rather basic, but open and interactive, strategy and design template for having conversations about past, present, and future approaches to resolving some of these questions.

2. Some things to think about ...
In exploring these issues, we need to be mindful of the following:

2.1 What’s the difference between ‘doing mathematics’ and ‘being a mathematician’?
Richard Noss, in his keynote address, said that rather than thinking about how technology can help us to do what we already do (although I am sure he has no objection to that), we should focus on how technology can help us to explore previously unlearnable ideas. Several other papers picked up on the way that dynamic and interactive mathematical learning environments (DIMLE, or DILE; Novotna & Karadag) can be used to enable students to learn by exploring, trying out, and refining in these digital environments.

2.2 But first we need to ask ourselves what this ‘mathematics’ is that we want students to learn.
Noss spoke simply of transformation and generalisation. We might extrapolate this, for instance by linking it to theory and/or application. Or we might consider which aspects we would like to include and prioritise, and how we would like to link them to, for instance:
• Applying, creating, deriving algorithms.
• New, alternative, or more elegant proofs/solutions.
• New/more elegant descriptions/applications.
• Informing new strategies (biology, physics, finance, logistics).
• Providing powerful ideas for articulating theory in other disciplines.
• Creating new ideas and tools: Tau/Pi, i, infinity, fractals, statistical tendency, calculus.

2.3 We then need to look at the data, and ask ourselves: what’s happening when students come back for an extra 5-7 hours of exams, after they have already finished the scheduled 7 hours of exams?
This has been consistent over two semesters now, so it is not the novelty of the experience; it was the result of the changes that we put in place when we started using the Wileyplus platform (see below).

2.4 This leads to an important challenge: can we relegate assessment to the certification of the student’s own benchmarking?
To some extent the answer is yes, as emerges from the previous point, although it may also be a rather idealistic goal that still needs to be explored further (in research and in practice).

2.5 Lastly, what are the implications for resourcing good teaching and learning: how do we balance and manage investment, outsourcing and crowd/peer-sourcing, or fixed, contracted-out and shared costs?
This arises from many years of e-assessment development and practice, and our recent research on using an ASP (Application Service Provider), i.e. Wileyplus (McCabe, Williams & Pevy, 2011). The issue is the design, development and management of an integrated, sustainable, and flexible teaching and learning environment, and whether we go down the path of internal investment (fixed in-house costs), outsourcing (via commercial ASPs), or what might be called “peer-sourcing” (a multi-institutional variant of crowd-sourcing or open-sourcing, or what Mike McCabe calls open re-sourcing).

All of this leads us to a different question from the one posed early on in the conference, namely: “What does it mean to take technology into the classroom?” We should rather turn the question around: “What does it mean to take teaching and learning into a dynamic, digitally enhanced learning environment?”

3. Eighteen-month large classes “Live pilot”
We are coming to the end of what could be called an 18-month large classes “live pilot”, using Wileyplus for the 150 or so students taking the 1st year courses in calculus and linear algebra. The key aspects of this were the following:

3.1 Two-week start-up
We literally got the course up and running, live, with 150+ students, in two weeks, with help from the Wileyplus team in Chichester. To be fair, it was based largely on a text book that was already used for this course, published by Wiley, and we had other e-assessment resources available too (both additional and back-up). Nevertheless, it points to two things: i) given a suitable, well known core text, it is possible to mount an integrated facility for a course in two weeks; ii) this therefore also applies, albeit with slightly more lead time, to possibly switching to an alternative ASP. The digitally based educational resource market is more flexible and more competitive than its print (only) predecessors.

3.2 ASP (Application Service Provider)
An external provider, Wileyplus, was used, which provided a full e-text book (with paper text book available for purchase), algorithmic practice tests, the facility to compile exams, ppt materials, PRS questions, a symbolic palette for writing answers ‘in mathematics’, basic graphic interaction in providing graphs as answers, and hints/links/answers/solutions that can be set to link to particular attempts (1st attempt, 2nd, etc.).

3.3 Assessment // Benchmarking
These courses use a hybrid mode of formative/summative assessment: seven tests are set over a 12-week semester, designed to be completed in 7 one-hour sessions, with an additional 7 hours of testing optionally available. Students can repeat individual questions (3 times, with new values), rather than the previously used model, in which they had to repeat a whole assessment (up to 3 times). Students can also practise, in similar tests, using either the same values or new values each time, 24/7, accessible from anywhere.

3.4 Logbooks, lab sessions, tutorials, Maths Café.
It is possible to capture ‘working’ in Wileyplus, but it was decided to ask students to write up solutions for selected practice questions in a hard-cover workbook which each of them was given. This logbook served as a resource for their own learning, and for tutorials, for lab sessions, and discussions with peers or lecturers in the open space ‘Maths Café’. Students were required to hand their logbooks in at the end of the semester, and completion of a minimum amount of work contributed a small amount to their grade for the semester.

3.5 Data
Data from initial research (McCabe, Williams & Pevy, 2011), as well as from more recent semesters, show that students spend substantial amounts of time doing practice tests online. Many of them put considerable effort into writing up solutions in their logbooks, and roughly a third of the students spend up to 14 hours in the exam sessions, i.e. up to 100% over and above what is required (a total of 14 hours rather than 7 over the semester and exam period).

4. Discussion
From observation of students and a rough survey of the data in Wileyplus, as well as from a focus group workshop, it is clear that many students are working well beyond the minimum requirements. This is true for nearly all students using the ‘repeat individual questions’ options in Wileyplus, but is hardly true at all for other test material provided in Maple TA, in which the only option they had was to repeat the whole assignment, regardless of how much they achieved in their previous attempts. This is unusual behaviour for students, particularly as it applies across the board: to struggling, average and good students. E.g. student A did ...., student B did ...., student C did ...

In broad terms, what seems to be happening is that students are:
1. First of all, complying with the course’s threshold requirements (25% per test, 40% aggregate for the course).
2. Continuing to benchmark their own ability, based on internal motivation to master more of the material and on what they would like to try to achieve.
3. Accumulating competencies, not collecting grades.

It is clear that the specific affordances of providing opportunities to repeat questions (rather than whole tests) are crucial in motivating students to go beyond minimal compliance, and to start to benchmark themselves against their own targets.

This takes us back to the question of what it is that they are benchmarking and mastering: doing mathematics, or becoming mathematicians? To put it in Noss’s terms (above), are they simply mastering specific transformations, or are they also grasping the underlying principles of generalisation, as well as its use, in theoretical and applied problems?

In practical terms, it might be useful to consider the following:
1. What are the limits of doing this in large classes?
2. Is benchmarking and mastery applicable to smaller classes, and if so, would it be done differently?
3. What are the limits of compliance and mastering transformations?
4. How should mathematical thinking and working, and specifically the processes of generalisation, be accommodated, and how can they usefully be benchmarked and mastered?
5. What are the limits of mastery, particularly in large classes?
6. How far is it desirable and possible to ‘relegate assessment to the certification of the student’s own benchmarking and mastery’?

5. Design and Strategy
Once we have provisional answers to these issues, we can explore how we might situate teaching and learning in a digital learning ecology. One way to do this is to visually explore the way teaching, learning, assessment, benchmarking, mastery, and technology can fit together in a (digital) learning ecology. To do this we need the following:

5.1 Basic Model
Figure 1 outlines the basic elements that are provided in Higher Education to enable a student to pass through ‘mathematics’ to graduate.
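As a concrete illustration of the points made in the Discussion, the following minimal Python sketch contrasts the two repeat models (repeating individual questions versus repeating a whole test) and checks the threshold requirements (25% per test, 40% aggregate). It is only a sketch under stated assumptions: the function names are hypothetical, ‘3 times’ is read as at most three attempts per question, a question is taken to count once any attempt is correct, the best whole sitting is taken to count in the whole-test model, and the course aggregate is taken to be the plain mean of the test scores. None of these scoring rules is confirmed by the Wileyplus or Maple TA set-up described here.

```python
MAX_ATTEMPTS = 3          # assumption: "3 times" means at most three attempts per question
TEST_THRESHOLD = 25.0     # per-test threshold in percent (from the text)
COURSE_THRESHOLD = 40.0   # aggregate course threshold in percent (from the text)


def question_level_score(attempts_per_question):
    """Score (%) when each question can be repeated on its own.

    attempts_per_question holds one list of booleans per question,
    one boolean per attempt (True = correct). A question counts as
    mastered if any attempt was correct (an assumed rule).
    """
    if not attempts_per_question:
        raise ValueError("at least one question is required")
    mastered = 0
    for attempts in attempts_per_question:
        if len(attempts) > MAX_ATTEMPTS:
            raise ValueError("too many attempts for one question")
        if any(attempts):
            mastered += 1
    return 100.0 * mastered / len(attempts_per_question)


def whole_test_score(sittings):
    """Score (%) when only the whole test can be repeated.

    sittings holds one list of booleans per full sitting; the best
    sitting counts (an assumed rule).
    """
    return max(100.0 * sum(sitting) / len(sitting) for sitting in sittings)


def meets_requirements(test_scores):
    """Check the per-test and aggregate thresholds (aggregate = mean, assumed)."""
    aggregate = sum(test_scores) / len(test_scores)
    every_test_ok = all(score >= TEST_THRESHOLD for score in test_scores)
    return every_test_ok and aggregate >= COURSE_THRESHOLD


# The same student effort under the two models (hypothetical data).
by_question = [[True], [False, True], [True], [False, False, False]]
by_sitting = [[True, False, True, False], [False, True, True, False]]
print(question_level_score(by_question))  # 75.0
print(whole_test_score(by_sitting))       # 50.0
```

In this made-up example the student gets three of the four questions right at some point in both models, but only the question-level model lets that accumulate into a single score, which is one way of reading ‘accumulating competencies, not collecting grades’.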
5.2 Wileyplus
Figure 2 outlines the additional, and digitally integrated, facilities offered by Wileyplus. Wileyplus has some drawbacks in terms of formal ‘summative’ assessment (though it is possible to work around them). But crucially for our purposes here, Wileyplus is designed primarily as a learning (or learning and teaching) platform, rather than as an assessment platform. This is a useful place to start.

5.3 Design Template
To take the Basic Model forward, we first of all need to decide what we need in our design ‘palette’: what features need to play a part in creating a ‘digital learning ecology’ that will be suitable for taking our student through ‘mathematics’ to becoming a mathematician? Figure 3 includes some elements of such a palette:

Workshops
Several speakers at the conference, from the first to the last keynote, showed how digital tools and platforms, (Papert’s) ‘microworlds’, digital interactive (mathematical) learning environments (DILEs or DIMLEs), etc. can not merely ‘facilitate’ teaching and learning in the literal sense (make it easier and more efficient), but can make the unthinkable, or the previously unteachable, possible (and fun). All of these can, for the purposes of this palette, be included under the title ‘workshops’, which could also take non-digital forms, such as dance and movement, as well as writing about mathematics, which emerged in one or two papers and discussions.

Benchmarking
Crucial to DILEs is, obviously, interaction. Several speakers talked of interaction in terms of iterative cycles of trying out, exploring, and refining. There are several elements that we might like to add to our palette to make this possible and to start to integrate more interaction into benchmarking. Many of these may help us to transform ‘assessment’ (or at least shift it) towards benchmarking and mastery.
These include: practice (tests and microworlds); testing or benchmarking transformations (and generalisation?); enriched interaction (links to text, tips, solutions, answers, next steps, links to debugged examples, etc.).

Flexibility and Context
Good teaching and learning is responsive to learners and to context (as far as is possible). It also needs to be relevant to the teachers and learners and their specific contexts, which are of course changeable. All this means quite simply that although it is useful to have large amounts of standardised material available, it is essential that flexibility allows for other material (both new and imported from elsewhere) to be integrated into the learning environment.

Data and Reports
Most digital platforms and tools provide data, often lots of it. What teachers (and learners) need, however, are specific, targeted reports, to answer specific questions that arise in their practice. This is often lacking, and where it is provided, it is driven not by learning, but rather by administration. Teachers and learners could use far more sophisticated reports (and ‘feedback’), for which most of the data is already available (just not in useful outputs).

Certifying Benchmarking
Some outputs, like ‘summative assessment’, which might be transformed into ‘certifying benchmarking’, need additional layers of security. This is best done by password-controlled access, and is essential to eliminating the unnecessary administrative (and technical) overload that is otherwise required. (Note: this should not, and does not have to, shift the ‘centre of gravity’ of the learning environment back from teaching and learning into formal assessment or, even worse, into administration.)

Links
Teachers and learners already make extensive use of materials, networks and contacts available through the Internet. There are several tools and platforms that make it possible to link and share all of these.
In principle it would be more useful if they could be integrated to a greater extent with teaching and learning. The current trend is away from institutional VLEs (virtual learning environments, many of which are no more than student management systems), towards PLEs (personal learning environments). This is an advance, but there is still much to be done to link up PLEs across communities of inquiry (such as courses). Our palette needs to have many types of links, so that we can decide where they need to connect within our digital learning environment, and how this is to be done.

Design Draft #1
Figure 4 is an example of how the Design palette in Figure 3 could be used to design an integrated, flexible, digital learning environment. A number of elements of the design have been ‘binned’ at the bottom left: assessment, formative and summative assessment, and feedback. These are all displaced by: practice tests, benchmarking, tips, next step, solutions, links, answers. ‘Debugging’ has been brought in too: it can capture, tag, and display common errors, and point up other errors for discussion. ‘Workshops’ now overlaps with lectures, tutorials and even the e-textbook, as it can shift the mode of all of these activities to something more interactive, particularly if other tools (DIMLEs and Apps) are linked in here too. ‘Data’ is relegated to the background, to be replaced by ‘Reports’ (on demand) for lecturers and students. ‘Passwords’ is an essential addition, to link Benchmarking to Certifying Benchmarking. Links are added too, to wikis, networks, resources, and to other DIMLEs and Apps. The domain of ‘mathematics’ is expanded to include all of the core elements provided by the university, as well as the links to external resources and networks.

Resourcing
Resourcing is a key part of design and strategy. In broad terms, there are three options: outsourcing, in-house investment, and sharing (see McCabe, Williams & Pevy, 2011).
In principle, all three options, and combinations of them, can deliver what is required. However, to achieve integration across all of the above areas in our design palette, considerable organisational and financial resources are required. This can be done in the shared (or ‘peer-sourced’) option (see for instance Linux), but the ‘text book’ (electronic or otherwise) still seems to require a ‘publisher’. (There are interesting questions about whether the relationship between core text and core activities could be inverted, but that is speculative, and it is too large a question to go into here.) Commercial ASPs already provide this option, although without much of the desired flexibility. In-house options can and do provide quite a lot, but the management and systems requirements for a comprehensive facility exceed the capacity of individual organisations, many of which are now required to compete against each other, rather than co-operate.

6. Conclusion
This paper has raised several questions about the way in which assessment can be integrated into teaching and learning, and in fact become just a confirmation of learning that has already happened, in a way which is more student centred and student driven, and which markedly increases student effort and motivation.

One of the interesting issues that arose in the conference was the impact of e-assessment. It was asked whether e-assessment, however much the students enjoyed doing it, actually had an impact on their understanding and learning in the next years of their courses. It probably goes without saying that multiple choice e-assessment is just (and only) that: it assesses what the student knows at a particular point in time, and not much more. The impact of quite a different form of e-assessment (where the answers are written ‘in mathematics’), and quite a different mode of assessment (personal benchmarking and mastery), might be different, and could be an interesting area to research.
The ‘live pilot’ experience of using Wileyplus points in the direction of a different mode of assessment, teaching and learning, based on student driven mastery (however modest that may be). But if this can be achieved, the next question would be whether mastery could be opened up, and (slightly) expanded, so that motivated students could take up further challenges, beyond the confines of a particular course.

The design and strategy palette is a very basic, but quite flexible, template for trying out, exploring, and refining some of the ways in which we might ‘situate teaching and learning in a digital learning ecology’.

The question of resourcing remains, as none of the three options (above) currently yields all that is required. What is evident, though, is that providing the resources and the affordances for students to repeat each question up to three times (each time with different values) does lead to quite different behaviour across the board, and does open up the possibility of ‘relegating assessment to the confirmation of the student’s own benchmarking and mastery’.

McCabe, M., Williams, R.T. & Pevy, L. (2011) ASPs: Snakes or Ladders for Mathematics? Forthcoming, MSOR proceedings.