CCCT05-austin


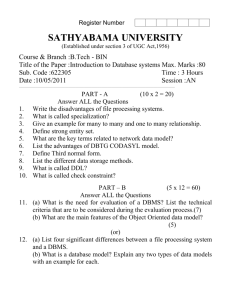

The Problem of Context in Sentence Production
Surely A Case to Re-Convene the Data Base Task Group?

Derek J. SMITH
Centre for Psychology
University of Wales Institute, Cardiff
smithsrisca@btinternet.com
http://www.smithsrisca.co.uk

As presented to the 3rd International Conference on Computing, Communications, and Control Technologies, Austin, TX, Wednesday 27th July 2005

A BIT MORE ABOUT THE AUTHOR

• 1980s - specialized in the design and operation of very large DBTG databases.
• Since 1991 taught cognitive science and neuropsychology to Speech and Language Pathologists.
• Hence interdisciplinary in database, cognitive neuropsychology, and psycholinguistics.

THE PROBLEM

• Information systems love duplicating your data. E.g. duplicate postings to a transaction file, duplicate entries in a master file, entire duplicated files (try moving house, and see how long it takes for the old address to stop being used!)
• To help cure this problem, by the late 1950s steps had been taken to specify organizational data more accurately using "data dictionaries" and "data models".
• This accumulation of "metadata" - data about data - was then used to specify a central shared-access data "base", and the software products which managed the whole process became known as "database management systems", or "DBMSs".
• And yet those not directly involved with DBMSs know little about their technical construction, evolution, or how to design or operate them effectively (Haigh, 2004).

THE PLAN OF ATTACK

• This paper concerns itself with the network database.
• It reminds us of the history, not just of the database itself, but of the whole idea of associative networks .....
• ..... and then considers the trans-disciplinary relevance of the underlying concepts and mechanisms to the science of psycholinguistics, because they might just help solve some of that science's long-standing problems.
• So history ..... networks ..... words in the mind .....
• and always in the no-man's-land between pure IT and pure psychology
• and if nothing else, you will at least learn the difference between speech and language!

NETWORK DATABASE HISTORY (1)

• The story of the network database begins in the early 1960s at the General Electric Corporation's laboratories in New York, where Charles W. Bachman had been given the job of building GE a DBMS.
• The resulting system was the "Integrated Data Store" (IDS), and was built around a clever combination of two highly innovative design features, namely (1) a "direct access" facility similar to IBM's acclaimed RAMAC, and (2) Bachman's own "data structure diagram" (soon to become famous as the "Bachman Diagram").
• This is how the direct access part of the equation is implemented .....

NETWORK DATABASE HISTORY (2)

• Bachman Diagrams prepare your data for maximum usability by analyzing it on a set owner/set member basis.
• Owner records are stored using the direct access facility, and their related members are identified using chain pointer addressing.
• "Via-clustering" is often used to keep member records physically close to their owners. (This cannot always be done, but is very efficient in disc accesses when it can be.)

NETWORK DATABASE HISTORY (3)

• And here, from Maurer and Scherbakov (2005, online), is a typical owner-member set (left) and the corresponding Bachman Diagram (right) .....

NETWORK DATABASE HISTORY (4)

• Bachman had the IDS prototype running in early 1963, and by 1964 it was managing GE's own stock levels.
• Initial user feedback was so positive that the Bachman-GE approach soon came to the attention of CODASYL, the committee set up by the Pentagon in May 1959 to produce a general purpose programming language.
• However, CODASYL had published the specifications for COBOL in January 1960, so it predated IDS by four years and had accordingly not been designed to support the particular processing requirements of DBMSs .....
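
The owner/member arrangement described under NETWORK DATABASE HISTORY (2) can be illustrated with a small Python sketch. It is a toy under stated assumptions, not IDS or IDMS code: a Python dict stands in for the direct access (hashed) storage of owner records, an in-memory object reference stands in for each chain pointer, and the names `Record`, `ToyNetworkStore`, `store_owner`, `connect_member`, and `members` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Iterator, Optional


@dataclass
class Record:
    key: str
    # Chain pointer: the next record in the set (assumption: a plain object
    # reference stands in for a disc address).
    next_in_set: Optional["Record"] = None


class ToyNetworkStore:
    """Owners are found by direct access; members are reached by walking a chain."""

    def __init__(self) -> None:
        # A dict is itself a hash table, so it stands in for hashed direct access.
        self._owners: Dict[str, Record] = {}

    def store_owner(self, key: str) -> Record:
        owner = Record(key)
        owner.next_in_set = owner  # an empty set is a ring of one: owner -> owner
        self._owners[key] = owner
        return owner

    def connect_member(self, owner_key: str, member_key: str) -> Record:
        owner = self._owners[owner_key]
        last = owner
        while last.next_in_set is not owner:  # walk to the end of the chain
            last = last.next_in_set
        member = Record(member_key, next_in_set=owner)  # new tail points back to owner
        last.next_in_set = member
        return member

    def members(self, owner_key: str) -> Iterator[str]:
        owner = self._owners[owner_key]
        current = owner.next_in_set
        while current is not owner:  # stop once the ring returns to the owner
            yield current.key
            current = current.next_in_set


store = ToyNetworkStore()
store.store_owner("customer-001")
store.connect_member("customer-001", "order-A")
store.connect_member("customer-001", "order-B")
print(list(store.members("customer-001")))
```

The sketch makes no attempt at via-clustering, which concerns where records sit physically on disc and has no counterpart in an in-memory toy.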
NETWORK DATABASE HISTORY (5)

• In fact, it was so difficult for COBOL to implement IDS's chain pointer sets (or "lists") of records that in October 1965 CODASYL established a List Processing Task Force (LPTF) to look into possible improvements to the specification.
• The LPTF meetings immediately became so dominated by database issues in general that the task force renamed itself the Data Base Task Group (DBTG).
• We may thus refer to IDS as a "DBTG database", a "CODASYL database", or a "network database". All these terms are synonymous and used interchangeably in the literature.

NETWORK DATABASE HISTORY (6)

• A curious turn of events then saw IDS development taken over by one of GE's early customers, the B.F. Goodrich Chemical Corporation. They had been highly impressed with IDS, but wanted greater functionality, so they bought the rights to develop an IBM version.
• By 1969, Goodrich were able to market their improved system in its own right, badging it as the "Integrated Database Management System" (IDMS). The new product was heavily deployed in the 1980s, and survives to this day as Computer Associates' CA-IDMS, in which incarnation it continues to power many of the world's heaviest-duty online systems.
• Bachman was awarded the 1973 A.C.M. Turing Award for his achievements .....

NETWORK DATABASE HISTORY (7)

• ..... however, it's just possible that Bachman had actually been cleverer with his network memory technology than even he or the Turing Awards panel realized .....
• ..... because in historical terms the idea of associative memory underlies much of psychology as well. Indeed, it dates all the way back to the classical Greek philosophers.
• So now for some history from a totally different discipline .....

THE BIRTH OF COGNITIVE SCIENCE (1)

• Aristotle had suggested back in around 350 BCE that memory was based on the "incidental association" of one stored concept with another.
• That same general orientation went on to give its name to the entire "Associationist" tradition of philosophy, culminating in Freud's "association of ideas" technique of psychoanalysis and the modern connectionist net and semantic network industries.
• Data networks, in other words, are nothing new to students of the mind, and in this paper we are going to select one application in particular for attention .....

THE BIRTH OF COGNITIVE SCIENCE (2)

• ..... namely early attempts at machine translation (MT).
• It was one of these early "computational linguists" - Cambridge University's Richard H. Richens, plant geneticist by profession but self-taught database designer into the bargain - who first coined the popular modern term "semantic net" (Richens, 1956, p23).
• Richens was actually half of an important research partnership in the history of cognitive science. Just after the war he had toyed with Hollerith technology to help him analyze his genetics research data, and had ended up with a rudimentary punched-card database.
• This experience convinced him that with the right arrangement of data and greater processing power it ought to be possible to automate almost anything, including natural language translation. So he began designing the card layouts for a bilingual machine dictionary - making him arguably the first database designer?

THE BIRTH OF COGNITIVE SCIENCE (3)

• Richens then discovered that his enthusiasm for MT was shared by a University of London crystallographer named Andrew D. Booth, himself something of an expert in computers. During WW2, Booth had been a "boffin" in the rubber industry, X-raying slices of rubber from destroyed enemy aircraft and vehicles. And because X-ray crystallography generates a lot of numbers, Booth had built calculating machinery to assist him. He had continued this work when he got a peacetime lectureship at Birkbeck College.
• This research had then brought him to the attention of the US National Defense Research Committee's Warren Weaver. Weaver duly met with Booth on 8th March 1947 while the latter was on a fact-finding visit to the University of Pennsylvania's Moore School of Engineering.
• Weaver was so enthusiastic about what Booth had to say that he used his influence to put him forward for a study scholarship under John von Neumann at Princeton's Institute for Advanced Study.

THE BIRTH OF COGNITIVE SCIENCE (4)

• Booth was at Princeton from March to September 1947, and upon his return to Britain proceeded to build a small relay [i.e. electromechanical] computer, complete with one of the first magnetic drum memories (10 years before IBM's RAMAC).
• A chance meeting of minds then changed the world. It took place between Richens and Booth on 11th November 1947 (Hutchins, 1997), and focused on the pair's shared interest in MT.
• They concluded that Booth's magnetic drum might provide the sort of random access technology needed to host Richens' proposed lexical database - giving them 15 years prior claim to Bachman's basic IDS architecture.

THE BIRTH OF COGNITIVE SCIENCE (5)

• There followed a decade of collaborative research during which this - and eventually many other teams - found out just how complicated natural language really was!
• To start with, MT took the scientific world by storm, with the first MT conference being organized at MIT by Yehoshua Bar-Hillel (an MT skeptic). This took place 17-20th June 1952.
• Centers of academic excellence soon emerged at MIT (Victor Yngve), Washington (Erwin Reifler), and Berkeley (Sydney Lamb). Britain's effort was concentrated at the Cambridge Language Research Unit, under Margaret Masterman, where the researchers included Richens himself, Frederick Parker-Rhodes, Yorick Wilks, Michael Halliday, and Karen Spärck Jones.
THE BIRTH OF COGNITIVE SCIENCE (6)

• In short, this was interdisciplinary science at its best, and its target - language - lay at the very heart of cognition. We therefore date the birth of cognitive science to that foggy November 1947 meeting between Richens and Booth.
• Semantic networks are now a major research area within AI (for an excellent review, see Lehmann, 1992).

OUR PROBLEM AND OUR PLAN

• However, as an IDMS designer-programmer turned cognitive scientist, our personal complaint is that network researchers typically ignore the explanatory and practical potential of the network database.
• To help restore the balance, the present paper will explore how IDMS concepts might help with one of cognitive science's most troublesome problems, that of context in speech production.
• So let us move away from all the history and look at some modern psycholinguistics. Specifically, we need to look at the staged cognitive processing which takes place during speech production.
• WARNING: "Language" and "speech" are - crucially - NOT THE SAME THING, as we shall shortly be seeing.

SPEECH PRODUCTION STAGES (1)

• The notion that voluntary speech production involves a succession of hierarchically organized processing stages may be seen in a number of influential 19th century models of cognition, but the subject was largely ignored until UCLA's Victoria A. Fromkin reawakened interest in it in the early 1970s (Fromkin, 1971).
• Fromkin proposed six processing stages. The first three stages constitute the language part of the speech and language equation, while the latter three provide the speech to go with it.
• Reassuringly, there is virtual unanimity amongst authors ancient and modern as to where in the overall scheme of things to place the bulk of the semantic network .....
• ..... you simply attach the semantic network to the command and control module at the top of the cognitive hierarchy, to serve as that module's resident knowledge base.
SPEECH PRODUCTION STAGES (2)

• The result is a mental champagne-cascade .....
• ..... with ideas pouring down from the top .....
• ..... words being added on the way down .....
• ..... the ideas plus the words give you your language .....
• ..... sounds being added below that .....
• ..... and "linear" speech emerging at the bottom.

SPEECH PRODUCTION STAGES (3)

• This diagram is from Ellis (1982) and shows how psycholinguists typically summarize the flow of information between cognitive modules.

SPEECH PRODUCTION STAGES (4)

• Here we see the speech production (lower left) leg of Ellis (1982) in close-up.
• Note the three successive modules. Fromkin's six stages map roughly two each onto these hierarchically separated processing levels .....

SPEECH PRODUCTION STAGES (5)
STAGE #1 - PURE IDEATION

• Stage #1 - Propositional Thought: This is the selective activation of propositions within the semantic network, as part of the broader phenomenon of reasoning, and it is vitally important to students of the mind because it establishes the semantic "context" for whatever happens next, and especially the use and interpretation of words.
• This stage is known by Associationist epistemologists as "ratiocinative" thought.

SPEECH PRODUCTION STAGES (6)
STAGE #2 - SPEECH ACTS

• Stage #2 - Speech Act Volition: This is where a carefully selected subset of the aforementioned stream of propositions is converted into a "speech act" of some sort.
• Speech acts are preverbal linguistic manipulations of the social environment, each calculated to achieve some discrete behavioral effect.
• Fully functioning adult humans have a repertoire of around 1000 different speech acts to choose from (see Bach and Harnish, 1979, for a fuller list).
• In the Chomskyan sense, speech acts give us much of our "deep" sentence structure.
• This structure is what gets passed down to Stage #3, thus interfacing the original thought with the spoken word.

SPEECH PRODUCTION STAGES (7)
THE POINT ABOUT SPEECH ACTS

• Because it is the final outcome which matters, speech acts are free to generate sentences which use words ironically or figuratively.
• E.g. such everyday phrases as "when you have a moment" (i.e. now) and "if you don't mind" (i.e. whether you do or not).

SPEECH PRODUCTION STAGES (8)
THE POINT ABOUT ENCODING

• Note very carefully that all the mental content we have talked about so far has been NONVERBAL.
• In fact, you should think of it as encoded in "images", "icons", "sprites", "ideograms", etc., both concrete and abstract.
• This is very awkward in practice, because you usually end up having to describe in words something whose very essence is that the words haven't yet been selected.

SPEECH PRODUCTION STAGES (9)
STAGE #3 - "LEXICALIZATION" (REPLACING IDEAS WITH WORDS)

• Stage #3 - Word Finding: The deep structure produced by Stage #2 is now passed block by block (grammarians call them "phrases") down the motor hierarchy.
• Stage #3 determines the surface words to be used and how they will need to be combined syntactically. Identifying the "agent" of a sentence is particularly vital. For example, consider the ideation "<IDEO = Fido> <IDEO = bite> <IDEO = Derek> <SPEECH ACT = warn>".
• If you get the agent-object relationship confused, then the sentence "Derek bites Fido" will be just as likely to occur as "Fido bites Derek".

CONTEXT IN SPEECH PRODUCTION (1)
THE PROBLEM OF PRONOUNS

• There is an even bigger problem with pronouns, thus .....
• "Fido is going to bite Derek"
• "Fido is going to bite him"
• "He is going to bite Derek"
• "He is going to bite him"
• Context allows the most appropriate NOUN-PRONOUN option to be selected, hence the process is highly sensitive to the prior state of the concept network, IN BOTH SPEAKER AND LISTENER.
• Indeed, it is fair to say that it is the mind's context maintenance mechanisms - whatever they are - which allow everyday conversation to rely so heavily on what is NOT being said!

CONTEXT IN SPEECH PRODUCTION (2)
THE PROBLEM OF DEIXIS

• The use of language to point in some way at a thing referred to is known as "deixis". Here are some examples of its subtypes .....
• Example 1: "It is bad enough when it might have been mentioned many words beforehand, but you also get 'forward deixis', where the referent is still to come."
• Example 2: "They have particular problems with pronoun deixis, MT programmers, because they have to work out - occasionally from phrases not yet spoken - what they are supposed to be translating."
• Example 3: "You also get non-explicit deixis, where the referent is left to establish itself without specific mention, as in 'They are out to get me'."

A CROSS-DISCIPLINARY EXPERIMENT

• So what would happen if we used IDMS - a network architecture by design - to implement the knowledge network at the top of the speech motor hierarchy?
• Would its systems internals be able to cope (where rival systems have not) with the combined load of philosophical, psychological, psycholinguistic, and linguistic problems?
• Specifically, might it help machines master language as well as speech?
• Well, it is going to take a sustained research effort to answer these questions fully, but the DBTG metaphor certainly promises much in three important areas, as follows .....

DBTG PROMISE #1
HASH RANDOM ADDRESSING

• The IDMS hash random facility would be ideal for storing noun concepts such as <IDEO = Fido> and <IDEO = Derek> .....
• ..... giving us cumulatively our personal knowledge base.

DBTG PROMISE #2
CHAIN POINTER ADDRESSING

• The IDMS chain pointer facility is already ideal for implementing Bachman's logical data sets, weaving the individual data fragments into a complex yet "navigable" lattice.
• Chain pointers thus give more than two millennia's worth of philosophers their associative network.

DBTG PROMISE #3
SET CURRENCY ADDRESSING

• Perhaps more importantly still, the IDMS device known as the "set currency" does for the DBTG database what calcium-modulated synaptic sensitization appears to be doing in biological memory systems (Smith, 1997).
• Biological set currencies allow specific memories to be sustained up to an hour after first activation. E.g. the pronoun "him" in the earlier example can point to one noun in particular out of potentially many tens of thousands.

DBTG PROMISE #3
A BIOLOGICAL SET CURRENCY?

• Readers may simulate the phenomenon of memory sensitization right now by trying to recall the year of Bar-Hillel's MIT conference. You have ten seconds .....

DBTG PROMISE #3
A BIOLOGICAL SET CURRENCY?

• The year in question - 1952 - is perhaps ten minutes of listening time ago, but its "engram" - its memory trace - is nonetheless still in a raised state of excitation .....

DBTG PROMISE #3
A BIOLOGICAL SET CURRENCY?

• ..... It is long-term memory left "glowing" in some way by the original activation.
• This is possibly the mechanism of maintaining referential context and supporting deixis over time-extensive thought or conversation.

CONCLUSION (1)

• We have been considering the trans-disciplinary relevance of the concepts and mechanisms underlying the DBTG database to the science of psycholinguistics.
• Our central complaint was that despite a long tradition of semantic network simulations in computational linguistics, none of the established research technologies really implements a network data model as a network physical form. Instead, they prefer to keep the physical storage relatively simple, typically in a "flat file" format.
• By contrast, the only architecture which has ever been able to cope with volatile data in bulk is the DBTG architecture.
This is because it is largely self-indexing, often via-clustered, and uses pre-allocated expansion space. (This is precisely why CA-IDMS is still supporting the heavy end of the world's OLTP industry, despite repeated attempts to dislodge it.)

CONCLUSION (2)

• Our humble (and not entirely tongue-in-cheek) proposal is therefore that the DBTG - having delivered on behalf of the volatile data industry in the 1960s - now needs to be reconstituted in the interests of a better understanding of the mind - the ultimate database.
• We are ourselves currently researching the nature of the interdisciplinary collaboration which such an exercise would involve.

REFERENCES

• Bach, K. and Harnish, R.M. (1979). Linguistic Communication and Speech Acts. Cambridge, MA: MIT Press.
• Fromkin, V.A. (1971). The non-anomalous nature of anomalous utterances. Language, Vol. 47, pp. 27-52.
• Haigh, T. (2004). A veritable bucket of facts. In M. E. Bowden and B. Rayward (Eds.), The History and Heritage of Scientific and Technical Information System. Medford, NJ: Information Today.
• Hutchins, W.J. (1997). From first conception to first demonstration. Machine Translation, Vol. 12, No. 3, pp. 195-252.
• Lehmann, F. (Ed.) (1992). Semantic Networks in Artificial Intelligence. Oxford: Pergamon. [Being a special issue of the journal Computers and Mathematics with Applications, 23(2-9).]
• Maurer, H. and Scherbakov, N. (2005, online). Network (CODASYL) Data Model. [Electronic document retrieved 17th July 2005 from http://coronet.iicm.edu/wbtmaster/allcoursescontent/netlib/ndm1.htm]
• Richens, R.H. (1956). Preprogramming for mechanical translation. Mechanical Translation, Vol. 3, No. 1, pp. 20-25.
• Smith, D.J. (1997). The IDMS Set Currency and Biological Memory. Cardiff: UWIC. [ISBN: 1900666057] [Workbook to support poster presented 10th March 1997 at the Interdisciplinary Workshop on Robotics, Biology, and Psychology, Department of Artificial Intelligence, University of Edinburgh.]
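
APPENDIX SKETCH: SET CURRENCY AND PRONOUNS

The set currency idea discussed under DBTG PROMISE #3 can be illustrated with a short Python sketch showing how a "most recently activated" marker might let the pronoun "him" pick out one concept among many. This is only a loose analogy under stated assumptions: it does not use the IDMS API, a dict stands in for hash random addressing of noun concepts, the currency is keyed by a made-up record type ("dog", "person"), and the names `ToyConceptStore`, `store`, `obtain`, and `current` are hypothetical.

```python
from typing import Dict, Optional


class ToyConceptStore:
    """Hash-addressed noun concepts plus one 'currency' marker per record type."""

    def __init__(self) -> None:
        self._record_type: Dict[str, str] = {}  # concept key -> record type
        self._currency: Dict[str, str] = {}     # record type -> last concept activated

    def store(self, key: str, record_type: str) -> None:
        # Storing a concept does not by itself make it current.
        self._record_type[key] = record_type

    def obtain(self, key: str) -> str:
        # Activating a concept leaves it "glowing": it becomes the current
        # record of its type until another record of that type is obtained.
        self._currency[self._record_type[key]] = key
        return key

    def current(self, record_type: str) -> Optional[str]:
        # Returns None when no concept of this type has been activated yet.
        return self._currency.get(record_type)


concepts = ToyConceptStore()
concepts.store("Fido", "dog")
concepts.store("Derek", "person")
concepts.store("Charles", "person")

# Thinking "Fido is going to bite Derek" activates both concepts in turn.
concepts.obtain("Fido")
concepts.obtain("Derek")

# "Fido is going to bite him": the pronoun is read off the person currency.
print(concepts.current("person"))
```

Nothing here models the decay of the marker over time; the suggestion in the slides that a biological currency lasts up to an hour would need a timestamp on each activation.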