Information Flows - University of Virginia

Information Flow and Covert Channels

November, 2006

Objectives

• Understand information flow principles

• Understand how information flows can be

identified

• Understand the purpose of modeling

information access

• Understand covert channels and how to

prevent them

Why Model?

• What is an information security model? Why use one?

• “A security policy is a statement that partitions the states

of the system into a set of authorized, or secure, states

and a set of unauthorized, or nonsecure, states” Bishop,

pg. 95

• “A security mechanism is an entity or procedure that

enforces some part of the security policy.” Bishop, pg. 98

• “A security model is a model that represents a particular

policy or set of policies.” Bishop, pg. 99

Excerpts from Computer Security by Matt Bishop

Examples

• Security Policy – e.g. Those described for use in

the military

• Security Model – e.g. Bell La Padula Model

• Security Mechanism – e.g. Virtual memory (page

tables) that support access protection checking

or

Tagging mechanism that tags all variables with

security access information

Why Formal Models?

• Regulations are generally descriptive

rather than prescriptive, so they don’t tell

you how to implement

• Systems must be secure

– security must be demonstrable --> proofs

– therefore, formal security models

• For “real systems” this is not easy to do.

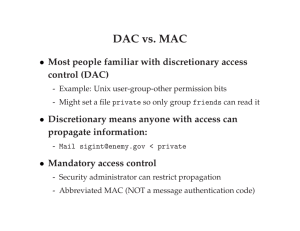

Categories of InfoSec Models

• Two major categories of information security

models:

– Access Control models: protect access to data*

– Integrity Control models: verify that data* is not

changed

* applies to data in storage or in transit

Traditional Models

• Chinese Wall

– Prevent conflicts of interest

• Bell-LaPadula (BLP)

– Address confidentiality

• Biba

– Address integrity with static/dynamic levels

• Information flow

– Close “some” covert channels

Bell-LaPadula Security Model

The Bell-LaPadula (BLP) model is about

information confidentiality, and this

model formally represents the long

tradition of attitudes about the flow of

information concerning national secrets.

Bell – LaPadula - Details

• Earliest formal model

• Each user subject and information object

has a fixed security class – labels

• Use the notation ≤ to indicate dominance

• Simple Security (ss) property:

the no read-up property

– A subject s has read access to an object iff the class of

the subject C(s) is greater than or equal to the class of

the object C(o)

– i.e. Subjects can read Objects iff C(o) ≤ C(s)
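
The simple security check can be sketched as follows. This is a minimal illustration that assumes a linear ordering of three classes; the names `LEVELS` and `can_read` are hypothetical and not part of the model.

```python
# Hypothetical linear ordering of security classes, lowest to highest.
LEVELS = {"Unclassified": 0, "Secret": 1, "Top Secret": 2}

def can_read(subject_class, object_class):
    """Simple security (no read-up): allowed iff C(o) <= C(s)."""
    return LEVELS[object_class] <= LEVELS[subject_class]

# A Secret subject may read down or at its own level, but not up.
for obj in ("Unclassified", "Secret", "Top Secret"):
    print(obj, can_read("Secret", obj))
```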

Access Control: Bell-LaPadula

[Figure, three slides: subject and object columns at the Top Secret, Secret, and Unclassified levels; each slide marks one permitted access with "Read OK".]

Bell - LaPadula (2)

• * property

(star):

the no write-down property

– While a subject has read access to object O, the subject can

only write to object P if

C(O) ≤ C(P)

• Leads to concentration of irrelevant detail at upper levels

• Discretionary Security (ds) property

If discretionary policies are in place, accesses are

further limited to this access matrix

– Although all users in the personnel department can read all

[personnel] documents, the personnel manager would expect to

limit the readers of some documents, e.g. file for the Prez !
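
A minimal sketch of the * property as stated above, assuming the same kind of linear ordering of classes. The function name `can_write` and the idea of passing the classes of all currently readable objects are illustrative assumptions; the discretionary (ds) check against an access matrix is not modeled here.

```python
# Hypothetical linear ordering of security classes, lowest to highest.
LEVELS = {"Unclassified": 0, "Secret": 1, "Top Secret": 2}

def can_write(readable_classes, target_class):
    """*-property (no write-down): for every object O the subject can
    currently read, C(O) <= C(P) must hold for the write target P."""
    return all(LEVELS[o] <= LEVELS[target_class] for o in readable_classes)

# A subject reading a Secret object may write up but not down.
print(can_write(["Secret"], "Top Secret"))
print(can_write(["Secret"], "Unclassified"))
```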

Access Control: Bell-LaPadula

[Figure, three slides: subject and object columns at the Top Secret, Secret, and Unclassified levels; each slide marks one permitted access with "Write OK".]

Security Models - Biba

• Based on the Cold War experiences,

information integrity is also important, and

the Biba model, complementary to Bell-LaPadula, is based on the flow of

information where preserving integrity is

critical.

• The “dual” of Bell-LaPadula

Integrity Control: Biba

• Designed to preserve integrity, not limit

access

• Three fundamental concepts:

– Simple Integrity Property – no read down

– Star Integrity Property (*) – no write up

– No execute up
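
The three Biba rules can be sketched as the mirror image of the Bell-LaPadula checks. This is an illustration only, assuming three linearly ordered integrity levels; the function names are hypothetical.

```python
# Hypothetical linear ordering of integrity levels, lowest to highest.
INTEGRITY = {"Low": 0, "Medium": 1, "High": 2}

def biba_can_read(subject, obj):
    """Simple integrity (no read-down): read only at the same level or higher."""
    return INTEGRITY[subject] <= INTEGRITY[obj]

def biba_can_write(subject, obj):
    """Star integrity (no write-up): write only at the same level or lower."""
    return INTEGRITY[obj] <= INTEGRITY[subject]

def biba_can_execute(subject, target):
    """No execute-up: invoke only targets at the same level or lower."""
    return INTEGRITY[target] <= INTEGRITY[subject]

print(biba_can_read("Medium", "Low"))    # reading down is denied
print(biba_can_write("Medium", "High"))  # writing up is denied
```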

Integrity Control: Biba

[Figure, two slides: subject and object columns ordered by integrity level (High, Medium, Low); the first slide marks a permitted access with "Read OK", the second with "Write OK".]

Basic Security Theorem

• A state transition is secure if both the initial

and the final states are secure, so

• If all state transitions are secure and the

initial system state is secure, then every

subsequent state will also be secure,

regardless of which inputs occur.

• This is information flow!

Implementation Question

• What are “all” of the information flows?

– Files

– Memory

– Page faults

– CPU use

–?

Information Flow

• Information Flow: transmission of

information from one “place” to another.

Absolute or probabilistic.

• How does this relate to confidentiality policy?

– Confidentiality: What subjects can see what

objects. So, confidentiality specifies what is

allowed.

– Flow: Controls what subjects actually see. So,

information flow describes how policy is

enforced.

Information Flow

• Next: How do we measure/capture flow?

– Entropy-based analysis

• Change in entropy flow

– Confinement

• “Cells” where information does not leave

– Language/compiler based mechanisms?

– Guards

What is Entropy?

• Idea: Entropy captures uncertainty

• If there is complete uncertainty, is there an

information flow?
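
To make "uncertainty" concrete, the sketch below computes Shannon entropy in bits for a discrete distribution. It is offered as an illustration; the function name `entropy` and the sample distributions are assumptions, not taken from the slides.

```python
import math

def entropy(probabilities):
    """Shannon entropy H = sum of -p * log2(p), in bits."""
    return sum(-p * math.log2(p) for p in probabilities if p > 0)

fair_coin = entropy([0.5, 0.5])      # maximal uncertainty for two outcomes
certain = entropy([1.0])             # no uncertainty at all
four_way = entropy([0.25] * 4)       # four equally likely outcomes

print(fair_coin, certain, four_way)
```

If observing a variable leaves the entropy of another variable unchanged, nothing has been learned about it; a drop in entropy is what signals a flow.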

Information Flow – Informal

• What do we mean by information flow?

– y = x; // what do we know before & after assignment?

– y = x/z;

• A command sequence c causes a flow of

information from x to y if the value of y after the

commands allows one to deduce information about

the value of x before the commands executed.

– tmp = x;

– y = tmp;

– Transitive

Information Flow – Informal

• Consider a conditional statement

– if x == 1 then y = 0 else y = 1

– what do we know before & after execution?

– What about: if x == 1 then y = 0

– No explicit assignment to y in one case

• This is called implicit information flow
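
The two conditionals above can be written out to show what an observer of y learns about x. This is a small sketch; the function names and the assumption that y starts at 1 in the second case are illustrative.

```python
def two_armed(x):
    """if x == 1 then y = 0 else y = 1"""
    if x == 1:
        y = 0
    else:
        y = 1
    return y

def one_armed(x, y_before=1):
    """if x == 1 then y = 0 (y is left untouched otherwise)."""
    y = y_before
    if x == 1:
        y = 0
    return y

# In both cases y alone reveals whether x was 1, although x is never
# assigned to y. In the one-armed case the *absence* of an assignment
# still tells the observer that x != 1 (given that y started at 1).
for x in (0, 1, 2):
    assert (two_armed(x) == 0) == (x == 1)
    assert (one_armed(x) == 0) == (x == 1)
```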

Information Flow Models

• Two categories of information flows

– explicit – opn’s causing flow are independent

of value of x, e.g. assignment operation, x=y

– implicit - conditional assignment

• (if x then y=z)

• Components

– Lattice of security levels (L, ≤)

– Set of labeled objects

– Security policy

Information-Flow Model

• Flow relation forms a lattice

• A program is secure if it does not specify

any information flows that violate the given

flow relation

Security Levels

• Linear

– Top secret

– Secret

– Confidential

– Unclassified

• Lattice

– Security level

– Compartment

Security Level Examples

• Linear

– Marking contains the name of the level

– Each higher level dominates those below it

• Lattice

– Marking contains name of level + name of

compartment (e.g. TOPSECRET OIF)

– Only those “read into” the compartment can

read the information in that compartment, and

then only at the level of their overall access

Who Can Read What?

• In a linear system?

• In a lattice system?

Universally bounded lattice

• What is a universally bounded lattice?

• “a structure consisting of a finite partially

ordered set together with least upper and

greatest lower bound operators on the

set.”

• So, what is a partially ordered set?

What’s a Partial Ordering?

• Partial ordering on a set L is a relation ≤ where:

– for all a ∈ L, a ≤ a holds (reflexive)

– for all a, b, c ∈ L, if a ≤ b and b ≤ c, then a ≤ c (transitive)

– for all a, b ∈ L, if a ≤ b and b ≤ a, then a = b (antisymmetric)

Universally Bounded Lattice

• So, what are least upper and greatest lower

bounds?

• Suppose <= is the dominates relation. C is

an upper bound of A and B if A <= C and B

<= C. C is a least upper bound of A and B if

for any upper bound D of A and B, C <= D.

Lower bounds and greatest lower bounds

work the same way.

• See next example using Bell-LaPadula

Model

B-LP Security Level Lattice

• S is the set of all security levels

– Suppose the classifications are T, S, U

– Suppose the categories are NATO and SIOP. Then

the possible category sets are

• {}, {NATO}, {SIOP}, {NATO, SIOP}

– Then S = [ (T, {}), (T,{NATO}), (T,{SIOP}),

(T,{NATO,SIOP}), (S, {}), (S,{NATO}), (S,{SIOP}),

(S,{NATO,SIOP}), (U, {}) ].

• R dominates, as described for B-LP

– Convince yourself that the dominates relation is

reflexive, antisymmetric and transitive.
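
One way to convince yourself is to check the three properties by brute force over the nine levels. The sketch below assumes the usual Bell-LaPadula reading of "dominates" (classification at least as high, category set a superset); the names `RANK`, `LEVELS`, and `dominates` are illustrative.

```python
from itertools import product

RANK = {"U": 0, "S": 1, "T": 2}
CATEGORY_SETS = [frozenset(), frozenset({"NATO"}),
                 frozenset({"SIOP"}), frozenset({"NATO", "SIOP"})]

# The nine levels listed above: T and S with every category set, U with none.
LEVELS = [(c, cats) for c in ("T", "S") for cats in CATEGORY_SETS]
LEVELS.append(("U", frozenset()))

def dominates(a, b):
    """a dominates b: a's class is at least b's and a's categories include b's."""
    return RANK[a[0]] >= RANK[b[0]] and a[1] >= b[1]

reflexive = all(dominates(a, a) for a in LEVELS)
antisymmetric = all(a == b for a, b in product(LEVELS, repeat=2)
                    if dominates(a, b) and dominates(b, a))
transitive = all(dominates(a, c) for a, b, c in product(LEVELS, repeat=3)
                 if dominates(a, b) and dominates(b, c))

print(reflexive, antisymmetric, transitive)
```

Note that some pairs are incomparable, for example (T,{NATO}) and (S,{SIOP}); that is what makes the ordering partial rather than linear.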

Bell-LaPadula Example

[Figure: lattice of the nine levels, from top to bottom:

(T,{NATO,SIOP})

(T,{NATO}), (T,{SIOP}), (S,{NATO,SIOP})

(T,{ }), (S,{NATO}), (S,{SIOP})

(S,{ })

(U,{ })]

Least upper bound: (T,{NATO,SIOP})

Greatest lower bound: (U,{ })
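
For levels of the form (classification, category set), the least upper bound and greatest lower bound of two levels can be computed directly. The sketch below assumes the standard construction (higher class with the union of categories, lower class with the intersection); the function names are illustrative.

```python
RANK = {"U": 0, "S": 1, "T": 2}

def lub(a, b):
    """Least upper bound: the higher classification, union of categories."""
    cls = a[0] if RANK[a[0]] >= RANK[b[0]] else b[0]
    return (cls, a[1] | b[1])

def glb(a, b):
    """Greatest lower bound: the lower classification, common categories."""
    cls = a[0] if RANK[a[0]] <= RANK[b[0]] else b[0]
    return (cls, a[1] & b[1])

t_nato = ("T", frozenset({"NATO"}))
s_siop = ("S", frozenset({"SIOP"}))

print(lub(t_nato, s_siop))  # the lowest level that dominates both
print(glb(t_nato, s_siop))  # the highest level that both dominate
```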

End of Lattice modeling discussion

Consider what can be done at compile time and execution time

Recognizing Information Flows

• Compiler-based

– Verifies that information flows throughout a

program are authorized. Determines if a

program could violate a flow policy.

• Execution-based

– Prevents information flows that violate policy.

• Both analyze code

• Execution-based typically requires tracking

the security level of the PC as the program

executes.

Compiler Mechanisms

• Declaration approach

– x: integer class { A,B }

– Specifies what security classes of information are allowed in x

• Function parameter: class = argument

• Function result: class = parameter classes

– Unless function verified stricter

• Rules for statements

– Assignment: LHS must be able to receive all classes in RHS

– Conditional/iterator: then/else must be able to contain if part

• Verifying a program is secure becomes type checking!

Examples

• Assignments:

– x = w+y+z;

– lub{w,y,z} ≤ x

• Compound Statements:

begin

x = y+z;

a = b+c-x

end

lub{y,z} ≤ x and lub{b,c,x} ≤ a
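
The assignment rule can be sketched as a small certification check. This is an illustration under the assumption of a linear set of classes; the class names, the variable-to-class table, and the function names are hypothetical.

```python
# Hypothetical linear classes, lowest to highest.
RANK = {"Low": 0, "Medium": 1, "High": 2}

def lub(classes):
    """Least upper bound of a collection of classes in a linear ordering."""
    return max(classes, key=RANK.__getitem__, default="Low")

def assignment_ok(target_class, source_classes):
    """Certify `target = f(sources)`: requires lub{sources} <= class(target)."""
    return RANK[lub(source_classes)] <= RANK[target_class]

# x = w + y + z, with hypothetical declared classes.
declared = {"w": "Low", "y": "Medium", "z": "Low", "x": "Medium"}
sources = [declared[v] for v in ("w", "y", "z")]
print(assignment_ok(declared["x"], sources))
```

A compound statement is certified by applying the same check to each of its statements in turn.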

Compiler-Based Mechanisms

int sum (int x class{x}) {

int out class{x, out};

out = out + x;

}

What is required for this to be a secure flow?

x ≤ out and out ≤ out

Compiler-Based Mechanisms

• Iterative statements - Information can flow from the absence of execution.

while f(x1, x2, …, xn) do

S;

• Which direction are the flows?

– from var’s in the conditional stmt thru assignments to variables in S

• For iterative statements to be secure:

1. Statement terminates

2. S is secure

3. lub{x1, x2, …, xn} ≤ glb{target of an assignment of S}

Execution Mechanisms

• Problem with compiler-based mechanisms

– May be too strict

– Valid executions not allowed

• Solution: run-time checking

• Difficulty: implicit flows

– if x=1 then y:=0;

– When x:=2, does information flow to y?

• Solution: Data mark machine

– Tag variables

– Tag Program Counter

– Any branching statement affects PC security level

• Effect ends when “non-branched” execution resumes

Data Mark: Example

• Statement involving only variables x

– If PC ≤ x then statement

• Conditional involving x:

– Push PC, PC = lub(PC,x), execute inside

– When done with conditional statement, Pop PC

• Call: Push PC

• Return: Pop PC

• Halt

– if stack empty then halt execution
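
The PC-tagging rules above can be sketched as a tiny simulator. This is an illustration, not a full data mark machine: it assumes two classes, tracks only the class of the program counter, and omits the check on the classes of the variables read by a statement. All names are hypothetical.

```python
RANK = {"Low": 0, "High": 1}  # hypothetical two-point ordering

class DataMarkMachine:
    def __init__(self, classes):
        self.classes = classes  # variable name -> security class
        self.pc = "Low"         # current class of the program counter
        self.stack = []         # saved PC classes

    def enter_conditional(self, var):
        """Push PC, then PC = lub(PC, class of var)."""
        self.stack.append(self.pc)
        if RANK[self.classes[var]] > RANK[self.pc]:
            self.pc = self.classes[var]

    def exit_conditional(self):
        """Pop PC when the conditional statement is done."""
        self.pc = self.stack.pop()

    def may_assign(self, target):
        """The statement runs only if PC <= class of the target."""
        return RANK[self.pc] <= RANK[self.classes[target]]

# if x = 1 then y := 0, with x High and y Low.
machine = DataMarkMachine({"x": "High", "y": "Low"})
machine.enter_conditional("x")
print(machine.may_assign("y"))  # inside the branch the write to y is refused
machine.exit_conditional()
print(machine.may_assign("y"))  # outside the branch it is allowed again
```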

Covert Channels

• Covert channels are found in everyday life

• Name some!

Covert Channels

• A path of communication that was not

designed to be used for communication

• An information flow that is not controlled

by a security mechanism

• Can occur by allowing low-level subjects

to see names, results of comparisons, etc.

of high-level objects

• Difficult to find, difficult to control, critical to

success

Covert Channels

• Program that leaks confidential information

intentionally via secret channels.

• Not that hard to leak a small amount of

data

– A 64 bit shared key is quite small!

• Example channels

– Adjust the formatting of output: use the “\t”

character for “1” and 8 spaces for “0”

– Vary timing behavior based on key
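
The formatting channel above can be sketched in a few lines. This is an illustration that assumes one hidden bit per output line, carried by the indentation; the function names and sample report are hypothetical.

```python
def embed(bits, lines):
    """Indent each line with a tab for '1' or eight spaces for '0'."""
    return [("\t" if bit == "1" else " " * 8) + line
            for bit, line in zip(bits, lines)]

def extract(indented_lines):
    """Recover the hidden bits from the indentation alone."""
    return "".join("1" if line.startswith("\t") else "0"
                   for line in indented_lines)

report = ["total: 42", "status: ok", "done"]
hidden = embed("101", report)
print(extract(hidden))
```

On most terminals the two indentations look identical, which is why the channel goes unnoticed.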

Definition of covert channel

• Definition 1 : A communication channel is covert if it is neither designed

nor intended to transfer information at all

• Definition 2 : A communication channel is covert if it is based on

transmission by storage into variables that describe resource states

• Definition 3 : Those channels that are a result of resource allocation

policies and resource management implementation

• Definition 4 : Those that use entities not normally viewed as data objects

to transfer information from one subject to another

• Definition 5 : Given a non-discretionary security policy model M and its

interpretation I(M) in an operating system, any potential communication

between two subjects I(S1) and I(S2) of I(M) is covert if and only if any

communication between the corresponding subjects S1 and S2 of the

model M is illegal in M.

Covert Channels Result From

• Transfer unauthorized information

• Do not violate access control and other security mechanisms

• Available almost anytime

• Result from following conditions

• Design oversight during system or network implementation

• Incorrect implementation or operation of the access control mechanism

• Existence of a shared resource between the sender and the receiver

• The ability to implant and hide a Trojan horse

[Figure: Sender and Receiver connected by a legitimate information flow and an unauthorized information flow, with information encoding at one end and information decoding at the other.]

Covert Channels

[Figure: client, server and collaborator processes; the encapsulated server can still leak to the collaborator via covert channels.]

Covert storage channel

• Involves the direct or indirect writing to a storage location by one process and the direct or indirect reading of that storage location by another process.

• Example storage mechanisms

• Disk space

• Print spacing

• File naming

[Figure: Sender and Receiver communicating through a storage area (e.g. disk, memory).]

Covert Channels

A covert channel using file locking
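
A storage channel of this kind can be sketched with nothing more than the existence of a file. Note the substitution: the sketch below uses file presence as a simplified stand-in for a real file lock, and runs sender and receiver in the same process, alternating in lockstep. The file name `flag` and all function names are hypothetical.

```python
import os
import tempfile

def send_bit(path, bit):
    """Sender: make the file exist for 1, make it absent for 0."""
    if bit:
        open(path, "w").close()
    elif os.path.exists(path):
        os.remove(path)

def receive_bit(path):
    """Receiver: observes only whether the file exists."""
    return 1 if os.path.exists(path) else 0

def transmit(bits):
    received = []
    with tempfile.TemporaryDirectory() as shared_dir:
        flag = os.path.join(shared_dir, "flag")  # hypothetical shared name
        for bit in bits:
            send_bit(flag, bit)
            # A real sender and receiver would be separate processes
            # that take turns under some agreed timing.
            received.append(receive_bit(flag))
    return received

print(transmit([1, 0, 1, 1, 0]))
```

No file contents are ever read or written; the information travels entirely in the state of the shared resource.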

Covert timing channel

• One process signals information to another by modulating its own use of system resources in such a way that this manipulation affects the real response time observed by the second process.

• Sequence of events

• CPU utilization

• Resource availability

[Figure: Sender emitting a sequence of events observed by the Receiver.]
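
The idea can be modeled without touching a real clock: the sender picks a short or long delay per bit and the receiver thresholds what it observes. This is a simplified sketch; the delay values, the jitter figures, and the function names are all assumptions chosen for illustration.

```python
SHORT, LONG = 0.01, 0.05  # hypothetical delays in seconds

def modulate(bits):
    """Sender: a long delay encodes 1, a short delay encodes 0."""
    return [LONG if bit else SHORT for bit in bits]

def demodulate(delays, threshold=(SHORT + LONG) / 2):
    """Receiver: compare each observed delay with a midpoint threshold."""
    return [1 if delay > threshold else 0 for delay in delays]

message = [1, 0, 0, 1, 1]
jitter = [0.004, -0.003, 0.002, -0.004, 0.001]  # hypothetical scheduling noise
observed = [d + j for d, j in zip(modulate(message), jitter)]
print(demodulate(observed))
```

As long as the noise stays well below the gap between the two delays, the receiver recovers the message; larger noise lowers the usable capacity of the channel.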

Differential Power Analysis

• Read the value of a DES key off a smartcard by watching power consumption!

• This figure shows simple power analysis

of DES encryption. The 16 rounds are

clearly visible.

Covert channel identification:

Shared resource matrix (SRM) method

Four steps

1. Analyze all Trusted Computing Base primitive operations

2. Build a shared resource matrix

3. Perform a transitive closure on the entries of the SRM

4. Analyze each matrix column containing row entries with either ‘R’ or ‘M’

L : legal channel exists

N : one cannot gain useful information from channel

S : sending and receiving processes are the same

P : potential channel exists

[Table: example shared resource matrix relating TCB primitives (access, chmod, write, link, ...) to shared global variables (mode, file table, ...); each entry is R, M, or RM.]

R : Read

M : Modify

Covert channel identification:

Shared resource matrix (SRM) method

Conditions

1. Two or more processes must have access to a common resource

2. At least one process must be able to alter the condition of the resource

3. The other process must be able to sense if the resource has been altered

4. There must be a mechanism for initiating and sequencing communications

over this channel

Advantages

Can be applied to both formal and informal specifications

Does not differentiate between storage and timing channels

Does not require that security levels be assigned to internal TCB variables

Drawbacks

Individual TCB primitives cannot be proven secure in isolation

May identify potential channels that could otherwise be eliminated automatically

by information flow analysis

Covert Channel Mitigation

• Can covert channels be eliminated?

– Eliminate shared resource?

• Severely limit flexibility in using resource

– Otherwise we get the halting problem

– Example: Assign fixed time for use of

resource

• Closes timing channel

• Not always realistic

– Do we really need to close every channel?

Covert Channel Analysis

• Solution: Accept covert channel

– But analyze the capacity

• How many bits/second can be “leaked”

• Allows cost/benefit tradeoff

– Risk exists

– Limits known

• Example: Assume data time-critical

– Ship location classified until next commercial satellite

flies overhead

– Can covert channel transmit location before this?
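
The cost/benefit question reduces to simple arithmetic: time to leak = secret size / channel capacity. The sketch below uses made-up numbers purely for illustration; the function names, the 64-bit secret, the capacities, and the one-hour window are all assumptions.

```python
def seconds_to_leak(secret_bits, channel_bits_per_second):
    """Time needed to push the whole secret through the channel."""
    return secret_bits / channel_bits_per_second

def leaks_in_time(secret_bits, channel_bits_per_second, window_seconds):
    """True if the secret can be leaked before it stops mattering."""
    return seconds_to_leak(secret_bits, channel_bits_per_second) <= window_seconds

# Hypothetical: a 64-bit value, kept secret for a one-hour window.
print(seconds_to_leak(64, 0.5))          # seconds needed at 0.5 bits/second
print(leaks_in_time(64, 0.5, 3600))      # fast enough to matter
print(leaks_in_time(64, 0.001, 3600))    # too slow to matter
```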

Conclusion

• Have you ever used or even seen a

language with security types?

• Why not?

• Under what circumstances would you

worry about covert channels?

Back Ups

Formal Definition

• Flow from x to y if H(x_s | y_t) < H(x_s | y_s), where s is the state before the commands execute and t is the state after

• Has the uncertainty of x_s gone down from knowing y_t?

• Examples showing possible flow from x to y:

– y := x

• No uncertainty – H(x|y) = 0

– y := x / z

• Greater uncertainty (we only know x for some values of y)

– Why possible?

– Does information flow from y to x?
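
The two examples can be checked numerically. The sketch below is an illustration under stated assumptions: x is uniform over 0..7 (so its entropy is 3 bits), z is fixed at 2, division is integer division, and y is independent of x before the assignment. The function name `conditional_entropy` is hypothetical.

```python
import math
from collections import Counter

def conditional_entropy(pairs):
    """H(X | Y) in bits, for a list of equally likely (x, y) outcomes."""
    n = len(pairs)
    joint = Counter(pairs)
    marginal_y = Counter(y for _, y in pairs)
    return sum(-(count / n) * math.log2(count / marginal_y[y])
               for (_, y), count in joint.items())

xs = range(8)  # x uniform over 0..7
h_copy = conditional_entropy([(x, x) for x in xs])       # y := x
h_div = conditional_entropy([(x, x // 2) for x in xs])   # y := x / z, z = 2

print(h_copy)  # nothing left to learn about x
print(h_div)   # some uncertainty about x remains
```

In both cases the result is below the 3 bits of uncertainty that existed before the assignment, so both commands cause a flow from x to y; the division simply leaks less.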

Iteration Example

while i < n do

begin

a[i] = b[i]; // S1

i = i + 1; // S2

end;

– List the requirements for this to be a secure flow.

May want to draw a lattice.

– Reqt’s for each stmt S1, S2.

– Reqt’s for conditional

– “Combine” the requirements – See homework